Beats: Lightweight Log Collection Tools

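The Beats platform is a family of single-purpose data shippers. Once installed, they run as lightweight agents and send data from hundreds or even thousands of machines to Logstash or Elasticsearch. Commonly used Beats include Filebeat (log files), Metricbeat (metrics from services and systems) and Packetbeat (network packets). This article focuses on Filebeat.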

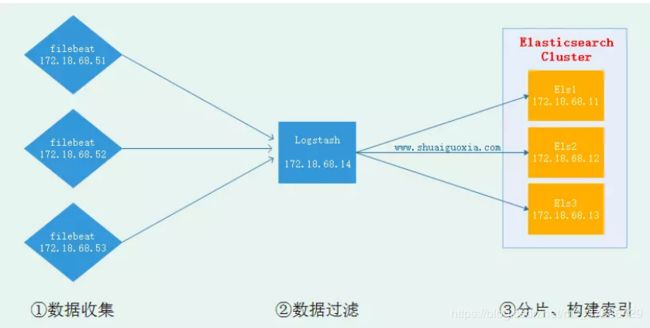

I. Architecture Diagram

II. Installing Filebeat

Official site: https://www.elastic.co/cn/products/beats

1. Download and install Filebeat

```bash
wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.3.2-linux-x86_64.tar.gz
tar -xzf filebeat-6.3.2-linux-x86_64.tar.gz -C /usr/local/
cd /usr/local/
ln -s filebeat-6.3.2-linux-x86_64 filebeat
```

2. Custom configuration files

① A minimal configuration file

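```bash
cd /usr/local/filebeat/

# Quote the delimiter so the shell does not expand anything inside the heredoc
cat > test.yml << 'END'
filebeat.inputs:
- type: stdin
  enabled: true

# Index template settings; only used when the output is Elasticsearch
setup.template.settings:
  index.number_of_shards: 3

output.console:
  pretty: true
  enabled: true
END

# Start Filebeat as the filebeat user or as root. The config file must be owned
# by that user (or root) and must not be writable by group or others.
./filebeat -e -c test.yml
```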

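Test: once Filebeat has started, type any string, for example `hello`, and press Enter. Filebeat prints the corresponding event to the console as pretty-printed JSON.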

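Startup options used in `./filebeat -e -c test.yml`:

- `-e`: log to stderr and disable syslog/file logging (by default Filebeat logs to syslog and to files under `logs/`)
- `-c`: path to the configuration file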
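Because Filebeat refuses to start when its configuration file fails the ownership and permission check, a quick pre-flight check can save a confusing startup error. The POSIX shell function below is a minimal sketch that approximates that check. The function name `check_beat_config_perms` is hypothetical, and the rule it encodes is an assumption based on Filebeat's strict-permissions behavior: the file is owned by the current user or root and is not writable by group or others. The real check can be bypassed with `--strict.perms=false`.

```bash
# Hypothetical pre-flight check approximating Filebeat's strict permission rule:
# the config must be owned by the current user or root and must not be writable
# by group or others (otherwise Filebeat refuses to start unless --strict.perms=false).
check_beat_config_perms() {
  cfg=$1
  if [ ! -f "$cfg" ]; then
    echo "missing: $cfg"
    return 2
  fi
  # Field 1 of `ls -ln` is the mode string (e.g. -rw-r--r--), field 3 the numeric owner uid
  mode=$(ls -ln "$cfg" | awk '{print $1}')
  owner=$(ls -ln "$cfg" | awk '{print $3}')
  if [ "$owner" != "$(id -u)" ] && [ "$owner" != "0" ]; then
    echo "bad owner: uid $owner"
    return 1
  fi
  # Character 6 of the mode string is the group write bit, character 9 the other write bit
  group_w=$(printf '%s' "$mode" | cut -c6)
  other_w=$(printf '%s' "$mode" | cut -c9)
  if [ "$group_w" = "w" ] || [ "$other_w" = "w" ]; then
    echo "writable by group or others: $mode"
    return 1
  fi
  echo "ok"
}
```

For example, run `check_beat_config_perms test.yml` before `./filebeat -e -c test.yml`. If it reports group or other write access, `chmod go-w test.yml` removes it.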

② Collecting log files

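```bash
cd /usr/local/filebeat/

cat > test.yml << 'END'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/*.log
    - /var/log/messages
  # exclude_lines is matched against line content: drop debug lines and empty lines
  exclude_lines: ['^DBG', '^$']
  # exclude_files is matched against file names: skip compressed rotated files
  exclude_files: ['\.gz$']

setup.template.settings:
  index.number_of_shards: 3

output.console:
  pretty: true
  enabled: true
END

# Start Filebeat
./filebeat -e -c test.yml
```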
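`exclude_lines` is matched against the content of each line, not against file names. To preview what a set of patterns would drop before deploying them, the hypothetical helper `keep_lines` below prints only the lines that survive. It is a sketch that assumes the patterns are simple enough for `grep -E` (POSIX extended regular expressions) to treat them the same way as Filebeat's Go regular expressions; more complex patterns may behave differently.

```bash
# Hypothetical helper: print the lines of FILE that would survive the given
# exclude_lines patterns. Assumes grep -E (POSIX ERE) matches these simple
# patterns the same way Filebeat's Go regexp engine does.
# Usage: keep_lines FILE PATTERN [PATTERN...]
keep_lines() {
  file=$1
  shift
  if [ "$#" -eq 0 ]; then
    cat "$file"          # no patterns: nothing is excluded
    return
  fi
  # Join the patterns into one alternation: p1|p2|...
  re=$(printf '%s|' "$@")
  re=${re%"|"}
  # -v keeps lines matching none of the patterns. grep exits 1 when every
  # line is excluded (not an error here) and 2 on a real error.
  grep -Ev -- "$re" "$file" || [ "$?" -eq 1 ]
}
```

For example, `keep_lines /var/log/messages '^DBG' '^$'` shows what Filebeat would ship. Adding `'.gz$'` to the list would also drop any log line that happens to end in `gz`, so a file-name filter such as `.gz$` belongs in `exclude_files` instead.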

③ Collecting log files with custom fields

```bash
cd /usr/local/filebeat/

cat > test.yml << 'END'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/*.log
    - /var/log/messages
  exclude_lines: ['^DBG', '^$']
  exclude_files: ['\.gz$']
  tags: ["web", "item"]       # custom tags
  fields:                     # custom fields
    from: itcast_from         # any value you like
  fields_under_root: true     # true: add custom fields at the event root; false: nest them under "fields"

setup.template.settings:
  index.number_of_shards: 3

output.console:
  pretty: true
  enabled: true
END

# Start Filebeat
./filebeat -e -c test.yml
```

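When events carry tags, a Logstash conditional on a tag is written like this:

```
if "web" in [tags] {
  # filters or outputs for events tagged "web"
}
```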

④ Shipping nginx logs to Elasticsearch or Logstash

```bash
cd /usr/local/filebeat/

cat > nginx.yml << 'END'
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /usr/local/nginx/access/*.log
  exclude_lines: ['^DBG', '^$']
  exclude_files: ['\.gz$']
  tags: ["web", "nginx"]
  fields:
    from: nginx
    # document_type was removed in Filebeat 6.0; carry the type as a custom field instead
    type: filebeat-nginx_accesslog
  fields_under_root: true

setup.template.settings:
  index.number_of_shards: 3

output.elasticsearch:
  hosts: ["192.168.0.117:9200", "192.168.0.118:9200", "192.168.0.119:9200"]

# To ship to Logstash instead, comment out output.elasticsearch and enable this
# block (Filebeat supports only one output at a time):
#output.logstash:
#  hosts: ["192.168.0.117:5044"]
END

# Start Filebeat
./filebeat -e -c nginx.yml
```

III. Filebeat ships each log to Logstash, Logstash writes it to Redis, and a second Logstash pipeline writes it to Elasticsearch

1. Filebeat configuration

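```bash
cat > dashboard.yml << 'END'
filebeat.inputs:
# input_type was renamed to type, and document_type was removed, in Filebeat 6.0.
# The type is sent as a root-level custom field so Logstash can still test [type].
- type: log
  paths:
    - /var/log/*.log
    - /var/log/messages
  exclude_lines: ['^DBG', '^$']
  exclude_files: ['\.gz$']
  fields:
    type: filebeat-systemlog
  fields_under_root: true

- type: log
  paths:
    - /usr/local/tomcat/logs/tomcat_access_log.*.log
  exclude_lines: ['^DBG', '^$']
  exclude_files: ['\.gz$']
  fields:
    type: filebeat-tomcat-accesslog
  fields_under_root: true
  # Lines that do not start with a yyyy-MM-dd date are appended to the previous line
  multiline.pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2}'
  multiline.negate: true
  multiline.match: after

- type: log
  enabled: true
  paths:
    - /usr/local/nginx/access/*.log
  exclude_lines: ['^DBG', '^$']
  exclude_files: ['\.gz$']
  fields:
    type: filebeat-nginx-accesslog
  fields_under_root: true

output.logstash:
  hosts: ["192.168.0.117:5044"]
  enabled: true
  worker: 3
  compression_level: 3
END

# Start Filebeat
./filebeat -e -c dashboard.yml
```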

2. Logstash configuration

① Receive logs from Beats and write them to Redis

```bash
cat > beats.conf << 'END'
input {
  beats {
    port => 5044
    #host => "192.168.0.117"
  }
}

output {
  if [type] == "filebeat-systemlog" {
    redis {
      data_type => "list"
      host => "192.168.0.119"
      db => 3
      port => 6379
      password => "123456"
      key => "filebeat-systemlog"
    }
  }

  if [type] == "filebeat-tomcat-accesslog" {
    redis {
      data_type => "list"
      host => "192.168.0.119"
      db => 3
      port => 6379
      password => "123456"
      key => "filebeat-tomcat-accesslog"
    }
  }

  if [type] == "filebeat-nginx-accesslog" {
    redis {
      data_type => "list"
      host => "192.168.0.119"
      db => 3
      port => 6379
      password => "123456"
      key => "filebeat-nginx-accesslog"
    }
  }
}
END
```
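Redis acts as a buffer between the two Logstash pipelines, so the length of each list shows whether the second pipeline is keeping up. The sketch below runs `redis-cli ... LLEN` for each key used in beats.conf. The function name `redis_backlog` and the `REDIS_*` and `DRY_RUN` variables are hypothetical. The connection defaults are copied from the config above, and `redis-cli` is assumed to be installed unless `DRY_RUN=1`.

```bash
# Sketch: report the backlog (LLEN) of each Redis list written by beats.conf.
# Connection defaults are copied from beats.conf; REDIS_* and DRY_RUN are
# hypothetical variables for this sketch. Requires redis-cli unless DRY_RUN=1.
REDIS_HOST=${REDIS_HOST:-192.168.0.119}
REDIS_PORT=${REDIS_PORT:-6379}
REDIS_PASS=${REDIS_PASS:-123456}
REDIS_DB=${REDIS_DB:-3}

redis_backlog() {
  for key in filebeat-systemlog filebeat-tomcat-accesslog filebeat-nginx-accesslog; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Only show the command that would be run
      echo "redis-cli -h $REDIS_HOST -p $REDIS_PORT -a $REDIS_PASS -n $REDIS_DB LLEN $key"
    else
      printf '%s: ' "$key"
      redis-cli -h "$REDIS_HOST" -p "$REDIS_PORT" -a "$REDIS_PASS" -n "$REDIS_DB" LLEN "$key"
    fi
  done
}
```

Run `redis_backlog` a few times. Counts that stay near zero mean the Redis-to-Elasticsearch pipeline is draining the lists. A list that keeps growing usually means that pipeline is stopped or cannot keep up.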

② Read logs from Redis and write them to Elasticsearch

```bash
cat > redis-es.conf << 'END'
input {
  redis {
    data_type => "list"
    host => "192.168.0.119"
    db => 3
    port => 6379
    password => "123456"
    key => "filebeat-systemlog"
    type => "filebeat-systemlog"
  }

  redis {
    data_type => "list"
    host => "192.168.0.119"
    db => 3
    port => 6379
    password => "123456"
    key => "filebeat-tomcat-accesslog"
    type => "filebeat-tomcat-accesslog"
  }

  redis {
    data_type => "list"
    host => "192.168.0.119"
    db => 3
    port => 6379
    password => "123456"
    key => "filebeat-nginx-accesslog"
    type => "filebeat-nginx-accesslog"
  }
}

output {
  if [type] == "filebeat-systemlog" {
    elasticsearch {
      hosts => ["192.168.0.117:9200", "192.168.0.118:9200", "192.168.0.119:9200"]
      index => "logstash-systemlog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "filebeat-tomcat-accesslog" {
    elasticsearch {
      hosts => ["192.168.0.117:9200", "192.168.0.118:9200", "192.168.0.119:9200"]
      index => "logstash-tomcat-accesslog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "filebeat-nginx-accesslog" {
    elasticsearch {
      hosts => ["192.168.0.117:9200", "192.168.0.118:9200", "192.168.0.119:9200"]
      index => "logstash-nginx-accesslog-%{+YYYY.MM.dd}"
    }
  }
}
END
```
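The `%{+YYYY.MM.dd}` suffix in the index names is formatted from each event's `@timestamp`, which Logstash keeps in UTC. A new daily index is therefore created at UTC midnight, not at local midnight. The hypothetical helper `index_for` below reproduces that name for a given log kind and UTC date, which is handy when checking whether data has arrived. It is a sketch, not part of Logstash.

```bash
# Sketch: build the daily index name produced by index => "logstash-<kind>-%{+YYYY.MM.dd}".
# Logstash formats that date from @timestamp in UTC, hence date -u.
# Usage: index_for KIND [YYYY-MM-DD]   (KIND: systemlog | tomcat-accesslog | nginx-accesslog)
index_for() {
  kind=$1
  day=${2:-$(date -u +%Y-%m-%d)}
  # YYYY-MM-DD -> YYYY.MM.DD
  printf 'logstash-%s-%s\n' "$kind" "$(printf '%s\n' "$day" | sed 's/-/./g')"
}
```

For example, `curl -s "http://192.168.0.117:9200/_cat/indices/$(index_for nginx-accesslog)?v"` lists today's nginx index along with its document count.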

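Note: with `multiline.negate: true` and `multiline.match: after`, every line that does not match `multiline.pattern` is appended to the preceding line that does. A Java stack trace therefore stays in the same event as the log line that introduced it. For more multiline options, see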
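The grouping rule can be sketched with `awk` to show how a stack trace collapses into a single event. This is an approximation for illustration only:

- The function name `group_multiline` is hypothetical.
- The date pattern is spelled out as `[0-9][0-9][0-9][0-9]-...` because some `awk` implementations do not support `{4}` intervals.
- Continuation lines are joined with ` | ` for display, whereas Filebeat keeps the newlines inside the event's message.
- Filebeat limits such as `multiline.max_lines` and `multiline.timeout` are not modeled.

```bash
# Sketch: emulate multiline.pattern '^[0-9]{4}-[0-9]{2}-[0-9]{2}' with
# multiline.negate: true and multiline.match: after.
# A line that starts with a yyyy-MM-dd date opens a new event; every other line
# is appended to the current event. Lines are joined with " | " only for display.
group_multiline() {
  awk '
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]/ {
      if (have) print event      # flush the previous event
      event = $0
      have = 1
      next
    }
    {
      if (have) event = event " | " $0   # continuation line
      else { event = $0; have = 1 }      # leading lines before the first date
    }
    END { if (have) print event }
  ' "$1"
}
```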