15. Hive: Custom UDF and UDTF Functions

I. Viewing Built-in Functions

1. List the built-in functions

hive> show functions;

2. Show the usage of a built-in function

hive> desc function upper;

3. Show the detailed usage of a built-in function, with examples

hive> desc function extended upper;

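II. Introduction to Custom Functions

1. Hive ships with built-in functions such as max and min, but the set is limited; custom UDFs are a convenient way to extend it.

2. When the built-in functions cannot meet your business requirements, consider writing a user-defined function (UDF).

3. Custom functions fall into three categories:

(1) UDF (User-Defined Function)

One row in, one row out

(2) UDAF (User-Defined Aggregation Function)

Aggregate function: many rows in, one row out

Similar to count/max/min

(3) UDTF (User-Defined Table-Generating Function)

One row in, many rows out

For example, lateral view explode()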
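The three shapes listed above can be illustrated outside Hive with plain Java. This is only an analogy and uses no Hive API: the class `FunctionShapes` and its methods are hypothetical names chosen for this sketch.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class FunctionShapes {

    // UDF shape: one value in, one value out (compare upper()).
    public static String udf(String s) {
        return s == null ? null : s.toUpperCase();
    }

    // UDAF shape: many values in, one value out (compare max()).
    public static int udaf(List<Integer> values) {
        return Collections.max(values);
    }

    // UDTF shape: one value in, several values out (compare explode()).
    public static List<String> udtf(String csv) {
        return Arrays.asList(csv.split(","));
    }

    public static void main(String[] args) {
        System.out.println(udf("abc"));
        System.out.println(udaf(Arrays.asList(3, 9, 4)));
        System.out.println(udtf("a,b,c"));
    }
}
```

In Hive the same distinction decides which base class you extend and where the function may appear in a query.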

4. Official documentation

https://cwiki.apache.org/confluence/display/Hive/HivePlugins

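III. Writing Custom Functions

1. Custom UDF: one in, one out

1.1 Create the project and import the dependency

Create a project named function-hive

Import the following dependency: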

<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>1.2.2</version>
</dependency>

Set the version to match the Hive version you are running.

1.2 Create the custom function class, extending org.apache.hadoop.hive.ql.exec.UDF

package com.cjy.udf;

import org.apache.hadoop.hive.ql.exec.UDF;

public class Lower extends UDF {

    // Converts the input string to lowercase; returns null for null input.
    public String evaluate(final String s) {
        if (s == null) {
            return null;
        }
        return s.toLowerCase();
    }
}
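Note that the class above does not override anything: the `UDF` base class declares no abstract method, and Hive looks up a method named `evaluate` on the class at query time. The sketch below mimics that lookup with plain Java reflection so it runs without Hive on the classpath. `EvaluateLookup`, `LowerLogic`, and `call` are hypothetical names for illustration, not Hive classes, and Hive's real resolver also handles overloads and type conversion.

```java
import java.lang.reflect.Method;

class EvaluateLookup {

    // Stand-in for the Lower class above, without the Hive base class,
    // so that the sketch compiles with the JDK alone.
    public static class LowerLogic {
        public String evaluate(final String s) {
            return s == null ? null : s.toLowerCase();
        }
    }

    // Finds a public method named "evaluate" with the given parameter types
    // and invokes it on the supplied object.
    public static Object call(Object udf, Class<?>[] argTypes, Object... args) {
        try {
            Method m = udf.getClass().getMethod("evaluate", argTypes);
            return m.invoke(udf, args);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("no usable evaluate method", e);
        }
    }

    public static void main(String[] args) {
        LowerLogic udf = new LowerLogic();
        Class<?>[] oneString = {String.class};
        System.out.println(call(udf, oneString, "DDDD"));
        System.out.println(call(udf, oneString, (Object) null));
    }
}
```

One practical consequence: a misspelled or non-public `evaluate` compiles fine and only fails when the function is used in a query.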

1.3 Build the jar and upload it to the server, under /opt/module/hive/

1.4 Start Hive

1. Add the jar to Hive

hive > add jar /opt/module/hive/function-hive-1.0-SNAPSHOT.jar;

2. Create a temporary function

create temporary function base_lower as 'com.cjy.udf.Lower';

3. Test the custom function

hive (default)> select base_lower('DDDD');

OK

_c0

dddd

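Demo: https://github.com/chenjy512/bigdata_study/tree/master/function-hive

This completes a simple one-in, one-out UDF.

2. Implementing a custom UDTF

2.1 Requirement: take the two test records below and break them into one row per product, in the format shown in the figure further down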

{"uid":"001","name":"张三","products":[{"pname":"iphone","price":"10101","detail":{"address":"meiguo","color":"red","size":"5.7"}},{"pname":"xiaomi","price":"4300","detail":{"address":"xiaomi","color":"blue","size":"6.0"}}]}

{"uid":"002","name":"李四","products":[{"pname":"sanx","price":"7800","detail":{"address":"sanx","color":"white","size":"6.0"}},{"pname":"onePlus","price":"5999","detail":{"address":"onePlus","color":"black","size":"6.0"}},{"pname":"vivo","price":"4900","detail":{"onePlus":"vivo","color":"white","size":"5.8"}}]}

2.2 Load the test data

Create the test table

create table t_json2(line string);

Load the data

load data local inpath '/opt/module/hive/json2.txt' into table t_json2;

2.3 Write a UDF class that parses each record into three parts: uid, name, and products

package com.cjy.udf;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.hive.ql.exec.UDF;
import org.json.JSONException;
import org.json.JSONObject;

public class BaseFieldUDF extends UDF {

    /** Sample input:
     * {"uid":"002","name":"李四",
     * "products":[{"pname":"sanx","price":"7800","detail":{"address":"sanx","color":"white","size":"6.0"}},
     * {"pname":"onePlus","price":"5999","detail":{"address":"onePlus","color":"black","size":"6.0"}},
     * {"pname":"vivo","price":"4900","detail":{"onePlus":"vivo","color":"white","size":"5.8"}}]}
     */
    public String evaluate(String line, String jsonkeysString) {
        // 0. Validate the arguments before using them
        if (StringUtils.isBlank(line) || jsonkeysString == null) {
            return "";
        }
        // 1. Buffer for the tab-separated output
        StringBuilder sb = new StringBuilder();
        // 2. Split the key list, e.g. "uid,name,products"
        String[] jsonkeys = jsonkeysString.split(",");
        // 3. Parse the JSON and look up each key in order
        try {
            JSONObject jsonObject = new JSONObject(line);
            for (int i = 0; i < jsonkeys.length; i++) {
                String fieldName = jsonkeys[i].trim();
                if (jsonObject.has(fieldName)) {
                    sb.append(jsonObject.getString(fieldName)).append("\t");
                } else {
                    // Missing key: keep an empty slot so field positions stay fixed
                    sb.append("\t");
                }
            }
        } catch (JSONException e) {
            e.printStackTrace();
        }
        return sb.toString();
    }
}
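The UDF above returns one tab-separated string, and the queries later in this article pick fields out of it by position. The sketch below reproduces just that join-then-split contract in plain Java, with a `Map` standing in for the parsed JSON object. `TabJoinSketch` and `join` are hypothetical names, and the sample record is made up for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class TabJoinSketch {

    // Mirrors the UDF's loop: one slot per requested key, each followed by a tab.
    // A missing key leaves an empty slot so later positions do not shift.
    public static String join(Map<String, String> record, String keys) {
        StringBuilder sb = new StringBuilder();
        for (String key : keys.split(",")) {
            String value = record.get(key.trim());
            sb.append(value == null ? "" : value).append("\t");
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> record = new LinkedHashMap<>();
        record.put("uid", "001");
        record.put("products", "[]");
        // "name" is absent, so index 1 is empty and products stays at index 2.
        String[] parts = join(record, "uid,name,products").split("\t", -1);
        System.out.println(parts[0] + "|" + parts[1] + "|" + parts[2]);
    }
}
```

The positional contract only holds while no field value contains a tab character itself; if the data might, a different delimiter or a struct return type would be safer.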

2.4 Write the custom UDTF

package com.cjy.udtf;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;
import org.json.JSONArray;
import org.json.JSONException;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.util.ArrayList;

public class BaseUDTF extends GenericUDTF {

    private static final Logger log = LoggerFactory.getLogger(BaseUDTF.class);

    // Declares the names and types of the output columns
    @Override
    public StructObjectInspector initialize(StructObjectInspector argOIs) throws UDFArgumentException {
        ArrayList<String> fieldNames = new ArrayList<String>();
        ArrayList<ObjectInspector> fieldOIs = new ArrayList<ObjectInspector>();
        fieldNames.add("pname");
        fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);
        fieldNames.add("product_json");
        fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);
        return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);
    }

    // Called once per input row; emits one output row per array element (one in, many out)
    @Override
    public void process(Object[] objects) throws HiveException {
        // 1. Guard against missing input before touching it
        if (objects == null || objects.length == 0 || objects[0] == null) {
            return;
        }
        // 2. Take the first argument
        String value = objects[0].toString();
        log.info(value);
        // 3. Skip blank input
        if (StringUtils.isBlank(value)) {
            return;
        }
        try {
            // Parse the argument as a JSON array
            JSONArray ja = new JSONArray(value);
            for (int i = 0; i < ja.length(); i++) {
                // Element order must match the column order declared in initialize
                String[] result = new String[2];
                try {
                    // Product name
                    result[0] = ja.getJSONObject(i).getString("pname");
                    // Full JSON text of this product
                    result[1] = ja.getString(i);
                } catch (JSONException e) {
                    // Skip a malformed element and continue with the rest
                    continue;
                }
                // Emit the row
                forward(result);
            }
        } catch (JSONException e) {
            log.error("Failed to parse the products value as a JSON array: " + value, e);
        }
    }

    @Override
    public void close() throws HiveException {
    }
}
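The control flow of `process` (emit zero or more rows per input, skip a bad element, keep going) can be tried without Hive. The sketch below is a simplified stand-in: a `Consumer` plays the role of the collector behind `forward`, and the input is a made-up `key=value;key=value` string rather than JSON, so only the JDK is needed. All names in it are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

class UdtfFlowSketch {

    // Simplified table-generating function: process() may hand the collector
    // zero, one, or many rows for a single input value.
    static class PairSplitter {
        private final Consumer<String[]> collector;

        PairSplitter(Consumer<String[]> collector) {
            this.collector = collector;
        }

        void process(String input) {
            if (input == null || input.trim().isEmpty()) {
                return; // blank input: emit nothing
            }
            for (String item : input.split(";")) {
                String[] kv = item.split("=", 2);
                if (kv.length < 2) {
                    continue; // malformed item: skip it, keep the rest
                }
                collector.accept(new String[] {kv[0], kv[1]});
            }
        }
    }

    // Feeds every input through one splitter and gathers all emitted rows.
    public static List<String[]> run(List<String> inputs) {
        List<String[]> out = new ArrayList<>();
        PairSplitter splitter = new PairSplitter(out::add);
        for (String in : inputs) {
            splitter.process(in);
        }
        return out;
    }

    public static void main(String[] args) {
        for (String[] row : run(Arrays.asList("a=1;b=2", "", "bad;c=3"))) {
            System.out.println(row[0] + "\t" + row[1]);
        }
    }
}
```

Keeping the per-element `try`/`continue` separate from the outer parse failure, as the UDTF above does, means one bad product does not discard the other products of the same user.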

2.5 Package the project and upload it to the server for testing

2.6 Start Hive

1. Add the jar

hive > add jar /opt/module/hive/function-hive-1.0-SNAPSHOT.jar;

2. Create the temporary functions

create temporary function base_udf as 'com.cjy.udf.BaseFieldUDF';

create temporary function base_udtf as 'com.cjy.udtf.BaseUDTF';

3. Let Hive run MapReduce in local mode, since the data set is small

set hive.exec.mode.local.auto=true;

4. Use base_udf to split each record into three fields

select split(base_udf(line,'uid,name,products'),'\t')[0] as uid,

split(base_udf(line,'uid,name,products'),'\t')[1] as name,

split(base_udf(line,'uid,name,products'),'\t')[2] as products

from t_json2 ;

Result:

uid name products

001 张三 [{"pname":"iphone","price":"10101","detail":{"address":"meiguo","color":"red","size":"5.7"}},{"pname":"xiaomi","price":"4300","detail":{"address":"xiaomi","color":"blue","size":"6.0"}}]

002 李四 [{"pname":"sanx","price":"7800","detail":{"address":"sanx","color":"white","size":"6.0"}},{"pname":"onePlus","price":"5999","detail":{"address":"onePlus","color":"black","size":"6.0"}},{"pname":"vivo","price":"4900","detail":{"color":"white","size":"5.8","onePlus":"vivo"}}]

5. Use the custom one-in, many-out function base_udtf

select uid,name,pname,product_json

from (select split(base_udf(line,'uid,name,products'),'\t')[0] as uid,

split(base_udf(line,'uid,name,products'),'\t')[1] as name,

split(base_udf(line,'uid,name,products'),'\t')[2] as products

from t_json2

where base_udf(line,'uid,name,products' )<> ''

) t1 lateral view base_udtf(t1.products) tmp_table as pname, product_json;
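What `lateral view` does with the UDTF's output in the query above can be pictured as a nested loop: each row of t1 is paired with every row the UDTF emits for it. The plain-Java sketch below shows only that pairing. `LateralViewSketch` is a hypothetical name, the product lists are shortened to names, and uid 003 is an invented row with no products, added to show that such a row yields no output with a plain `lateral view`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LateralViewSketch {

    // For every outer row, pair its column with each row the generator emits.
    // An outer row whose generator emits nothing produces no output row.
    public static List<String> lateralView(Map<String, List<String>> productsByUid) {
        List<String> joined = new ArrayList<>();
        for (Map.Entry<String, List<String>> row : productsByUid.entrySet()) {
            for (String pname : row.getValue()) {
                joined.add(row.getKey() + "\t" + pname);
            }
        }
        return joined;
    }

    public static void main(String[] args) {
        Map<String, List<String>> rows = new LinkedHashMap<>();
        rows.put("001", Arrays.asList("iphone", "xiaomi"));
        rows.put("002", Arrays.asList("sanx", "onePlus", "vivo"));
        rows.put("003", Collections.<String>emptyList()); // invented row, no products
        for (String line : lateralView(rows)) {
            System.out.println(line);
        }
    }
}
```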

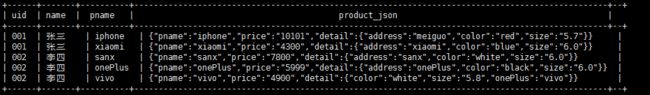

The output below meets the requirement:

+------+-------+----------+-------------------------------------------------------------------------------------------------+--+

| uid | name | pname | product_json |

+------+-------+----------+-------------------------------------------------------------------------------------------------+--+

| 001 | 张三 | iphone | {"pname":"iphone","price":"10101","detail":{"address":"meiguo","color":"red","size":"5.7"}} |

| 001 | 张三 | xiaomi | {"pname":"xiaomi","price":"4300","detail":{"address":"xiaomi","color":"blue","size":"6.0"}} |

| 002 | 李四 | sanx | {"pname":"sanx","price":"7800","detail":{"address":"sanx","color":"white","size":"6.0"}} |

| 002 | 李四 | onePlus | {"pname":"onePlus","price":"5999","detail":{"address":"onePlus","color":"black","size":"6.0"}} |

| 002 | 李四 | vivo | {"pname":"vivo","price":"4900","detail":{"color":"white","size":"5.8","onePlus":"vivo"}} |

+------+-------+----------+-------------------------------------------------------------------------------------------------+--+