日志分析大数据平台项目、Hive高阶函数、数仓建模模型

1. 日志点击流整体框架

2. 日志点击流大数据平台分析系统

2.1 表模型

-

表模型

-

原始数据 通过flume采集得到的input数据

-

原始访问日志表

- mr数据清洗原始数据. 过滤脏数据,得到weblog_origin

- 通过load方式, 加载到hive的ods层, 源数据

-

pageview表(重视每一次的访问, 一个用户访问一次就记录一次, 并且标记session和每个session中访问了多少个页面)

-

mr处理, input是weblog_origin, k2为ip—> 得到ouput就是pageView

-

load的方式, 加载到hive的ods层

-

-

visit表模型(重视每一次会话的情况, 标记每一个session起始时间)

- mr处理, input是pageView, k2为session–> output就是visit

- load的方式, 加载到hive的ods层

-

2.2 分析手段

-

高收益一定伴随高风险:一般的开公司的回报率大概在 10% - 20%

-

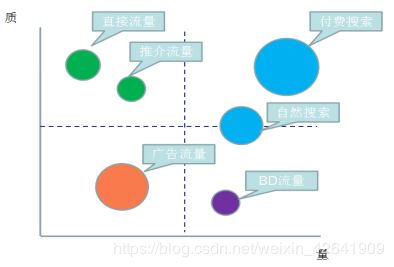

网站分析常见的一些手段:

-

流量分析常见的指标:

-

骨灰级的指标:

IP:网站每日访问不重复的ip的个数。不重复的ip的个数越多,说明我这个网站访问的独立的人越多

Page View:PV值,访问一个页面算作一次PV

Unique Page View: UV值 一天之内访问网站不重复的用户数。不重复的用户越多,说明我这个网址访问的人数越多。使用cookie来进行区分不同的用户

基础级指标:

访问次数:session级别的次数 网站停留时间:可以统计每个人再网站停留多长时间 页面停留时间:页面停留时间,每个页面停留多长时间 -

复合级指标:

人均浏览页数:平均一个人看了几个页面 总的页面浏览次数/去重的人数

跳出率:只访问了一个页面就跑了的人数/总的访问的人数

退出率:只访问了一次(session会话级别)就跑了的人数/总的访问人数

-

基础分析指标:

趋势分析:网站流量的走势

对比分析:同比与环比

当前在线:当前网站有多少个人在线进行访问

访问明细:访问用户的一些详情信息

来源分析:主要就是分析我们网站访问的各种流量都是从哪里来的

受访分析:网站受到访问的一些分析情况

访客分析:分析来我们网站访问的用户,都是哪一类人

* 大数据杀熟 * 滴滴打车 * 转化路径分析

-

-

通过ip地址可以确定我们一个人的大致范围

友盟大数据统计

https://solution.umeng.com/?spm=a211g2.182260.0.0.650d9761zdqr58

-

数据导入导出的工具:canal streamset flume 采集mysql数据 得要下去了解

实际采集mysql数据库的数据

2.3 离线处理框架

-

离线阶段框架梳理:

zookeeper + hadoop + hive + flume + azkaban+ sqoop + impala + oozie + hue

基础框架 zookeeper + hadoop

数据采集: flume

-

离线处理第一套框架:azkaban + hive + sqoop 已经比较陈旧了

-

离线处理第二套框架: oozie + impala + hue + sqoop 来处理我们离线的任务。我们可以通过托拉拽的方式,实现我们离线任务的执行以及离线任务定时执行

基于已有的伪分布式环境,如何转换成HA的环境,并且保证hdfs上面的数据不丢失

为了解决我们所有的大数据软件的安装的烦恼,我们可以使用CM图形化的界面的工具来安装管理我们的集群

以后安装大数据软件,直接在页面上点一点就行了

3. 完整技术流程详解

3.1 flume集数据

-

source Taildir监控某个目录, TailSource可以同时监控tail多个目录中的文件

a1.sources = r1 a1.sources.r1.type = TAILDIR a1.sources.r1.channels = c1 a1.sources.r1.positionFile = /var/log/flume/taildir_position.json a1.sources.r1.filegroups = f1 f2 a1.sources.r1.filegroups.f1 = /var/log/test1/example.log a1.sources.r1.filegroups.f2 = /var/log/test2/.*log.*

3.2 数据清洗, mr过滤不合规的数据(转为结构化数据)

- 视频数据属于非结构化数据

3.2.1 作用

- 得到结构化的数据

- 方便load到hive的ods层

- 处理最原始的数据,也就是flume采集过来的数据, 得到weblog_origin

- 处理weblog_origin, 得到pageView数据, k2 就是weblog_origin的ip

- 处理pageView数据, 得到visit数据, k2就是pageView中标记的session

3.3 数据仓库设计

3.3.1 概述: 数据仓库建模

-

维度表: 主键(不同的维度标识),比方说是时间, 地域,部门,产品

-

事实表:有外键约束(维度表的主键), 一件完整的事情.

-

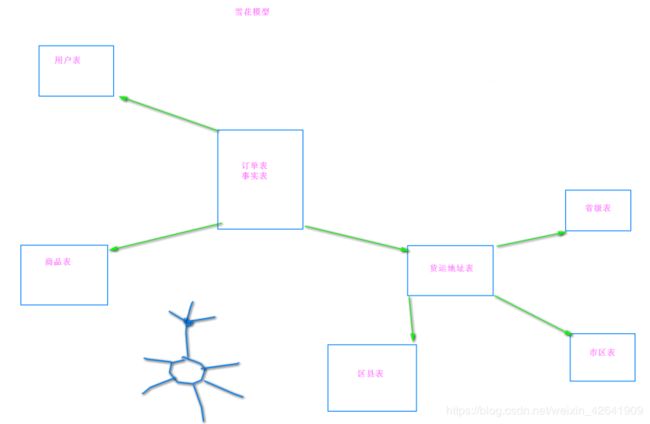

数仓建模的方式

- 星型模型

* 雪花模型

* 星座模型

3.3.2 本项目采用的建模方式

- 星型模型

- 事实表

- 原始数据表: ods_weblog_origin =>对应mr清洗完之后的数据

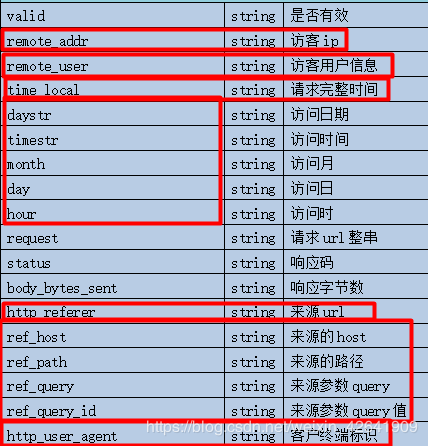

- 访问日志明细宽表:ods_weblog_detail=>对应ods事实表的精细化拆分

-

将time_local 拆分成 时,日,月

-

将http_referer 拆分成 host,来源的路径, 来源参数,来源参数值,agent

UDTF,parse_url_tuple一行进多行出的高阶行数

-

- 维度表

- 时间维度

- 访客地域维度

- 终端类型维度

- 网站栏目维度

3.4 ETL(ods层导入源数据)

Extrol, Transform, Load 抽取,转换,加载: 就是从各个数据源提取数据, 对数据进行转换, 并最终加载填充数据到数据仓库维度建模后的表中.

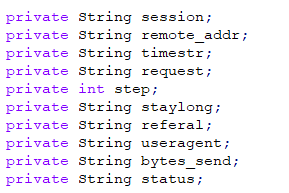

3.4.1 ods层表结构

-

ods_click_stream_visit(重视每一次session的会话情况,每次seession什么时候来的,什么时候走的)

-

ods_weblog_detail(对weblog_origin的细化)

insert into table ods_weblog_detail partition(datestr='20130918') select c.valid, c.remote_addr, c.remote_user, c.time_local, substring(c.time_local,0,10) as daystr, substring(c.time_local,12) as tmstr, substring(c.time_local,6,2) as month, substring(c.time_local,9,2) as day, substring(c.time_local,11,3) as hour, c.request, c.status, c.body_bytes_sent, c.http_referer, c.ref_host, c.ref_path, c.ref_query, c.ref_query_id, c.http_user_agent from (SELECT a.valid, a.remote_addr, a.remote_user, a.time_local, a.request, a.status, a.body_bytes_sent, a.http_referer, a.http_user_agent, b.ref_host, b.ref_path, b.ref_query, b.ref_query_id FROM ods_weblog_origin a LATERAL VIEW parse_url_tuple(regexp_replace(http_referer, "\"", ""), 'HOST','PATH','QUERY', 'QUERY:id') b as ref_host, ref_path, ref_query, ref_query_id) c;

3.5 ods构建明细宽表之Hive高阶函数详解

3.5.1 url解析函数 lateral view parse_url_tuple()

- lateral view parse_usr_tuple(fullurl,‘HOST’,‘PATH’,‘QUERY’,‘QUERY:id’) b as host,path,query,query_id. UDTF一行进多行出的函数, 解析url

- 案例

select b.* from src lateral view parse_usr_tuple(fullurl,'HOST','PATH','QUERY','QUERY:id') b as host,path,query,query_id.

-

b 就是多行出的一个临时表, 每一行的字段asxxx, 然后再b.*查出来

-

解析后的结果如下

http://www.baidu.com/hello/world?username=zhangsan

- HOST:www.baidu.com

- PATH:/hello/world

- QUERY:username

- QUERY_id:zhangsan

3.5.2 替换函数 regexp_replace()

-

regexp_replace(xxx, “被替换的字符”,“要替换为的字符”)

UDF 一行进,一行出. 用来替换字符串中的某个字符

-

案例 desc function extended regexp_replace 可以查看案例

lateral view parse_url_tuple(regexp_replace(http_referer,"\"", ""))- 第一个参数是被替换的, 引号

- 第二个参数是要替换为的字符,是一个空值

3.5.3 截取函数 substring()

-

substring(从第几个开始截取,截几个)截取函数–>注意和java中的函数区分, java中的(内部指的是索引)

-

案例 把ods_weblog_detail中的time_local拆分成5个字段 访问日期,访问时间,访问月,访问日,访问时

3.6 模块开发–统计分析

一 流量分析

1.1 时间/referer/host维度

-

时间维度: 计算每小时/每天的pvs, 访问一次就计算一次pv

drop table if exists dw_pvs_everyhour_oneday; create table if not exists dw_pvs_everyhour_oneday(month string,day string,hour string,pvs bigint) partitioned by(datestr string); insert into table dw_pvs_everyhour_oneday partition(datestr='20130918') select a.month as month,a.day as day,a.hour as hour,count(*) as pvs from ods_weblog_detail a where a.datestr='20130918' group by a.month,a.day,a.hour; create table if not exists dw_pvs_everyday(pvs bigint,month string,day string); insert into table dw_pvs_everyday select count(*) as pvs,a.month as month,a.day as day from ods_weblog_detail a group by a.month,a.day; -

按照referer维度: 统计每小时各来访url产生的pv量,查询结果存入

drop table if exists dw_pvs_referer_everyhour; create table if not exists dw_pvs_referer_everyhour (referer_url string,referer_host string,month string,day string, hour string,pv_referer_cnt bigint) partitioned by(datestr string); insert into table dw_pvs_referer_everyhour partition(datestr='20130918') select http_referer, ref_host, month, day, hour, count(1) as pv_referer_cnt from ods_weblog_detail group by http_referer,ref_host,month,day,hour having ref_host is not null order by hour asc,day asc,month asc,pv_referer_cnt desc; -

按referer维度: 统计每小时各来访host的产生的pv数并排序

drop table dw_pvs_refererhost_everyhour; create table dw_pvs_refererhost_everyhour(ref_host string,month string,day string,hour string,ref_host_cnts bigint) partitioned by(datestr string); insert into table dw_pvs_refererhost_everyhour partition(datestr='20130918') select ref_host, month, day, hour, count(1) as ref_host_cnts from ods_weblog_detail group by ref_host,month,day,hour having ref_host is not null order by hour asc,day asc,month asc,ref_host_cnts desc;

1.2 字符串拼接函数concat()

- contact(xx,yy,zz) as cts UDAF 多行进一行出, 字符串拼接

- 案例 concat(month,day, hour)

1.3 dw层hive函数: 分组求topN

-

案例:

id name sal 1 a 10 2 a 12 3 b 13 4 b 12 5 a 14 6 a 15 7 a 13 8 b 11 9 a 16 10 b 17 11 a 14 -- 需求, 按照各部门对薪水进行排序并求TopN -- 1.1 按照name分区,sal排序,并且每个分区中都为排好序的数据打上标号 select id, name, sal, rank() over(partition by name order by sal desc ) rp, dense_rank() over(partition by name order by sal desc ) drp, row_number()over(partition by name order by sal desc) rmp from f_test -- 1.2 打好标号的结果 id name rp drp rmp a 16 1 1 1 a 15 2 2 2 a 14 3 3 3 a 14 3 3 4 a 13 5 4 5 a 12 6 5 6 a 10 7 6 7 b 17 1 1 1 b 13 2 2 2 b 12 3 3 3 b 11 4 4 4 -- 1.3 三种开窗函数的区别 rank() over(partition by name order by sql desc) as rp 1,1,3顺序排,总序号不变 dense_rank over(partition by name order by sal desc) as drp 1,1,2 并列排序,总序号会减少 row_number() over(partition by name order by sql desc) as rmp 1,2,3 并列排序,总序号不变 -- 2. 按照name分组,求每个组中前3名 select * from (select id, name, sal, rank() over(partition by name order by sal desc ) rp, dense_rank() over(partition by name order by sal desc ) drp, row_number() over(partition by name order by sal desc) rmp from f_test) temp where temp.rmp <= 3; -

需求描述:按照时间维度,统计一天内各小时产生最多pvs的来访的topN

(查询dw_pvs_refererhost_everyhour表)

select ref_host, ref_host_cnts, concat(month,day,hour), row_number() over (partition by concat(month,day,hour) order by ref_host_cnts desc) as od from dw_pvs_refererhost_everyhour; --综上可以得出 drop table dw_pvs_refhost_topn_everyhour; create table dw_pvs_refhost_topn_everyhour( hour string, toporder string, ref_host string, ref_host_cnts string )partitioned by(datestr string); -- 每一个子查询语句,都是可以独立运行的 insert into table dw_pvs_refhost_topn_everyhour partition(datestr='20130918') select t.hour, t.od, t.ref_host, t.ref_host_cnts from (select ref_host, ref_host_cnts, concat(month,day,hour) as hour, row_number() over (partition by concat(month,day,hour) order by ref_host_cnts desc) as od from dw_pvs_refererhost_everyhour) t where od<=3;

1.4 人均浏览页数

-

需求描述:统计今日所有来访者平均请求的页面数。

总页面请求数/去重总人数

drop table dw_avgpv_user_everyday; create table dw_avgpv_user_everyday( day string, avgpv string); -- 添加数据 insert into table dw_avgpv_user_everyday select '20130918', sum(b.pvs) / count(b.remote_addr) from (select remote_addr, count(1) as pvs from osd_weblog_detail where datestr='20130918' group by remote_addr) b;

二 受访分析

页面受到的访问, ods_weblog_detail中的request字段(要访问的页面,这个页面就是受到访问的页面)

1.1 各页面访问统计

select

request as request,

count(1) as cts

from

ods_weblog_detail

group by request

having request is not null

order by cts

limit 20;

1.2 热门页面统计

-

统计20130918这个分区里面的受访页面的top10

drop table dw_hotpages_everyday; create table dw_hotpages_everyday(day string,url string,pvs string); -- ETL从ods层查询数据抽取到dw层 insert into table dw_hotpages_ervery select '20130918', request, count(1) as cts from ods_weblog_detail where request is not null group by request order by cts desc limit 10; -

统计每日最热门页面的top10(分组求topN)

select temp.* from (select concat(month,day) as day, request, count(1) as cts, row_number() over(partition by day order by cts desc) as rmp from ods_weblog_detail where request is not null) temp where temp.rmp >= 10;

三 访客分析

1.1 独立访客

-

需求: 每小时独立访客及其产生的pv(也就是一个用户访问了多少个页面)

安装小时,remote_addr进行分组,然后再count(1)

drop table dw_user_dstc_ip_h; create table dw_user_dstc_ip_h( remote_addr string, pvs bigint, hour string); -- 插入数据 select remote_addr, count(1) as pvs, concat(month,day,hour) as hour from ods_weblog_detail where datestr = '20130918' group by hour,remote_addr;

1.2 每日新访客

-

历史表

-

每日访客表 就是ods_weblog_detail

-

nrewIp left join hist on newIp.remote_addr = hist.ip where hist.ip is null ==>就是每日新访客表

-

得到的新访客需要追加到历史表中

--历史去重访客累积表 drop table dw_user_dsct_history; create table dw_user_dsct_history( day string, ip string) partitioned by(datestr string); --每日新访客表 drop table dw_user_new_d; create table dw_user_new_d ( day string, ip string) partitioned by(datestr string); --每日新用户插入新访客表 insert into table dw_user_new_d partition(datestr='20130918') select tmp.day as day, tmp.remote_addr as new_ip from (select a.day, a.remote_addr, from (select remote_addr, '20130918' as day from ods_weblog_detail newIp where datestr ='20130918' group by remote_addr ) a left join dw_user_dsct_history hist on a.remote_addr = hist.ip where hist.ip is null) temp; --每日新用户追加到历史累计表 insert into table dw_user_dsct_history partition(datestr='20130918') select day,ip from dw_user_new_d where datestr='20130918';

1.4 访客Visit分析(点击流模型)

-

回头访客及其访问的次数

drop table dw_user_returning; create table dw_user_returning( day string, remote_addr string, acc_cnt string) partitioned by (datestr string); -- 插入数据 insert overwrite table dw_user_returning partition(datestr='20130918') select '20130918' as day, remote_addr, count(1) as acc_cnt from ods_click_stream_visit group by remote_addr having acc_cnt > 1; -

人均访问的频次,频次表示我们来了多少个session

次数都是使用session来进行区分,一个session就是表示一次select sum(groupuser) / count(1) from (select count(1) as groupuser from ods_click_stream_visit where datestr = '20130918' group by remote_addr); -

人均页面浏览量 总的pagevisits / 总的去重人数

select sum(pagevisits)/count(distinct remote_addr) from ods_click_stream_visit where datestr='20130918';

四 关键路径转化率分析(漏斗模型)

1.1 hive当做级联求和(inner join自关联)

-- t_salary_detail

username month salary

A,2015-01,5

A,2015-01,15

B,2015-01,5

A,2015-01,8

B,2015-01,25

A,2015-01,5

A,2015-02,4

A,2015-02,6

B,2015-02,10

B,2015-02,5

A,2015-03,7

A,2015-03,9

B,2015-03,11

B,2015-03,6

-- 需求1. 求每个用户每个月总共获得多少消费

select

t.month,

t.username,

sum(salary) as salSum

from t_salary_detail

group by t.username,t.month;

+----------+-------------+---------+--+

| t.month | t.username | salsum |

+----------+-------------+---------+--+

| 2015-01 | A | 33 |

| 2015-02 | A | 10 |

| 2015-03 | A | 16 |

| 2015-01 | B | 30 |

| 2015-02 | B | 15 |

| 2015-03 | B | 17 |

+----------+-------------+---------+--+

-- 需求2. 求每个用户每个月累计小费

-- 第一步: 求每个用户每个月的消费总和

select

t.month,

t.username,

sum(salary) as salSum

from t_salary_detail

group by t.username,t.month;

-- 第二步: 使用inner join 实现自己连接自己(inner join并不以谁为基准, 而是两张表的交集)

select

a.*,

b.*

from

(select

t.month,

t.username,

sum(salSum) as salSum

from

t_salary_detail t

group by t.username,t.month) a

inner join

(select

t.month,

t.username,

sum(salSum) as salSum

from

t_salary_detail t

group by t.username,t.month) b

on a.username = b.username;

+----------+-------------+-----------+----------+-------------+-----------+--+

| a.month | a.username | a.salsum | b.month | b.username | b.salsum |

+----------+-------------+-----------+----------+-------------+-----------+--+

取这一个作为一组

| 2015-01 | A | 33 | 2015-01 | A | 33 |

| 2015-01 | A | 33 | 2015-02 | A | 10 |

| 2015-01 | A | 33 | 2015-03 | A | 16 |

取这两个作为一组

| 2015-02 | A | 10 | 2015-01 | A | 33 |

| 2015-02 | A | 10 | 2015-02 | A | 10 |

| 2015-02 | A | 10 | 2015-03 | A | 16 |

取这三个作为一组

| 2015-03 | A | 16 | 2015-01 | A | 33 |

| 2015-03 | A | 16 | 2015-02 | A | 10 |

| 2015-03 | A | 16 | 2015-03 | A | 16 |

| 2015-01 | B | 30 | 2015-01 | B | 30 |

| 2015-01 | B | 30 | 2015-02 | B | 15 |

| 2015-01 | B | 30 | 2015-03 | B | 17 |

| 2015-02 | B | 15 | 2015-01 | B | 30 |

| 2015-02 | B | 15 | 2015-02 | B | 15 |

| 2015-02 | B | 15 | 2015-03 | B | 17 |

| 2015-03 | B | 17 | 2015-01 | B | 30 |

| 2015-03 | B | 17 | 2015-02 | B | 15 |

| 2015-03 | B | 17 | 2015-03 | B | 17 |

+----------+-------------+-----------+----------+-------------+-----------+--+

-- 加参数继续变形 条件就是b.month <= a.month

select

a.*,

b.*

from

(select

t.month,

t.username,

sum(salSum) as salSum

from

t_salary_detail t

group by t.username,t.month) a

inner join

(select

t.month,

t.username,

sum(salSum) as salSum

from

t_salary_detail t

group by t.username,t.month) b

on a.username = b.username

where b.month <= a.month;

+----------+-------------+-----------+----------+-------------+-----------+--+

| a.month | a.username | a.salsum | b.month | b.username | b.salsum |

+----------+-------------+-----------+----------+-------------+-----------+--+

| 2015-01 | A | 33 | 2015-01 | A | 33 | 33

| 2015-02 | A | 10 | 2015-01 | A | 33 | 43

| 2015-02 | A | 10 | 2015-02 | A | 10 |

| 2015-03 | A | 16 | 2015-01 | A | 33 | 59

| 2015-03 | A | 16 | 2015-02 | A | 10 |

| 2015-03 | A | 16 | 2015-03 | A | 16 |

| 2015-01 | B | 30 | 2015-01 | B | 30 | 30

| 2015-02 | B | 15 | 2015-01 | B | 30 | 45

| 2015-02 | B | 15 | 2015-02 | B | 15 |

| 2015-03 | B | 17 | 2015-01 | B | 30 | 62

| 2015-03 | B | 17 | 2015-02 | B | 15 |

| 2015-03 | B | 17 | 2015-03 | B | 17 |

+----------+-------------+-----------+----------+-------------+-----------+--+

-- 第三步: 从第二步的结果中继续对a.month,a.username进行分组,并对分组后的b.salSum进行sum

select

temp.username,

temp.month,

max(asalSum),

sum(bsalSum)

from

(select

a.username as ausername,

a.month as amonth,

a.salSum as asalSum,

b.salSum as bsalSum

from

(select

t.month,

t.username,

sum(salSum) as salSum

from

t_salary_detail t

group by t.username,t.month) a

inner join

(select

t.month,

t.username,

sum(salSum) as salSum

from

t_salary_detail t) b

on a.username = b.username

where b.month <= a.month;

) temp

order by temp.username,a.month;

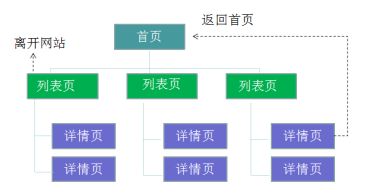

1.2 漏斗模型

第一个指标: 每一步现对于第一步的转化率

第二个指标: 每一步相对于上一步的转化率

1.2.1 查询每一步骤的总访问人数

create table dw_oute_numbs as

select

'step1' as step,

count(distinct remote_addr) as numbs

from

ods_click_pageviews

where datestr = '20130918'

and request like '/item%'

union all

select

'step2' as step,

count(distinct remote_addr) as numbs

from

ods_click_pageviews

where datestr = '20130918'

and request like '/category%'

select

'step3' as step,

count(distinct remote_addr) as numbs

from

ods_click_pageviews

where datestr = '20130918'

and request like '/order%'

select

'step4' as step,

count(distinct remote_addr) as numbs

from

ods_click_pageviews

where datestr = '20130918'

and request like '/index%'

+---------------------+----------------------+--+

| dw_oute_numbs.step | dw_oute_numbs.numbs |

+---------------------+----------------------+--+

| step1 | 1029 |

| step2 | 1029 |

| step3 | 1028 |

| step4 | 1018 |

+---------------------+----------------------+--+

1.2.2 查询每一步骤相对于路径起点人数的比例(级联查询,自己跟自己join)

-- 1. 先自关联得到总表, 这时候自关联就没有on条件了,

select

a.step as astep,

a.numbs as anumbs,

b.step as bstep,

b.numbs as bnumbs

from

dw_oute_numbs a

inner join

dw_oute_numbs b;

+---------+----------+---------+----------+--+

| a.step | a.numbs | b.step | b.numbs |

+---------+----------+---------+----------+--+

| step1 | 1029 | step1 | 1029 |

| step2 | 1029 | step1 | 1029 |

| step3 | 1028 | step1 | 1029 |

| step4 | 1018 | step1 | 1029 |

| step1 | 1029 | step2 | 1029 |

| step2 | 1029 | step2 | 1029 |

| step3 | 1028 | step2 | 1029 |

| step4 | 1018 | step2 | 1029 |

| step1 | 1029 | step3 | 1028 |

| step2 | 1029 | step3 | 1028 |

| step3 | 1028 | step3 | 1028 |

| step4 | 1018 | step3 | 1028 |

| step1 | 1029 | step4 | 1018 |

| step2 | 1029 | step4 | 1018 |

| step3 | 1028 | step4 | 1018 |

| step4 | 1018 | step4 | 1018 |

+---------+----------+---------+----------+--+

-- 2. 加条件 只取 a表的step1

select

a.step as astep,

a.numbs as anumbs,

b.step as bstep,

b.numbs as bnumbs

from

dw_oute_numbs a

inner join

dw_oute_numbs b

where a.step = 'step1';

+---------+----------+---------+----------+--+

| a.step | a.numbs | b.step | b.numbs |

+---------+----------+---------+----------+--+

| step1 | 1029 | step1 | 1029 |

| step1 | 1029 | step2 | 1029 |

| step1 | 1029 | step3 | 1028 |

| step1 | 1029 | step4 | 1018 |

+---------+----------+---------+----------+--+

-- 3. 求比率

select

temp.bnumbs/temp.anumbs as otherToFirstRation

from

(select

a.step as astep,

a.numbs as anumbs,

b.step as bstep,

b.numbs as bnumbs

from

dw_oute_numbs a

inner join

dw_oute_numbs b

where a.step = 'step1';

) temp;

+---------+

| otherToFirstRation |

+---------+

| 1.0 |

| 1.0 |

| 0.9990 |

| 0.9893 |

+---------+

1.2.3 每一步相对于上衣布的转化率 secondToFirstRation==>cast(被转换的数据, 要转换的类型)

- hive中的函数 cast(),转换函数

- UDF 将string类型转为int类型

- cast(substr(xxx,5,1) as int) 将截取后的字符串转为int类型

- casr(‘2013-09-18’, date) 转换为日期类型

- 需求: 每一步相对于上一步的转化率

-- 先过滤出条件

select

a.step as astep,

a.numbs as anumbs,

b.step as bstep,

b.numbs as bnumbs

from

dw_oute_numbs a

inner join

dw_oute_numbs b

where cast(substr(a.step, 5, 1), int) = cast(substr(b.step, 5, 1), int) - 1;

+---------+----------+---------+----------+--+

| a.step | a.numbs | b.step | b.numbs |

+---------+----------+---------+----------+--+

| step1 | 1029 | step2 | 1029 |

| step2 | 1029 | step3 | 1028 |

| step3 | 1028 | step4 | 1018 |

+---------+----------+---------+----------+--+

-- 再求比率

select

temp.bnumbs/temp.anumbs as secondToFirstRation

from

(select

a.step as astep,

a.numbs as anumbs,

b.step as bstep,

b.numbs as bnumbs

from

dw_oute_numbs a

inner join

dw_oute_numbs b

where cast(substr(a.step, 5, 1), int) = cast(substr(b.step, 5, 1), int) - 1

) temp;

+---------+

| secondToFirstRation |

+---------+

| 1.0 |

| 0.9990 |

| 0.9983 |

+---------+

3.7 hive到出到mysql中

-

先在mysql中创建库,创建表

-

sqoop找到hive在hdfs存储位置,默认user/root/warehouse/weblog/表文件夹

/export/servers/sqoop-xxx/bin/sqoop export \ --connect jdbc:mysql://192.168.137.188:3306/weblog \ --username root --passwrod root \ --m 1 \ --export-dir /user/root/warehouse/weblog/xxx \ --table mysql中的表名 \ --input-fields-terminated-by '\001'

3.8 azkaban调度

3.8.1 大体的轮廓

flume一直在运行, 唯一要做的就是要监控十分正常运行

- 数据的清洗 三个mr程序需要运行

- hive当中表数据的加载

- ETL开发

- 将结果数据到出

3.8.2 小记忆

- date -d ‘-1 day’ + %Y%m%d

3.9 echarts数据可视化

4 面试总结

-

一天数据 50G-100G 2-3个人维护

-

集群数量30台左右 每台硬盘配置12T-24T 内存最少64GB CM搭建运行环境

-

首先要确定你要运行哪些框架?

zookeeper hadoop hive flume sqoop

zookeeper:奇数台,7-9台都行

hadoop HA: namenode 2 个 datanode 26个 journalenode 7-9个

zkfc 与namenode同在

resourceManager 2 个 nodeManager 26个

hive:随便找一个datanode装上就行了

sqoop:随便找一个datanode装上就行了集群的服务的规划:主节点彻底分开,不要与其他的节点混淆

如需原始日志资料 请添加qq:1943228490 备注CSDN