Oozie: Using Oozie

Using Oozie

| KEY | Meaning |
| --- | --- |
| nameNode | HDFS address |
| jobTracker | JobTracker (ResourceManager) address |
| queueName | Queue the job is submitted to (default: default) |
| examplesRoot | Root directory for the job files (default: examples) |
| oozie.use.system.libpath | Whether to load the Oozie system sharelib (true/false) |
| oozie.libpath | Location of the user's lib directory |
| oozie.wf.application.path | HDFS path of the Oozie workflow (where workflow.xml is located) |
| user.name | Current user |
| oozie.coord.application.path | Path of coordinator.xml (omit if not used) |
| oozie.bundle.application.path | Path of bundle.xml (omit if not used) |
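To make the ${...} references in these keys concrete, here is a minimal bash sketch of how a value such as oozie.wf.application.path expands. It is not Oozie's own resolver: load_props and resolve are hypothetical helper names, and user.name is set by hand to root, whereas Oozie takes it from the submitting user.

```shell
#!/usr/bin/env bash
# Illustrative only: expands ${name} references the way job.properties values
# are meant to be read. load_props and resolve are hypothetical helpers.
declare -A props

# Read key=value lines from stdin into the props map.
load_props() {
  local line
  while IFS= read -r line; do
    [[ -z $line || $line == \#* ]] && continue   # skip blanks and comments
    props[${line%%=*}]=${line#*=}
  done
}

# Expand every ${name} in the argument; fail on an undefined name.
resolve() {
  local text=$1 name ref
  local re='\$\{([A-Za-z0-9_.]+)\}'
  while [[ $text =~ $re ]]; do
    name=${BASH_REMATCH[1]}
    ref="\${$name}"
    if [[ -z ${props[$name]+set} ]]; then
      echo "undefined property: $name" >&2
      return 1
    fi
    text=${text//"$ref"/${props[$name]}}
  done
  printf '%s\n' "$text"
}

load_props <<'EOF'
nameNode=hdfs://node01:8020
examplesRoot=oozie_works
oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/shell
EOF
props[user.name]=root   # assumption: the job is submitted as root

resolve "${props[oozie.wf.application.path]}"
```

With these sample values the last line prints hdfs://node01:8020/user/root/oozie_works/shell, which is the HDFS directory the workflow files have to be uploaded to.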

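Scheduling a shell script with Oozie

Once Oozie is installed, we need to check that it works end to end. The distribution ships with a set of example applications, and we can use them to test scheduling.

Step 1: Unpack the bundled examples

The bundled examples also serve as templates, so we unpack them first.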

cd /export/servers/oozie-4.1.0-cdh5.14.0

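tar -zxf oozie-examples.tar.gz

Step 2: Create a working directory

Create an Oozie working directory anywhere you like; all configuration files for scheduled jobs will go into it.

Here it is created directly under the Oozie installation directory.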

cd /export/servers/oozie-4.1.0-cdh5.14.0

mkdir oozie_works

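Step 3: Copy the job template into the working directory

With the templates and the working directory ready, copy the shell job template into the Oozie working directory.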

cd /export/servers/oozie-4.1.0-cdh5.14.0

cp -r examples/apps/shell/ oozie_works/

Step 4: Prepare a simple shell script

cd /export/servers/oozie-4.1.0-cdh5.14.0

vim oozie_works/shell/hello.sh

Note: the script must be placed in the shell directory under the Oozie working directory.

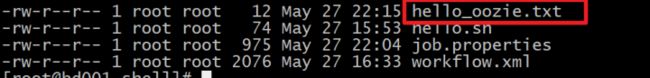

#!/bin/bash

echo "hello world" >> /export/servers/hello_oozie.txt

Step 5: Modify the template configuration files

Modify job.properties

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/shell

vim job.properties

nameNode=hdfs://node01:8020

jobTracker=node01:8032

queueName=default

examplesRoot=oozie_works

oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/shell

EXEC=hello.sh

Modify workflow.xml

vim workflow.xml

The values set in workflow.xml are, by element:

job-tracker: ${jobTracker}

name-node: ${nameNode}

configuration property mapred.job.queue.name: ${queueName}

exec: ${EXEC}

file: /user/root/oozie_works/shell/${EXEC}#${EXEC}

decision case: ${wf:actionData('shell-node')['my_output'] eq 'Hello Oozie'}

kill message: Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]

kill message: Incorrect output, expected [Hello Oozie] but was [${wf:actionData('shell-node')['my_output']}]
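The decision expression above looks up my_output in the data captured from the shell action. Oozie only has such data when the action captures stdout and the script prints key=value lines in Java properties style. The hello.sh from step 4 prints nothing to stdout, so the check could only pass with a script extended along the following lines. This is a sketch that assumes output capture is enabled on the action, which the values above do not confirm; emit_output is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch of a hello.sh variant whose stdout Oozie could capture.
# emit_output is a hypothetical helper; my_output is the key that the
# decision expression looks up.
emit_output() {
  # One key=value pair per line, Java properties style.
  printf '%s=%s\n' "$1" "$2"
}

emit_output my_output "Hello Oozie"
```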

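Step 6: Upload the job to HDFS

Note: upload to /user/root, because Hadoop was started as root here and oozie.wf.application.path points under /user/${user.name}. If Hadoop runs as a different user, upload to /user/ followed by that user's name instead.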

cd /export/servers/oozie-4.1.0-cdh5.14.0

hdfs dfs -put oozie_works/ /user/root
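Before uploading, it can help to confirm that the application directory is complete, since a missing file only shows up later as a failed job. A minimal sketch, assuming the layout used in this article; check_app_dir is a hypothetical helper, not an Oozie command.

```shell
#!/usr/bin/env bash
# Hypothetical pre-upload check: confirm the application directory holds
# workflow.xml, job.properties, and the script named by EXEC.
check_app_dir() {
  local dir=$1 exec_name missing=0 f
  # Read the EXEC value from job.properties (empty if the key is absent).
  exec_name=$(sed -n 's/^EXEC=//p' "$dir/job.properties" 2>/dev/null)
  for f in workflow.xml job.properties ${exec_name:+"$exec_name"}; do
    if [[ ! -f $dir/$f ]]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (paths from this article):
# check_app_dir oozie_works/shell && hdfs dfs -put oozie_works/ /user/root
```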

Step 7: Run the job

Run the job with the oozie command.

cd /export/servers/oozie-4.1.0-cdh5.14.0

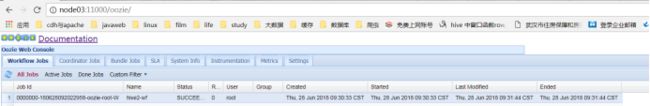

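bin/oozie job -oozie http://bd001:11000/oozie -config oozie_works/shell/job.properties -run

The monitoring UI shows that the job ran successfully.

On Hadoop's port 19888 (the JobHistory web UI) we can see that Oozie started an MR job to execute the shell script.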
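When -run succeeds, the oozie CLI prints a line of the form "job: " followed by the job ID. The sketch below captures that ID so the job can also be inspected from the command line with oozie job -info. extract_job_id is a hypothetical helper, and the sample ID is made up so the snippet runs without a live server.

```shell
#!/usr/bin/env bash
# Pull the workflow ID out of the "job: <id>" line printed by `oozie job -run`.
# extract_job_id is a hypothetical helper name.
extract_job_id() {
  sed -n 's/^job: *//p'
}

# Made-up sample of the CLI output, used here instead of a live server.
sample_output='job: 0000000-180822100000000-oozie-root-W'
job_id=$(printf '%s\n' "$sample_output" | extract_job_id)
echo "$job_id"

# Against a real server (URL taken from this article):
# job_id=$(bin/oozie job -oozie http://bd001:11000/oozie \
#            -config oozie_works/shell/job.properties -run | extract_job_id)
# bin/oozie job -oozie http://bd001:11000/oozie -info "$job_id"
```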

Scheduling Hive with Oozie

Step 1: Copy the Hive example template

cd /export/servers/oozie-4.1.0-cdh5.14.0

cp -ra examples/apps/hive2/ oozie_works/

Step 2: Edit the Hive template

This example submits the job through HiveServer2, so the hiveserver2 service must be running before the job is scheduled.

hive --service hiveserver2 &

hive --service metastore &

Modify job.properties

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/hive2

vim job.properties

nameNode=hdfs://bd001:8020

jobTracker=bd001:8032

queueName=default

jdbcURL=jdbc:hive2://bd001:10000/default

examplesRoot=oozie_works

oozie.use.system.libpath=true

# HDFS path the files are uploaded to; in practice this is /user/root/oozie_works/hive2

oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/hive2

Modify workflow.xml

vim workflow.xml

The values set in workflow.xml are, by element:

job-tracker: ${jobTracker}

name-node: ${nameNode}

configuration property mapred.job.queue.name: ${queueName}

jdbc-url: ${jdbcURL}

param: INPUT=/user/${wf:user()}/${examplesRoot}/input-data/table

param: OUTPUT=/user/${wf:user()}/${examplesRoot}/output-data/hive2

kill message: Hive2 (Beeline) action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]

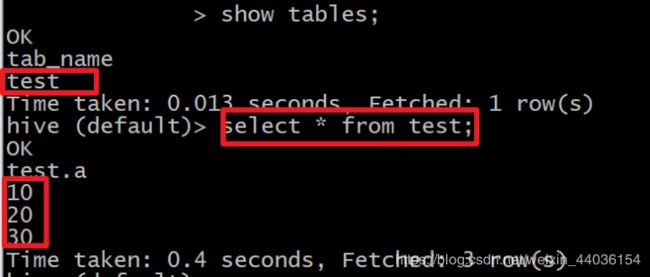

Edit the Hive SQL file

vim script.q

DROP TABLE IF EXISTS test;

CREATE EXTERNAL TABLE default.test (a INT) STORED AS TEXTFILE LOCATION '${INPUT}';

insert into test values(10);

insert into test values(20);

insert into test values(30);

Step 3: Upload the working files to HDFS

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works

hdfs dfs -put hive2/ /user/root/oozie_works/

Step 4: Run the Oozie job

cd /export/servers/oozie-4.1.0-cdh5.14.0

bin/oozie job -oozie http://bd001:11000/oozie -config oozie_works/hive2/job.properties -run

Step 5: Check the result

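Scheduling an MR job with Oozie

Step 1: Prepare the input data for the MR job

Here Oozie schedules an MR program. The program can be one you wrote yourself or one that ships with Hadoop; we use the wordcount example bundled with Hadoop.

Prepare the following data and upload it to /oozie/input on HDFS.

hdfs dfs -mkdir -p /oozie/input

vim wordcount.txt

hello world hadoop

spark hive hadoop

Upload the data to the corresponding HDFS directory.

hdfs dfs -put wordcount.txt /oozie/input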
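To know what the wordcount job should produce for this input, the counts can be computed locally first. The sketch below is a plain shell pipeline, not the Hadoop job; count_words is a hypothetical helper, and the word, tab, count layout is chosen to resemble the text output the example job is expected to write.

```shell
#!/usr/bin/env bash
# Count words locally to know what the wordcount job should produce.
# count_words is a hypothetical helper, not part of Hadoop.
count_words() {
  # Split on spaces, sort bytewise, count duplicates, print word<TAB>count.
  tr -s ' ' '\n' | LC_ALL=C sort | uniq -c | awk '{print $2 "\t" $1}'
}

printf 'hello world hadoop\nspark hive hadoop\n' | count_words
```

For the two input lines above this lists hadoop with a count of 2 and hello, hive, spark, and world with a count of 1 each.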

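Step 2: Run the official test example

hadoop jar /export/servers/hadoop-2.6.0-cdh5.14.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0-cdh5.14.0.jar wordcount /oozie/input/ /oozie/output

This run creates /oozie/output. MapReduce refuses to write to an output directory that already exists, so remove it (hdfs dfs -rm -r /oozie/output) before the Oozie workflow writes to the same path, unless workflow.xml deletes it in a prepare step.

Step 3: Prepare the resources to schedule

Put everything the job needs into one folder: the JAR, job.properties, and workflow.xml.

Copy the MR job template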

cd /export/servers/oozie-4.1.0-cdh5.14.0

cp -ra examples/apps/map-reduce/ oozie_works/

Delete the JAR bundled in the lib directory of the MR job template

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/map-reduce/lib

rm -rf oozie-examples-4.1.0-cdh5.14.0.jar

Copy the JAR to be scheduled into that directory

As the deletion above shows, the JAR to be scheduled lives in /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/map-reduce/lib, so we put our own JAR there as well.

cp /export/servers/hadoop-2.6.0-cdh5.14.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0-cdh5.14.0.jar /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/map-reduce/lib/

Step 4: Modify the configuration files

Modify job.properties

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/map-reduce

vim job.properties

nameNode=hdfs://node01:8020

jobTracker=node01:8032

queueName=default

examplesRoot=oozie_works

oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/map-reduce/workflow.xml

outputDir=/oozie/output

inputdir=/oozie/input

Modify workflow.xml

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/map-reduce

vim workflow.xml

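The values set in workflow.xml are, by element:

job-tracker: ${jobTracker}

name-node: ${nameNode}

configuration property mapred.job.queue.name: ${queueName}

configuration property mapred.mapper.new-api: true

configuration property mapred.reducer.new-api: true

configuration property mapreduce.job.output.key.class: org.apache.hadoop.io.Text

configuration property mapreduce.job.output.value.class: org.apache.hadoop.io.IntWritable

configuration property mapred.input.dir: ${nameNode}/${inputdir}

configuration property mapred.output.dir: ${nameNode}/${outputDir}

configuration property mapreduce.job.map.class: org.apache.hadoop.examples.WordCount$TokenizerMapper

configuration property mapreduce.job.reduce.class: org.apache.hadoop.examples.WordCount$IntSumReducer

configuration property mapred.map.tasks: 1

kill message: Map/Reduce failed, error message[${wf:errorMessage(wf:lastErrorNode())}]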

Step 5: Upload the job to the corresponding HDFS directory

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works

hdfs dfs -put map-reduce/ /user/root/oozie_works/

Step 6: Run the job

Run the job, then check the result through Oozie's web console on port 11000.

cd /export/servers/oozie-4.1.0-cdh5.14.0

bin/oozie job -oozie http://bd001:11000/oozie -config oozie_works/map-reduce/job.properties -run

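Chaining jobs in Oozie

In real work there are usually several jobs to run, and often the output of one job is the input of the next. To express this, we configure multiple actions in workflow.xml so that the jobs depend on one another.

Requirement: run a shell script first, then an MR program, and finally a Hive program.

Step 1: Prepare the working directory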

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works

mkdir -p sereval-actions

Step 2: Prepare the files to schedule

Chain the earlier hive, shell, and MR jobs into a single workflow, and prepare the resource files.

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works

cp hive2/script.q sereval-actions/

cp shell/hello.sh sereval-actions/

cp -ra map-reduce/lib sereval-actions/

Step 3: Write the scheduling configuration files

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/sereval-actions

Create and edit the configuration file workflow.xml

vim workflow.xml

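The values set in workflow.xml are, by action and element:

Shell action

job-tracker: ${jobTracker}

name-node: ${nameNode}

configuration property mapred.job.queue.name: ${queueName}

exec: ${EXEC}

file: /user/root/oozie_works/sereval-actions/${EXEC}#${EXEC}

Map-reduce action

job-tracker: ${jobTracker}

name-node: ${nameNode}

configuration property mapred.job.queue.name: ${queueName}

configuration property mapred.mapper.new-api: true

configuration property mapred.reducer.new-api: true

configuration property mapreduce.job.output.key.class: org.apache.hadoop.io.Text

configuration property mapreduce.job.output.value.class: org.apache.hadoop.io.IntWritable

configuration property mapred.input.dir: ${nameNode}/${inputdir}

configuration property mapred.output.dir: ${nameNode}/${outputDir}

configuration property mapreduce.job.map.class: org.apache.hadoop.examples.WordCount$TokenizerMapper

configuration property mapreduce.job.reduce.class: org.apache.hadoop.examples.WordCount$IntSumReducer

configuration property mapred.map.tasks: 1

Hive2 action

job-tracker: ${jobTracker}

name-node: ${nameNode}

configuration property mapred.job.queue.name: ${queueName}

jdbc-url: ${jdbcURL}

param: INPUT=/user/${wf:user()}/${examplesRoot}/input-data/table

param: OUTPUT=/user/${wf:user()}/${examplesRoot}/output-data/hive2

Decision and kill nodes

decision case: ${wf:actionData('shell-node')['my_output'] eq 'Hello Oozie'}

kill message: Shell action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]

kill message: Incorrect output, expected [Hello Oozie] but was [${wf:actionData('shell-node')['my_output']}]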

Write the job.properties configuration file

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/sereval-actions

vim job.properties

nameNode=hdfs://bd001:8020

jobTracker=bd001:8032

queueName=default

examplesRoot=oozie_works

EXEC=hello.sh

outputDir=/oozie/output

inputdir=/oozie/input

jdbcURL=jdbc:hive2://bd001:10000/default

oozie.use.system.libpath=true

# HDFS path the files are uploaded to; in practice this is /user/root/oozie_works/sereval-actions

oozie.wf.application.path=${nameNode}/user/${user.name}/${examplesRoot}/sereval-actions/workflow.xml
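With three actions sharing one job.properties, it is easy to forget a key such as jdbcURL or inputdir. The sketch below lists the plain ${name} parameters that a workflow file uses but a properties file does not define. undefined_params is a hypothetical helper; it only looks at job.properties, so parameters supplied some other way would be reported as missing.

```shell
#!/usr/bin/env bash
# Hypothetical check: list ${name} parameters used in workflow.xml that
# job.properties does not define. EL calls such as ${wf:user()} are skipped
# because the pattern only matches plain identifiers.
undefined_params() {
  local workflow=$1 properties=$2 name
  grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$workflow" | sort -u |
  while IFS= read -r name; do
    name=${name:2}    # drop the leading ${
    name=${name%?}    # drop the trailing }
    grep -q "^${name}=" "$properties" || echo "$name"
  done
}

# Usage (run inside the application directory from this article):
# undefined_params workflow.xml job.properties
```

An empty result means every plain parameter referenced in the workflow has a matching key in job.properties.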

Step 4: Upload the resource folder to the corresponding HDFS path

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/

hdfs dfs -put sereval-actions/ /user/root/oozie_works/

Step 5: Run the job

cd /export/servers/oozie-4.1.0-cdh5.14.0/

bin/oozie job -oozie http://bd001:11000/oozie -config oozie_works/sereval-actions/job.properties -run

Setting up timed jobs in Oozie

Step 1: Copy the timed-job scheduling template

cd /export/servers/oozie-4.1.0-cdh5.14.0

cp -r examples/apps/cron oozie_works/cron-job

Step 2: Copy the hello.sh script

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works

cp shell/hello.sh cron-job/

Step 3: Modify the configuration files

Modify job.properties

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works/cron-job

vim job.properties

nameNode=hdfs://node01:8020

jobTracker=node01:8032

queueName=default

examplesRoot=oozie_works

oozie.coord.application.path=${nameNode}/user/${user.name}/${examplesRoot}/cron-job/coordinator.xml

start=2018-08-22T19:20+0800

end=2019-08-22T19:20+0800

EXEC=hello.sh

workflowAppUri=${nameNode}/user/${user.name}/${examplesRoot}/cron-job/workflow.xml

Modify coordinator.xml

vim coordinator.xml

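The values set in coordinator.xml are, by element:

workflow app-path: ${workflowAppUri}

configuration property jobTracker: ${jobTracker}

configuration property nameNode: ${nameNode}

configuration property queueName: ${queueName}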
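The start and end values in job.properties use the form yyyy-MM-ddTHH:mm followed by a +0800 offset. Oozie's default processing timezone is UTC, where times end in Z, so the +0800 form presumably relies on the server being configured with oozie.processing.timezone=GMT+0800; that setting is an assumption here, not something this article shows. A minimal sketch for producing such a value from an epoch timestamp, where oozie_time is a hypothetical helper that depends on GNU date:

```shell
#!/usr/bin/env bash
# Format an epoch timestamp as yyyy-MM-ddTHH:mm+0800, the shape of the
# start/end values in job.properties. oozie_time is a hypothetical helper;
# it relies on GNU date's -d @EPOCH syntax.
oozie_time() {
  # POSIX TZ strings invert the sign: CST-8 means UTC+8.
  TZ='CST-8' date -d "@$1" '+%Y-%m-%dT%H:%M%z'
}

oozie_time 1534936800
```

The sample timestamp corresponds to 2018-08-22T19:20+0800, the start value used in job.properties.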

Modify workflow.xml

vim workflow.xml

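The values set in workflow.xml are, by element:

job-tracker: ${jobTracker}

name-node: ${nameNode}

configuration property mapred.job.queue.name: ${queueName}

exec: ${EXEC}

file: /user/root/oozie_works/cron-job/${EXEC}#${EXEC}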

Step 4: Upload to the corresponding HDFS path

cd /export/servers/oozie-4.1.0-cdh5.14.0/oozie_works

hdfs dfs -put cron-job/ /user/root/oozie_works/

Step 5: Run the timed job

cd /export/servers/oozie-4.1.0-cdh5.14.0

bin/oozie job -oozie http://node03:11000/oozie -config oozie_works/cron-job/job.properties -run