初尝Kaggle系列—Leaf Classification(keras)

Leaf Classification 是比较老的一个题目了,目前在Kaggle上已经有了很多的优秀kernel,作为一名课余时间自学深度学习的学生,拿这道题目来熟悉CNN和Keras,同时写一下自己在做这道题的过程中遇到的一些问题和自己感悟(PS:private leaderboard score 0.00191)。如若有错误或不足的地方,欢迎各位大佬指正。

一、解题思路介绍

题目中提供了两种信息,1、原始图片信息,2、预处理特征(the provide pre-extracted features:margin、sharp、texture)。第一种方案,我们可以直接利用已有特征信息,构建常规分类器例如Adaboosting,Svm,Logistic,决策树等等,或者搭建神经网络来处理pre-extracted features。第二种方案,利用图片信息搭建卷积神经网络,当然也可以利用现有的网络模型。第三种方案就是同时利用图片信息与预处理特征,当时看到这个想法的时候(大佬AbhijeetMulgund的思路),就很感兴趣,所以就按照这个思路做了(也算是一个及其简易的modle ensemble吧)。

二、题目代码

1、读取train.csv,test.csv中的信息,读取image信息,class编码,这些都可以参考AbhijeetMulgund的代码(请不要吝啬你对大佬的vote)。

2、因为要搭建CNN网络,所以利用已有的image肯定是不够的,需要Data Augmentation,又因为我们需要将两种特征混合到一个网络之中去,因此不能简单的调用ImageDataGenerator,这里我提供两种方案a、重载class ImageDataGenerator,b、自定义generator。这里我说明一下,其实自定义generator更简单一点,但是因为是初学者,所以我就都写了一遍。

a、重载ImageDataGenerator(利用源代码去改写,我第一次就是打算自己单撸,各种bug)

from keras.preprocessing.image import ImageDataGenerator,NumpyArrayIterator,Iterator

class ImageDataGenerator_leaf(ImageDataGenerator):

def flow(self, x,pre_feature,y,batch_size=32,

shuffle=False, seed=None):

return NumpyArrayIterator_leaf(

x,pre_feature,y,self,

batch_size=batch_size,

shuffle=shuffle,

seed=seed)

class NumpyArrayIterator_leaf(Iterator):

def __init__(self, x,pre_feature, y, image_data_generator,

batch_size=32, shuffle=False, seed=None,

data_format=None,

subset=None)

if data_format is None:

data_format = K.image_data_format()

self.pre_feature = np.asarray(pre_feature) #预处理特征

if self.x.ndim != 4:

raise ValueError('Input data in `NumpyArrayIterator` '

'should have rank 4. You passed an array '

'with shape', self.x.shape)

self.x = np.asarray(x) #传入的image信息

if y is not None:

self.y = np.asarray(y)

else:

self.y = None

self.image_data_generator = image_data_generator

self.data_format = data_format

self.n = len(self.x)

self.batch_size = batch_size

self.shuffle =shuffle

self.seed = seed

self.index_generator = self._flow_index()

super(NumpyArrayIterator_leaf, self).__init__(self.n, batch_size, shuffle, seed)

def _get_batches_of_transformed_samples(self, index_array):

batch_x = np.zeros(tuple([len(index_array)] + list(self.x.shape)[1:]),

dtype=K.floatx())

batch_pre_feature = np.zeros(tuple([len(index_array)] +[len(self.pre_feature[0])] ),

dtype=K.floatx())

for i, j in enumerate(index_array):

x_temp = self.x[j]

if self.image_data_generator.preprocessing_function:

x_temp = self.image_data_generator.preprocessing_function(x_temp)

x_temp = self.image_data_generator.random_transform(x_temp.astype(K.floatx()))

x_temp = self.image_data_generator.standardize(x_temp)

batch_x[i] = x_temp

if self.y is None:

return batch_x

batch_pre_feature = self.pre_feature[index_array]

batch_y = self.y[index_array]

return [batch_x,batch_pre_feature], batch_y

def next(self):

# Keeps under lock only the mechanism which advances

# the indexing of each batch.

with self.lock:

index_array = next(self.index_generator)

# The transformation of images is not under thread lock

# so it can be done in parallel

return self._get_batches_of_transformed_samples(index_array)imgen = ImageDataGenerator_leaf(

rotation_range=20,

zoom_range=0.2,

horizontal_flip=True,

vertical_flip=True,

fill_mode='nearest')

imgen_train = imgen.flow(X_img_tr,X_num_tr, y_tr_cat)b、自定义generator

①、先定义生成器

imgen = ImageDataGenerator(

rotation_range=20,

zoom_range=0.2,

horizontal_flip=True,

vertical_flip=True,

fill_mode='nearest')

②、定义自己的generator

def leaf_generator(generator, X_img_tr, X_num_tr, y_tr_cat): imgen_train = generator.flow(X_img_tr, y_tr_cat) n = (len(X_img_tr)+31)//32 #这里我使用了默认的batch_size:32,然后根据generator源代码里迭代次数的定义

while True: # n = (self.n+batch_size-1)//batch_size 计算n值 for i in range(n): x_img,y = imgen_train.next() x_num = X_num_tr[imgen.index_array[:32]]

yield [x_img,x_num],y③、在使用fit_generator的时候传入自定义的leaf_generator

3、搭建CNN网络

可以参考上面链接里的代码,但是个人感觉这个网络其实是有一定的问题的,以下是我对这个网络结构的一些见解,如有错误,希望各位看官指正。

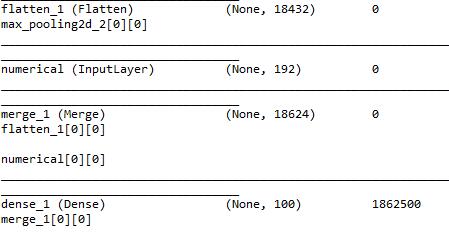

首先我们来看一下网络的summary:

可以看到,进入全连接层之后,图像提取的特征值个数是18432,而预处理的特征值个数是192,这将会导致最终预处理数据所占的权重过低,甚至是几乎不起作用,也就失去了这个思路的意义,因此,可以对图像特征添加一个dense层来降维,使两种特征的权重均衡,或者对预处理特征用dense层进行维度提升。这里因为不想增加网络复杂度(主要是电脑配置太弱),采用的是降维的方案。

def combined_model():

image = Input(shape=(96, 96, 1), name='image')

x = Conv2D(8,kernel_size =(5,5),strides =(1,1),border_mode='same')(image)

x = (Activation('relu'))(x)

x = (MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))(x)

x = Conv2D(32,kernel_size =(5,5),strides =(1,1),border_mode='same')(x)

x = (Activation('relu'))(x)

x = (MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))(x)

x = Flatten()(x)

x = Dense(192,activation='relu')(x)

numerical = Input(shape=(192,), name='numerical')

concatenated = merge([x, numerical], mode='concat')

x = Dense(100, activation='relu')(concatenated)

x = Dropout(.5)(x)

out = Dense(99, activation='softmax')(x)

model = Model(input=[image, numerical], output=out)

model.compile(loss='categorical_crossentropy', optimizer='rmsprop', metrics=['accuracy'])

return model

4、训练网络

三、总结

对于这个网络结构其实可以有很多的改进,例如把它设计成真正的联合模型(针对这个题目其实没必要),增加网络深度等等。作为初探Kaggle的题目,重心放在整体设计流程上也就可以了。麻雀虽小,五脏俱全,整个流程下来涉及到了DataAugmentation,简易Model Ensemble,也熟悉了简易CNN的搭建,也算是有所收获。