requests简单爬取安居网租房信息

对pyquery不是很熟,所以是练习一下用pyquery来筛选数据,

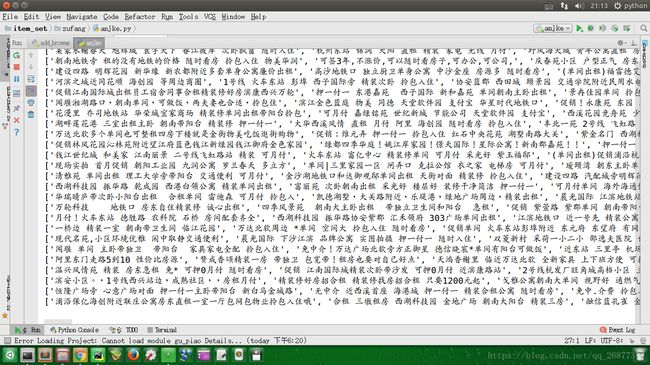

data文件里面是2000个浏览器请求头,每次发生数据前随机获取一个,筛选的数据只能获取50页,3000条,将条件设的更详细点

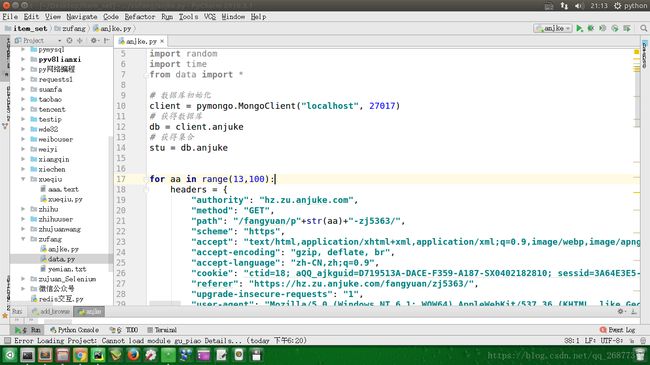

简要代码如下

import requests

import reimport pymongo

from pyquery import PyQuery as pq

import random

import time

from data import *

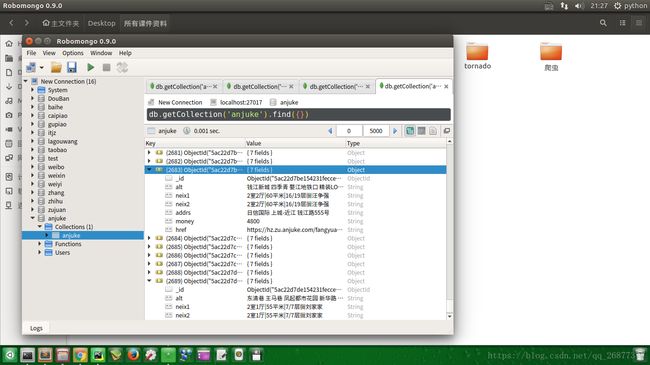

# 数据库初始化

client = pymongo.MongoClient("localhost", 27017)

# 获得数据库

db = client.anjuke

# 获得集合

stu = db.anjuke

for aa in range(1,51):

use = random.choice(data)

print(use)

headers = {

"authority": "hz.zu.anjuke.com",

"method": "GET",

"path": "/fangyuan/p"+str(aa)+"-zj5363/",

"scheme": "https",

"accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",

"accept-encoding": "gzip, deflate, br",

"accept-language": "zh-CN,zh;q=0.9",

"cookie": "ctid=18; aQQ_ajkguid=D719513A-DACE-F359-A187-SX0402182810; sessid=3A64E3E5-D039-2F62-BB8D-SX0402182810; isp=true; 58tj_uuid=0c0e03d9-e5ee-49b1-9d27-002654ea02c9; init_refer=https%253A%252F%252Fwww.baidu.com%252Fs%253Fwd%253D%2525E4%2525B9%2525B0%2525E6%252588%2525BF%2525E5%2525AD%252590%252520%2525E6%25259D%2525AD%2525E5%2525B7%25259E%2526rsv_spt%253D1%2526rsv_iqid%253D0xed7ddfbf0000455f%2526issp%253D1%2526f%253D8%2526rsv_bp%253D1%2526rsv_idx%253D2%2526ie%253Dutf-8%2526rqlang%253Dcn%2526tn%253Dbaiduhome_pg%2526rsv_enter%253D1%2526oq%253D%252525E4%252525B9%252525B0%252525E6%25252588%252525BF%252525E5%252525AD%25252590%2526inputT%253D1441%2526rsv_t%253D5367sDw2PA08DaWX5MkC7jUrq%25252Fmrvqfh3pDPDL766txMEmGYi4pF0zedxm0WQDL%25252BCUDT%2526rsv_sug3%253D18%2526rsv_sug1%253D7%2526rsv_sug7%253D100%2526rsv_pq%253Dcf15b90a0000294a%2526rsv_sug2%253D0%2526rsv_sug4%253D1877%2526rsv_sug%253D1; new_uv=1; als=0; Hm_lvt_c5899c8768ebee272710c9c5f365a6d8=1522664915; Hm_lpvt_c5899c8768ebee272710c9c5f365a6d8=1522664915; lps=http%3A%2F%2Fhz.zu.anjuke.com%2F%7Chttps%3A%2F%2Fhz.fang.anjuke.com%2Floupan%2F%3Fpi%3Dbaidu-cpcaf-hz-tyong2%26utm_source%3Dbaidu%26kwid%3D3373162370%26utm_term%3Dcpc%26kwid%3D3373162370%26utm_term%3D%25e6%259d%25ad%25e5%25b7%259e%25e4%25bd%258f%25e5%25ae%2585; twe=2; new_session=0; __xsptplusUT_8=1; __xsptplus8=8.1.1522665139.1522665189.2%234%7C%7C%7C%7C%7C%23%23T2SdMJR-NzVKGF0pz_PFreiOVx4_D7p2%23",

"referer": "https://hz.zu.anjuke.com/fangyuan/zj5363/",

"upgrade-insecure-requests": "1",

"user-agent": use

}

url = 'https://hz.zu.anjuke.com/fangyuan/p'+str(aa)+'-zj5363/'

response = requests.get(url=url, headers=headers)

# print(response.text)

# 设置数据筛选

# with open('yemian.txt','r') as f:

# data = f.read()

# print(data)

doc = pq (response.text)

# 获得房间地址 href

addr = doc('.zu-itemmod .img').items()

# 获得房间描述 alt

alt = doc('.zu-itemmod .img').items()

#获得房间类型

neix = doc('.zu-info p').items()

addrs = doc('.zu-info address').items()

money = doc('.zu-side strong').items()

dict_={}

li1 = []

li2 = []

li3 = []

li4 = []

li5 = []

li6 = []

for i in addr:

# print(i.attr('href'))

li1.append(i.attr('href'))

for i in alt:

li2.append(i.attr('alt'))

print(li2)

num = 0

for i in neix:

if num%2 == 0:

# 这个0是获取房间楼层信息 1是整租

li3.append(i.text())

else:

li4.append(i.text())

num += 1

for i in addrs:

li5.append(i.text())

for i in money:

li6.append(i.text())

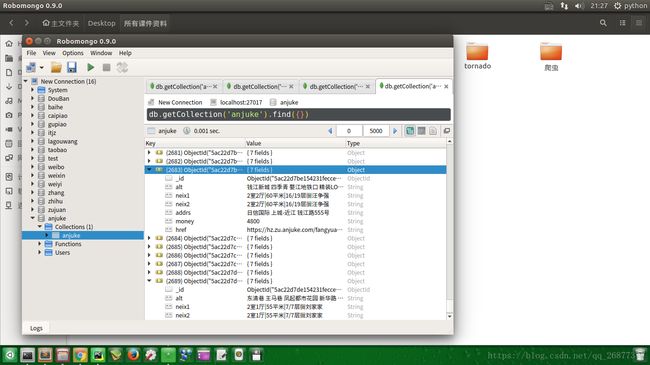

for i in range(60):

dict_['href'] = li1[i]

# print(li1)

dict_['alt'] = li2[i]

dict_['neix1'] = li3[i]

dict_['neix2'] = li3[i]

dict_['addrs'] = li5[i]

dict_['money'] = li6[i]

stu.update({'href': dict_['href']}, dict(dict_), True)

dict_ = {}

print(aa)