kaggle--House Prices

房价预测是kaggle网站上一个较初级的入门竞赛,涉及的变量共79组,包括数值特征组和类别特征。数据可在kaggle官网下载 。

一 导入数据

下载的数据集共包括train和test两部分,其中train部分用来训练数据集,test部分用来计算提交结果。

导入数据集

import pandas as pd

train=pd.read_csv(r'C:\Users\Administrator\Downloads\train.csv')

test=pd.read_csv(r'C:\Users\Administrator\Downloads\test.csv')

二 数据预处理

这部分主要包括缺失值处理,重复值处理,和特征处理等。

1 缺失值处理

首先总体查看缺失列,可以看到缺失列一共有34列,我们需要对其进行缺失值补充。

cols=[col for col in alldata.columns if alldata[col].isnull().any()]

len(cols)

输出:34

在进行缺失值补充之前,先去掉缺失值超过1/4的列。找出缺失值过多的列。

cols_to_use=[col for col in cols if (alldata[col].isnull().sum()/len(alldata))<0.25]

cols_drop=[col for col in cols if col not in cols_to_use]

print(cols_drop)

输出缺失列: [‘Alley’, ‘FireplaceQu’, ‘PoolQC’, ‘Fence’, ‘MiscFeature’]

去掉缺失列。

alldata=alldata.drop(cols_drop,axis=1)

在进行缺失值补充之前,先将特征进行分类。将数值特征采用中位数进行补充,类别特征采用众数进行补充。

#找出数值特征和类别特征

numerical_cols_missing=[col for col in cols_to_use if alldata[col].dtype!='object']

object_cols_missing=[col for col in cols_to_use if alldata[col].dtype=='object']

#进行缺失值处理

for col in object_cols_missing:

alldata[col]=alldata[col].fillna(alldata[col].dropna().mode()[0]) #填充去掉NAN值的众数

for col in numerical_cols_missing:

alldata[col]=alldata[col].fillna(alldata[col].median()) #对数值列填充中位数

2 重复值处理

查看重复值

alldata[alldata.duplicated()]

3 异常值判断

判断异常值的方法有很多,可以通过简单的统计方法,3δ原则,箱型图分布等。

略过。

4 特征工程

这部分对数据集的特征进行处理。

a 将有顺序的类别特征进行label encoder处理

#首先把这部分特征转化为类别型特征

alldata['MSSubClass'] = alldata['MSSubClass'].apply(str)

alldata['LandContour'] = alldata['LandContour'].apply(str)

cols_classify=['MSSubClass','LandContour']

#对类别特征进行label encoder处理

from sklearn.preprocessing import LabelEncoder

for col in cols_classify:

le=LabelEncoder()

le.fit(list(alldata[col].values))

alldata[col]=le.transform(list(alldata[col].values))

#这部分特征量相同

ordinalList = ['ExterQual', 'ExterCond', 'GarageQual', 'GarageCond',\

'KitchenQual', 'HeatingQC', 'BsmtQual','BsmtCond']

ordinalmap = {'Ex': 4,'Gd': 3,'TA': 2,'Fa': 1,'Po': 0}

for c in ordinalList:

alldata[c] = alldata[c].map(ordinalmap)

b 对时间特征进行处理

共有两个时间特征,yearbuilt和yearremodadd

#yearbuilt

alldata['YearBulit']=alldata['YearBuilt'].map(lambda x:(2019-x))

#YearRemodAdd

alldata['YearRemodAdd']=alldata['YearRemodAdd'].map(lambda x:(2019-x))

c 对数值型特征进行处理

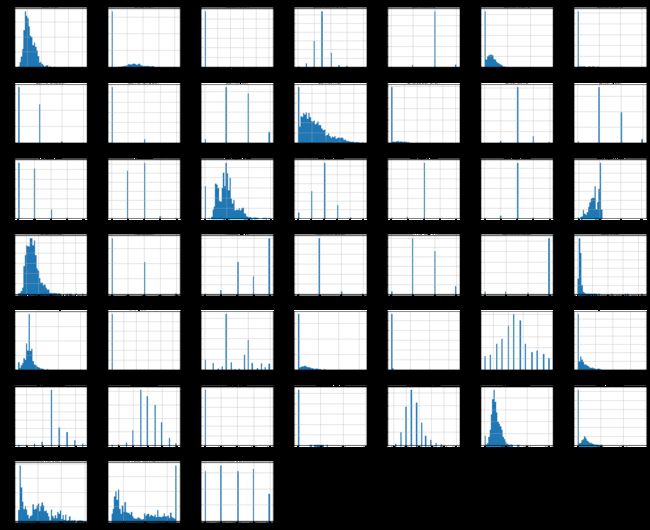

#画出属性柱状图

%matplotlib inline

import matplotlib.pyplot as plt

alldata.hist(bins=50, figsize=(30,25))

plt.show()

#找出数值列

numerical_cols=[col for col in alldata.columns if alldata[col].dtype!='object']

from scipy import stats

from scipy.stats import norm, skew #for some statistics

import numpy as np

#找出偏度大于0.75的数值列

cols_trans=[col for col in numerical_cols if abs(skew(alldata[col]))>0.75]

#进行boxcox1p转换

from scipy.special import boxcox1p

lam=0.15

for col in cols_trans:

alldata[col]=boxcox1p(alldata[col], lam)

#存在异常值,采用rousterscaler进行缩放

from sklearn import preprocessing

robusterscaler=preprocessing.RobustScaler(with_centering=True, with_scaling=True, quantile_range=(25.0,75.0), copy=True)

alldata[numerical_cols]=pd.DataFrame(robusterscaler.fit_transform(alldata[numerical_cols]),columns=numerical_cols)

#对saleprice进行处理

train['SalePrice'] = np.log1p(train['SalePrice'])

d 对无顺序的的类别特征采用one-hot-code处理

alldata=pd.get_dummies(alldata)

至此,所有的特征处理已经完成。

train_data=alldata[:len(train)]

test_data=alldata[len(train):]

三 模型构建

1.分别采用多模型进行预测,并找出最佳参数

岭回归:

from sklearn import linear_model

#5折交叉验证

def crossvalscore(model):

score=np.sqrt(-cross_val_score(model, train_data, train['SalePrice'],\

scoring="neg_mean_squared_error",cv=5))

return score

alphas=[0.01, 0.02, 0.05, 0.1, 0.2, 0.5, 1, 2, 5, 10, 20, 50, 100, 200, 500, 1000]

results=[]

for alpha in alphas:

ridge=linear_model.Ridge(alpha=alpha, random_state=1)

score=crossvalscore(ridge)

results.append((score.mean(),alpha))

print([score.mean(),alpha])

print(min(results,key=lambda x: x[0]))

找到最佳alpha为10

clf1=linear_model.Ridge(alpha=10,random_state=1)

同理:

clf2=linear_model.Lasso(alpha=0.001, random_state=1)

clf3=linear_model.ElasticNet(alpha=0.001, l1_ratio=0.5, random_state=1)

clf4=linear_model.BayesianRidge(alpha_1=1e-08, alpha_2=1)

2 ensemble

采用随机森林进行预测,由于参数较多,不采用五折交叉验证:

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

sample_leaf_options=list(range(1,500,3))

n_estimators_options=list(range(1,1000,5))

results=[]

for leaf_size in sample_leaf_options:

for n_estimators_size in n_estimators_options:

train_x,test_x,train_y,test_y=train_test_split(train_data,train['SalePrice'],random_state=0)

model=RandomForestRegressor(min_samples_leaf=leaf_size,n_estimators=n_estimators_size, random_state=50)

model.fit(train_x,train_y.astype('int'))

predict=model.predict(test_x)

mea=mean_absolute_error(predict, test_y.astype('int'))

results.append((leaf_size, n_estimators_size,mea))

print(mea)

print(min(results, key=lambda x: x[2]))

输出结果为:

(4, 31, 0.06301369863013699)

Clf5=RandomForestRegressor(min_samples_leaf=4,n_estimators=31, random_state=50)

3 stacking

from sklearn.linear_model import LogisticRegression

from mlxtend.regressor import StackingRegressor

from sklearn.svm import SVR

from sklearn import model_selection

clf1 = linear_model.Ridge(alpha=10, random_state=1)

clf2 = linear_model.Lasso(alpha=0.01, random_state=1)

clf3 = linear_model.ElasticNet(alpha=0.001,l1_ratio=0.5, random_state=1)

clf4=linear_model.BayesianRidge(alpha_1=1e-08,alpha_2=1,compute_score=False,

copy_X=True, fit_intercept=True, lambda_1=1e-06, lambda_2=1e-06,

n_iter=300, normalize=False, tol=0.001, verbose=False)

#clf5加入未提高预测精度,去掉

svr_rbf = SVR(kernel='rbf')

#融合四个模型

sclf = StackingRegressor(regressors=[clf1, clf2, clf3,clf4], meta_regressor=svr_rbf)

print('5-fold cross validation:\n')

for clf, label in zip([clf1, clf2, clf3, clf4,sclf],['ridge','lasso','elasticNet','bayesianRidge','StackingRegressor']):

scores=crossvalscore(clf)

print(scores.mean(), scores.std(), label)

输出:

5-fold cross validation:

0.1267584383535164 0.017268287633750996 ridge

0.14191612266114356 0.014271532819083171 lasso

0.12383172541955076 0.0169372826501538 elasticNet

0.12687861740560474 0.017271467133941756 bayesianRidge

0.1207349226516489 0.01170334625859647 StackingRegressor

对test集进行预测,并上传。