Android Custom Camera: Autofocus and Spot Focus

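While fixing a problem in our project where the camera could not autofocus on certain device models, I found some useful material online. I'm writing down how I solved it for future reference.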

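- A Perfect Implementation of Real-Time Autofocus for the Android Camera
- The Android Image Filter Framework GPUImage: From Configuration to Use
- GPUImage for Android
- Android Camera Focusing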

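Accelerometer controller

When the device moves and then stays still for DELAY_DURATION (500 ms), the controller decides that the camera needs to refocus and calls the onFocus() method of the CameraFocusListener interface.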
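The core rule can be tried out without a device. Below is a minimal, Android-free sketch of the same "move, then hold still" logic. The class name MotionFocusDetector and its onSample() method are hypothetical; unlike SensorController, it keeps the raw float readings instead of truncating them to int, and it leaves out the focus-lock handling.

```java
/**
 * Hypothetical, Android-free sketch of the "moved, then held still" rule.
 * Feed it accelerometer samples (m/s^2) with a timestamp in milliseconds.
 */
final class MotionFocusDetector {
    private final double moveThreshold; // like moveIs (1.4) in SensorController
    private final long stillDelayMs;    // like DELAY_DURATION (500 ms)

    private boolean hasPrevious = false; // false until the first sample arrives
    private boolean moving = false;      // was the previous sample classified as movement?
    private boolean armed = false;       // a focus is pending once the device settles
    private long stillSince = 0;         // time the device stopped moving
    private float lastX, lastY, lastZ;

    MotionFocusDetector(double moveThreshold, long stillDelayMs) {
        this.moveThreshold = moveThreshold;
        this.stillDelayMs = stillDelayMs;
    }

    /** Returns true exactly once per "moved, then stayed still long enough" episode. */
    boolean onSample(float x, float y, float z, long timestampMs) {
        boolean fire = false;
        if (hasPrevious) {
            double dx = x - lastX, dy = y - lastY, dz = z - lastZ;
            // Magnitude of the change since the previous sample
            double delta = Math.sqrt(dx * dx + dy * dy + dz * dz);
            if (delta > moveThreshold) {
                moving = true;
            } else {
                if (moving) {            // first still sample after movement: start the timer
                    stillSince = timestampMs;
                    armed = true;
                }
                if (armed && timestampMs - stillSince > stillDelayMs) {
                    armed = false;       // do not fire again until the next movement
                    fire = true;
                }
                moving = false;
            }
        }
        hasPrevious = true;
        lastX = x;
        lastY = y;
        lastZ = z;
        return fire;
    }
}
```

On a device you could feed it from onSensorChanged() with event.values[0..2] and a millisecond clock such as SystemClock.elapsedRealtime(), and start a focus whenever onSample() returns true.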

import android.content.Context;
import android.hardware.Sensor;
import android.hardware.SensorEvent;
import android.hardware.SensorEventListener;
import android.hardware.SensorManager;

/**
 * Accelerometer controller used to decide when to focus.
 *
 * @author zuo
 * @date 2018/5/9 14:34
 */
public class SensorController implements SensorEventListener {

    private SensorManager mSensorManager;
    private Sensor mSensor;
    private static SensorController mInstance;
    private CameraFocusListener mCameraFocusListener;

    public static final int STATUS_NONE = 0;
    public static final int STATUS_STATIC = 1;
    public static final int STATUS_MOVE = 2;

    private int mX, mY, mZ;
    private int STATUE = STATUS_NONE;

    boolean canFocus = false;
    boolean canFocusIn = false;
    boolean isFocusing = false;

    // Change in acceleration (m/s^2) above which the device counts as moving
    private final double moveIs = 1.4;
    private long lastStaticStamp = 0;
    // Time (ms) the device must stay still after moving before we focus
    public static final int DELAY_DURATION = 500;

    private SensorController(Context context) {
        // Use the application context so the singleton does not leak an Activity
        mSensorManager = (SensorManager) context.getApplicationContext()
                .getSystemService(Context.SENSOR_SERVICE);
        if (mSensorManager != null) {
            mSensor = mSensorManager.getDefaultSensor(Sensor.TYPE_ACCELEROMETER);
        }
        start();
    }

    public static synchronized SensorController getInstance(Context context) {
        if (mInstance == null) {
            mInstance = new SensorController(context);
        }
        return mInstance;
    }

    public void setCameraFocusListener(CameraFocusListener mCameraFocusListener) {
        this.mCameraFocusListener = mCameraFocusListener;
    }

    public void start() {
        restParams();
        canFocus = true;
        // Some devices have no accelerometer
        if (mSensorManager != null && mSensor != null) {
            mSensorManager.registerListener(this, mSensor, SensorManager.SENSOR_DELAY_NORMAL);
        }
    }

    public void stop() {
        if (mSensorManager != null && mSensor != null) {
            mSensorManager.unregisterListener(this, mSensor);
        }
        canFocus = false;
    }

    @Override
    public void onSensorChanged(SensorEvent event) {
        if (event.sensor == null) {
            return;
        }
        // While a focus is in progress, restart motion detection from scratch
        if (isFocusing) {
            restParams();
            return;
        }
        if (event.sensor.getType() == Sensor.TYPE_ACCELEROMETER) {
            int x = (int) event.values[0];
            int y = (int) event.values[1];
            int z = (int) event.values[2];
            long stamp = System.currentTimeMillis();
            if (STATUE != STATUS_NONE) {
                int px = Math.abs(mX - x);
                int py = Math.abs(mY - y);
                int pz = Math.abs(mZ - z);
                // Magnitude of the change since the previous sample
                double value = Math.sqrt(px * px + py * py + pz * pz);
                if (value > moveIs) {
                    STATUE = STATUS_MOVE;
                } else {
                    // First still sample after moving: start the timer
                    if (STATUE == STATUS_MOVE) {
                        lastStaticStamp = stamp;
                        canFocusIn = true;
                    }
                    // Still for long enough after moving: a focus may happen
                    if (canFocusIn && stamp - lastStaticStamp > DELAY_DURATION) {
                        canFocusIn = false;
                        if (mCameraFocusListener != null) {
                            mCameraFocusListener.onFocus();
                        }
                    }
                    STATUE = STATUS_STATIC;
                }
            } else {
                lastStaticStamp = stamp;
                STATUE = STATUS_STATIC;
            }
            mX = x;
            mY = y;
            mZ = z;
        }
    }

    @Override
    public void onAccuracyChanged(Sensor sensor, int accuracy) {
    }

    // Resets the motion-detection state
    private void restParams() {
        STATUE = STATUS_NONE;
        canFocusIn = false;
        mX = 0;
        mY = 0;
        mZ = 0;
    }

    /**
     * Whether focus is locked.
     *
     * @return true while listening and a focus is in progress
     */
    public boolean isFocusLocked() {
        return canFocus && isFocusing;
    }

    /** Locks focus. */
    public void lockFocus() {
        isFocusing = true;
    }

    /** Unlocks focus. */
    public void unlockFocus() {
        isFocusing = false;
    }

    /** Resets the focus state (same effect as unlockFocus()). */
    public void restFocus() {
        isFocusing = false;
    }

    public interface CameraFocusListener {
        /** Called when the camera should focus. */
        void onFocus();
    }
}

Custom camera

1. Register the accelerometer listener when initializing the camera

public Camera1(Activity activity, Callback callback, PreviewImpl preview) {
    super(callback, preview);
    this.mActivity = activity;
    preview.setCallback(new PreviewImpl.Callback() {
        @Override
        public void onSurfaceChanged() {
            if (mCamera != null) {
                setUpPreview();
                adjustCameraParameters();
            }
        }
    });
    sensorController = SensorController.getInstance(mActivity);
    sensorController.setCameraFocusListener(new SensorController.CameraFocusListener() {
        @Override
        public void onFocus() {
            if (mCamera != null) {
                DisplayMetrics mDisplayMetrics = mActivity.getApplicationContext().getResources()
                        .getDisplayMetrics();
                // Focus on the center of the screen
                int centerX = mDisplayMetrics.widthPixels / 2;
                int centerY = mDisplayMetrics.heightPixels / 2;
                if (!sensorController.isFocusLocked()) {
                    if (newFocus(centerX, centerY)) {
                        sensorController.lockFocus();
                    }
                }
            }
        }
    });
}

// Start listening to the accelerometer (needed again when the camera restarts after stop())
sensorController.start();

2. Autofocus code

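private boolean isFocusing;

private boolean newFocus(int x, int y) {
    // Return if there is no camera or a focus is already in progress
    if (mCamera == null || isFocusing) {
        return false;
    }
    isFocusing = true;
    setMeteringRect(x, y);
    mCameraParameters.setFocusMode(Camera.Parameters.FOCUS_MODE_AUTO);
    // First cancel any autofocus operation that is still running
    mCamera.cancelAutoFocus();
    try {
        mCamera.setParameters(mCameraParameters);
        mCamera.autoFocus(autoFocusCallback);
    } catch (Exception e) {
        e.printStackTrace();
        // Reset the flag, otherwise no further focus attempt could ever start
        isFocusing = false;
        return false;
    }
    return true;
}

Set the metering area: map the rectangle around the tapped point on the screen into the camera's metering coordinate system.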

/**
 * Sets the metering area (and, if supported, the focus area).
 * The screen coordinates must be mapped into the grid used by the camera's Rect.
 *
 * @param x x coordinate of the point on the screen
 * @param y y coordinate of the point on the screen
 */
private void setMeteringRect(int x, int y) {
    Rect rect = new Rect(x - 100, y - 100, x + 100, y + 100);
    int left = rect.left * 2000 / CameraUtil.screenWidth - 1000;
    int top = rect.top * 2000 / CameraUtil.screenHeight - 1000;
    int right = rect.right * 2000 / CameraUtil.screenWidth - 1000;
    int bottom = rect.bottom * 2000 / CameraUtil.screenHeight - 1000;
    // Values outside (-1000, -1000) to (1000, 1000) make the camera crash, so clamp them
    left = Math.max(left, -1000);
    top = Math.max(top, -1000);
    right = Math.min(right, 1000);
    bottom = Math.min(bottom, 1000);
    // There is only one area, so simply give it the maximum weight of 1000
    List<Camera.Area> areas = new ArrayList<>();
    areas.add(new Camera.Area(new Rect(left, top, right, bottom), 1000));
    if (mCameraParameters.getMaxNumMeteringAreas() > 0) {
        mCameraParameters.setMeteringAreas(areas);
    }
    // Metering areas drive exposure; focus areas make autofocus use the same point
    if (mCameraParameters.getMaxNumFocusAreas() > 0) {
        mCameraParameters.setFocusAreas(areas);
    }
}

3. Autofocus callback

private Handler mHandler = new Handler(Looper.getMainLooper());

private final Camera.AutoFocusCallback autoFocusCallback = new Camera.AutoFocusCallback() {
    @Override
    public void onAutoFocus(boolean success, Camera camera) {
        mHandler.postDelayed(new Runnable() {
            @Override
            public void run() {
                // Focusing is allowed again only one second later
                isFocusing = false;
                sensorController.unlockFocus();
            }
        }, 1000);
    }
};

4. Stop the listener

/**
 * Closes the camera and stops the accelerometer listener.
 */
@Override
public void stop(boolean stopAll) {
    sensorController.stop();
    stopPreview();
    releaseCamera();
}

Metering and focus areas

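In some shooting scenarios, automatic focusing and metering may not produce the desired results. Starting with Android 4.0 (API level 14), your camera application can provide additional controls that let the app or the user specify areas of the image to use for focus or light-level settings, and pass these values to the camera hardware for capturing pictures or video.

Metering and focus areas work very much like other camera features: you control them through methods of the Camera.Parameters object.

1. Setting two metering areas on the camera

A Camera.Area object contains two parameters:

- A Rect object, which specifies a rectangular area within the camera preview (the metering area)
- A weight, which tells the camera how important this area should be in metering or focus calculations; areas with a higher weight take priority, and the maximum weight is 1000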
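To make these two parameters concrete, here is a small plain-Java sketch (no Android dependency) that checks an area before it would be handed to the camera. AreaSpec is a hypothetical stand-in for Camera.Area; the -1000..1000 coordinate range and the maximum weight of 1000 come from this article, while requiring a weight of at least 1 and a non-empty rectangle are assumptions based on my reading of the Camera.Area documentation.

```java
/**
 * Hypothetical plain-Java stand-in for android.hardware.Camera.Area,
 * used only to check an area's constraints before passing it to the camera.
 */
final class AreaSpec {
    static final int MIN_COORD = -1000; // top-left corner of the camera grid
    static final int MAX_COORD = 1000;  // bottom-right corner of the camera grid
    static final int MAX_WEIGHT = 1000;

    final int left, top, right, bottom;
    final int weight;

    AreaSpec(int left, int top, int right, int bottom, int weight) {
        this.left = left;
        this.top = top;
        this.right = right;
        this.bottom = bottom;
        this.weight = weight;
    }

    /** True if the rectangle lies inside the grid, is non-empty and has a weight in 1..1000. */
    boolean isValid() {
        boolean inGrid = left >= MIN_COORD && top >= MIN_COORD
                && right <= MAX_COORD && bottom <= MAX_COORD;
        boolean nonEmpty = left < right && top < bottom;
        boolean weightOk = weight >= 1 && weight <= MAX_WEIGHT;
        return inGrid && nonEmpty && weightOk;
    }

    /** True only if every area passed in is valid. */
    static boolean allValid(AreaSpec... areas) {
        for (AreaSpec a : areas) {
            if (!a.isValid()) {
                return false;
            }
        }
        return true;
    }
}
```

Both areas in the Android example below (the center area with weight 600 and the upper-right area with weight 400) pass this check.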

//获取相机实例

Camera.Parameters params = mCamera.getParameters();

//检查是否支持测光区域

if (params.getMaxNumMeteringAreas() > 0){

List<Camera.Area> meteringAreas = new ArrayList<Camera.Area>();

//在图像的中心指定一个测光区域

Rect areaRect1 = new Rect(-100, -100, 100, 100);

//设置权重为600,最高1000

meteringAreas.add(new Camera.Area(areaRect1, 600));

//图像右上方的测光区域

Rect areaRect2 = new Rect(800, -1000, 1000, -800);

//设置权重为400

meteringAreas.add(new Camera.Area(areaRect2, 400));

//将测光区域设置给相机属性

params.setMeteringAreas(meteringAreas);

}

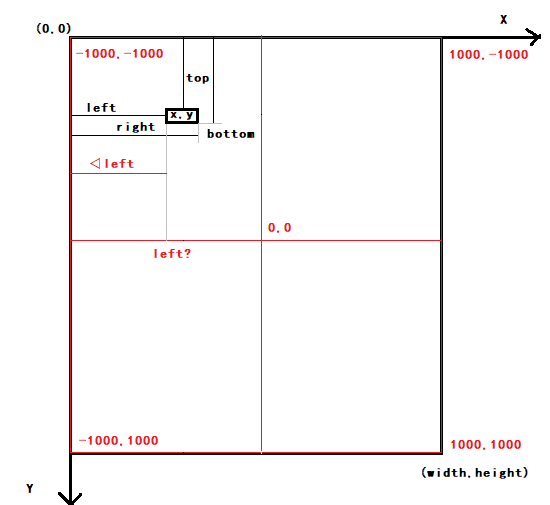

mCamera.setParameters(params);Rect对象,代表了一个2000x2000的单元格矩形,它的坐标对应Camera图像的位置关系可以参考下图,坐标(-1000,-1000)代表Camera图像的左上角,(1000,1000)代表Camera图像的右下角。

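2. Calculating the metering area

In the setMeteringRect() method above, why does mapping from the phone's screen coordinates to the camera's metering grid require this calculation?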

Rect rect = new Rect(x - 100, y - 100, x + 100, y + 100);
int left = rect.left * 2000 / CameraUtil.screenWidth - 1000;
int top = rect.top * 2000 / CameraUtil.screenHeight - 1000;
int right = rect.right * 2000 / CameraUtil.screenWidth - 1000;
int bottom = rect.bottom * 2000 / CameraUtil.screenHeight - 1000;

- First, line up the phone's screen coordinate system with the camera's metering grid

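In the figure, I drew the phone's screen coordinate system in black and the camera's metering grid in red. The camera's grid is 2000x2000 units with (0, 0) at the center; (-1000, -1000) is the top-left corner of the camera image and (1000, 1000) is the bottom-right corner.

Now suppose we tap the point (x, y) on the screen and take the rectangle extending 100 pixels above, below, left and right of it as the metering (focus) area to map into the camera grid. That is the line Rect rect = new Rect(x - 100, y - 100, x + 100, y + 100); in the code above. Now for the calculation.

- Calculation: mapping the rectangle from screen coordinates to the camera grid

The Rect constructor is Rect(int left, int top, int right, int bottom). Below, rect.left, rect.top, rect.right and rect.bottom denote the rectangle's values in screen coordinates; Δleft, Δtop, Δright and Δbottom denote the corresponding distances (lengths) in the camera grid, measured from the grid's top-left corner (-1000, -1000); and left, top, right and bottom denote the rectangle's coordinates in the camera grid.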

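1. Set up the equations. A distance takes up the same fraction of the full length in both coordinate systems (the screen is width pixels wide, the camera grid is 2000 units wide):

$$\frac{\text{rect.left}}{\text{width}} = \frac{\Delta\text{left}}{2000}$$

Because the grid runs from -1000 to 1000, subtracting 1000 from the distance turns it into a coordinate, whichever quadrant the point lies in:

$$\text{left} = \Delta\text{left} - 1000$$

Solving the first equation for Δleft gives

$$\Delta\text{left} = \frac{\text{rect.left} \times 2000}{\text{width}}$$

and substituting into the second:

$$\text{left} = \Delta\text{left} - 1000 = \frac{\text{rect.left} \times 2000}{\text{width}} - 1000$$

In other words, the rectangle's left value in the camera grid is rect.left * 2000 / width - 1000, where width is the screen width. That is exactly the line int left = rect.left * 2000 / CameraUtil.screenWidth - 1000; in the code above (in Java's integer arithmetic the division truncates).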

Similarly (using the screen height for top and bottom):

int top = rect.top * 2000 / CameraUtil.screenHeight - 1000;
int right = rect.right * 2000 / CameraUtil.screenWidth - 1000;
int bottom = rect.bottom * 2000 / CameraUtil.screenHeight - 1000;

That's all there is to calculating the metering area!
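Putting the derivation together, here is a plain-Java sketch of the whole mapping that can be tested without a device. TapAreaMapper and its methods are hypothetical names; it uses the same integer arithmetic as setMeteringRect() and clamps every edge to -1000..1000. Like the code above, it assumes the preview fills the screen and is oriented the same way as the camera image; it does not handle sensor rotation or front-camera mirroring.

```java
/** Hypothetical helper: maps a square tap region on screen into the camera's -1000..1000 grid. */
final class TapAreaMapper {
    private TapAreaMapper() {
    }

    /** Maps one screen coordinate (0..screenSize) into the grid, then clamps it. */
    static int toCamera(int screenCoord, int screenSize) {
        if (screenSize <= 0) {
            throw new IllegalArgumentException("screenSize must be positive");
        }
        // Same formula as the derivation; Java integer division truncates toward zero
        int mapped = screenCoord * 2000 / screenSize - 1000;
        return Math.max(-1000, Math.min(1000, mapped));
    }

    /** Returns {left, top, right, bottom} for a square of +/- half pixels around (x, y). */
    static int[] toCameraRect(int x, int y, int half, int screenWidth, int screenHeight) {
        return new int[] {
                toCamera(x - half, screenWidth),
                toCamera(y - half, screenHeight),
                toCamera(x + half, screenWidth),
                toCamera(y + half, screenHeight)
        };
    }
}
```

For a 1080x1920 screen, a tap at the center (540, 960) with half = 100 maps to (-186, -105, 185, 104); the slight asymmetry comes from integer truncation. A tap at the top-left corner (0, 0) is clamped to (-1000, -1000, -815, -896).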