[Big Data] ETL Tool Sqoop: Installation and Basic Usage on Linux

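Installing and Deploying Sqoop

Installing and configuring Sqoop is straightforward. It needs a Linux host with a working Hadoop environment, and the steps below cover a Linux system. Everything is installed by hand from release tarballs rather than through a package manager.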

System Requirements

Linux

JDK (1.8)

Hadoop (2.7.3 is used here)
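
Before installing, it can help to confirm that the prerequisites are actually on the PATH. The following is a minimal sketch; `missing_cmds` is a hypothetical helper name, and it only checks that the commands exist, not which versions they are.

```shell
#!/bin/sh
# Print each of the given commands that cannot be found on PATH.
# missing_cmds is a hypothetical helper name, not part of Sqoop or Hadoop.
missing_cmds() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
    done
}

# Example: report which prerequisites are absent.
# missing_cmds java hadoop
```

If `missing_cmds java hadoop` prints nothing, both commands were found; `java -version` and `hadoop version` then show whether the installed versions match the ones listed above.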

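Installing Sqoop

1. Download

http://www-eu.apache.org/dist/sqoop/1.4.6/

Extract:

tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz

Rename (run this from /usr/local so that the result matches the SQOOP_HOME set in step 2):

mv sqoop-1.4.6.bin__hadoop-2.0.4-alpha/ sqoop1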
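
The extract and rename steps can be wrapped in a small function so they fail cleanly when the tarball is missing or the target already exists. This is only a sketch: `install_sqoop` is a hypothetical name, and it assumes the tarball unpacks into a directory named after the file without its `.tar.gz` suffix.

```shell
#!/bin/sh
# install_sqoop TARBALL PREFIX
# Extracts TARBALL under PREFIX and renames the unpacked directory to sqoop1.
# Hypothetical helper; assumes the tarball unpacks into a directory named
# after the file (minus .tar.gz).
install_sqoop() {
    tarball=$1
    prefix=$2
    dir=$(basename "$tarball" .tar.gz)

    [ -f "$tarball" ] || { echo "no such file: $tarball" >&2; return 1; }
    [ ! -e "$prefix/sqoop1" ] || { echo "$prefix/sqoop1 already exists" >&2; return 1; }

    # Unpack into PREFIX, then give the directory its short name.
    tar -zxf "$tarball" -C "$prefix" && mv "$prefix/$dir" "$prefix/sqoop1"
}
```

For the layout used in this article the call would be `install_sqoop sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz /usr/local`.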

2. Edit the configuration

vim /etc/profile

Add two lines:

export SQOOP_HOME=/usr/local/sqoop1

export PATH=$PATH:$SQOOP_HOME/bin

Apply the change:

source /etc/profile
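
If the profile is edited from a script instead of by hand, the append should be idempotent so that repeated runs do not duplicate the entries. A minimal sketch, assuming a hypothetical helper named `add_sqoop_env`:

```shell
#!/bin/sh
# add_sqoop_env PROFILE SQOOP_HOME_DIR
# Appends the two export lines to PROFILE unless SQOOP_HOME is already set
# there, so running it twice does not duplicate entries.
# Hypothetical helper; the article edits /etc/profile by hand instead.
add_sqoop_env() {
    profile=$1
    home=$2
    if grep -q '^export SQOOP_HOME=' "$profile" 2>/dev/null; then
        return 0
    fi
    {
        printf 'export SQOOP_HOME=%s\n' "$home"
        # Single quotes keep $PATH and $SQOOP_HOME unexpanded in the file.
        printf '%s\n' 'export PATH=$PATH:$SQOOP_HOME/bin'
    } >> "$profile"
}
```

Run as root, `add_sqoop_env /etc/profile /usr/local/sqoop1` followed by `source /etc/profile` should make the `sqoop` command available; `sqoop version` is a quick way to confirm it.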

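3. Comment out the HBase, Hive (HCatalog), and ZooKeeper sections in sqoop1/bin/configure-sqoop

These components are not installed in this setup; left active, the script prints a warning for each missing one on every run. After commenting them out, configure-sqoop looks like this: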

#!/bin/bash

#

# Copyright 2011 The Apache Software Foundation

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# This is sourced in by bin/sqoop to set environment variables prior to

# invoking Hadoop.

bin="$1"

if [ -z "${bin}" ]; then

bin=`dirname $0`

bin=`cd ${bin} && pwd`

fi

if [ -z "$SQOOP_HOME" ]; then

export SQOOP_HOME=${bin}/..

fi

SQOOP_CONF_DIR=${SQOOP_CONF_DIR:-${SQOOP_HOME}/conf}

if [ -f "${SQOOP_CONF_DIR}/sqoop-env.sh" ]; then

. "${SQOOP_CONF_DIR}/sqoop-env.sh"

fi

# Find paths to our dependency systems. If they are unset, use CDH defaults.

if [ -z "${HADOOP_COMMON_HOME}" ]; then

if [ -n "${HADOOP_HOME}" ]; then

HADOOP_COMMON_HOME=${HADOOP_HOME}

else

if [ -d "/usr/lib/hadoop" ]; then

HADOOP_COMMON_HOME=/usr/lib/hadoop

else

HADOOP_COMMON_HOME=${SQOOP_HOME}/../hadoop

fi

fi

fi

if [ -z "${HADOOP_MAPRED_HOME}" ]; then

HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce

if [ ! -d "${HADOOP_MAPRED_HOME}" ]; then

if [ -n "${HADOOP_HOME}" ]; then

HADOOP_MAPRED_HOME=${HADOOP_HOME}

else

HADOOP_MAPRED_HOME=${SQOOP_HOME}/../hadoop-mapreduce

fi

fi

fi

# We are setting HADOOP_HOME to HADOOP_COMMON_HOME if it is not set

# so that hcat script works correctly on BigTop

if [ -z "${HADOOP_HOME}" ]; then

if [ -n "${HADOOP_COMMON_HOME}" ]; then

HADOOP_HOME=${HADOOP_COMMON_HOME}

export HADOOP_HOME

fi

fi

#if [ -z "${HBASE_HOME}" ]; then

# if [ -d "/usr/lib/hbase" ]; then

# HBASE_HOME=/usr/lib/hbase

# else

# HBASE_HOME=${SQOOP_HOME}/../hbase

# fi

#fi

#if [ -z "${HCAT_HOME}" ]; then

# if [ -d "/usr/lib/hive-hcatalog" ]; then

# HCAT_HOME=/usr/lib/hive-hcatalog

# elif [ -d "/usr/lib/hcatalog" ]; then

# HCAT_HOME=/usr/lib/hcatalog

# else

# HCAT_HOME=${SQOOP_HOME}/../hive-hcatalog

# if [ ! -d ${HCAT_HOME} ]; then

# HCAT_HOME=${SQOOP_HOME}/../hcatalog

# fi

# fi

#fi

if [ -z "${ACCUMULO_HOME}" ]; then

if [ -d "/usr/lib/accumulo" ]; then

ACCUMULO_HOME=/usr/lib/accumulo

else

ACCUMULO_HOME=${SQOOP_HOME}/../accumulo

fi

fi

#if [ -z "${ZOOKEEPER_HOME}" ]; then

# if [ -d "/usr/lib/zookeeper" ]; then

# ZOOKEEPER_HOME=/usr/lib/zookeeper

# else

# ZOOKEEPER_HOME=${SQOOP_HOME}/../zookeeper

# fi

#fi

#if [ -z "${HIVE_HOME}" ]; then

# if [ -d "/usr/lib/hive" ]; then

# export HIVE_HOME=/usr/lib/hive

# elif [ -d ${SQOOP_HOME}/../hive ]; then

# export HIVE_HOME=${SQOOP_HOME}/../hive

# fi

#fi

# Check: If we can't find our dependencies, give up here.

if [ ! -d "${HADOOP_COMMON_HOME}" ]; then

echo "Error: $HADOOP_COMMON_HOME does not exist!"

echo 'Please set $HADOOP_COMMON_HOME to the root of your Hadoop installation.'

exit 1

fi

if [ ! -d "${HADOOP_MAPRED_HOME}" ]; then

echo "Error: $HADOOP_MAPRED_HOME does not exist!"

echo 'Please set $HADOOP_MAPRED_HOME to the root of your Hadoop MapReduce installation.'

exit 1

fi

## Moved to be a runtime check in sqoop.

#if [ ! -d "${HBASE_HOME}" ]; then

# echo "Warning: $HBASE_HOME does not exist! HBase imports will fail."

# echo 'Please set $HBASE_HOME to the root of your HBase installation.'

#fi

## Moved to be a runtime check in sqoop.

#if [ ! -d "${HCAT_HOME}" ]; then

# echo "Warning: $HCAT_HOME does not exist! HCatalog jobs will fail."

# echo 'Please set $HCAT_HOME to the root of your HCatalog installation.'

#fi

#if [ ! -d "${ACCUMULO_HOME}" ]; then

# echo "Warning: $ACCUMULO_HOME does not exist! Accumulo imports will fail."

# echo 'Please set $ACCUMULO_HOME to the root of your Accumulo installation.'

#fi

#if [ ! -d "${ZOOKEEPER_HOME}" ]; then

# echo "Warning: $ZOOKEEPER_HOME does not exist! Accumulo imports will fail."

# echo 'Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.'

#fi

# Where to find the main Sqoop jar

SQOOP_JAR_DIR=$SQOOP_HOME

# If there's a "build" subdir, override with this, so we use

# the newly-compiled copy.

if [ -d "$SQOOP_JAR_DIR/build" ]; then

SQOOP_JAR_DIR="${SQOOP_JAR_DIR}/build"

fi

function add_to_classpath() {

dir=$1

for f in $dir/*.jar; do

SQOOP_CLASSPATH=${SQOOP_CLASSPATH}:$f;

done

export SQOOP_CLASSPATH

}

# Add sqoop dependencies to classpath.

SQOOP_CLASSPATH=""

if [ -d "$SQOOP_HOME/lib" ]; then

add_to_classpath $SQOOP_HOME/lib

fi

# Add HBase to dependency list

#if [ -e "$HBASE_HOME/bin/hbase" ]; then

# TMP_SQOOP_CLASSPATH=${SQOOP_CLASSPATH}:`$HBASE_HOME/bin/hbase classpath`

# SQOOP_CLASSPATH=${TMP_SQOOP_CLASSPATH}

#fi

# Add HCatalog to dependency list

#if [ -e "${HCAT_HOME}/bin/hcat" ]; then

# TMP_SQOOP_CLASSPATH=${SQOOP_CLASSPATH}:`${HCAT_HOME}/bin/hcat -classpath`

# if [ -z "${HIVE_CONF_DIR}" ]; then

# TMP_SQOOP_CLASSPATH=${TMP_SQOOP_CLASSPATH}:${HIVE_CONF_DIR}

# fi

# SQOOP_CLASSPATH=${TMP_SQOOP_CLASSPATH}

#fi

# Add Accumulo to dependency list

if [ -e "$ACCUMULO_HOME/bin/accumulo" ]; then

for jn in `$ACCUMULO_HOME/bin/accumulo classpath | grep file:.*accumulo.*jar | cut -d':' -f2`; do

SQOOP_CLASSPATH=$SQOOP_CLASSPATH:$jn

done

for jn in `$ACCUMULO_HOME/bin/accumulo classpath | grep file:.*zookeeper.*jar | cut -d':' -f2`; do

SQOOP_CLASSPATH=$SQOOP_CLASSPATH:$jn

done

fi

#ZOOCFGDIR=${ZOOCFGDIR:-/etc/zookeeper}

#if [ -d "${ZOOCFGDIR}" ]; then

# SQOOP_CLASSPATH=$ZOOCFGDIR:$SQOOP_CLASSPATH

#fi

SQOOP_CLASSPATH=${SQOOP_CONF_DIR}:${SQOOP_CLASSPATH}

# If there's a build subdir, use Ivy-retrieved dependencies too.

if [ -d "$SQOOP_HOME/build/ivy/lib/sqoop" ]; then

for f in $SQOOP_HOME/build/ivy/lib/sqoop/*/*.jar; do

SQOOP_CLASSPATH=${SQOOP_CLASSPATH}:$f;

done

fi

add_to_classpath ${SQOOP_JAR_DIR}

HADOOP_CLASSPATH="${SQOOP_CLASSPATH}:${HADOOP_CLASSPATH}"

if [ ! -z "$SQOOP_USER_CLASSPATH" ]; then

# User has elements to prepend to the classpath, forcibly overriding

# Sqoop's own lib directories.

export HADOOP_CLASSPATH="${SQOOP_USER_CLASSPATH}:${HADOOP_CLASSPATH}"

fi

export SQOOP_CLASSPATH

export SQOOP_CONF_DIR

export SQOOP_JAR_DIR

export HADOOP_CLASSPATH

export HADOOP_COMMON_HOME

export HADOOP_MAPRED_HOME

#export HBASE_HOME

#export HCAT_HOME

#export HIVE_CONF_DIR

#export ACCUMULO_HOME

#export ZOOKEEPER_HOME
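
The same commenting could be done with `sed` instead of by hand. The sketch below is one possible approach, not the method used in this article: `comment_out_component` is a hypothetical name, and it relies on the shipped script indenting nested lines, so that only the closing `fi` of a top-level block starts in the first column.

```shell
#!/bin/sh
# comment_out_component FILE VAR
# Prefixes '#' to every top-level "if ... fi" block whose opening line
# mentions VAR, and to a bare "export VAR" line. A backup is kept as FILE.bak.
# Hypothetical helper; it assumes nested lines are indented, so that only the
# closing "fi" of a top-level block starts in column one.
comment_out_component() {
    file=$1
    var=$2
    sed -i.bak \
        -e "/^if .*$var/,/^fi/ s/^/#/" \
        -e "s/^export $var\$/#&/" \
        "$file"
}

# Example:
# for v in HBASE_HOME HCAT_HOME HIVE_HOME ZOOKEEPER_HOME; do
#     comment_out_component /usr/local/sqoop1/bin/configure-sqoop "$v"
# done
```

Lines that are already commented no longer start with `if`, so a second run leaves them alone. The sketch does not touch lines such as the `ZOOCFGDIR` assignment or `export HIVE_CONF_DIR`, which would still need editing by hand.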

4. Copy the JDBC driver JAR for your database (Oracle in the examples below) into sqoop1/lib
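
A small guard around the copy catches a wrong path before Sqoop fails later with a missing-driver error. This is a sketch under assumptions: `install_driver` is a hypothetical name, and the JAR path is whatever file your database vendor ships.

```shell
#!/bin/sh
# install_driver JAR SQOOP_HOME_DIR
# Copies a JDBC driver JAR into the Sqoop lib directory.
# Hypothetical helper; the JAR path is whatever your database vendor ships.
install_driver() {
    jar=$1
    home=$2
    [ -f "$jar" ] || { echo "driver not found: $jar" >&2; return 1; }
    [ -d "$home/lib" ] || { echo "no lib directory under $home" >&2; return 1; }
    cp "$jar" "$home/lib/"
}
```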

Sqoop Test Runs

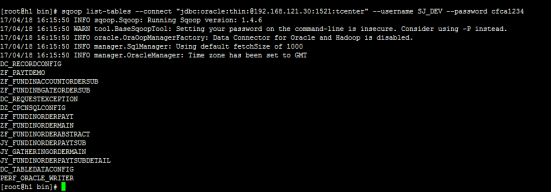

Test the connection to Oracle:

Run:

sqoop list-tables --connect "jdbc:oracle:thin:@192.168.121.30:1521:tcenter" --username SJ_DEV --password cfca1234

The output looks like this:
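
The connect string follows the Oracle thin-driver form `jdbc:oracle:thin:@host:port:SID`. The sketch below assembles it from its parts; `oracle_thin_url` is a hypothetical helper name. The commented example also swaps `--password` for Sqoop's `-P` option, which prompts for the password on the console instead of exposing it on the command line.

```shell
#!/bin/sh
# oracle_thin_url HOST PORT SID
# Prints an Oracle thin-driver JDBC URL in the host:port:SID form.
# Hypothetical helper name.
oracle_thin_url() {
    printf 'jdbc:oracle:thin:@%s:%s:%s\n' "$1" "$2" "$3"
}

# Example (commented out because it needs a reachable database):
# sqoop list-tables --connect "$(oracle_thin_url 192.168.121.30 1521 tcenter)" \
#     --username SJ_DEV -P
```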

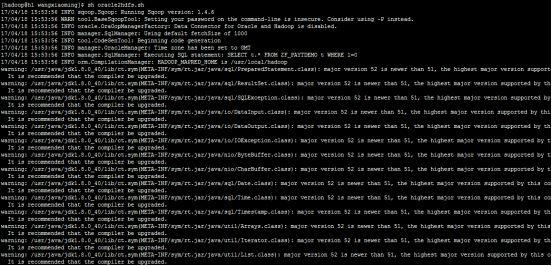

Test importing Oracle data into HDFS

Prepare the script oracle2hdfs.sh:

CONNECTURL=jdbc:oracle:thin:@192.168.121.30:1521:tcenter

# Oracle username

ORANAME=SJ_DEV

# Oracle password

ORAPWD=cfca1234

# Source table name

oracleTableName=ZF_PAYTDEMO

# Name used for the HDFS output directory

oraTName=ZF_PAYTDEMO

# Columns to import

columns=Systemno

# HDFS target directory

hdfsPath=/user/wangxiaoming/$oraTName

# Run the import

sqoop import --append --connect "$CONNECTURL" --username "$ORANAME" --password "$ORAPWD" --target-dir "$hdfsPath" --num-mappers 1 --table "$oracleTableName" --columns "$columns" --fields-terminated-by '\001'
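
When adapting the script, it is useful to print the assembled command before anything touches the database. The sketch below is a variant under assumptions, not the original script: `run_import` and the `DRY_RUN` switch are hypothetical names, and `--password` is replaced by `-P` so the password is prompted for rather than stored in the file.

```shell
#!/bin/sh
# Variant of oracle2hdfs.sh with a dry-run switch.
# run_import and DRY_RUN are hypothetical names introduced for this sketch.
CONNECTURL=${CONNECTURL:-jdbc:oracle:thin:@192.168.121.30:1521:tcenter}
ORANAME=${ORANAME:-SJ_DEV}
oracleTableName=${oracleTableName:-ZF_PAYTDEMO}
columns=${columns:-Systemno}
hdfsPath=${hdfsPath:-/user/wangxiaoming/$oracleTableName}

run_import() {
    # Build the command as an argument list so every value stays quoted.
    set -- sqoop import --append \
        --connect "$CONNECTURL" \
        --username "$ORANAME" -P \
        --target-dir "$hdfsPath" \
        --num-mappers 1 \
        --table "$oracleTableName" \
        --columns "$columns" \
        --fields-terminated-by '\001'
    if [ "${DRY_RUN:-0}" = 1 ]; then
        # Print the command instead of running it.
        printf '%s ' "$@"; printf '\n'
    else
        "$@"
    fi
}
```

Calling `run_import` with `DRY_RUN=1` set prints the full `sqoop import` line for review; without it, the function executes the import.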

Check the result:

Verify that the files exist in HDFS:

Run:

hadoop fs -ls /user/wangxiaoming/ZF_PAYTDEMO

To view a file, run:

hadoop fs -cat /user/wangxiaoming/ZF_PAYTDEMO/part-m-00000
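
Because the import uses `\001` (Ctrl-A) as the field separator, multi-column output is hard to read in a terminal. A minimal sketch that makes the separator visible; `show_fields` is a hypothetical helper name:

```shell
#!/bin/sh
# show_fields: reads records from stdin and replaces the \001 (Ctrl-A)
# field separator with '|' so the columns are visible in a terminal.
# Hypothetical helper name.
show_fields() {
    tr '\001' '|'
}

# Example (commented out because it needs a running HDFS):
# hadoop fs -cat /user/wangxiaoming/ZF_PAYTDEMO/part-m-* | show_fields | head
```

With a single imported column, as in the example above, each record holds one field and there is no separator to replace; the helper becomes useful once more columns are listed in `--columns`.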