Redis Cluster (server) + JedisCluster (client): how they work, and how to scale the cluster out and in

Redis Cluster environment setup

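Redis Cluster is a server-side sharding technology for Redis. Setting up the cluster environment is covered in another post; this one focuses on the features and internals of Redis Cluster, and on hands-on practice with adding nodes to and removing nodes from a running cluster.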

Cluster features

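- Decentralized architecture: data is distributed across multiple nodes by slot, and the slot distribution can be adjusted dynamically.

- High scalability: the cluster scales linearly up to 1,000 nodes (the official recommendation is not to exceed 1,000), and nodes can be added or removed dynamically.

- High availability: a slave is promoted to master through a voting mechanism.

- Nodes are all connected to each other and communicate over the cluster bus, a binary protocol on the client port + 10000, to exchange cluster state such as node health and slot ownership.

- Nodes do not proxy queries; they redirect the client to the right node instead.

- In cluster mode every node uses only the first database, db0; SELECT 1 through SELECT 15 return an error.

- Pipelining is limited in cluster mode: a pipeline travels over one connection to one node, so it can only carry keys served by that node, and older JedisCluster versions offer no pipeline support at all.

- To the client, the whole cluster appears as a single unit and is used much like a single Redis instance.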

How Redis Cluster sharding works

1) Slot allocation and key routing

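Redis Cluster has 16384 slots in total. When the cluster is created, each master takes a share of these 16384 slots, so every slot belongs to exactly one master.

For each read or write request, the key is hashed with CRC16 and the result is taken modulo 16384 to get its slot, which in turn determines the master that stores the key. This is how the data gets sharded:

HASH_SLOT = CRC16(key) mod 16384

Consequently, when the cluster is scaled out or in, the 16384 slots have to be redistributed, and the keys stored in them migrated along with them, so that lookups still find their data. Note that this redistribution currently requires manual intervention: whenever a node is added or removed, we must make sure that afterwards all 16384 slots are assigned again, otherwise the cluster stops working. (The cluster can be configured to keep serving with uncovered slots, via cluster-require-full-coverage no, but this is not recommended.)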
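A minimal Java sketch of this formula, for illustration only. It assumes the CRC16 variant is CRC16/XMODEM (polynomial 0x1021, initial value 0), which is the one the Redis Cluster specification uses, and it ignores hash tags, which are discussed in the HashTag section. The class name HashSlot is made up.

```java
import java.nio.charset.StandardCharsets;

// Illustrative sketch (class name is hypothetical): HASH_SLOT = CRC16(key) mod 16384,
// with hash tags ignored. CRC16 here is the XMODEM variant: polynomial 0x1021, initial value 0.
final class HashSlot {
    static final int SLOT_COUNT = 16384;

    // Bit-by-bit CRC16/XMODEM; a table-driven version gives the same result faster.
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;            // feed the next byte into the high bits
            for (int i = 0; i < 8; i++) {
                boolean topBit = (crc & 0x8000) != 0;
                crc = (crc << 1) & 0xFFFF;     // shift left, keep 16 bits
                if (topBit) crc ^= 0x1021;     // apply the polynomial when a 1 was shifted out
            }
        }
        return crc;
    }

    static int slot(String key) {
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % SLOT_COUNT;
    }

    public static void main(String[] args) {
        // 0x31C3 is the standard CRC16/XMODEM check value for "123456789"
        System.out.printf("crc16(\"123456789\") = 0x%04X%n",
                crc16("123456789".getBytes(StandardCharsets.UTF_8)));
        System.out.println("slot(\"foo\") = " + slot("foo")); // prints 12182
    }
}
```

Because 16384 is 2^14, the modulo is the same as keeping the low 14 bits (crc & 0x3FFF). Running CLUSTER KEYSLOT foo against a real cluster should report the same slot.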

2) Cluster node discovery

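Redis Cluster can discover new nodes, detect failures and fail over because every node keeps talking to all the other nodes; this channel is called the cluster bus. It uses a dedicated port, the client-facing port plus 10000: a node that serves clients on 6379 talks to the other nodes on 16379. Nodes communicate over it with a dedicated binary protocol.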

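3) High availability

To make the cluster highly available, the official recommendation is a master-slave setup: if a master fails, Redis Cluster elects one of its slaves and promotes it to master, keeping the cluster available.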

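4) Redirection (with the -c flag, redis-cli follows redirects for you)

To the client, the whole cluster is a single unit: it can connect to any Redis instance and work with it just like a single Redis instance. When the key being accessed is not served by that node, Redis replies with a MOVED redirect (or with ASK if the key's slot is in the middle of being migrated) that tells the client the target ip:port, and the client has to resend the request to that address. Such a request costs two round trips. As the number of keys grows, practically every client ends up talking to every master, so caching the client's connections becomes essential.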
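A Java sketch of how a client could interpret these two redirects. The reply formats MOVED <slot> <ip:port> and ASK <slot> <ip:port> come from the Redis Cluster specification; the Redirect class and its parse method are hypothetical names. Per the specification, on MOVED a client should update its cached slot-to-node mapping, while on ASK it should send ASKING followed by the command to the given node once, without changing the cache.

```java
// Hypothetical helper: parses the error text of a cluster redirect, such as
// "MOVED 3999 127.0.0.1:6381" or "ASK 3999 127.0.0.1:6381" (a leading '-' from RESP is tolerated).
final class Redirect {
    final boolean ask;  // true: ASK (slot is being migrated, one-off); false: MOVED (permanent)
    final int slot;
    final String host;
    final int port;

    private Redirect(boolean ask, int slot, String host, int port) {
        this.ask = ask;
        this.slot = slot;
        this.host = host;
        this.port = port;
    }

    /** Returns the parsed redirect, or null if the error is not a MOVED/ASK reply. */
    static Redirect parse(String error) {
        String text = error.startsWith("-") ? error.substring(1) : error;
        String[] parts = text.trim().split(" ");
        if (parts.length != 3) return null;
        boolean ask;
        if (parts[0].equals("MOVED")) ask = false;
        else if (parts[0].equals("ASK")) ask = true;
        else return null;
        int colon = parts[2].lastIndexOf(':'); // last ':' separates host and port
        if (colon < 0) return null;
        return new Redirect(ask, Integer.parseInt(parts[1]),
                parts[2].substring(0, colon), Integer.parseInt(parts[2].substring(colon + 1)));
    }

    public static void main(String[] args) {
        Redirect r = Redirect.parse("MOVED 3999 127.0.0.1:6381");
        // prints: MOVED slot 3999 -> 127.0.0.1:6381
        System.out.println((r.ask ? "ASK" : "MOVED") + " slot " + r.slot + " -> " + r.host + ":" + r.port);
    }
}
```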

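A side note: the Jedis client actually builds its slot-to-node mapping when it starts, and eventually holds connections to every Redis instance, in the form of a pool of long-lived connections per node.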

How hash tags work

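Sharding on the server side improves memory utilization and makes scaling out easy, but it also brings new problems. Take MSET: on a single node it is atomic, so either all keys are set or none is. In a cluster, if the keys were stored on different shards, that atomicity would be lost. Redis Cluster therefore requires all keys of a multi-key command such as MSET to map to the same slot; mapping to the same node is not enough. Otherwise the command fails with the error "CROSSSLOT Keys in request don't hash to the same slot".

To solve this, Redis introduces the hash tag, which is simply a pair of braces {}. When a key contains a '{' followed later by a '}' with at least one character between them, the server does not run CRC16 over the whole key, only over the content between the first '{' and the first '}' after it. Keys whose braced content is identical therefore map to the same slot. For example, an MSET on keys such as k01 and k02 succeeds once both keys carry the same tag in braces.

Likewise, batch reads such as MGET also require all keys to be in the same slot in cluster mode; otherwise an error is raised, even if the keys happen to live on the same node.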
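The brace rule can be sketched in Java as follows. HashTag and hashedPart are hypothetical names, and the example keys are the ones used in the Redis Cluster specification. Only the string that hashedPart returns is fed into CRC16, so keys that return the same string always share a slot.

```java
// Hypothetical sketch of the hash tag rule: if the key has a '{', followed later by a '}',
// with at least one character between them, only the text between the first '{' and the
// first '}' after it is hashed; otherwise the whole key is hashed.
final class HashTag {
    static String hashedPart(String key) {
        int open = key.indexOf('{');
        if (open >= 0) {
            int close = key.indexOf('}', open + 1);
            if (close > open + 1) {          // '}' found and the tag is not empty
                return key.substring(open + 1, close);
            }
        }
        return key;                          // no usable tag: hash the whole key
    }

    public static void main(String[] args) {
        System.out.println(hashedPart("{user1000}.following")); // user1000
        System.out.println(hashedPart("{user1000}.followers")); // user1000 -> same slot
        System.out.println(hashedPart("foo{}{bar}"));           // foo{}{bar} (empty tag: whole key)
        System.out.println(hashedPart("foo{{bar}}zap"));        // {bar
        System.out.println(hashedPart("foo{bar}{zap}"));        // bar
    }
}
```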

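The cluster itself was set up in an earlier post. Redis Cluster supports bringing nodes online and offline while it is running:

- Adding a node: some slots, together with the keys stored in them, are moved from the existing nodes to the new node;

- Removing a node: the node's slots and data are moved to the remaining nodes.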

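How JedisCluster works

When JedisCluster is initialized, it picks one of the configured nodes, builds the hashslot -> node mapping table from it, and creates a JedisPool connection pool for every node. For each operation, JedisCluster first computes the key's hashslot locally and looks up the owning node in its local table. If that node still holds the hashslot, the command simply runs there. If a reshard has moved the hashslot elsewhere, the node replies with MOVED; JedisCluster then refreshes its local hashslot -> node cache from the cluster's metadata and repeats these steps until it reaches the right node. If this takes more than 5 attempts (the default maxRedirections), it fails with JedisClusterMaxRedirectionsException.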

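```java
// Excerpts from the Jedis (2.x) source; not a complete, compilable class.

// JedisCluster: the constructor hands node discovery over to the connection handler
public JedisCluster(Set<HostAndPort> jedisClusterNode, int timeout, int maxRedirections,
    final GenericObjectPoolConfig poolConfig) {
  this.connectionHandler = new JedisSlotBasedConnectionHandler(jedisClusterNode, poolConfig,
      timeout);
  this.maxRedirections = maxRedirections;
}

// JedisClusterConnectionHandler (superclass of JedisSlotBasedConnectionHandler)
public JedisClusterConnectionHandler(Set<HostAndPort> nodes,
    final GenericObjectPoolConfig poolConfig, int timeout) {
  this.cache = new JedisClusterInfoCache(poolConfig, timeout);
  initializeSlotsCache(nodes, poolConfig); // initialization: discover nodes, build the slot map
}

// JedisClusterInfoCache: registers a node, creating its pool only once
public void setNodeIfNotExist(HostAndPort node) {
  w.lock(); // write lock guarding the nodes map
  try {
    String nodeKey = getNodeKey(node); // "host:port"
    if (nodes.containsKey(nodeKey)) return;
    // create one JedisPool connection pool per node
    JedisPool nodePool = new JedisPool(poolConfig, node.getHost(), node.getPort(), timeout);
    nodes.put(nodeKey, nodePool);
  } finally {
    w.unlock();
  }
}
```

At program startup, the cluster environment is initialized as follows: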

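- Read the node list from the configuration file. Whether the entries are masters or slaves, and however many there are, only the first one is used to obtain a Redis connection (if it cannot be reached, the next one is tried).

- Use that connection to call clusterNodes(), which runs the server's CLUSTER NODES command and returns the master/slave topology.

- Parse the topology: first put every node into the nodes map, with the node's ip:port as key and that node's JedisPool as value.

- Parse the slot ranges assigned to each master and store each slot index as a key in the slots map, with the JedisPool obtained in step 3 as value. This maps every slot index to a JedisPool; since that JedisPool points at the master, the slot-to-node assignment matches the server side.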
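Steps 3 and 4 can be sketched in Java as below. This is a simplified model, not Jedis's own parser: SlotTableBuilder is a hypothetical name, the string "pool:ip:port" stands in for a JedisPool, and the sample CLUSTER NODES lines (node IDs, addresses) are made up but follow the field layout described in the Redis Cluster specification.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative sketch of steps 3 and 4 (class name hypothetical). Input is CLUSTER NODES text:
// <id> <ip:port[@cport]> <flags> <master> <ping-sent> <pong-recv> <config-epoch> <link-state> <slot> ...
// The string "pool:ip:port" stands in for the JedisPool that Jedis creates per node.
final class SlotTableBuilder {
    static final int SLOT_COUNT = 16384;
    final Map<String, String> nodes = new LinkedHashMap<>(); // "ip:port" -> pool stand-in
    final String[] slots = new String[SLOT_COUNT];           // slot index -> pool of its master

    void load(String clusterNodesOutput) {
        for (String line : clusterNodesOutput.split("\n")) {
            String[] f = line.trim().split("\\s+");
            if (f.length < 8) continue;                  // skip blank or malformed lines
            String addr = f[1].split("@")[0];            // drop the "@cport" part (Redis 4.0+)
            nodes.putIfAbsent(addr, "pool:" + addr);     // step 3: every node, master or slave
            if (!f[2].contains("master")) continue;      // step 4: only masters own slots
            for (int i = 8; i < f.length; i++) {
                String range = f[i];
                if (range.startsWith("[")) continue;     // skip importing/migrating markers
                int dash = range.indexOf('-');
                int from = Integer.parseInt(dash < 0 ? range : range.substring(0, dash));
                int to = dash < 0 ? from : Integer.parseInt(range.substring(dash + 1));
                for (int s = from; s <= to; s++) slots[s] = nodes.get(addr);
            }
        }
    }

    public static void main(String[] args) {
        SlotTableBuilder b = new SlotTableBuilder();
        b.load("idA 127.0.0.1:7000@17000 myself,master - 0 0 1 connected 0-8190 8191\n"
             + "idB 127.0.0.1:7001@17001 master - 0 0 2 connected 8192-16383\n"
             + "idC 127.0.0.1:7002@17002 slave idA 0 0 1 connected\n");
        System.out.println(b.nodes.keySet());
        System.out.println(b.slots[8191] + " " + b.slots[8192]);
    }
}
```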

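Reading and writing values in the cluster:

- Run CRC16 on the key (mod 16384) to get its slot;

- Use that slot to look up the JedisPool in the slots map;

- Get a Jedis instance from that JedisPool and use it to perform the actual read or write.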
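The lookup steps above, combined with the MOVED handling and the redirection limit described for JedisCluster, can be modeled with the toy Java program below. Everything here is hypothetical and in-memory (no networking, no Jedis classes); the slot is assumed to be computed already, and unlike real clients, which may refresh their whole table on MOVED, the toy only updates the one slot.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model, no networking (all names hypothetical): a client keeps a local slot -> node cache,
// follows MOVED replies by updating that cache, and gives up after maxRedirections attempts,
// mirroring the JedisCluster behaviour described above (Jedis throws its own exception type).
final class RedirectLoopDemo {

    // Stand-in for the servers: the authoritative slot owner known to the cluster.
    static final class Cluster {
        final Map<Integer, String> owner = new HashMap<>();

        // null means "node owns the slot, command executed"; otherwise the MOVED target.
        String movedTarget(String node, int slot) {
            String real = owner.get(slot);
            if (real == null) throw new IllegalStateException("slot " + slot + " is not covered");
            return real.equals(node) ? null : real;
        }
    }

    static final class Client {
        final Cluster cluster;
        final int maxRedirections;
        final Map<Integer, String> slotCache = new HashMap<>(); // local slot -> node table
        int attemptsUsed;                                        // attempts used by the last call

        Client(Cluster cluster, int maxRedirections) {
            this.cluster = cluster;
            this.maxRedirections = maxRedirections;
        }

        // Runs a command on the given slot and returns the node that finally served it.
        String execute(int slot, String anyNode) {
            String node = slotCache.getOrDefault(slot, anyNode);
            for (int attempt = 1; attempt <= maxRedirections; attempt++) {
                attemptsUsed = attempt;
                String moved = cluster.movedTarget(node, slot);
                if (moved == null) return node;  // node still owns the slot
                slotCache.put(slot, moved);      // MOVED: refresh the local mapping, then retry
                node = moved;
            }
            throw new IllegalStateException("too many redirections");
        }
    }

    public static void main(String[] args) {
        Cluster cluster = new Cluster();
        cluster.owner.put(2173, "B");            // after a reshard, slot 2173 lives on B
        Client client = new Client(cluster, 5);
        client.slotCache.put(2173, "A");         // but the client's cache still says A
        System.out.println(client.execute(2173, "A") + ", attempts=" + client.attemptsUsed); // B, attempts=2
        System.out.println(client.execute(2173, "A") + ", attempts=" + client.attemptsUsed); // B, attempts=1
    }
}
```

The second call needs only one attempt because the first one already corrected the cached entry; this is why a stale mapping costs an extra round trip only once per slot.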

Adding nodes

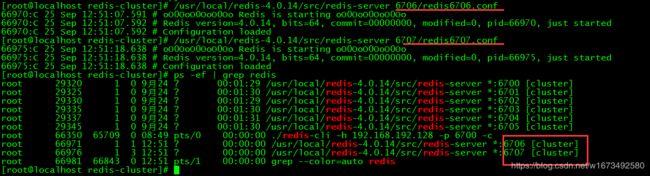

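1) First create two directories, 6706 and 6707, each with its configuration file, under /usr/local/redis-cluster, then start the two nodes.

2) Add 6706 to the cluster as a master. If you are unsure about the syntax, run ./redis-trib.rb help.

```shell
./redis-cli --cluster add-node <new-node-ip:port> <any-existing-node-ip:port>
```

This is the Redis 5+ syntax; with redis-trib.rb, as used in the rest of this post, the equivalent is ./redis-trib.rb add-node <new-node-ip:port> <any-existing-node-ip:port>. Listing the nodes afterwards shows that 6706 has joined the cluster as a master, but it has no slots assigned yet.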

3) Add 6707 as a slave of 6706, the master just added

```shell
[root@localhost src]# ./redis-trib.rb add-node --slave --master-id 889459fa1f72c1534cec06c527543af03512cf14 192.168.192.128:6707 192.168.192.128:6700
>>> Adding node 192.168.192.128:6707 to cluster 192.168.192.128:6700
>>> Performing Cluster Check (using node 192.168.192.128:6700)
...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 192.168.192.128:6707 to make it join the cluster.
Waiting for the cluster to join.....
>>> Configure node as replica of 192.168.192.128:6706.
[OK] New node added correctly.
```

4) Assign slots to the new master; this triggers data migration

```shell
[root@localhost src]# ./redis-trib.rb reshard 192.168.192.128:6706 #any node of the cluster works here; it is only used to connect to the cluster
>>> Performing Cluster Check (using node 192.168.192.128:6706)
...
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)? 4000 //number of slots to move
What is the receiving node ID? 889459fa1f72c1534cec06c527543af03512cf14 //node that receives the slots
Please enter all the source node IDs.
Type 'all' to use all the nodes as source nodes for the hash slots.
Type 'done' once you entered all the source nodes IDs.
Source node #1:all //source nodes to take slots from: 'all' takes them from every node; alternatively enter specific node IDs and finish with done
```

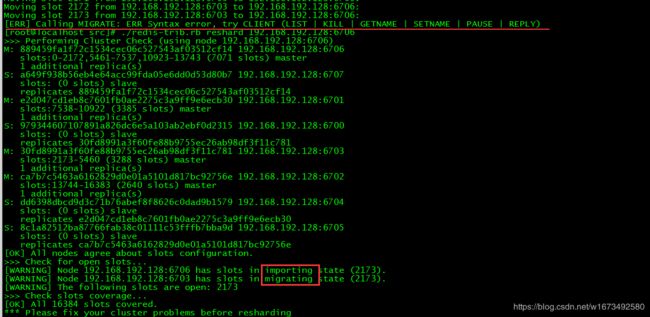

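....

During this reshard I ran into the error [ERR] Calling MIGRATE: ERR Syntax error, try CLIENT (LIST | KILL | GETNAME | SETNAME|PAUSE|REPLY). It turned out to be caused by the version of the redis library installed through Ruby gem: https://github.com/antirez/redis/issues/5029.

The fix: 1) uninstall the redis gem and install version 3.3.5 instead; 2) log in to the two nodes involved and clear the importing/migrating state of the stuck slot with cluster setslot <slot> stable; 3) run the reshard again, which now succeeds.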
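The stuck slot makes sense once you know the per-slot steps behind a reshard. The Java sketch below only builds the command sequence described in the Redis Cluster documentation for moving one slot (nothing is sent to a server). SlotMigrationPlan, the GETKEYSINSLOT batch size of 10 and the 5000 ms MIGRATE timeout are illustrative assumptions. If the process stops after the first two SETSLOT commands, as happened with the MIGRATE error above, the slot is left IMPORTING on the target and MIGRATING on the source, which is exactly the state that cluster setslot <slot> stable clears on each node.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists, in order, the commands used to move one slot from a source
// master to a target master. keysInSlot stands in for what CLUSTER GETKEYSINSLOT would return.
final class SlotMigrationPlan {
    static List<String> plan(int slot, String sourceId, String targetId,
                             String targetHost, int targetPort, List<String> keysInSlot) {
        List<String> steps = new ArrayList<>();
        // 1. mark the slot as importing on the target and migrating on the source
        steps.add("target: CLUSTER SETSLOT " + slot + " IMPORTING " + sourceId);
        steps.add("source: CLUSTER SETSLOT " + slot + " MIGRATING " + targetId);
        // 2. fetch keys of the slot in batches (repeated until none are left) and move each
        //    key to the target (db 0, 5000 ms timeout)
        steps.add("source: CLUSTER GETKEYSINSLOT " + slot + " 10");
        for (String key : keysInSlot) {
            steps.add("source: MIGRATE " + targetHost + " " + targetPort + " " + key + " 0 5000");
        }
        // 3. hand the slot over: destination first, then the source
        steps.add("target: CLUSTER SETSLOT " + slot + " NODE " + targetId);
        steps.add("source: CLUSTER SETSLOT " + slot + " NODE " + targetId);
        return steps;
    }

    public static void main(String[] args) {
        List<String> keys = new ArrayList<>();
        keys.add("k01");
        keys.add("k02");
        for (String step : plan(2173, "<source-id>", "<target-id>", "192.168.192.128", 6706, keys)) {
            System.out.println(step);
        }
    }
}
```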

```shell
[root@localhost src]# gem uninstall redis
...
[root@localhost src]# gem install redis -v 3.3.5
...
[root@localhost src]# ./redis-cli -h 192.168.192.128 -p 6706
192.168.192.128:6706> cluster setslot 2173 stable
OK
192.168.192.128:6706> quit
[root@localhost src]# ./redis-cli -h 192.168.192.128 -p 6703
192.168.192.128:6703> cluster setslot 2173 stable
OK
192.168.192.128:6703> quit
[root@localhost src]# ./redis-trib.rb reshard 192.168.192.128:6706
>>> Performing Cluster Check (using node 192.168.192.128:6706)
...
[root@localhost src]# ./redis-trib.rb check 192.168.192.128:6706
>>> Performing Cluster Check (using node 192.168.192.128:6706)
M: 889459fa1f72c1534cec06c527543af03512cf14 192.168.192.128:6706
slots:0-3584,5461-8991,10923-14876 (11070 slots) master //more than 10,000 slots because I resharded to this node several times while testing locally
1 additional replica(s)
...
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```

Rebalancing slots (rebalance)

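As shown above, because I assigned slots to the 6706 instance several times during local testing, it ended up with more than 10,000 slots. The following command evens out the slot counts across the nodes.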

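Note that a master with no slots assigned, or whose slots have all been moved out, gets no slots from a plain rebalance. This is by design rather than a bug: redis-trib.rb rebalance skips empty masters unless the --use-empty-masters option is given (redis-cli --cluster rebalance calls it --cluster-use-empty-masters).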
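A simplified Java model of the slot counts a rebalance aims for, assuming equal weights. It is not redis-trib's actual algorithm (in particular, which master receives the leftover slot may differ), and RebalanceTargets is a hypothetical name. It uses the slot counts that appear later in this post once all slots have been moved off 6706 (6702's count is inferred as 16384 - 8192 - 4096), and shows why an empty master ends up with nothing unless empty masters are explicitly included.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model: spread 16384 slots evenly over the participating masters (equal weights).
// Without useEmptyMasters, masters that currently own 0 slots do not participate.
final class RebalanceTargets {
    static final int SLOT_COUNT = 16384;

    static Map<String, Integer> targets(Map<String, Integer> currentSlots, boolean useEmptyMasters) {
        int participants = 0;
        for (int n : currentSlots.values()) {
            if (useEmptyMasters || n > 0) participants++;
        }
        if (participants == 0) throw new IllegalArgumentException("no master can take slots");
        int base = SLOT_COUNT / participants;     // every participant gets at least this many
        int leftover = SLOT_COUNT % participants; // the first 'leftover' participants get one more
        Map<String, Integer> result = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> e : currentSlots.entrySet()) {
            boolean takesPart = useEmptyMasters || e.getValue() > 0;
            if (!takesPart) {
                result.put(e.getKey(), 0);        // empty master is left out of the rebalance
            } else {
                result.put(e.getKey(), base + (leftover > 0 ? 1 : 0));
                leftover--;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Integer> current = new LinkedHashMap<>();
        current.put("6706", 0);
        current.put("6701", 8192);
        current.put("6703", 4096);
        current.put("6702", 4096);
        System.out.println(targets(current, false)); // {6706=0, 6701=5462, 6703=5461, 6702=5461}
        System.out.println(targets(current, true));  // {6706=4096, 6701=4096, 6703=4096, 6702=4096}
    }
}
```

The first result corresponds to the "Rebalancing across 3 nodes" run shown later, where the three non-empty masters end up with 5462, 5461 and 5461 slots.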

```shell
[root@localhost src]# ./redis-trib.rb rebalance 192.168.192.128:6700
>>> Performing Cluster Check (using node 192.168.192.128:6700)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Rebalancing across 4 nodes. Total weight = 4
Moving 2589 slots from 192.168.192.128:6706 to 192.168.192.128:6702
###################################################################...
Moving 2220 slots from 192.168.192.128:6706 to 192.168.192.128:6703
###################################################################...
Moving 2165 slots from 192.168.192.128:6706 to 192.168.192.128:6701
###################################################################...
[root@localhost src]#
[root@localhost src]# ./redis-trib.rb check 192.168.192.128:6706
>>> Performing Cluster Check (using node 192.168.192.128:6706)
M: 889459fa1f72c1534cec06c527543af03512cf14 192.168.192.128:6706
slots:8850-8991,10923-14876 (4096 slots) master #after rebalancing, the nodes hold similar numbers of slots
1 additional replica(s)
S: a649f938b56eb4e64acc99fda05e6dd0d53d80b7 192.168.192.128:6707
slots: (0 slots) slave
replicates 889459fa1f72c1534cec06c527543af03512cf14
M: e2d047cd1eb8c7601fb0ae2275c3a9ff9e6ecb30 192.168.192.128:6701
slots:6685-8849,8992-10922 (4096 slots) master
1 additional replica(s)
...
[OK] All 16384 slots covered.
[root@localhost src]#
```

Removing nodes

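1) Remove the slave first (del-node takes any node of the cluster to connect to, followed by the ID of the node to remove)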

```shell
[root@localhost src]# ./redis-trib.rb del-node 192.168.192.128:6700 a649f938b56eb4e64acc99fda05e6dd0d53d80b7
>>> Removing node a649f938b56eb4e64acc99fda05e6dd0d53d80b7 from cluster 192.168.192.128:6700
>>> Sending CLUSTER FORGET messages to the cluster...
>>> SHUTDOWN the node.
[root@localhost src]#
```

2) Move all of the master's slots to other nodes

```shell
[root@localhost src]# ./redis-trib.rb reshard 192.168.192.128:6706
>>> Performing Cluster Check (using node 192.168.192.128:6706)
...
[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)? 16384 #number of slots to move
What is the receiving node ID? e2d047cd1eb8c7601fb0ae2275c3a9ff9e6ecb30 #node that receives the slots
Please enter all the source node IDs.
Type 'all' to use all the nodes as source nodes for the hash slots.
Type 'done' once you entered all the source nodes IDs.
Source node #1:889459fa1f72c1534cec06c527543af03512cf14 #node to move slots out of, here the master being removed
Source node #2:done
...
Do you want to proceed with the proposed reshard plan (yes/no)? yes
...
[root@localhost src]# ./redis-trib.rb check 192.168.192.128:6706
>>> Performing Cluster Check (using node 192.168.192.128:6706)
M: 889459fa1f72c1534cec06c527543af03512cf14 192.168.192.128:6706
slots: (0 slots) master #all slots moved out
0 additional replica(s)
M: e2d047cd1eb8c7601fb0ae2275c3a9ff9e6ecb30 192.168.192.128:6701
slots:0-1697,2589-2921,4620-6350,6685-11114 (8192 slots) master
1 additional replica(s) #slot count doubled
S: 979344607107891a826dc6e5a103ab2ebf0d2315 192.168.192.128:6700
slots: (0 slots) slave
replicates 30fd8991a3f60fe88b9755ec26ab98df3f11c781
M: 30fd8991a3f60fe88b9755ec26ab98df3f11c781 192.168.192.128:6703
slots:6351-6684,11115-14876 (4096 slots) master
1 additional replica(s)
...
[OK] All 16384 slots covered.
[root@localhost src]#
```

Note: once all of a master's slots have been moved out, a plain rebalance will not give it any slots again, whereas a master that keeps even a single slot is rebalanced normally. This is redis-trib's default of leaving empty masters out of a rebalance; passing --use-empty-masters includes them. For example:

```shell
[root@localhost src]# ./redis-trib.rb rebalance 192.168.192.128:6706
>>> Performing Cluster Check (using node 192.168.192.128:6706)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Rebalancing across 3 nodes. Total weight = 3 #with all of 6706's slots moved out, the rebalance only spreads slots over the other 3 masters
...
[root@localhost src]# ./redis-trib.rb check 192.168.192.128:6706
>>> Performing Cluster Check (using node 192.168.192.128:6706)
M: 889459fa1f72c1534cec06c527543af03512cf14 192.168.192.128:6706
slots: (0 slots) master #still 0 after the rebalance
0 additional replica(s)
M: e2d047cd1eb8c7601fb0ae2275c3a9ff9e6ecb30 192.168.192.128:6701
slots:5320-6350,6685-11114 (5461 slots) master #the slots are now balanced across the other masters
1 additional replica(s)
S: 979344607107891a826dc6e5a103ab2ebf0d2315 192.168.192.128:6700
slots: (0 slots) slave
replicates 30fd8991a3f60fe88b9755ec26ab98df3f11c781
M: 30fd8991a3f60fe88b9755ec26ab98df3f11c781 192.168.192.128:6703
slots:0-1365,6351-6684,11115-14876 (5462 slots) master
1 additional replica(s)
M: ca7b7c5463a6162829d0e01a5101d817bc92756e 192.168.192.128:6702
slots:1366-5319,14877-16383 (5461 slots) master
1 additional replica(s)
S: dd6398dbcd9d3c71b76abef8f8626c0dad9b1579 192.168.192.128:6704
slots: (0 slots) slave
replicates e2d047cd1eb8c7601fb0ae2275c3a9ff9e6ecb30
S: 8c1a82512ba87766fab38c01111c53fffb7bba9d 192.168.192.128:6705
slots: (0 slots) slave
replicates ca7b7c5463a6162829d0e01a5101d817bc92756e
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
[root@localhost src]#
```

3) Remove the master

```shell
[root@localhost src]# ./redis-trib.rb del-node 192.168.192.128:6700 889459fa1f72c1534cec06c527543af03512cf14
>>> Removing node 889459fa1f72c1534cec06c527543af03512cf14 from cluster 192.168.192.128:6700
>>> Sending CLUSTER FORGET messages to the cluster...
>>> SHUTDOWN the node.
[root@localhost src]#
[root@localhost src]# ./redis-trib.rb check 192.168.192.128:6700 #6706 has been removed
>>> Performing Cluster Check (using node 192.168.192.128:6700)
S: 979344607107891a826dc6e5a103ab2ebf0d2315 192.168.192.128:6700
slots: (0 slots) slave
replicates 30fd8991a3f60fe88b9755ec26ab98df3f11c781
S: 8c1a82512ba87766fab38c01111c53fffb7bba9d 192.168.192.128:6705
slots: (0 slots) slave
replicates ca7b7c5463a6162829d0e01a5101d817bc92756e
S: dd6398dbcd9d3c71b76abef8f8626c0dad9b1579 192.168.192.128:6704
slots: (0 slots) slave
replicates e2d047cd1eb8c7601fb0ae2275c3a9ff9e6ecb30
M: 30fd8991a3f60fe88b9755ec26ab98df3f11c781 192.168.192.128:6703
slots:0-1365,6351-6684,11115-14876 (5462 slots) master
1 additional replica(s)
M: ca7b7c5463a6162829d0e01a5101d817bc92756e 192.168.192.128:6702
slots:1366-5319,14877-16383 (5461 slots) master
1 additional replica(s)
M: e2d047cd1eb8c7601fb0ae2275c3a9ff9e6ecb30 192.168.192.128:6701
slots:5320-6350,6685-11114 (5461 slots) master
1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
[root@localhost src]#
```