Setting Up a Spark Environment (Standalone Cluster Mode)

Spark 2.0.0

Download the latest release: 2.0.0.

Extract the archive and change into the resulting directory.
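A minimal sketch of this step follows. It assumes the downloaded file is the prebuilt package for Hadoop 2.7, which matches the `spark-2.0.0-bin-hadoop2.7` paths in the log later in this post. The variable names are illustrative.

```shell
#!/bin/sh
# Assumed file name of the downloaded package (prebuilt for Hadoop 2.7).
TARBALL=spark-2.0.0-bin-hadoop2.7.tgz
# Official Spark tarballs unpack into a directory named after the archive.
SPARK_DIR=$(basename "$TARBALL" .tgz)

# Only extract when the archive is actually present in the current directory.
if [ -f "$TARBALL" ]; then
  tar -xzf "$TARBALL"
  cd "$SPARK_DIR" || exit 1
fi
```

Every later command (`sbin/...`, `./bin/...`) is run from this directory.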

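Explanation

Standalone mode uses a Master-Worker architecture. Here both the Master and the Worker run on the local machine to simulate a cluster.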
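The walkthrough below runs on Spark's defaults. The standalone scripts also read per-machine settings from `conf/spark-env.sh`, which can be created by copying `conf/spark-env.sh.template`. The fragment below is a hedged sketch of what a single-machine setup might contain. The variable names come from the standalone-mode documentation, but the chosen values are assumptions to tune, not settings the author used.

```shell
# conf/spark-env.sh  (sourced by the sbin/ scripts; values below are assumptions)

# export SPARK_MASTER_HOST=localhost   # uncomment to pin the address the Master binds to
export SPARK_MASTER_PORT=7077          # Master RPC port (7077 is the default)
export SPARK_MASTER_WEBUI_PORT=8080    # Master web UI port (8080 is the default)
export SPARK_WORKER_CORES=2            # cores each Worker offers to applications
export SPARK_WORKER_MEMORY=1g          # memory each Worker offers to applications
```

If `SPARK_MASTER_HOST` is left unset, the Master URL keeps the host-name form shown in the web UI below.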

Start the Master

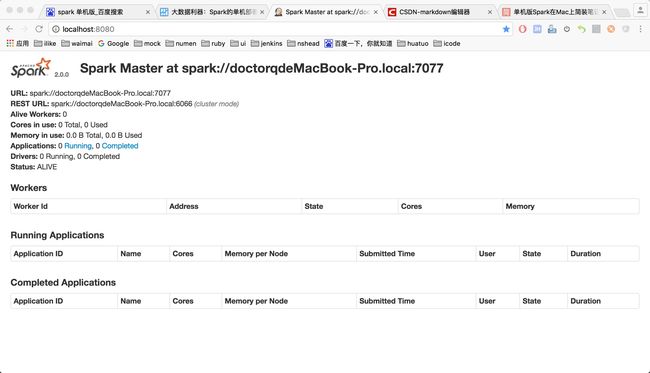

> sbin/start-master.sh

Then open http://localhost:8080/ to see the Master's web UI (see the screenshot).

Start a Slave (Worker)

Start the slave, passing it the Master URL highlighted by the red box in the screenshot:

> sbin/start-slave.sh spark://doctorqdeMacBook-Pro.local:7077

Now refresh http://localhost:8080/ again.

A new entry now appears under Workers.
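You can usually rebuild the Master URL from the defaults that `sbin/start-master.sh` falls back to, instead of copying it from the web UI. This sketch assumes, as far as I can tell from the Spark 2.0 scripts, that the Master uses the output of `hostname` and port 7077 when `SPARK_MASTER_HOST` and `SPARK_MASTER_PORT` are unset. Later releases may differ. On the author's Mac this would give `spark://doctorqdeMacBook-Pro.local:7077`. The variable names are illustrative.

```shell
#!/bin/sh
# Run from the Spark directory. Rebuild the Master URL from the fallbacks
# start-master.sh uses when nothing is configured
# (assumption: host = output of `hostname`, port = 7077).
MASTER_HOST="${SPARK_MASTER_HOST:-$(hostname 2>/dev/null || uname -n)}"
MASTER_PORT="${SPARK_MASTER_PORT:-7077}"
MASTER_URL="spark://${MASTER_HOST}:${MASTER_PORT}"
echo "Master URL: $MASTER_URL"

# Start a Worker against that Master only if the Spark scripts are present here.
if [ -x sbin/start-slave.sh ]; then
  sbin/start-slave.sh "$MASTER_URL"
fi
```

If the printed URL does not match the one in the web UI, trust the web UI. The Master may have been configured through `conf/spark-env.sh`.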

Running an Example Computation

shell

> ./bin/run-example SparkPi 10
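SparkPi estimates π by Monte Carlo sampling. The argument 10 is the number of slices, which matches "with 10 output partitions" in the log. For that many slices, SparkPi draws 100,000 × slices − 1 random points in the square [-1, 1] × [-1, 1]. It counts how many land inside the unit circle and prints 4 × inside / total. This is why the result is only "roughly" π. The awk sketch below reproduces that arithmetic on one machine without Spark. The helper name `estimate_pi` and the fixed seed are illustrative assumptions; this is not the code Spark ships.

```shell
#!/bin/sh
# estimate_pi SLICES: single-machine sketch of the Monte Carlo method SparkPi uses.
# Hypothetical helper, not part of Spark.
estimate_pi() {
  slices=${1:-2}                        # SparkPi defaults to 2 slices
  awk -v slices="$slices" 'BEGIN {
    n = 100000 * slices                 # SparkPi samples 1 until n, i.e. n - 1 points
    srand(42)                           # fixed seed so repeated runs agree
    inside = 0
    for (i = 1; i < n; i++) {
      x = rand() * 2 - 1                # random point in the square [-1, 1) x [-1, 1)
      y = rand() * 2 - 1
      if (x * x + y * y <= 1) inside++  # count points that fall in the unit circle
    }
    printf "Pi is roughly %.6f\n", 4.0 * inside / (n - 1)
  }'
}

estimate_pi 10
```

In the run shown next, the line `Starting executor ID driver on host localhost` suggests that `run-example` used local mode rather than the Worker registered above. One way to send the same job to the standalone Master instead is `./bin/spark-submit --master spark://doctorqdeMacBook-Pro.local:7077 --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.11-2.0.0.jar 10`. The jar path is taken from the log.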

Output:

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

16/09/01 22:54:29 INFO SparkContext: Running Spark version 2.0.0

16/09/01 22:54:29 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/09/01 22:54:29 INFO SecurityManager: Changing view acls to: doctorq

16/09/01 22:54:29 INFO SecurityManager: Changing modify acls to: doctorq

16/09/01 22:54:29 INFO SecurityManager: Changing view acls groups to:

16/09/01 22:54:29 INFO SecurityManager: Changing modify acls groups to:

16/09/01 22:54:29 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(doctorq); groups with view permissions: Set(); users with modify permissions: Set(doctorq); groups with modify permissions: Set()

16/09/01 22:54:30 INFO Utils: Successfully started service 'sparkDriver' on port 62953.

16/09/01 22:54:30 INFO SparkEnv: Registering MapOutputTracker

16/09/01 22:54:30 INFO SparkEnv: Registering BlockManagerMaster

16/09/01 22:54:30 INFO DiskBlockManager: Created local directory at /private/var/folders/n9/cdmr_pnj5txgd82gvplmtbh00000gn/T/blockmgr-d431b694-9fb8-42bf-b04a-286369d41ea2

16/09/01 22:54:30 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

16/09/01 22:54:30 INFO SparkEnv: Registering OutputCommitCoordinator

16/09/01 22:54:30 INFO Utils: Successfully started service 'SparkUI' on port 4040.

16/09/01 22:54:30 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://192.168.0.101:4040

16/09/01 22:54:30 INFO SparkContext: Added JAR file:/Users/doctorq/Documents/Developer/spark-2.0.0-bin-hadoop2.7/examples/jars/scopt_2.11-3.3.0.jar at spark://192.168.0.101:62953/jars/scopt_2.11-3.3.0.jar with timestamp 1472741670647

16/09/01 22:54:30 INFO SparkContext: Added JAR file:/Users/doctorq/Documents/Developer/spark-2.0.0-bin-hadoop2.7/examples/jars/spark-examples_2.11-2.0.0.jar at spark://192.168.0.101:62953/jars/spark-examples_2.11-2.0.0.jar with timestamp 1472741670649

16/09/01 22:54:30 INFO Executor: Starting executor ID driver on host localhost

16/09/01 22:54:30 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 62954.

16/09/01 22:54:30 INFO NettyBlockTransferService: Server created on 192.168.0.101:62954

16/09/01 22:54:30 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.0.101, 62954)

16/09/01 22:54:30 INFO BlockManagerMasterEndpoint: Registering block manager 192.168.0.101:62954 with 366.3 MB RAM, BlockManagerId(driver, 192.168.0.101, 62954)

16/09/01 22:54:30 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.0.101, 62954)

16/09/01 22:54:30 WARN SparkContext: Use an existing SparkContext, some configuration may not take effect.

16/09/01 22:54:31 INFO SharedState: Warehouse path is 'file:/Users/doctorq/Documents/Developer/spark-2.0.0-bin-hadoop2.7/spark-warehouse'.

16/09/01 22:54:31 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

16/09/01 22:54:31 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 10 output partitions

16/09/01 22:54:31 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

16/09/01 22:54:31 INFO DAGScheduler: Parents of final stage: List()

16/09/01 22:54:31 INFO DAGScheduler: Missing parents: List()

16/09/01 22:54:31 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

16/09/01 22:54:31 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1832.0 B, free 366.3 MB)

16/09/01 22:54:31 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1169.0 B, free 366.3 MB)

16/09/01 22:54:31 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.0.101:62954 (size: 1169.0 B, free: 366.3 MB)

16/09/01 22:54:31 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1012

16/09/01 22:54:31 INFO DAGScheduler: Submitting 10 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34)

16/09/01 22:54:31 INFO TaskSchedulerImpl: Adding task set 0.0 with 10 tasks

16/09/01 22:54:31 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, partition 0, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:31 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, partition 1, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:31 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, localhost, partition 2, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:31 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, localhost, partition 3, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:31 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)

16/09/01 22:54:31 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

16/09/01 22:54:31 INFO Executor: Running task 2.0 in stage 0.0 (TID 2)

16/09/01 22:54:31 INFO Executor: Running task 3.0 in stage 0.0 (TID 3)

16/09/01 22:54:31 INFO Executor: Fetching spark://192.168.0.101:62953/jars/scopt_2.11-3.3.0.jar with timestamp 1472741670647

16/09/01 22:54:31 INFO TransportClientFactory: Successfully created connection to /192.168.0.101:62953 after 45 ms (0 ms spent in bootstraps)

16/09/01 22:54:31 INFO Utils: Fetching spark://192.168.0.101:62953/jars/scopt_2.11-3.3.0.jar to /private/var/folders/n9/cdmr_pnj5txgd82gvplmtbh00000gn/T/spark-ca630415-5293-4442-950c-0dd300afce94/userFiles-ece307aa-39b1-4125-bb8f-c336385f0543/fetchFileTemp6343692004556899262.tmp

16/09/01 22:54:32 INFO Executor: Adding file:/private/var/folders/n9/cdmr_pnj5txgd82gvplmtbh00000gn/T/spark-ca630415-5293-4442-950c-0dd300afce94/userFiles-ece307aa-39b1-4125-bb8f-c336385f0543/scopt_2.11-3.3.0.jar to class loader

16/09/01 22:54:32 INFO Executor: Fetching spark://192.168.0.101:62953/jars/spark-examples_2.11-2.0.0.jar with timestamp 1472741670649

16/09/01 22:54:32 INFO Utils: Fetching spark://192.168.0.101:62953/jars/spark-examples_2.11-2.0.0.jar to /private/var/folders/n9/cdmr_pnj5txgd82gvplmtbh00000gn/T/spark-ca630415-5293-4442-950c-0dd300afce94/userFiles-ece307aa-39b1-4125-bb8f-c336385f0543/fetchFileTemp5957043619844169017.tmp

16/09/01 22:54:32 INFO Executor: Adding file:/private/var/folders/n9/cdmr_pnj5txgd82gvplmtbh00000gn/T/spark-ca630415-5293-4442-950c-0dd300afce94/userFiles-ece307aa-39b1-4125-bb8f-c336385f0543/spark-examples_2.11-2.0.0.jar to class loader

16/09/01 22:54:32 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 1032 bytes result sent to driver

16/09/01 22:54:32 INFO Executor: Finished task 3.0 in stage 0.0 (TID 3). 1032 bytes result sent to driver

16/09/01 22:54:32 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1032 bytes result sent to driver

16/09/01 22:54:32 INFO Executor: Finished task 2.0 in stage 0.0 (TID 2). 945 bytes result sent to driver

16/09/01 22:54:32 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4, localhost, partition 4, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:32 INFO Executor: Running task 4.0 in stage 0.0 (TID 4)

16/09/01 22:54:32 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5, localhost, partition 5, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:32 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6, localhost, partition 6, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:32 INFO Executor: Running task 6.0 in stage 0.0 (TID 6)

16/09/01 22:54:32 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 515 ms on localhost (1/10)

16/09/01 22:54:32 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 522 ms on localhost (2/10)

16/09/01 22:54:32 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7, localhost, partition 7, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:32 INFO Executor: Running task 7.0 in stage 0.0 (TID 7)

16/09/01 22:54:32 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 523 ms on localhost (3/10)

16/09/01 22:54:32 INFO Executor: Running task 5.0 in stage 0.0 (TID 5)

16/09/01 22:54:32 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 592 ms on localhost (4/10)

16/09/01 22:54:32 INFO Executor: Finished task 6.0 in stage 0.0 (TID 6). 872 bytes result sent to driver

16/09/01 22:54:32 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8, localhost, partition 8, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:32 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 48 ms on localhost (5/10)

16/09/01 22:54:32 INFO Executor: Finished task 7.0 in stage 0.0 (TID 7). 872 bytes result sent to driver

16/09/01 22:54:32 INFO Executor: Running task 8.0 in stage 0.0 (TID 8)

16/09/01 22:54:32 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9, localhost, partition 9, PROCESS_LOCAL, 5478 bytes)

16/09/01 22:54:32 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 47 ms on localhost (6/10)

16/09/01 22:54:32 INFO Executor: Running task 9.0 in stage 0.0 (TID 9)

16/09/01 22:54:32 INFO Executor: Finished task 5.0 in stage 0.0 (TID 5). 872 bytes result sent to driver

16/09/01 22:54:32 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 93 ms on localhost (7/10)

16/09/01 22:54:32 INFO Executor: Finished task 4.0 in stage 0.0 (TID 4). 872 bytes result sent to driver

16/09/01 22:54:32 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 106 ms on localhost (8/10)

16/09/01 22:54:32 INFO Executor: Finished task 8.0 in stage 0.0 (TID 8). 959 bytes result sent to driver

16/09/01 22:54:32 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 71 ms on localhost (9/10)

16/09/01 22:54:32 INFO Executor: Finished task 9.0 in stage 0.0 (TID 9). 872 bytes result sent to driver

16/09/01 22:54:32 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 71 ms on localhost (10/10)

16/09/01 22:54:32 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

16/09/01 22:54:32 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 0.722 s

16/09/01 22:54:32 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 1.061299 s

Pi is roughly 3.1395391395391394

16/09/01 22:54:32 INFO SparkUI: Stopped Spark web UI at http://192.168.0.101:4040

16/09/01 22:54:32 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

16/09/01 22:54:32 INFO MemoryStore: MemoryStore cleared

16/09/01 22:54:32 INFO BlockManager: BlockManager stopped

16/09/01 22:54:32 INFO BlockManagerMaster: BlockManagerMaster stopped

16/09/01 22:54:32 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

16/09/01 22:54:32 INFO SparkContext: Successfully stopped SparkContext

16/09/01 22:54:32 INFO ShutdownHookManager: Shutdown hook called

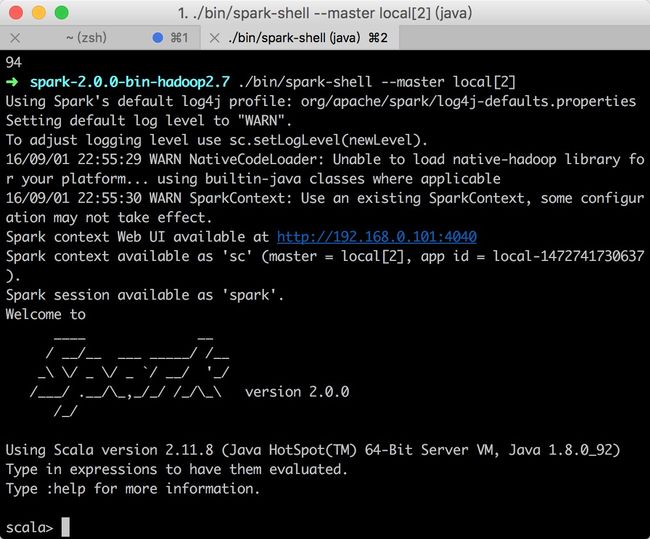

16/09/01 22:54:32 INFO ShutdownHookManager: Deleting directory /private/var/folders/n9/cdmr_pnj5txgd82gvplmtbh00000gn/T/spark-ca630415-5293-4442-950c-0dd300afce94

Entering the Scala console

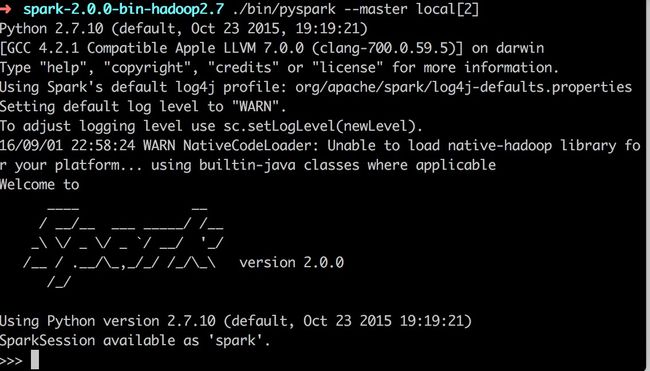

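> ./bin/spark-shell --master local[2]

`--master local[2]` runs Spark locally with two worker threads. To use the standalone cluster started above, pass `--master spark://doctorqdeMacBook-Pro.local:7077` instead.

Entering the Python console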

> ./bin/pyspark --master local[2]

Python API

R example

R shell