CS231n assignment1 KNN

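Date: 2019/3/25

Content: assignment1-KNN

This write-up draws on code shared online by more experienced people (links below). I am still a beginner in computer vision, so corrections are welcome.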

https://blog.csdn.net/donghai_yu/article/details/79344539

https://blog.csdn.net/qq_26414307/article/details/79318555

KNN part

Complete code

import numpy as np


class KNearestNeighbor(object):
    """ a kNN classifier with L2 distance """

    def __init__(self):
        pass

    def train(self, X, y):
        """
        Train the classifier. For k-nearest neighbors this is just
        memorizing the training data.

        Inputs:
        - X: A numpy array of shape (num_train, D) containing the training data
          consisting of num_train samples each of dimension D.
        - y: A numpy array of shape (N,) containing the training labels, where
          y[i] is the label for X[i].
        """
        self.X_train = X
        self.y_train = y

    def predict(self, X, k=1, num_loops=0):
        """
        Predict labels for test data using this classifier.

        Inputs:
        - X: A numpy array of shape (num_test, D) containing test data consisting
          of num_test samples each of dimension D.
        - k: The number of nearest neighbors that vote for the predicted labels.
        - num_loops: Determines which implementation to use to compute distances
          between training points and testing points.

        Returns:
        - y: A numpy array of shape (num_test,) containing predicted labels for the
          test data, where y[i] is the predicted label for the test point X[i].
        """
        if num_loops == 0:
            dists = self.compute_distances_no_loops(X)
        elif num_loops == 1:
            dists = self.compute_distances_one_loop(X)
        elif num_loops == 2:
            dists = self.compute_distances_two_loops(X)
        else:
            raise ValueError('Invalid value %d for num_loops' % num_loops)

        return self.predict_labels(dists, k=k)

    def compute_distances_two_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a nested loop over both the training data and the
        test data.

        Inputs:
        - X: A numpy array of shape (num_test, D) containing test data.

        Returns:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          is the Euclidean distance between the ith test point and the jth training
          point.
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            for j in range(num_train):
                #####################################################################
                # TODO: #
                # Compute the l2 distance between the ith test point and the jth #
                # training point, and store the result in dists[i, j]. You should #
                # not use a loop over dimension. #
                #####################################################################
                dists[i, j] = np.sqrt(np.sum((X[i, :] - self.X_train[j, :]) ** 2))
                #####################################################################
                # END OF YOUR CODE #
                #####################################################################
        return dists

    def compute_distances_one_loop(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a single loop over the test data.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            #######################################################################
            # TODO: #
            # Compute the l2 distance between the ith test point and all training #
            # points, and store the result in dists[i, :]. #
            #######################################################################
            dists[i, :] = np.sqrt(np.sum(np.square(self.X_train - X[i, :]), axis=1))
            #######################################################################
            # END OF YOUR CODE #
            #######################################################################
        return dists

    def compute_distances_no_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using no explicit loops.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        #########################################################################
        # TODO: #
        # Compute the l2 distance between all test points and all training #
        # points without using any explicit loops, and store the result in #
        # dists. #
        # #
        # You should implement this function using only basic array operations; #
        # in particular you should not use functions from scipy. #
        # #
        # HINT: Try to formulate the l2 distance using matrix multiplication #
        # and two broadcast sums. #
        #########################################################################
        dists = np.multiply(np.dot(X, self.X_train.T), -2)
        sq1 = np.sum(np.square(X), axis=1, keepdims=True)
        sq2 = np.sum(np.square(self.X_train), axis=1)
        dists = np.add(dists, sq1)
        dists = np.add(dists, sq2)
        dists = np.sqrt(dists)
        #########################################################################
        # END OF YOUR CODE #
        #########################################################################
        return dists

    def predict_labels(self, dists, k=1):
        """
        Given a matrix of distances between test points and training points,
        predict a label for each test point.

        Inputs:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          gives the distance between the ith test point and the jth training point.

        Returns:
        - y: A numpy array of shape (num_test,) containing predicted labels for the
          test data, where y[i] is the predicted label for the test point X[i].
        """
        num_test = dists.shape[0]
        y_pred = np.zeros(num_test)
        for i in range(num_test):
            # A list of length k storing the labels of the k nearest neighbors to
            # the ith test point.
            closest_y = []
            #########################################################################
            # TODO: #
            # Use the distance matrix to find the k nearest neighbors of the ith #
            # testing point, and use self.y_train to find the labels of these #
            # neighbors. Store these labels in closest_y. #
            # Hint: Look up the function numpy.argsort. #
            #########################################################################
            y_indicies = np.argsort(dists[i, :], axis=0)
            #########################################################################
            # TODO: #
            # Now that you have found the labels of the k nearest neighbors, you #
            # need to find the most common label in the list closest_y of labels. #
            # Store this label in y_pred[i]. Break ties by choosing the smaller #
            # label. #
            #########################################################################
            closest_y = self.y_train[y_indicies[:k]]

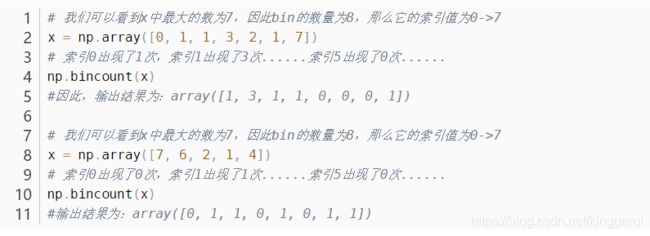

            y_pred[i] = np.argmax(np.bincount(closest_y))
            #########################################################################
            # END OF YOUR CODE #
            #########################################################################
        return y_pred

Understanding the code

The exercise computes the L2 distance in three ways. First, with two loops:

    def compute_distances_two_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a nested loop over both the training data and the
        test data.

        Inputs:
        - X: A numpy array of shape (num_test, D) containing test data.

        Returns:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          is the Euclidean distance between the ith test point and the jth training
          point.
        """
        num_test = X.shape[0]  # length of X's first dimension, i.e. num_test (see the docstring above)
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            for j in range(num_train):
                #####################################################################
                # TODO: #
                # Compute the l2 distance between the ith test point and the jth #
                # training point, and store the result in dists[i, j]. You should #
                # not use a loop over dimension. #
                #####################################################################
                # X[i, :] is a slice: it takes everything at index i along the first
                # dimension, i.e. the whole i-th row (0-based), here the i-th sample.
                # kNN needs the distance from each test point to every training point,
                # i.e. all pairwise distances between the test set and the training set.
                # L2 is the Euclidean distance: sum the squared differences over all
                # dimensions, then take the square root.
                dists[i, j] = np.sqrt(np.sum((X[i, :] - self.X_train[j, :]) ** 2))
                #####################################################################
                # END OF YOUR CODE #
                #####################################################################
        return dists
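To make the two-loop computation concrete, here is a minimal sketch on toy data. The arrays `X_test_toy` and `X_train_toy` are made up for illustration and are not part of the assignment; the distances are easy to check by hand because the points form 3-4-5 triangles.

```python
import numpy as np

# Hypothetical toy data: 2 test points and 3 training points in 2-D.
X_test_toy = np.array([[0.0, 0.0], [3.0, 4.0]])
X_train_toy = np.array([[3.0, 4.0], [0.0, 0.0], [6.0, 8.0]])

dists_toy = np.zeros((X_test_toy.shape[0], X_train_toy.shape[0]))
for i in range(X_test_toy.shape[0]):
    for j in range(X_train_toy.shape[0]):
        # Difference of the two rows, squared element-wise, summed, then rooted.
        diff = X_test_toy[i, :] - X_train_toy[j, :]
        dists_toy[i, j] = np.sqrt(np.sum(diff ** 2))

print(dists_toy)
# [[ 5.  0. 10.]
#  [ 0.  5.  5.]]
```

Row i of the result holds the distances from test point i to every training point, which is the layout `predict_labels` relies on.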

Then with one loop:

    def compute_distances_one_loop(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using a single loop over the test data.

        Input / Output: Same as compute_distances_two_loops
        """
        # The next three lines are the same as in the two-loop version
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        for i in range(num_test):
            #######################################################################
            # TODO: #
            # Compute the l2 distance between the ith test point and all training #
            # points, and store the result in dists[i, :]. #
            #######################################################################
            # axis=1 sums along each row (over the D feature columns), giving one
            # value per training point.
            # self.X_train - X[i, :] relies on numpy broadcasting:
            # (num_train, D) - (D,) -> (num_train, D)
            dists[i, :] = np.sqrt(np.sum(np.square(self.X_train - X[i, :]), axis=1))
            #######################################################################
            # END OF YOUR CODE #
            #######################################################################
        return dists
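The one-loop version depends on how broadcasting lines up shapes, so a small shape check may help. This is a sketch with made-up arrays (`X_train_toy` and `x` are illustrative names, not from the assignment).

```python
import numpy as np

X_train_toy = np.arange(12, dtype=float).reshape(4, 3)  # 4 training points, D = 3
x = np.array([1.0, 1.0, 1.0])                           # a single test point, shape (3,)

# Broadcasting: x is subtracted from every row of X_train_toy.
diff = X_train_toy - x                                  # (4, 3) - (3,) -> (4, 3)

# axis=1 collapses the feature dimension, leaving one distance per training point.
row_dists = np.sqrt(np.sum(np.square(diff), axis=1))    # shape (4,)

print(diff.shape, row_dists.shape)  # (4, 3) (4,)
```

Summing with `axis=0` instead would collapse the training points and leave one value per feature, which is not what kNN needs here.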

Without loops:

    def compute_distances_no_loops(self, X):
        """
        Compute the distance between each test point in X and each training point
        in self.X_train using no explicit loops.

        Input / Output: Same as compute_distances_two_loops
        """
        num_test = X.shape[0]
        num_train = self.X_train.shape[0]
        dists = np.zeros((num_test, num_train))
        #########################################################################
        # TODO: #
        # Compute the l2 distance between all test points and all training #
        # points without using any explicit loops, and store the result in #
        # dists. #
        # #
        # You should implement this function using only basic array operations; #
        # in particular you should not use functions from scipy. #
        # #
        # HINT: Try to formulate the l2 distance using matrix multiplication #
        # and two broadcast sums. #
        #########################################################################
        # Idea: (a - b)^2 = a^2 + b^2 - 2ab
        dists = np.multiply(np.dot(X, self.X_train.T), -2)
        # -2 * X . X_train.T ==> shape (num_test, num_train)
        sq1 = np.sum(np.square(X), axis=1, keepdims=True)
        # np.square squares each element.
        # keepdims=True: without it the result is a 1-D array of shape (num_test,);
        # with it the result has shape (num_test, 1), i.e. a column.
        sq2 = np.sum(np.square(self.X_train), axis=1)
        # Shape (num_train,): a 1-D array, since keepdims is not set here.
        dists = np.add(dists, sq1)
        # Adding a (num_test, 1) column to the matrix broadcasts as well:
        # sq1[i] is added to every entry of row i.
        dists = np.add(dists, sq2)
        # Adding a 1-D array of shape (num_train,) treats it as a row vector and
        # broadcasts it: sq2[j] is added to every entry of column j.
        dists = np.sqrt(dists)
        #########################################################################
        # END OF YOUR CODE #
        #########################################################################
        return dists
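The no-loop version rests on the expansion (a-b)^2 = a^2 + b^2 - 2ab applied to whole vectors. Written out for one test row and one training row (the symbols $x_i$, $y_j$ and $D$ are chosen here for illustration), the derivation is:

```latex
\begin{aligned}
\lVert x_i - y_j \rVert^2
  &= \sum_{d=1}^{D} (x_{id} - y_{jd})^2 \\
  &= \sum_{d=1}^{D} x_{id}^2 + \sum_{d=1}^{D} y_{jd}^2 - 2 \sum_{d=1}^{D} x_{id}\, y_{jd} \\
  &= \lVert x_i \rVert^2 + \lVert y_j \rVert^2 - 2\, x_i^{\top} y_j
\end{aligned}
```

The three terms correspond to `sq1` (one value per test point), `sq2` (one value per training point) and the matrix product `-2 * X.dot(X_train.T)`, which holds every $x_i^{\top} y_j$ at once. Taking the element-wise square root of the sum gives `dists`.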

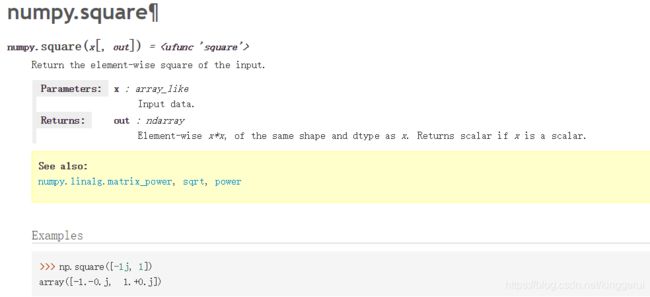
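One way to gain confidence in the no-loop version above is to compare it with a direct computation on random data. The sketch below uses made-up array names and sizes. It also clips the squared distances at zero before the square root, which is an addition of mine rather than part of the original code: floating-point round-off can leave tiny negative values, and `np.sqrt` of those would give NaN.

```python
import numpy as np

rng = np.random.default_rng(0)           # fixed seed; toy data only
X_test_toy = rng.normal(size=(5, 8))     # 5 test points, D = 8
X_train_toy = rng.normal(size=(7, 8))    # 7 training points

# Reference: explicit pairwise differences, (5, 1, 8) - (1, 7, 8) -> (5, 7, 8).
ref = np.sqrt(np.sum((X_test_toy[:, None, :] - X_train_toy[None, :, :]) ** 2, axis=2))

# Vectorized expansion: |x|^2 + |y|^2 - 2 x.y
cross = X_test_toy @ X_train_toy.T                               # (5, 7)
sq_test = np.sum(np.square(X_test_toy), axis=1, keepdims=True)   # (5, 1)
sq_train = np.sum(np.square(X_train_toy), axis=1)                # (7,)
sq_dists = sq_test + sq_train - 2 * cross                        # (5, 7)

# Assumption: clip round-off negatives so the square root stays real.
fast = np.sqrt(np.maximum(sq_dists, 0.0))

print(np.allclose(ref, fast))  # True
```

The reference version builds a (num_test, num_train, D) intermediate array, so it is only practical for small inputs; the expansion avoids that array entirely.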

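A note on numpy.square:

Applied to a matrix, it returns a matrix of the same shape in which each element is the square of the element at the same position in the input.

In numpy's reshape(-1, 1), the -1 is an unspecified value: numpy infers that dimension from the total number of elements. So reshape(-1, 1) produces a single column, and likewise reshape(1, -1) produces a single row.

For reference: https://blog.csdn.net/wld914674505/article/details/80460042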
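A short sketch of the shapes involved, using a made-up 3x2 matrix `A` and vector `v`. It shows what `keepdims=True` changes and what `reshape(-1, 1)` and `reshape(1, -1)` produce.

```python
import numpy as np

A = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])    # shape (3, 2)

squared = np.square(A)                                 # same shape, element-wise squares
s_flat = np.sum(squared, axis=1)                       # shape (3,):   [ 5. 25. 61.]
s_col = np.sum(squared, axis=1, keepdims=True)         # shape (3, 1): a column

v = np.arange(6)                                       # shape (6,)
col = v.reshape(-1, 1)                                 # shape (6, 1), -1 inferred as 6
row = v.reshape(1, -1)                                 # shape (1, 6), -1 inferred as 6

print(squared.shape, s_flat.shape, s_col.shape, col.shape, row.shape)
# (3, 2) (3,) (3, 1) (6, 1) (1, 6)
```

`s_flat.reshape(-1, 1)` gives the same array as `s_col`, so either form can be used to get the column needed for broadcasting.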

Prediction part:

    def predict_labels(self, dists, k=1):
        """
        Given a matrix of distances between test points and training points,
        predict a label for each test point.

        Inputs:
        - dists: A numpy array of shape (num_test, num_train) where dists[i, j]
          gives the distance between the ith test point and the jth training point.

        Returns:
        - y: A numpy array of shape (num_test,) containing predicted labels for the
          test data, where y[i] is the predicted label for the test point X[i].
        """
        num_test = dists.shape[0]  # number of rows in dists
        y_pred = np.zeros(num_test)
        for i in range(num_test):
            # A list of length k storing the labels of the k nearest neighbors to
            # the ith test point.
            closest_y = []
            #########################################################################
            # TODO: #
            # Use the distance matrix to find the k nearest neighbors of the ith #
            # testing point, and use self.y_train to find the labels of these #
            # neighbors. Store these labels in closest_y. #
            # Hint: Look up the function numpy.argsort. #
            #########################################################################
            # argsort returns the indices that would sort the values (here, the
            # distances) in ascending order.
            # dists[i, :] is the whole i-th row.
            # Note that y_indicies holds indices, not distances.
            # Since dists has shape (num_test, num_train), the first entries of
            # y_indicies are the training points nearest to this test point.
            y_indicies = np.argsort(dists[i, :], axis=0)
            #########################################################################
            # TODO: #
            # Now that you have found the labels of the k nearest neighbors, you #
            # need to find the most common label in the list closest_y of labels. #
            # Store this label in y_pred[i]. Break ties by choosing the smaller #
            # label. #
            #########################################################################
            # [:k] takes the first k entries (positions 0 to k-1).
            # np.bincount counts how often each label occurs, and argmax returns the
            # index of the largest count. Class labels are non-negative integers, so
            # that index is the label itself. On a tie argmax returns the first
            # maximum, i.e. the smaller label.
            closest_y = self.y_train[y_indicies[:k]]  # labels of the k nearest training points
            y_pred[i] = np.argmax(np.bincount(closest_y))
            #########################################################################
            # END OF YOUR CODE #
            #########################################################################
        return y_pred
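The voting step can be traced by hand on a tiny example. The distances and labels below are made up so that two labels tie at k = 4, which shows how `argmax` breaks the tie.

```python
import numpy as np

dists_row = np.array([0.4, 0.1, 0.3, 0.2])   # distances from one test point (toy values)
y_train_toy = np.array([3, 3, 1, 1])         # labels of the 4 training points
k = 4

order = np.argsort(dists_row)        # indices sorted by distance: [1 3 2 0]
closest = y_train_toy[order[:k]]     # labels of the k nearest:    [3 1 1 3]
counts = np.bincount(closest)        # votes per label 0..3:       [0 2 0 2]
pred = np.argmax(counts)             # first maximum wins, so the smaller label

print(order, closest, counts, pred)  # [1 3 2 0] [3 1 1 3] [0 2 0 2] 1
```

Labels 1 and 3 both get two votes, and `np.argmax` returns the first index holding the maximum, so the prediction is 1. Note that `np.bincount` requires non-negative integer input, which holds for the class labels used in this assignment.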

Cross-validation part

Complete code

num_folds = 5
k_choices = [1, 3, 5, 8, 10, 12, 15, 20, 50, 100]

X_train_folds = []
y_train_folds = []
################################################################################
# TODO: #
# Split up the training data into folds. After splitting, X_train_folds and #
# y_train_folds should each be lists of length num_folds, where #
# y_train_folds[i] is the label vector for the points in X_train_folds[i]. #
# Hint: Look up the numpy array_split function. #
################################################################################
# Your code
y_train_ = y_train.reshape(-1, 1)

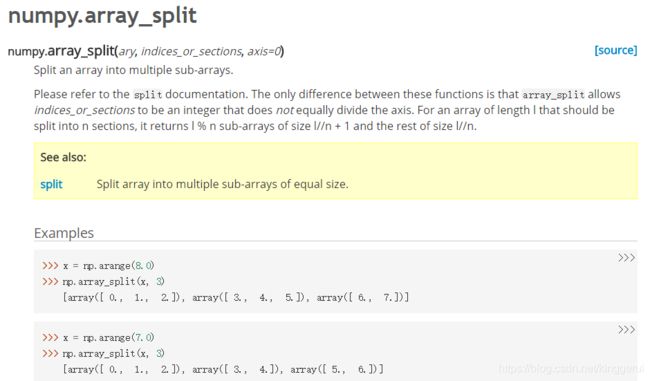

X_train_folds = np.array_split(X_train, num_folds)
y_train_folds = np.array_split(y_train, num_folds)
################################################################################
# END OF YOUR CODE #
################################################################################

# A dictionary holding the accuracies for different values of k that we find
# when running cross-validation. After running cross-validation,
# k_to_accuracies[k] should be a list of length num_folds giving the different
# accuracy values that we found when using that value of k.
k_to_accuracies = {}

################################################################################
# TODO: #
# Perform k-fold cross validation to find the best value of k. For each #
# possible value of k, run the k-nearest-neighbor algorithm num_folds times, #
# where in each case you use all but one of the folds as training data and the #
# last fold as a validation set. Store the accuracies for all fold and all #
# values of k in the k_to_accuracies dictionary. #
################################################################################
# Your code
for k in k_choices:
    accuracies = []
    for i in range(num_folds):
        X_test_cv = X_train_folds[i]
        X_train_cv = np.vstack(X_train_folds[:i] + X_train_folds[i+1:])
        y_test_cv = y_train_folds[i]
        y_train_cv = np.hstack(y_train_folds[:i] + y_train_folds[i+1:])
        classifier.train(X_train_cv, y_train_cv)
        dists_cv = classifier.compute_distances_no_loops(X_test_cv)
        y_test_pred = classifier.predict_labels(dists_cv, k)
        num_correct = np.sum(y_test_pred == y_test_cv)
        accuracies.append(float(num_correct) * num_folds / num_training)
    k_to_accuracies[k] = accuracies
################################################################################
# END OF YOUR CODE #
################################################################################

# Print out the computed accuracies
for k in sorted(k_to_accuracies):
    for accuracy in k_to_accuracies[k]:
        print('k = %d, accuracy = %f' % (k, accuracy))
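The accuracy line `float(num_correct) * num_folds / num_training` is the same as dividing by the fold size only when every fold holds exactly `num_training / num_folds` samples. A variant that divides by the length of the validation fold avoids that assumption. The sketch below uses made-up labels and predictions to show the computation; it is an alternative, not the code used above.

```python
import numpy as np

# Toy validation labels and predictions (predict_labels returns a float array).
y_val = np.array([0, 1, 1, 2, 2, 2])
y_pred = np.array([0, 1, 2, 2, 2, 0], dtype=float)

num_correct = np.sum(y_pred == y_val)        # element-wise comparison, then count
accuracy = float(num_correct) / len(y_val)   # fraction correct in this fold

print(num_correct, accuracy)
```

Four of the six predictions match here, so the fold accuracy is 4/6. Comparing a float array with an integer array works because numpy compares by value.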

Understanding the code

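# y_train_ is a column-shaped copy of the labels; it is not used below,
# since the split works on y_train directly.
y_train_ = y_train.reshape(-1, 1)
X_train_folds = np.array_split(X_train, num_folds)
y_train_folds = np.array_split(y_train, num_folds)

for k in k_choices:
    accuracies = []
    for i in range(num_folds):
        X_test_cv = X_train_folds[i]
        # Why vstack here and hstack below follows from the shapes of the data:
        # each X fold is 2-D with shape (n, D), so the folds are stacked row-wise;
        # each y fold is 1-D with shape (n,), so the folds are joined end to end.
        X_train_cv = np.vstack(X_train_folds[:i] + X_train_folds[i+1:])
        y_test_cv = y_train_folds[i]
        y_train_cv = np.hstack(y_train_folds[:i] + y_train_folds[i+1:])
        classifier.train(X_train_cv, y_train_cv)
        dists_cv = classifier.compute_distances_no_loops(X_test_cv)
        y_test_pred = classifier.predict_labels(dists_cv, k)
        num_correct = np.sum(y_test_pred == y_test_cv)
        # Equals num_correct / fold_size when all folds have the same size.
        accuracies.append(float(num_correct) * num_folds / num_training)
    k_to_accuracies[k] = accuracies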
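The fold handling can be checked on a small array. This sketch uses made-up data (`X_toy`, `y_toy`) with 10 samples, and holds out fold 1 to show what the list slicing plus `vstack`/`hstack` produces.

```python
import numpy as np

num_folds = 5
X_toy = np.arange(20, dtype=float).reshape(10, 2)   # 10 samples, D = 2
y_toy = np.arange(10) % 2                           # labels 0/1

# array_split returns a Python list of arrays, one per fold.
X_folds = np.array_split(X_toy, num_folds)          # 5 arrays of shape (2, 2)
y_folds = np.array_split(y_toy, num_folds)          # 5 arrays of shape (2,)

i = 1                                               # hold out fold 1 for validation
X_val, y_val = X_folds[i], y_folds[i]
# List concatenation drops fold i; vstack/hstack then merge the remaining folds.
X_tr = np.vstack(X_folds[:i] + X_folds[i + 1:])     # shape (8, 2)
y_tr = np.hstack(y_folds[:i] + y_folds[i + 1:])     # shape (8,)

print(X_val.shape, y_val.shape, X_tr.shape, y_tr.shape)  # (2, 2) (2,) (8, 2) (8,)
```

`X_folds[:i] + X_folds[i + 1:]` works because `np.array_split` returns a list, so `+` concatenates lists rather than adding arrays element-wise.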