【R语言】文本挖掘-情感分析

做中文文本挖掘一定会看到Rwordseg包,但是这是使用R以来遇见过最难安装的一个包,没有之一!!(可能将来还会遇见之二,毕竟本主还处于菜鸟级别)

低版本安装办法如下,曾经用R2.8的时候安装成功过,but,用R3.2.4就fail了

install.packages("Rwordseg", repos = "http://R-Forge.R-project.org")

install.packages("Rwordseg", repos = "http://R-Forge.R-project.org", type = "source")解压缩,copy到R的library文件夹里。成功了!!

library(Rwordseg)

segmentCN('我昨天买了10斤苹果',returnType = 'tm') 以下是对某景区点评内容的情感分析。

library(rJava)

library(Rwordseg)

setwd("D:\\Documents\\work\\myself_learn\\情感分析\\ntusd")

###################获取点评数据###################

p_comment_data<-read.csv("1黄山风景区_3.2.3. 语义分析(积极).csv",stringsAsFactors=FALSE)

n_comment_data<-read.csv("1黄山风景区_3.2.3. 语义分析(消极).csv",stringsAsFactors=FALSE)

comment_data<-rbind(p_comment_data,n_comment_data)

###################分句###################

data<-comment_data$comment

pattern_list="[,]|[,]|[.]|[?]|[?]|[:]|[:]|[~]|[~]|[…]|[!]|[!]"

#pattern_list="[.]|[?]|[?]|[~]|[~]|[…]|[!]|[!]"

replace_data1<-gsub(pattern="-", replacement="", data)

replace_data<-gsub(pattern=pattern_list, replacement="。", replace_data1)

split_data<-unlist(strsplit(replace_data,"[。]"))

del_num_data<-gsub("[0-9 0123456789]","",split_data)

is.vector(del_num_data)

del_num_data<-as.data.frame(del_num_data,stringsAsFactors=FALSE)

is.data.frame(del_num_data)

str(del_num_data)

names(del_num_data)<-"comment"

comment_sentence_data<-subset(del_num_data,comment!="")

#write.csv(split_data,"split_comment.csv",row.names = FALSE)

#write.csv(del_num_data,"del_num_data_comment.csv",row.names = FALSE)

#write.csv(comment_sentence_data,"comment_sentence_data.csv",row.names = FALSE)

#############################################

#negative <-readLines("ntusd-negative.txt",encoding = "UTF-8")

#positive <-readLines("ntusd-positive.txt",encoding = "UTF-8")

dict<-read.csv("dict_黄山.csv",stringsAsFactors=FALSE)

nega_data <-dict$negetive

posi_data<-dict$positive

insertWords(dict$insert_words)

#segmentCN('我去年自驾游,只是玩了大半个中国',returnType = 'tm')

comment_split <-segmentCN(comment_sentence_data$comment) #分词

head(comment_split)

#write.txt(comment_split,"comment_split.txt",row.names = FALSE)

#write.table(comment_split,"comment_split.txt")

comment_split[3]

comment_split[[1]]

comment_split[[1]][4]

is.list(comment_split)

getEmotionalType <- function(x,pwords,nwords){

emotionType <-numeric(0)

xLen <-length(x)

emotionType[1:xLen]<- 0

index <- 1

while(index <=xLen){

yLen <-length(x[[index]])

index2 <- 1

while(index2<= yLen){

if(length(pwords[pwords==x[[index]][index2]]) >= 1){

emotionType[index] <- emotionType[index] +1

}else if(length(nwords[nwords==x[[index]][index2]]) >= 1){

emotionType[index] <- emotionType[index] - 1

}

index2<- index2 + 1

}

#获取进度

if(index%%100==0){

print(round(index/xLen,3))

}

index <-index +1

}

emotionType

}

EmotionRank <-getEmotionalType(comment_split,posi_data,nega_data)

#getEmotionalType(commentTemp,positive,negative)

comment<-as.data.frame(comment_sentence_data$comment)

EmotionRank<-as.data.frame(EmotionRank)

emotionresult_data<-cbind(comment,EmotionRank)

write.csv(emotionresult_data,"emotionresult_data.csv",row.names = FALSE)

##############校验##############

positive[positive==commentTemp[[7]][6]]

negative[negative==commentTemp[[7]][6]]

commentTemp[7]

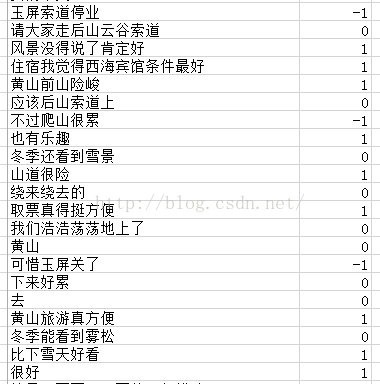

情感分析结果:

此算法比较适合简单句,对于双重否定的复杂句子处理能力比较差,有待优化。

哈哈,至少这次让我了解了情感分析的基本原理,曾经以为是一种非常高深的挖掘算法,然并卵,so easy,从此开始对所有精度不过关的情感分析,抱平和的心态。

参考网址:

http://www.tuicool.com/articles/amaY3iz

http://download.csdn.net/detail/hfutxrg/1063945