pointpillars----nuscene多分类篇

nuScence Evaluation metrics

- mAP:准确率

We use the well-known Average Precision metric, but define a match by considering the 2D center distance on the ground plane rather than intersection over union based affinities. Specifically, we match predictions with the ground truth objects that have the smallest center-distance up to a certain threshold. For a given match threshold we calculate average precision (AP) by integrating the recall vs precision curve for recalls and precisions > 0.1. We finally average over match thresholds of {0.5, 1, 2, 4} meters and compute the mean across classes

大致含义:

(1)官方将采用中心距离最小来确定预测和gt之间匹配

(2)对reall和percision都>0.1的进行积分计算AP

(3)mAP计算和距离相关

- mATE:translation

Euclidean center distance in 2D in meters.

在二维上中心的欧式距离

- mASE:scalel

Calculated as 1 - IOU after aligning centers and orientation.

在对其方向后和中心后计算的1 - IOU(也许就是IOU吧)

- mAOE:orientation

Smallest yaw angle difference between prediction and ground-truth in radians. Orientation error is evaluated at 360 degree for all classes except barriers where it is only evaluated at 180 degrees. Orientation errors for cones are ignored.

(1)预测的方向和gt之间最小的弧度误差。

(2)barriers 为180度内,其余的都是360度,同时锥形体不计算这个。

- mAVE:velocity

Absolute velocity error in m/s. Velocity error for barriers and cones are ignored.

预测的速度误差

- mAAE: attribute

Calculated as 1 - acc, where acc is the attribute classification accuracy. Attribute error for barriers and cones are ignored.

属性分类精度。

- NDS(nuScenes detection score)

We consolidate the above metrics by computing a weighted sum: mAP, mATE, mASE, mAOE, mAVE and mAAE. As a first step we convert the TP errors to TP scores as TP_score = max(1 - TP_error, 0.0). We then assign a weight of 5 to mAP and 1 to each of the 5 TP scores and calculate the normalized sum.

AP和剩下的几种测量55开加权

任务

The goal of this task is to place a 3D bounding box around 10 different object categories, as well as estimating a set of attributes and the current velocity vector.(差不多10类别检测+预测速度)

1.数据预处理

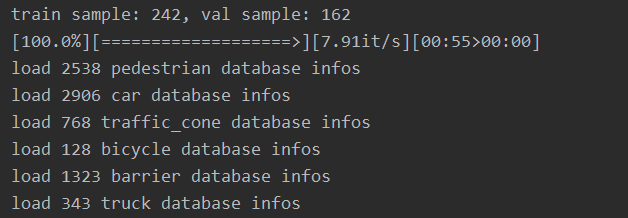

- 先采用Mini_trainal数据集。

- 数据分割:

python create_data.py nuscenes_data_prep --data_path=NUSCENES_TRAINVAL_DATASET_ROOT --version="v1.0-trainval" --max_sweeps=10 --dataset_name="NuScenesDataset"

python create_data.py nuscenes_data_prep --data_path=NUSCENES_TEST_DATASET_ROOT --version="v1.0-test" --max_sweeps=10

在对Test数据集做分割时,是要把下面这句代码注释掉的。

create_groundtruth_database(dataset_name, root_path, Path(root_path) / name)

2 run

按照代码中README中讲的,跑起来就可以了:

python ./pytorch/train.py train --config_path=./configs/nuscenes/all.fhd.config --model_dir=/path/to/model_dir

3 使用作者预训练好的模型测试

python train.py evaluate

--config_path=../configs/nuscenes/all.pp.mhead.config

--model_dir=/home/sn/pointpillars/second/nuScence_model/

--measure_time=True

--batch_size=1

需要注意一点的是,这里选用的nuscence的测试文件需要是v1.0的版本

4 读代码

在前面架构篇,网络结构篇,loss篇的基础上向下讲。

配置文件采用的是在nuscenes中唯一一个使用Multihead的文件:all.pp.mhead.config

网络配置:

-

network_class_name: "VoxelNetNuscenesMultiHead"

voxel_feature_extractor:PillarFeatureNetRadius

middle_feature_extractor:PointPillarsScatter

rpn:RPNNoHead

4.1 前馈过程

前馈过程一样分三个过程

- voxel_feature_extractor

- spatial_features

- rpn_out

4.1.1 voxel_feature_extractor

(1)进去的shape是 [ n u m − p o i n t s , 60 , 4 ] [num-points,60,4] [num−points,60,4],经过该层次变成 [ n u m − p o i n t s , 64 ] [num-points,64] [num−points,64]

(2)debugPillarFeatureNetRadius:第一,输入的 [ n u m − p o i n t s , 60 , 4 ] [num-points,60,4] [num−points,60,4]的大小,对前三个维度 ( x , y , z ) (x,y,z) (x,y,z)求均值

第二,每个voxel中的点减去均值点得到相应的局部信息 f − c l u s t e r [ n u m − p o i n t s , 60 , 3 ] f-cluster[num-points,60,3] f−cluster[num−points,60,3]

第三,计算 x , y x,y x,y两个轴的半径信息,对应的二范数,再和剩下的z轴和r信息concate得到features_radius [ n u m − p o i n t s , 60 , 3 ] [num-points,60,3] [num−points,60,3]

第四,计算每个voxel的中心f_center(只是针对x和y而言的) [ n u m − p o i n t s , 60 , 2 ] [num-points,60,2] [num−points,60,2],最后将上述的半径特征features_radius(3),局部信息特征f_cluster(3)和中心特征f_center(2)结合features_ls [ n u m − p o i n t s , 60 , 8 ] [num-points,60,8] [num−points,60,8](3+3+2)

然后呢,作者又进入了一层叫做pfn的特征提取层,其具体结构是 n n . L i n e a r + B a t c h N o r m 1 d + R e L U nn.Linear+BatchNorm1d+ReLU nn.Linear+BatchNorm1d+ReLU,此时的x的shape为 [ n u m − p o i n t s , 60 , 64 ] [num-points,60,64] [num−points,60,64],然后再进过一层 m a x − p o o l i n g max-pooling max−pooling得到 [ n u m − p o i n t s , 1 , 64 ] [num-points,1,64] [num−points,1,64]

也就是说最后的features成了 [ n u m − p o i n t s , 64 ] [num-points,64] [num−points,64].

4.1.2 spatial_features

上一层的特征voxel_features大小为 [ n u m − p o i n t s , 64 ] [num-points,64] [num−points,64]这是针对点的特征提取,需要解决的第一个问题就是如何回到分块的voxel中 ( 400 × 400 × 64 ) (400×400×64) (400×400×64)。

有一点之前没理解的变量:coords [ n u m − p o i n t s , 4 ] [num-points,4] [num−points,4],最后的这个4不是表示坐标,而是对应着[batchsize,x_voxels,y_voxel,z_voxel]。也就是没一点对应的在哪一个voxel的索引.,也就是说这个变量是整合了一个batch_size中所有的点在同一个变量中

(1)建立一个整个点云空间大小的voxel的变量canvas [ 160000 , 64 ] [160000,64] [160000,64]

(2)找到那些点在canvas的索引indices。

(3)把上一层得到的特征赋值给这里的canvas

(4)对一个batch的所有pc都进行了这样的操作后,就得到了包含点云信息的3D特征集合 [ b a t c h − s i z e , 64 , 400 , 400 ] [batch-size,64,400,400] [batch−size,64,400,400]

4.1.3-1 rpn_out

- 先是

RPNNoHeadBase,也就是和前面的三大网络篇在RPN中的最开始那一个结构,这里跳过。

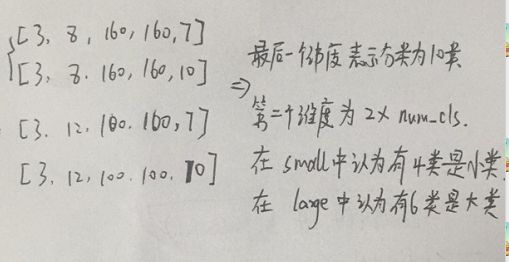

最后输出的结构如下,也就是下采样和上采样每一次的输出,以及最后组合了三层上采样得到的输出out[ b a t c h s i z e , 384 , 160 , 160 ] [batch_size,384,160,160] [batchsize,384,160,160]

4.1.3-2 multi-head

紧接上面的rpn_out

- 作者首先通过下采样的第一层的输出 [ b a t c h − s i z e , 64 , 200 , 200 ] [batch-size,64,200,200] [batch−size,64,200,200]进行预测小物体,在此之前,先裁剪周围信息变成 [ b a t c h − s i z e , 64 , 160 , 160 ] [batch-size,64,160,160] [batch−size,64,160,160],然后执行:

small = self.small_head(r1)

这里很机智的利用了网络前馈的层次结构。

(1)该函数对应着函数

class SmallObjectHead(nn.Module):

(2)函数forward过程如下图:

- 大物体检测

作者这里直接采用的是对综合了所有的上采样层的rpn_out["out"]进行大物体预测。

large = self.large_head(rpn_out["out"])

函数定义为:

class DefaultHead(nn.Module):

也就是最普遍的那个预测方式了。结果如下:

"box_preds": torch.cat([large["box_preds"], small["box_preds"]], dim=1),

"cls_preds": torch.cat([large["cls_preds"], small["cls_preds"]], dim=1),

那么需要理解第二个维度上的含义:

上述补充一点,在定义大小物体时,作者定义的小物体是:{“pedestrian”, “traffic_cone”, “bicycle”, “motorcycle”, “barrier”},作者定义的大物体是{“car”, “truck”, “trailer”, “bus”, “construction_vehicle”}.每一种物体都具有各自定义的旋转朝向,有的有两个,有的是一个,这也就是上图的dim=1维度不一样的原因。如下计算,前五个加起来就是小物体的8,后五个加起来就是大物体的12,对于trailer有些奇特,它的whl是六个维度, [ 3 , 15 , 3.8 , 2 , 3 , 3.8 ] [3, 15, 3.8, 2, 3, 3.8] [3,15,3.8,2,3,3.8],让人费解。

| 物体 | 朝向个数 |

|---|---|

| pedestrian | 无朝向预测,为1:[0] |

| traffic_cone | 无朝向预测,为1:[0] |

| bicycle | 有朝向预测,为2:[0, 1.57] |

| motorcycle | 有朝向预测,为2:[0, 1.57] |

| barrier | 有朝向预测,为2:[0, 1.57] |

| car | 有朝向预测,为2:[0, 1.57] |

| truck | 有朝向预测,为2:[0, 1.57] |

| trailer | 有朝向预测,为4:[0, 1.57] |

| bus | 有朝向预测,为2:[0, 1.57] |

| construction_vehicle | 有朝向预测,为2:[0, 1.57] |

计算代码如下:也就是whl乘以rotat。因此trailer是4.因为rotat=2,whl=6/3=2,因此2*2=4。over!!

def num_anchors_per_localization(self):

num_rot = len(self._rotations)

num_size = np.array(self._sizes).reshape([-1, 3]).shape[0]

return num_rot * num_size

- 为什么

trailer有两组的ehl呢。