- One

- Two

- Three

- Four

爬虫知识点---微信搜狗---xpath--pyquery--csselect--正则--bs4

1. 微信搜狗 大神的代码

import requests, re, pymongo, time

from fake_useragent import UserAgent

from urllib.parse import urlencode

from pyquery import PyQuery

from requests.exceptions import ConnectionError

client = pymongo.MongoClient('localhost')

db = client['weixin1']

key_word = 'python开发'

connection_count = 0 # 连接列表页失败的次数

connection_detail_count = 0# 连接列表页失败的次数

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:60.0) Gecko/20100101 Firefox/60.0',

'Cookie': 'CXID=161A70BF2483DEF017E035BBBACD2A81; ad=Hkllllllll2b4PxFlllllV7W9VGlllll$ZMXqZllll9llllljCxlw@@@@@@@@@@@; SUID=57A70FAB5D68860A5B1E1053000BC731; IPLOC=CN4101; SUV=1528705320668261; pgv_pvi=5303946240; ABTEST=5|1528705329|v1; SNUID=EF1FB713B7B2D9EE6E2A6351B8B3F072; weixinIndexVisited=1; sct=2; SUIR=F607AE0BA0A5CFF9D287956DA129A225; pgv_si=s260076544; JSESSIONID=aaaILWONRn9wK_OiUhlnw; PHPSESSID=1i38a2ium8e5th2ukhnufua6r1; ppinf=5|1528783576|1529993176|dHJ1c3Q6MToxfGNsaWVudGlkOjQ6MjAxN3x1bmlxbmFtZToxODolRTklQUQlOTQlRTklOTUlOUN8Y3J0OjEwOjE1Mjg3ODM1NzZ8cmVmbmljazoxODolRTklQUQlOTQlRTklOTUlOUN8dXNlcmlkOjQ0Om85dDJsdUtPQzE0d05mQkJFeUI2d1VJVkhZUE1Ad2VpeGluLnNvaHUuY29tfA; pprdig=ENOZrtvLfoIOct75SgASWxBJb8HJQztLgFbyhRHBfeqrzcirg5WQkKZU2GDCFZ5wLI93Wej3P0hCr_rST0AlvGpF6MY9h24P267oHdqJvgP2DmCHDr2-nYvkLqKs8bjA7PLM1IEHNaH4zK-q2Shcz2A8V5IDw0qEcEuasGxIZQk; sgid=23-35378887-AVsfYtgBzV8cQricMOyk9icd0; ppmdig=15287871390000007b5820bd451c2057a94d31d05d2afff0',

}

def get_proxy():

try:

response = requests.get("http://127.0.0.1:5010/get/")

if response.status_code == 200:

return response.text

return None

except Exception as e:

print('获取代理异常:',e)

return None

def get_page_list(url):

global connection_count

proxies = get_proxy()

print('列表页代理:', proxies)

# 请求url,获取源码

if proxies != None:

proxies = {

'http':'http://'+proxies

}

try:

response = requests.get(url, allow_redirects=False, headers=headers, proxies=proxies)

if response.status_code == 200:

print('列表页{}请求成功',url)

return response.text

print('状态码:',response.status_code)

if response.status_code == 302:

# 切换代理,递归调用当前函数。

get_page_list(url)

except ConnectionError as e:

print('连接对方主机{}失败: {}',url,e)

connection_count += 1

if connection_count == 3:

return None

# 增加连接次数的判断

get_page_list(url)

def parse_page_list(html):

obj = PyQuery(html)

all_a = obj('.txt-box > h3 > a').items()

for a in all_a:

href = a.attr('href')

yield href

def get_page_detail(url):

global connection_detail_count

"""

请求详情页

:param url: 详情页的url

:return:

"""

proxies = get_proxy()

print('详情页代理:',proxies)

# 请求url,获取源码

if proxies != None:

proxies = {

'http': 'http://' + proxies

}

try:

# 注意:将重定向allow_redirects=False删除。详情页是https: verify=False,

# 注意:将重定向allow_redirects=False删除。详情页是https: verify=False,

# 注意:将重定向allow_redirects=False删除。详情页是https: verify=False,

# 注意:将重定向allow_redirects=False删除。详情页是https: verify=False,

response = requests.get(url, headers=headers, verify=False, proxies=proxies)

if response.status_code == 200:

print('详情页{}请求成功', url)

return response.text

else:

print('状态码:', response.status_code,url)

# 切换代理,递归调用当前函数。

get_page_detail(url)

except ConnectionError as e:

print('连接对方主机{}失败: {}', url, e)

connection_detail_count += 1

if connection_detail_count == 3:

return None

# 增加连接次数的判断

get_page_detail(url)

def parse_page_detail(html):

obj = PyQuery(html)

# title = obj('#activity-name').text()

info = obj('.profile_inner').text()

weixin = obj('.xmteditor').text()

print('info')

return {

'info':info,

'weixin':weixin

}

def save_to_mongodb(data):

# insert_one: 覆盖式的

db['article'].insert_one(data)

# 更新的方法:

# 参数1:指定根据什么字段去数据库中进行查询,字段的值。

# 参数2:如果经过参数1的查询,查询到这条数据,执行更新的操作;反之,执行插入的操作;$set是一个固定的写法。

# 参数3:是否允许更新

db['article'].update_one({'info': data['info']}, {'$set': data}, True)

time.sleep(1)

def main():

for x in range(1, 101):

url = 'http://weixin.sogou.com/weixin?query={}&type=2&page={}'.format(key_word, 1)

html = get_page_list(url)

if html != None:

# 详情页的url

urls = parse_page_list(html)

for url in urls:

detail_html = get_page_detail(url)

if detail_html != None:

data = parse_page_detail(detail_html)

if data != None:

save_to_mongodb(data)

if __name__ == '__main__':

main()

2.刘晓军的代码

import re, pymongo, requests,time

from urllib.parse import urlencode

from pyquery import PyQuery

# fake_useragent: 实现啦User-Agent的动态维护,利用他每次随机后去一个User-Agent的值

from fake_useragent import UserAgent

client = pymongo.MongoClient('localhost')

db = client['wx']

key_word = 'python教程'

connection_count = 0

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:60.0) Gecko/20100101 Firefox/60.0',

'Cookie': 'SNUID=74852C8923264D45D6B96E0A2369AE27; IPLOC=CN4101; SUID=29139F756119940A000000005B090D0D; ld=Okllllllll2bjy12lllllV7JRy1lllllNBDF3yllll9lllllpklll5@@@@@@@@@@; SUV=003F178A759F13295B090D0E7F8CA938; UM_distinctid=163a9f5b4cc161-0f1b3689353b65-46514133-1fa400-163a9f5b4d0176; GOTO=; SMYUV=1527823120301610; pgv_pvi=6897384448; ABTEST=0|1528708438|v1; weixinIndexVisited=1; ppinf=5|1528708459|1529918059|dHJ1c3Q6MToxfGNsaWVudGlkOjQ6MjAxN3x1bmlxbmFtZTozNTpzbWFsbGp1biVFRiVCQyU4MSVFRiVCQyU4MSVFRiVCQyU4MXxjcnQ6MTA6MTUyODcwODQ1OXxyZWZuaWNrOjM1OnNtYWxsanVuJUVGJUJDJTgxJUVGJUJDJTgxJUVGJUJDJTgxfHVzZXJpZDo0NDpvOXQybHVQWWk5LVJkU1JJRHBHemsxWUx0Q01RQHdlaXhpbi5zb2h1LmNvbXw; pprdig=XRQQE_qExWhRS1AiOrwSCaNfWYtcUCrCVODql2R_gSIvCyFpG23pefn3RHO1EOH0L5TJRNEkpYgztrXfE1NvNtpe-1QR2PXH1frohkOL8RKEwJCVfRYhz1fOXSuZf0NQxC4Y9oSCfLimSVaodrUihdiLHmLqf1erzxZkEzFHhG4; sgid=17-35471325-AVsePWtlGzxQU9rKkedgl7k; sct=7; SUIR=18E941E54F4B2115642260494FE28B36; ppmdig=1528802332000000b239e8ac3d4723d0ecc7f25b5f16b073; JSESSIONID=aaaIry_FagLEc59Thglnw'

}

def get_proxy():

try:

response = requests.get('http://127.0.0.1:5010/get/')

if response.status_code == 200:

return response.text

return None

except Exception as e:

print('获取代理异常', e)

return None

def get_page_list(url):

global connection_count

proxies = get_proxy()

print('列表页代理', proxies)

if proxies != None:

proxies = {

'http': 'http://' + proxies

}

try:

response = requests.get(url, headers=headers, allow_redirects=False, proxies=proxies)

if response.status_code == 200:

return response.text

if response.status_code == 302:

get_page_list(url)

except ConnectionError as e:

connection_count += 1

if connection_count == 3:

return None

get_page_list(url)

def parse_page_list(html):

obj = PyQuery(html)

all_a = obj('.txt-box > h3 > a').items()

for a in all_a:

href = a.attr('href')

yield href

def get_page_detail(url):

global connection_detail_count

proxies = get_proxy()

print('列表页代理', proxies)

if proxies != None:

proxies = {

'http': 'http://' + proxies

}

try:

response = requests.get(url, headers=headers, verify=False, proxies=proxies)

if response.status_code == 200:

return response.text

if response.status_code == 302:

get_page_list(url)

except ConnectionError as e:

connection_detail_count += 1

if connection_count == 3:

return None

get_page_list(url)

def parse_page_detail(html):

obj = PyQuery(html)

info = obj('#img-content > h2').text()

weixinID = re.findall(re.compile(r'.*?(.*?)', re.S),html)

return {

'info': info,

'weixinID': weixinID

}

def save_to_mongodb(data):

db['article'].update_one({'info': data['info']}, {'$set': data}, True)

time.sleep(1)

def main():

for x in range(1, 101):

url = 'http://weixin.sogou.com/weixin?query={}&type=2&page={}'.format(key_word, 1)

html = get_page_list(url)

if html != None:

urls = parse_page_list(html)

for url in urls:

detail_html = get_page_detail(url)

if detail_html != None:

data = parse_page_detail(detail_html)

if data != None:

save_to_mongodb(data)

if __name__ == '__main__':

main()

3.xpath

# xpath:跟re, bs4, pyquery一样,都是页面数据提取方法。根据元素的路径来查找页面元素。

# pip install lxml

# element tree: 文档树对象

from lxml.html import etree

html = """

"""

obj = etree.HTML(html)

# HTML 用于 HTML

# fromstring 用于 XML

# 将一个Html文件解析成为对象。

# obj = etree.parse('index.html')

print(type(obj))

# //ul: 从obj中查找ul,不考虑ul所在的位置。

# /li: 找到ul下边的直接子元素li,不包含后代元素。

# [@class="one"]: 给标签设置属性,用于过滤和筛选

# xpath()返回的是一个列表

one_li = obj.xpath('//ul/li[@class="one"]')[0]

# 获取one_li的文本内容

print(one_li.xpath('text()')[0])

# 上述写法的合写方式

print(obj.xpath('//ul/li[@class="one"]/text()')[0])

# 获取所有li的文本内容:all_li = obj.xpath('//ul/li/text()')

# 获取包含某些属性的标签元素

print(obj.xpath('//ul/li[contains(@class,"four3")]'))

# 获取同时包含id和class两个属性元素的写法为

//div[@class='abc'][@id='123']

xpath组合查询: . 表示文本

# @class, @id

# . 表示文本内容

detail_url = weibo.xpath('.//a[contains(., "原文评论[")]/@href').extract_first('')

# 获取谁的属性就直接@谁,例如想要获取a标签中href属性的值: /a/@href

# 获取所有li的文本内容以及class属性的值

all_li = obj.xpath('//ul/li')for li in all_li: class_value = li.xpath('@class')[0]

text_value = li.xpath('text()')[0]

print(class_value, text_value)

# 获取div标签内部的所有文本

# //text():获取所有后代元素的文本内容

# /text():获取直接子元素的文本,不包含后代元素print(obj.xpath('//div[@id="inner"]//text()'))

# 获取ul中第一个li [1]: 位置print(obj.xpath('//ul/li[1]/text()'))此时的位置 1 不是从0 开始的,更不是索引值

# 查找类名中包含four的li的文本内容print(obj.xpath('//ul/li[contains(@class, "four")]/text()'))

# 作业:

# 利用xpath爬取百度贴吧内容 https://tieba.baidu.com/p/3164192117

# 利用xpath爬取猫眼电影Top100的内容 http://maoyan.com/board/4# mongodb, mysql, xlwt, csv(获取的数据是正常的,存入csv时乱码)# {'content': '最后巴西捧杯。4年后我会回来!

', 'sub_content': ['xxx', 'xxx', 'xxxx']}

4.pyquery

# pyquery :仿照jquery语法,封装一个包,和bs4有点类似

from pyquery import PyQuery

html = """

- One

- Two

- Three

- Four

"""

# 利用Pyquery类,对html这个文档进行序列化,结果是一个文档对象

doc_obj = PyQuery(html)

print(type(doc_obj))

# 查找元素的方法

ul = doc_obj('.list') # 从doc_obj这个对象中根据类名匹配元素

# print(ul) # ul 是一个对象

# print(type(ul))

# 从ul 中查找a

print(ul('a'))

# 当前元素对象.find(): 在当前对象中查找后代元素

# 当前元素对象.chrildren(): 在当前对象中查找直接子元素

print(ul.find('a'), '后代元素')

# 父元素的查找

# parent(): 直接父元素

# parents(): 所有的父元素

a = ul('a')

print(a.parent('#inner'), '直接父元素')

print(a.parents(), '所有父元素')

# 兄弟元素的查找,不包含自己,和自己同一级的兄弟标签

li = doc_obj('.one')

print(li.siblings(),'所有siblings')

print(li.siblings('.two'), '我是siblings')

# 遍历元素

ul = doc_obj('.list')

# generator object

res = ul('li').items()

print(res,'我是res')

for li in res:

print(li,'哈哈哈')

# 获取标签对象的文本内容

print(li.text(), '文本内容')

# 获取标签属性

print(li.attr('class'), '属性')等同于 或 print(li.attr.class)

使用CSS选择特定的标签

#使用CSS3 特定的伪类选择器,选择特定的标签

#用例: 伪类选择器

html = ‘‘‘

first item

second item

third item

fourth item

fifth item

5.csselect

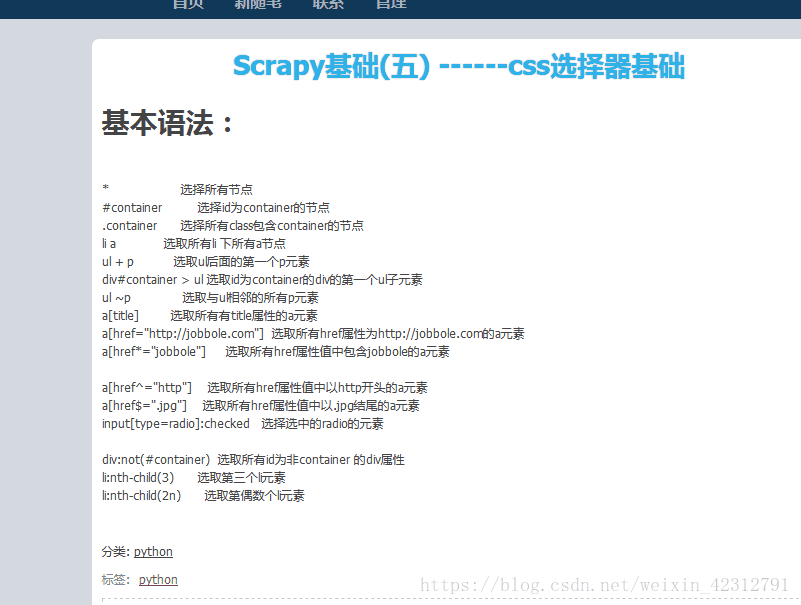

# cssselector:和xpath是使用比较多的两种数据提取方式。

# scrapy爬虫框架:支持xpath/css

# pyspider爬虫框架:支持PyQuery,也是通过css样式选择器实现的

# pip install cssselector

import cssselect

from lxml.html import etree

html = """

- 哈哈

- Two

- Three

- Four

"""

html_obj = etree.HTML(html)

span = html_obj.cssselect('.list > .four')[0]

print(span.text) # 获取文本内容

# print(help(span))# 查找方法

# print(span.attrib['id']) # 获取属性:是一个字

# csv:

xpath和cssselector之间的差别

在scrapy中这样用 其他按照正常的来

a.属性名 来表明a的

6.正则

import re

# re: 用于提取字符串内容的模块。

# 1> 创建正则对象;2> 匹配查找;3> 提取数据保存;

string = """