Dynamic Redis and Dynamic Druid Data Sources Based on Apollo

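My company decided to adopt Apollo, the configuration center open-sourced by Ctrip, for dynamic configuration, and I was asked to make the data source and Redis settings changeable at runtime. I took on the task with some trepidation, but there was nothing for it but to get started.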

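Apollo is a configuration management platform developed by Ctrip's framework department. It centralizes the configuration of an application across environments and clusters, pushes configuration changes to the application in real time, and provides proper permission control and workflow governance.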

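Installing and using Apollo is not covered here. If you are unsure how to install or configure it, you can use the demo that Ctrip provides. On to the point: Ctrip already publishes an example of swapping a data source dynamically, and I simply followed its steps. The example is at https://github.com/ctripcorp/apollo-use-cases/tree/master/dynamic-datasource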

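Ctrip's example uses a HikariCP data source, and this post follows the same approach with Druid. The author of that example has also written a blog post about it: http://www.kailing.pub/article/index/arcid/198.html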

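The core idea is to provide a custom data source that holds the real data source in a field and then replace that field when needed. A Redis connection factory can be handled the same way. What we need to do is: create the connection factory or data source, swap it in for the current one, and listen for configuration changes so that the first two steps run again whenever the configuration changes.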
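The three steps above (create, swap, listen) can be sketched without any Apollo or Spring dependency. The block below is a minimal illustration only: `Pool`, `Swappable`, and `onConfigChange` are hypothetical names invented for this sketch, the JDBC URLs are placeholders, and in the real project the callback would be driven by Apollo's change notification rather than called by hand.

```java
import java.util.Collections;
import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Function;

final class HotSwapDemo {

    /** Hypothetical stand-in for a connection pool built from configuration. */
    static final class Pool implements AutoCloseable {
        final String url;
        volatile boolean closed;

        Pool(String url) { this.url = url; }

        @Override
        public void close() { closed = true; }
    }

    /** Holds the live resource and replaces it when the configuration changes. */
    static final class Swappable<T extends AutoCloseable> {
        private final Function<Map<String, String>, T> factory;
        private final AtomicReference<T> current;

        Swappable(Map<String, String> initialConfig, Function<Map<String, String>, T> factory) {
            this.factory = factory;
            this.current = new AtomicReference<>(factory.apply(initialConfig));
        }

        /** Callers always fetch the resource through the holder, never cache it. */
        T get() { return current.get(); }

        /** Would be invoked by the configuration listener. */
        void onConfigChange(Map<String, String> newConfig) {
            T fresh = factory.apply(newConfig);   // step 1: create from the new settings
            T old = current.getAndSet(fresh);     // step 2: swap atomically
            try {
                old.close();                      // release the replaced resource
            } catch (Exception e) {
                System.err.println("Failed to close the previous resource: " + e);
            }
        }
    }

    public static void main(String[] args) {
        Swappable<Pool> holder = new Swappable<>(
                Collections.singletonMap("url", "jdbc:mysql://db-a/test"),
                cfg -> new Pool(cfg.get("url")));
        Pool before = holder.get();
        holder.onConfigChange(Collections.singletonMap("url", "jdbc:mysql://db-b/test"));
        System.out.println("old closed: " + before.closed + ", live url: " + holder.get().url);
    }
}
```

Building the new resource before swapping means a failure in the factory leaves the current resource untouched, which is probably what you want when a bad value is published by mistake.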

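The core connection factory and data source code follows (the full code is in my GitHub repository, https://github.com/antianchi/apollo-demo-datasource). It is a Spring Boot project and can be run directly from its main method.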

package com.an.config;

import java.io.PrintWriter;
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.SQLFeatureNotSupportedException;
import java.util.concurrent.atomic.AtomicReference;
import java.util.logging.Logger;

import javax.sql.DataSource;

/**
 * A DataSource that delegates every call to a replaceable target.
 */
public class DynamicDataSource implements DataSource {

    // The type parameter is required; a raw AtomicReference would not compile below.
    private final AtomicReference<DataSource> dataSource;

    public DynamicDataSource(DataSource dataSource) {
        this.dataSource = new AtomicReference<>(dataSource);
    }

    /**
     * Set the new data source and return the previous one.
     */
    public DataSource setDataSource(DataSource newDataSource) {
        return dataSource.getAndSet(newDataSource);
    }

    @Override
    public PrintWriter getLogWriter() throws SQLException {
        return dataSource.get().getLogWriter();
    }

    @Override
    public void setLogWriter(PrintWriter out) throws SQLException {
        dataSource.get().setLogWriter(out);
    }

    @Override
    public void setLoginTimeout(int seconds) throws SQLException {
        dataSource.get().setLoginTimeout(seconds);
    }

    @Override
    public int getLoginTimeout() throws SQLException {
        return dataSource.get().getLoginTimeout();
    }

    @Override
    public Logger getParentLogger() throws SQLFeatureNotSupportedException {
        return dataSource.get().getParentLogger();
    }

    @Override
    public <T> T unwrap(Class<T> iface) throws SQLException {
        return dataSource.get().unwrap(iface);
    }

    @Override
    public boolean isWrapperFor(Class<?> iface) throws SQLException {
        return dataSource.get().isWrapperFor(iface);
    }

    @Override
    public Connection getConnection() throws SQLException {
        return dataSource.get().getConnection();
    }

    @Override
    public Connection getConnection(String username, String password)
            throws SQLException {
        return dataSource.get().getConnection(username, password);
    }
}

The Redis connection factory follows:

package com.an.config;

import java.util.Map;
import java.util.concurrent.atomic.AtomicReference;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.BeansException;
import org.springframework.context.ApplicationContext;
import org.springframework.context.ApplicationContextAware;
import org.springframework.dao.DataAccessException;
import org.springframework.data.redis.connection.RedisClusterConnection;
import org.springframework.data.redis.connection.RedisConnection;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.connection.RedisSentinelConnection;

/**
 * A RedisConnectionFactory that delegates every call to a replaceable target.
 */
public class DynamicRedisConnectionFactory implements RedisConnectionFactory, ApplicationContextAware {

    private static final Logger LOGGER = LoggerFactory.getLogger(DynamicRedisConnectionFactory.class);

    // The type parameter is required; a raw AtomicReference would not compile below.
    private final AtomicReference<RedisConnectionFactory> redisConnectionFactory;

    private ApplicationContext applicationContext;

    // @Autowired
    // private RedisAccessor accessor;

    public DynamicRedisConnectionFactory(RedisConnectionFactory redisConnectionFactory) {
        this.redisConnectionFactory = new AtomicReference<>(redisConnectionFactory);
    }

    /**
     * Set the new connection factory and return the previous one.
     */
    public RedisConnectionFactory getAndSet(RedisConnectionFactory connectionFactory) {
        // Alternative kept for reference: replace the factory on every RedisTemplate bean.
        // Map<String, RedisTemplate> beansOfType = applicationContext.getBeansOfType(RedisTemplate.class);
        // for (Map.Entry<String, RedisTemplate> entry : beansOfType.entrySet()) {
        //     LOGGER.info("Replacing connection factory on RedisTemplate bean [{}]", entry.getKey());
        //     entry.getValue().setConnectionFactory(connectionFactory);
        // }
        return redisConnectionFactory.getAndSet(connectionFactory);
    }

    @Override
    public DataAccessException translateExceptionIfPossible(RuntimeException ex) {
        return redisConnectionFactory.get().translateExceptionIfPossible(ex);
    }

    @Override
    public RedisConnection getConnection() {
        return redisConnectionFactory.get().getConnection();
    }

    @Override
    public RedisClusterConnection getClusterConnection() {
        return redisConnectionFactory.get().getClusterConnection();
    }

    @Override
    public boolean getConvertPipelineAndTxResults() {
        return redisConnectionFactory.get().getConvertPipelineAndTxResults();
    }

    @Override
    public RedisSentinelConnection getSentinelConnection() {
        return redisConnectionFactory.get().getSentinelConnection();
    }

    @Override
    public void setApplicationContext(ApplicationContext applicationContext)
            throws BeansException {
        this.applicationContext = applicationContext;
    }
}
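Both wrappers hand back the previous delegate from their swap method (`setDataSource` and `getAndSet`), which leaves the caller responsible for releasing it. Closing it immediately could cut off work that is still running on a connection borrowed just before the swap, so one option is to release it after a short grace period. The sketch below shows that idea with the JDK scheduler only; `DelegateRetirer`, `retire`, and the 100 ms delay are hypothetical choices for illustration and are not part of the original project.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

final class DelegateRetirer {

    // A daemon thread, so a pending cleanup never keeps the JVM alive on shutdown.
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor(runnable -> {
                Thread thread = new Thread(runnable, "delegate-retirer");
                thread.setDaemon(true);
                return thread;
            });

    /** Closes the replaced delegate once the grace period has elapsed. */
    ScheduledFuture<?> retire(AutoCloseable previous, long graceMillis) {
        return scheduler.schedule(() -> {
            try {
                previous.close();
            } catch (Exception e) {
                System.err.println("Failed to close the previous delegate: " + e);
            }
        }, graceMillis, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) throws Exception {
        DelegateRetirer retirer = new DelegateRetirer();
        AtomicBoolean closed = new AtomicBoolean(false);
        // The lambda stands in for the object returned by setDataSource / getAndSet.
        retirer.retire(() -> closed.set(true), 100).get();
        System.out.println("previous delegate closed: " + closed.get());
    }
}
```

Because `AutoCloseable` has a single method, a delegate that is disposed through some other call (for example a `destroy()` method from Spring's `DisposableBean`) can be passed as a lambda that invokes it.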

- 配置本地配置文件:

application.yml,需要配置apollo上项目的app.id.

debug: true

server:

port: 8888

app:

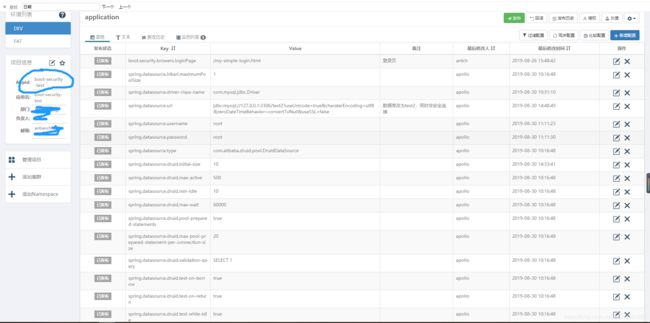

id: boot-security-test- apollo上的配置:

boot.security.browers.loginPage = /my-simple-login.html

# hikari specific settings

spring.datasource.hikari.maximumPoolSize = 1

spring.datasource.driver-class-name = com.mysql.jdbc.Driver

spring.datasource.url = jdbc:mysql://127.0.0.1:3306/test2?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=false

spring.datasource.username = root

spring.datasource.password = root

spring.datasource.type = com.alibaba.druid.pool.DruidDataSource

spring.datasource.druid.initial-size = 10

spring.datasource.druid.max-active = 500

spring.datasource.druid.min-idle = 10

spring.datasource.druid.max-wait = 60000

spring.datasource.druid.pool-prepared-statements = true

spring.datasource.druid.max-pool-prepared-statement-per-connection-size = 20

spring.datasource.druid.validation-query = SELECT 1

spring.datasource.druid.test-on-borrow = true

spring.datasource.druid.test-on-return = true

spring.datasource.druid.test-while-idle = true

spring.datasource.druid.time-between-eviction-runs-millis = 60000

spring.datasource.druid.max-evictable-idle-time-millis = 600000

spring.datasource.druid.filters = stat,wall

spring.datasource.druid.remove-abandoned-timeout = 60000

spring.datasource.druid.remove-abandoned = true

# redis

spring.redis.lettuce.pool.max-active = 500

spring.redis.lettuce.pool.max-wait = 10000

spring.redis.lettuce.pool.min-idle = 10

spring.redis.lettuce.pool.max-idle = 100

spring.redis.lettuce.shutdown-timeout = 5000

spring.redis.database = 0

spring.redis.host = redis.eastcom.io

spring.redis.port = 6379

spring.redis.password = AS@redis

spring.datasource.druid.min-evictable-idle-time-millis = 30000
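Since the data source keys and the Redis keys above live side by side, a change listener has to decide which resource a given change affects; otherwise editing one Redis setting would also rebuild the database pool. A minimal sketch of routing by key prefix follows. It assumes the changed keys arrive as a set of strings (Apollo's `ConfigChangeEvent.changedKeys()` provides one); `ChangeRouter`, `Target`, and `targetsFor` are hypothetical names for this illustration.

```java
import java.util.Arrays;
import java.util.EnumSet;
import java.util.HashSet;
import java.util.Set;

final class ChangeRouter {

    /** The resources that can be rebuilt at runtime. */
    enum Target { DATA_SOURCE, REDIS }

    /** Maps the changed keys to the resources that need to be rebuilt. */
    static EnumSet<Target> targetsFor(Set<String> changedKeys) {
        EnumSet<Target> targets = EnumSet.noneOf(Target.class);
        for (String key : changedKeys) {
            if (key.startsWith("spring.datasource.")) {
                targets.add(Target.DATA_SOURCE);
            } else if (key.startsWith("spring.redis.")) {
                targets.add(Target.REDIS);
            }
            // Any other key (e.g. boot.security.*) needs no rebuild.
        }
        return targets;
    }

    public static void main(String[] args) {
        Set<String> changed = new HashSet<>(Arrays.asList(
                "spring.redis.host", "spring.redis.port", "boot.security.browers.loginPage"));
        System.out.println(targetsFor(changed));
    }
}
```

The listener would then create and swap only the resources named in the returned set.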

- Start the application (the address of Apollo's meta server, or of its config service, must be passed as a JVM argument):

-Dapp.id=boot-security-test

-Dapollo.configService=http://dev.apollo.io/

Thanks for reading. If you have any suggestions, please leave a comment!