应用 WIDER FACE数据集 训练Faster R-CNN,进行人脸检测

上一篇:Faster R-CNN Github源码 tensorflow GPU下demo运行,训练,测试,验证,可视化。

本博文的测试环境都是与上一篇的相同,唯一的区别是tensorflow-gpu版本变为1.8.0。

其实,tensorflow-gpu1.7.0/1.8.0亦可运行。

应用的Faster R-CNN源码为:endernewton

本博文分为以下四章节:

- WIDER FACE数据集准备

- 创建WIDER FACE数据集读取路径,修改源码数据接口

- 训练网络

- 测试,得出mAP

一、WIDER FACE数据集准备

1.点击:http://mmlab.ie.cuhk.edu.hk/projects/WIDERFace/,进入网页,下载如下两个数据集和数据集注解。或者点击如下文字,进入百度网盘下载。

- WIDER Face Training Images

- WIDER Face Validation Images

- Face annotations

2.将下载的三个zip压缩包文件放在新建的一个文件夹,进行解压。

WIDER_train和WIDER_val文件夹下的是62个场景下的.jpg格式图片。

wider_face_split文件夹下的是训练、测试、验证数据集的注释文件。打开readme.txt,查看ground truth的格式:

x1, y1, w, h, blur, expression, illumination, invalid, occlusion, pose。

x1,x2左上角坐标,w,h表示ground truth的宽长,后面这些参数就不用理了(blur人脸模糊程度,expression..)。

3.制作VOC2007格式数据(VOC2007数据集格式,点击查看)

准备条件:安装有opencv,应用指令:pip install opencv-python 安装。

1)获取训练数据集的路径

file_path = "/home/fa/Documents/WinderFace/wider_face_split/wider_face_train_bbx_gt.txt"

image_path = "/home/fa/Documents/WinderFace/"

num_line = 0

with open(file_path) as fr:

image_name = fr.readline()

while image_name:

line_dict = image_name.split(".")

if line_dict[-1].strip("\n") == "jpg":

with open(image_path + "image_path.txt", mode='a') as fw:

fw.write(image_name)

image_name = fr.readline()file_path:训练数据集的注释文件路径

image_path:训练数据集各图片的路径保存位置

最终生成image_path.txt文件。

2)更改图片名字(其实是重新生成新的图片):

import cv2

img_path = "/home/fa/Documents/WinderFace/WIDER_trainl/images/"

file_path = "/home/fa/Documents/WinderFace/img_test_path.txt"

img_store_path = "/home/fa/Documents/WinderFace/image_train"

img_num = 12880

with open(file_path) as fr:

line = fr.readline()

print(line)

while line:

line = line.strip("\n")

img_num = img_num + 1

print(img_path + line)

img = cv2.imread(img_path + line)

if image.data:

print(img_store_path + "{:05d}.jpg".format(img_num))

cv2.imwrite(img_store_path + "{:05d}.jpg".format(img_num), img)

else:

print("loading image appearing error!")

print("The num of image: %d" % img_num)

line = fr.readline()3)生成注解文件:

file_path = "/home/fa/Documents/WinderFace/wider_face_split/wider_face_train_bbx_gt.txt"

store_path = "//home/fa/Documents/WinderFace/Annotations/"

image_index = 0

with open(file_path) as fr:

image_name = fr.readline()

while image_name:

line_dict = image_name.split(".")

if line_dict[-1].strip("\n") == "jpg":

image_index = image_index + 1

print(image_name)

objs = fr.readline()

obj_num = int(objs.strip("\n"))

with open(store_path + "{:05d}.txt".format(image_index), mode='a') as fw:

fw.write(str(obj_num))

fw.write("\n")

for i in range(obj_num):

line = fr.readline()

with open(store_path + "{:05d}.txt".format(image_index), mode='a') as fw:

line = line.strip("\n").split(" ")

bbox_gt = line[:4]

bbox_gt = bbox_gt[0]+" "+bbox_gt[1]+" "\

+str(int(bbox_gt[2])+int(bbox_gt[0]))+" "+str(int(bbox_gt[3])+int(bbox_gt[1]))

fw.write(bbox_gt)

fw.write("\n")

image_name = fr.readline()4)生成图片序号:

file_path = "/home/fa/Documents/WinderFace/trainval.txt"

with open(file_path, mode='a') as fw:

for image_index in range(12880):

fw.write(str(image_index+1))

fw.write("\n")

数据格式到此制作完毕!

二、创建WIDER FACE数据集读取路径,修改源码数据接口

1.在/home/fa/tf-faster-rcnn/data下新建VOCdevkit/VOC2007文件夹,将上述生成的文件分别拷贝到如下目录:

./VOCdevkit/VOC2007/Annotations(注释文件复制在该文件夹下)

./VOCdevkit/VOC2007/ImageSets/Main/trainval.txt(图片序列号文件)

./VOCdevkit/VOC2007/JPRGImages/(将更名后的12880张图片复制在该文件夹下)

2.为PASCAL VOC数据集创建符号链接

cd $tf-faster-rcnn/data

ln -s $VOCdevkit VOCdevkit20073.更改/home/fa/tf-faster-rcnn-face/lib/datasets/pascal_voc.py文件

主要修改两个地方,分别是:

检测的类型改为:self._class=('__backgroud__', 'face')

读取注释文件def _load_pascal_annotation(self, index)函数改为如下:

def _load_pascal_annotation(self, index):

"""

Load image and bounding boxes info from XML file in the PASCAL VOC

format.

"""

filename = os.path.join(self._data_path, 'Annotations', index + '.txt')

# tree = ET.parse(filename)

# objs = tree.findall('object')

# if not self.config['use_diff']:

# # Exclude the samples labeled as difficult

# non_diff_objs = [

# obj for obj in objs if int(obj.find('difficult').text) == 0]

# # if len(non_diff_objs) != len(objs):

# # print 'Removed {} difficult objects'.format(

# # len(objs) - len(non_diff_objs))

# objs = non_diff_objs

#更改读取注释文件

with open(filename) as fr:

num_line = fr.readline()

face_num = int(num_line.strip("\n"))

num_objs = face_num

boxes = np.zeros((num_objs, 4), dtype=np.uint16)

gt_classes = np.zeros((num_objs), dtype=np.int32)

overlaps = np.zeros((num_objs, self.num_classes), dtype=np.float32)

# "Seg" area for pascal is just the box area

# seg_areas = np.zeros((num_objs), dtype=np.float32)

# Load object bounding boxes into a data frame.

num_objs = [obj for obj in range(num_objs)]

for ix, obj in enumerate(num_objs):

box_gt = fr.readline()

box_gt = box_gt.strip("\n").split(" ")

x1 = float(int(box_gt[0]))

y1 = float(int(box_gt[1]))

x2 = float(int(box_gt[2]))

y2 = float(int(box_gt[3]))

cls = self._class_to_ind["face"]

boxes[ix, :] = [x1, y1, x2, y2]

gt_classes[ix] = cls

overlaps[ix, cls] = 1.0

# seg_areas[ix] = (x2 - x1 + 1) * (y2 - y1 + 1)

overlaps = scipy.sparse.csr_matrix(overlaps)

return {'boxes': boxes,

'gt_classes': gt_classes,

'gt_overlaps': overlaps,

'flipped': False,

'seg_areas': 0}

另:数据集中的注释有些地方是错的,需要在imdb.py文件的def append_flipped_images(self)函数增加三行代码:

def append_flipped_images(self):

num_images = self.num_images

widths = self._get_widths()

for i in range(num_images):

boxes = self.roidb[i]['boxes'].copy()

oldx1 = boxes[:, 0].copy()

oldx2 = boxes[:, 2].copy()

boxes[:, 0] = widths[i] - oldx2 - 1

boxes[:, 2] = widths[i] - oldx1 - 1

#增加判断,因为注释有错

for b in range(len(boxes)):

if boxes[b][2] < boxes[b][0]:

boxes[b][0] = 0

assert (boxes[:, 2] >= boxes[:, 0]).all()

entry = {'boxes': boxes,

'gt_overlaps': self.roidb[i]['gt_overlaps'],

'gt_classes': self.roidb[i]['gt_classes'],

'flipped': True}

self.roidb.append(entry)

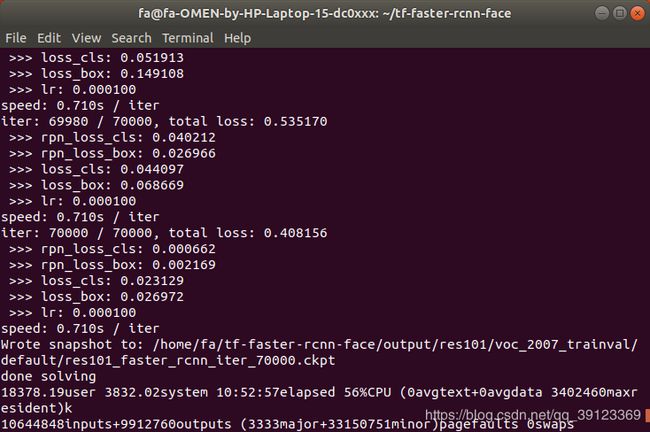

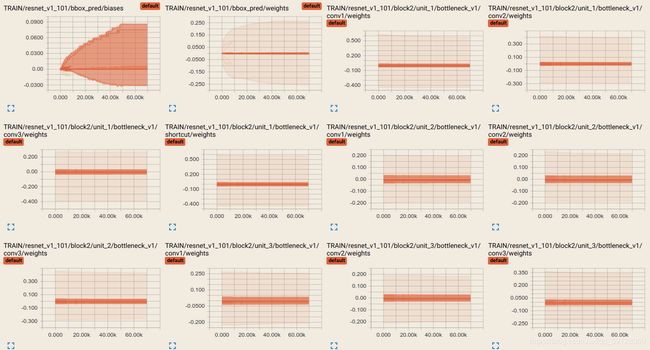

self._image_index = self._image_index * 2三、训练网络

1.进入/home/fa/tf-faster-rcnn/data文件夹:

./experiments/scripts/train_faster_rcnn.sh 0 pascal_voc res101训练过程需要的条件,参考上一篇博文。

total loss 10K次以后一直在波动,并没又很好的减少,遇到了瓶颈!

2.人脸检测效果

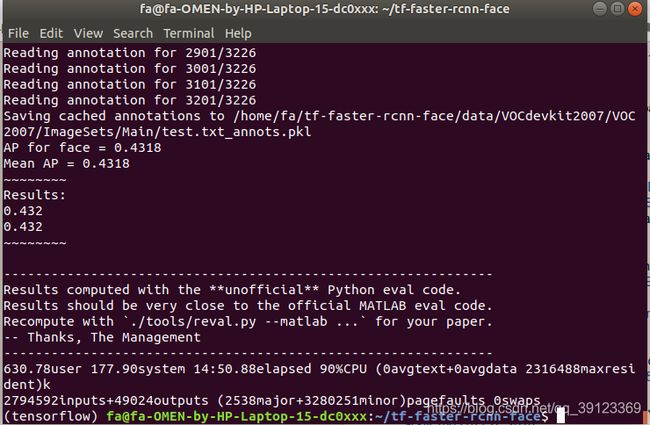

四、测试得出mAp

mAP...

待续补充: