强化学习之迷宫问题(MC, Sarsa, Q-learning实现)

通过简易迷宫问题,理解Monte-Carlo, Sarsa和Q-learning算法

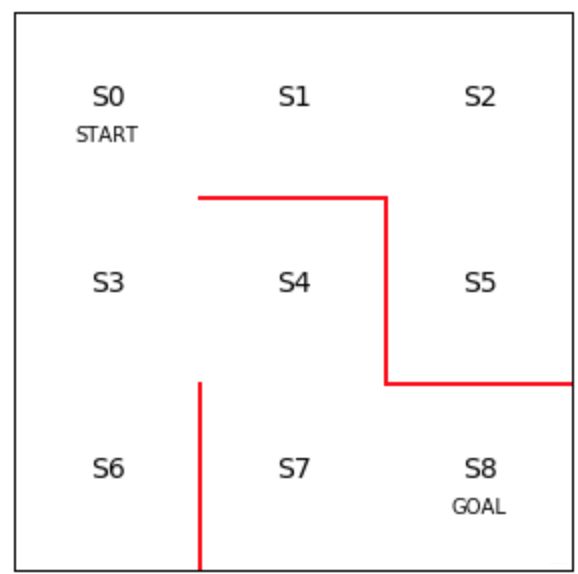

3 × 3 3\times3 3×3的迷宫如下

可以通过每一步都随机地走,直到走到S8为止

这里主要写通过强化学习,找到最佳路线

MC算法:

首先定义一个policy,它是一个矩阵,行代表S1~S8

列代表action的4个方向,这里定义方向为↑、→、↓、←的顺序

policy定义为 π θ ( s , a ) \pi_{\theta}(s, a) πθ(s,a), 其中 θ \theta θ是决定 π \pi π的参数,首先定义初始 θ 0 \theta_{0} θ0

θ 0 \theta_{0} θ0矩阵中值为1代表这个方向可以走,如果是墙壁,就定义为np.nan

#row: S0~S7, column: ↑、→、↓、←

#theta: parameter of policy pi(s, a)

theta_0 = np.array([[np.nan, 1, 1, np.nan], #S0

[np.nan, 1, np.nan, 1], #S1

[np.nan, np.nan, 1, 1], #S2

[1, 1, 1, np.nan], #S3

[np.nan, np.nan, 1, 1], #S4

[1, np.nan, np.nan, np.nan], #S5

[1, np.nan, np.nan, np.nan], #S6

[1, 1, np.nan, np.nan], #S7

#S8 is goal, no policy

])

下面由 θ 0 \theta_{0} θ0求 π θ ( s , a ) \pi_{\theta}(s, a) πθ(s,a),可以单纯求比例,也可以用softmax

soft公式如下:

P ( θ i ) = e x p ( β θ i ) ∑ i = 1 N a e x p ( β θ i ) P(\theta_{i}) = \frac{exp(\beta\theta_{i})}{\sum_{i=1}^{N_{a}}exp(\beta\theta_{i})} P(θi)=∑i=1Naexp(βθi)exp(βθi)

N a N_{a} Na表示action的种类数(4个)

#use softmax to get policy

def softmax_convert_into_pi_from_theta(theta):

beta = 1.0

[m, n] = theta.shape

pi = np.zeros((m,n))

exp_theta = np.exp(beta * theta)

for i in range(0, m):

pi[i, :] = exp_theta[i, :] /np.nansum(exp_theta[i, :])

pi = np.nan_to_num(pi)

return pi

打印初期 π θ \pi_{\theta} πθ可以看到在不同的state下不同action的概率

pi_0 = softmax_convert_into_pi_from_theta(theta_0)

print(pi_0)

#output:

#[[ 0. 0.5 0.5 0. ]

# [ 0. 0.5 0. 0.5 ]

# [ 0. 0. 0.5 0.5 ]

# [ 0.33333333 0.33333333 0.33333333 0. ]

# [ 0. 0. 0.5 0.5 ]

# [ 1. 0. 0. 0. ]

# [ 1. 0. 0. 0. ]

# [ 0.5 0.5 0. 0. ]]

通过policy来确定agent的动作

通过 π \pi π的概率来选择下一步的action和state

def get_action_and_next_s(pi, s):

direction = ["up", "right", "down", "left"]

next_direction = np.random.choice(direction, p=pi[s, :])

if next_direction == "up":

action = 0

s_next = s - 3

elif next_direction == "right":

action = 1

s_next = s + 1

elif next_direction == "down":

action = 2

s_next = s + 3

elif next_direction == "left":

action = 3

s_next = s - 1

return [action, s_next]

直到走到Goal,一直不停探索路径,到达goal的路径保存在s_a_history

def goal_maze_ret_s_a(pi):

s = 0 #start

s_a_history = [[0, np.nan]]

while(1):

[action, next_s] = get_action_and_next_s(pi, s)

s_a_history[-1][1] = action

#print([action, next_s])

s_a_history.append([next_s, np.nan]) #now don't know next action, add np.nan as next action

if next_s == 8:

break

else:

s = next_s

return s_a_history

随机走一条路径

s_a_history = goal_maze_ret_s_a(pi_0)

print(s_a_history)

#output:

#[[0, 2], [3, 0], [0, 2], [3, 0], [0, 1], [1, 3], [0, 2], [3, 0], [0, 2], [3, #1], [4, 2], [7, 0], [4, 3], [3, 1], [4, 2], [7, 1], [8, nan]]

#17 steps

MC算法需要完整的路径来更新policy,所以每次得到完整路径后去更新 θ \theta θ,通过 θ \theta θ得到 π \pi π,

重复上面的步骤直到policy收敛

θ \theta θ的更新公式:

θ s i , a j = θ s i , a j + η . Δ θ s i , a j \theta_{s_{i},a_{j}} = \theta_{s_{i},a_{j}} + \eta.\Delta\theta_{s_{i},a_{j}} θsi,aj=θsi,aj+η.Δθsi,aj

Δ θ s i , a j = { N ( s i , a j ) + P ( s i , a j ) N ( s i , a ) } / T \Delta\theta_{s_{i},a_{j}} = \{N(s_{i}, a_{j}) + P(s_{i}, a_{j})N(s_{i}, a)\}/T Δθsi,aj={N(si,aj)+P(si,aj)N(si,a)}/T

N N N表示次数, T T T表示到达goal的总step数

def update_theta(theta, pi, s_a_history):

eta = 0.1 #learning rate

T = len(s_a_history) - 1

[m, n] = theta.shape

delta_theta = theta.copy() #deep copy

for i in range(0, m):

for j in range(0, n):

if not(np.isnan(theta[i, j])):

SA_i = [SA for SA in s_a_history if SA[0] == i] #the (s,a) pair with state i

SA_ij = [SA for SA in s_a_history if SA == [i, j]] #the (s,a) pair with state i, action j

N_i = len(SA_i)

N_ij = len(SA_ij)

delta_theta[i, j] = (N_ij + pi[i, j] * N_i) / T

new_theta = theta + eta * delta_theta

return new_theta

MC算法迭代,定义收敛标准

stop_epsilon = 10**-8

theta = theta_0

pi = pi_0

is_continue = True

count = 1

while is_continue:

s_a_history = goal_maze_ret_s_a(pi)

new_theta = update_theta(theta, pi, s_a_history)

new_pi = softmax_convert_into_pi_from_theta(new_theta)

print(np.sum(np.abs(new_pi - pi)))

print("steps to reach goal is: " + str(len(s_a_history) - 1))

if np.sum(np.abs(new_pi - pi)) < stop_epsilon:

is_continue = False

else:

theta = new_theta

pi = new_pi

最后确认下收敛后的policy

np.set_printoptions(precision=3, suppress=True) #not display by e

print(pi)

#output

#[[ 0. 0. 1. 0. ]

# [ 0. 0.38 0. 0.62 ]

# [ 0. 0. 0.474 0.526]

# [ 0. 1. 0. 0. ]

# [ 0. 0. 1. 0. ]

# [ 1. 0. 0. 0. ]

# [ 1. 0. 0. 0. ]

# [ 0. 1. 0. 0. ]]

可以看到policy已经可以找到最佳路径,而未收敛的概率处是因为通过最优路径不会再采取那个action,所以仍保留初始附近的概率

Sarsa算法

名称解释,Sarsa指当前State(S), Action(a)得到即时Reward( r), 并通过下一步的State(s), Action(a)得到预期reward

定义Q矩阵,通过 ϵ − g r e e d y \epsilon-greedy ϵ−greedy方法选择下一步的action, 在每一个step都更新Q值,而不是MC算法的每个episode更新policy

最后Q矩阵收敛可以得到最优路径

Q矩阵的size和上面policy一样,行代表state,列代表action,初始值为random

但是为了保持墙壁的限制条件,让Q乘 θ 0 \theta_{0} θ0

将Q的terminal state设为0

#row: S0~S7, column: ↑、→、↓、←

#theta: policy pi(s, a)

theta_0 = np.array([[np.nan, 1, 1, np.nan], #S0

[np.nan, 1, np.nan, 1], #S1

[np.nan, np.nan, 1, 1], #S2

[1, 1, 1, np.nan], #S3

[np.nan, np.nan, 1, 1], #S4

[1, np.nan, np.nan, np.nan], #S5

[1, np.nan, np.nan, np.nan], #S6

[1, 1, np.nan, np.nan], #S7

#S8 is goal, no policy

])

[a, b] = theta_0.shape

Q = np.random.rand(a, b) * theta_0

Q[7,3]=0

print(Q)

#output:

[[ nan 0.745 0.711 nan]

[ nan 0.286 nan 0.385]

[ nan nan 0.513 0.056]

[ 0.501 0.921 0.993 nan]

[ nan nan 0.891 0.01 ]

[ 0.875 nan nan nan]

[ 0.439 nan nan nan]

[ 0.958 0.936 nan 0. ]]

ϵ − g r e e d y \epsilon-greedy ϵ−greedy(选择下一步的action):

greedy表示每次选择Q[s, :]中的最大值作为下一步的action,但是它有一个问题,就是容易陷入local optimal,所以要平衡随机探索和greedy

于是以 ϵ \epsilon ϵ的概率进行随机探索, 1 − ϵ 1-\epsilon 1−ϵ的概率采取greedy

ϵ \epsilon ϵ随着step增加而衰减

而且有一个理论,也就是说 ϵ − g r e e d y \epsilon-greedy ϵ−greedy是可以改进policy的:

for any ϵ − g r e e d y \epsilon-greedy ϵ−greedy policy π \pi π, ϵ − g r e e d y \epsilon-greedy ϵ−greedy policy π ′ \pi' π′ with respect to q π q_{\pi} qπ is an improvement. V π ′ ( s ) ≥ V π ( s ) V_{\pi'}(s) \geq V_{\pi}(s) Vπ′(s)≥Vπ(s)

证明就不写了

由于涉及到随机探索,所以仍然需要探索用的policy

这里不用softmax,而是用简单的概率

def simple_convert_into_pi_from_theta(theta):

[m, n] = theta.shape

pi = np.zeros((m, n))

for i in range(0, m):

pi[i, :] = theta[i, :] / np.nansum(theta[i, :])

pi = np.nan_to_num(pi)

return pi

pi_0 = simple_convert_into_pi_from_theta(theta_0)

实现 ϵ − g r e e d y \epsilon-greedy ϵ−greedy:

#epsilon-greedy

#get the action

def get_action(s, Q, epsilon, pi_0):

direction = ["up", "right", "down", "left"]

#print("s = " + str(s))

#probability epsilon to random search

if np.random.rand() < epsilon:

next_direction = np.random.choice(direction, p=pi_0[s, :])

else:

#move by the maximum Q

next_direction = direction[np.nanargmax(Q[s, :])]

if next_direction == "up":

action = 0

elif next_direction == "right":

action = 1

elif next_direction == "down":

action = 2

elif next_direction == "left":

action = 3

return action

#get next state by action

def get_s_next(s, a, Q, epsilon, pi_0):

direction = ["up", "right", "down", "left"]

next_direction = direction[a]

if next_direction == "up":

s_next = s - 3

elif next_direction == "right":

s_next = s + 1

elif next_direction == "down":

s_next = s + 3

elif next_direction == "left":

s_next = s - 1

return s_next

通过state, action更新Q矩阵的Sarsa算法:

推导:

μ k = 1 k ∑ j = 1 k x j \mu_{k} = \frac{1}{k}\sum_{j=1}^{k}x_{j} μk=k1∑j=1kxj

= 1 k ( x k + ∑ j = 1 k − 1 x j ) =\frac{1}{k}(x_{k} + \sum_{j=1}^{k-1}x_{j}) =k1(xk+∑j=1k−1xj)

= 1 k ( x k + ( k − 1 ) μ k − 1 ) =\frac{1}{k}(x_{k} + (k-1)\mu_{k-1}) =k1(xk+(k−1)μk−1)

= μ k − 1 + 1 k ( x k − μ k − 1 ) =\mu_{k-1} + \frac{1}{k}(x_{k} -\mu_{k-1}) =μk−1+k1(xk−μk−1)

for each state S t S_{t} St with G t G_{t} Gt:

N ( S t ) ← N ( S t ) + 1 N(S_{t})\leftarrow N(S_{t})+1 N(St)←N(St)+1

V ( S t ) ← V ( S t ) + 1 N ( S t ) ( G t − V ( S t ) ) V(S_{t})\leftarrow V(S_{t}) + \frac{1}{N(S_{t})}(G_{t}-V(S_{t})) V(St)←V(St)+N(St)1(Gt−V(St))

G t = R t + 1 + γ V ( S t + 1 ) G_{t}=R_{t+1} +\gamma V(S_{t+1}) Gt=Rt+1+γV(St+1)

得到:

Q ( s t , a t ) = Q ( s t , a t ) + η ∗ ( R t + 1 + γ Q ( s t + 1 , a t + 1 ) − Q ( s t , a t ) ) Q(s_{t}, a_{t}) = Q(s_{t}, a_{t}) + \eta*(R_{t+1} +\gamma Q(s_{t+1},a_{t+1})-Q(s_{t}, a_{t})) Q(st,at)=Q(st,at)+η∗(Rt+1+γQ(st+1,at+1)−Q(st,at))

其中 R t + 1 + γ Q ( s t + 1 , a t + 1 ) − Q ( s t , a t ) R_{t+1} +\gamma Q(s_{t+1},a_{t+1})-Q(s_{t}, a_{t}) Rt+1+γQ(st+1,at+1)−Q(st,at)叫做TD error

根据上述公式更新Q:

def Sarsa(s, a, r, s_next, a_next, Q, eta, gamma):

if s_next == 8: #the goal, next state not exist

Q[s, a] = Q[s, a] + eta * (r - Q[s, a])

else:

Q[s, a] = Q[s, a] + eta * (r + gamma*Q[s_next, a_next] - Q[s, a])

return Q

一个episode:

def goal_maze_ret_s_a_Q(Q, epsilon, eta, gamma, pi):

s = 0 #start state

a = a_next = get_action(s, Q, epsilon, pi) #get action by epsilon-greedy

s_a_history = [[0, np.nan]]

while(1): #until reach the goal

a = a_next

s_a_history[-1][1] = a

s_next = get_s_next(s, a, Q, epsilon, pi)

s_a_history.append([s_next, np.nan])

if s_next == 8:

r = 1 #get the reward

a_next = np.nan

else:

r = 0

a_next = get_action(s_next, Q, epsilon, pi)

Q = Sarsa(s, a, r, s_next, a_next, Q, eta, gamma) #update Q

if s_next == 8:

break

else:

s = s_next

return [s_a_history, Q]

完整迭代:

eta = 0.1

gamma = 0.9

epsilon = 0.5

v = np.nanmax(Q, axis=1) #select the maximum Q value for each state

is_continue = True

episode = 1

while is_continue:

print("episode: " + str(episode))

epsilon = epsilon / 2 #epsilon-greedy

[s_a_history, Q] = goal_maze_ret_s_a_Q(Q, epsilon, eta, gamma, pi_0)

new_v = np.nanmax(Q, axis=1) #maximum value for each state

print(np.sum(np.abs(new_v - v)))

v = new_v

print("steps to reach goal is: " + str(len(s_a_history) - 1))

episode = episode + 1

if episode > 100:

break

虽然写了到100 episode,但是从output看出很快就收敛到最优路径

episode: 1

0.227489819094

steps to reach goal is: 14

episode: 2

0.154785251991

steps to reach goal is: 10

episode: 3

0.0105243446365

steps to reach goal is: 4

episode: 4

0.00946282714278

steps to reach goal is: 4

episode: 5

0.00848520483778

steps to reach goal is: 4

episode: 6

0.00758386849718

steps to reach goal is: 4

episode: 7

0.0051117594454

steps to reach goal is: 4

episode: 8

看下收敛后的路径及Q矩阵

print(s_a_history)

#output:

[[0, 2], [3, 1], [4, 2], [7, 1], [8, nan]]

print(Q)

#output:

[[ nan 0.705 0.729 nan]

[ nan 0.286 nan 0.41 ]

[ nan nan 0.513 0.056]

[ 0.501 0.81 0.775 nan]

[ nan nan 0.9 0.088]

[ 0.875 nan nan nan]

[ 0.575 nan nan nan]

[ 0.928 1. nan 0. ]]

标准Sarsa只能backward一步,而Sarsa( λ ) \lambda) λ)算法一次可以更新整个路径,与Sarsa不同的是加了一个 E E E矩阵

(这个单独写)

Q-learning

Q-learning与Sarsa的区别:

对比Q的更新公式:

Sarsa:

Q ( s t , a t ) = Q ( s t , a t ) + η ∗ ( R t + 1 + γ Q ( s t + 1 , a t + 1 ) − Q ( s t , a t ) ) Q(s_{t}, a_{t}) = Q(s_{t}, a_{t}) + \eta*(R_{t+1} +\gamma Q(s_{t+1},a_{t+1})-Q(s_{t}, a_{t})) Q(st,at)=Q(st,at)+η∗(Rt+1+γQ(st+1,at+1)−Q(st,at))

Q-learning:

Q ( s t , a t ) = Q ( s t , a t ) + η ∗ ( R t + 1 + γ max a Q ( s t + 1 , a ) − Q ( s t , a t ) ) Q(s_{t}, a_{t}) = Q(s_{t}, a_{t}) + \eta*(R_{t+1} +\gamma \mathop{\max}\limits_{a }Q(s_{t+1},a)-Q(s_{t}, a_{t})) Q(st,at)=Q(st,at)+η∗(Rt+1+γamaxQ(st+1,a)−Q(st,at))

可以看到Sarsa更新时需要求并且使用 a t + 1 a_{t+1} at+1,而Q-learning需要用到 s t + 1 s_{t+1} st+1对应的最大Q值。

a t + 1 a_{t+1} at+1是由决定下一步action的policy决定的,所以Q的更新依赖于policy,称为online-policy

而Q-learning不依赖于决定action的policy,称为offline-policy

Sarsa中用到的 ϵ − g r e e d y \epsilon-greedy ϵ−greedy中random的部分Q-learning中没有,所以Q-learning有比Sarsa收敛更快的特征

按照Q-learning公式:

def Q_learning(s, a, r, s_next, Q, eta, gamma):

if s_next == 8: #goal

Q[s, a] = Q[s, a] + eta*(r - Q[s, a])

else:

Q[s, a] = Q[s, a] + eta*(r + gamma*np.nanmax(Q[s_next,:]) - Q[s, a])

return Q

因为不用 ϵ − g r e e d y \epsilon-greedy ϵ−greedy,所以action函数去掉随机的部分:

#get the action

def get_action(s, Q):

direction = ["up", "right", "down", "left"]

#print("s = " + str(s))

next_direction = direction[np.nanargmax(Q[s, :])]

if next_direction == "up":

action = 0

elif next_direction == "right":

action = 1

elif next_direction == "down":

action = 2

elif next_direction == "left":

action = 3

return action

def get_s_next(s, a):

direction = ["up", "right", "down", "left"]

next_direction = direction[a]

if next_direction == "up":

s_next = s - 3

elif next_direction == "right":

s_next = s + 1

elif next_direction == "down":

s_next = s + 3

elif next_direction == "left":

s_next = s - 1

return s_next

一次episode:

def goal_maze_ret_Q_learning(Q, eta, gamma):

s = 0 #start state

a = a_next = get_action(s, Q) #get action by maximum Q value

s_a_history = [[0, np.nan]]

while(1): #until reach the goal

a = a_next

s_a_history[-1][1] = a

s_next = get_s_next(s, a)

s_a_history.append([s_next, np.nan])

if s_next == 8:

r = 1 #get the reward

a_next = np.nan

else:

r = 0

a_next = get_action(s_next, Q)

Q = Q_learning(s, a, r, s_next, Q, eta, gamma) #update Q

if s_next == 8:

break

else:

s = s_next

return [s_a_history, Q]

完整迭代到100次:

eta = 0.1 #learning-rate

gamma = 0.9 #decrease rate

v = np.nanmax(Q, axis=1) #maximum value for each state

is_continue = True

episode = 1

V = [] #state value for each episode

V.append(np.nanmax(Q, axis=1)) #get the maximum value for each state

while is_continue:

print("episode " + str(episode))

[s_a_history, Q] = goal_maze_ret_Q_learning(Q, eta, gamma) #get one path

new_v = np.nanmax(Q, axis=1)

print(np.sum(np.abs(new_v - v))) #get the error

v = new_v

V.append(v)

print("steps to reach goal: " + str(len(s_a_history) - 1))

episode = episode + 1

if episode > 100:

is_continue = False

收敛结果

episode 1

0.100490460992

steps to reach goal: 20

episode 2

0.0969381947436

steps to reach goal: 16

episode 3

0.0943713455446

steps to reach goal: 8

episode 4

0.0932547392558

steps to reach goal: 6

episode 5

0.0918454990963

steps to reach goal: 6

episode 6

0.0904328729472

steps to reach goal: 4

episode 7

0.0895058129093

steps to reach goal: 4

episode 8

0.0885468091866

steps to reach goal: 4

episode 9

0.0875441463751

steps to reach goal: 4

episode 10

0.0864873768061

steps to reach goal: 4

episode 11

0.0853677368575

steps to reach goal: 4

episode 12

0.0841783707317

steps to reach goal: 4

episode 13

0.0829144098366

steps to reach goal: 4

episode 14

0.0815729464098

steps to reach goal: 4

episode 15

0.0801529321705

steps to reach goal: 4

episode 16

0.0786550263091

steps to reach goal: 4

episode 17

0.0770814118009

steps to reach goal: 4

episode 18

0.0754355946909

steps to reach goal: 4

episode 19

0.0737221974552

steps to reach goal: 4

episode 20

0.0719467546908

steps to reach goal: 4