Flink 1.7 Installation and Configuration in Local Mode

CentOS 7.5

Install the JDK:

# rpm -ivh jdk-8u172-linux-x64.rpm

# java -version

java version "1.8.0_172"

Java(TM) SE Runtime Environment (build 1.8.0_172-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.172-b11, mixed mode)

# tar -xzvf flink-1.7.1-bin-scala_2.12.tgz -C /usr/local/

# mv /usr/local/flink-1.7.1/ /usr/local/flink

Set the environment variables:

# cat /etc/profile.d/flink.sh

export FLINK_HOME=/usr/local/flink

export PATH=$PATH:/usr/local/flink/bin
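A minimal sketch of the same profile script written so that it can be sourced more than once without duplicating the PATH entry. The variable name FLINK_HOME is a convention assumed here, not something this walkthrough depends on.

```shell
# Sketch of /etc/profile.d/flink.sh; FLINK_HOME is an assumed variable name.
export FLINK_HOME=/usr/local/flink

# Append $FLINK_HOME/bin to PATH only if it is not already present,
# so sourcing the file repeatedly does not grow PATH.
case ":$PATH:" in
  *":$FLINK_HOME/bin:"*) ;;
  *) export PATH="$PATH:$FLINK_HOME/bin" ;;
esac
```

After logging in again (or sourcing the file), the flink command should resolve from any directory.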

Check the Flink version:

# flink --version

Version: 1.7.1, Commit ID: 89eafb4

# flink list

Waiting for response...

No running jobs.

No scheduled jobs.

--Start Flink (run from /usr/local/flink/bin):

# ./jobmanager.sh start

# ./taskmanager.sh start

# ./historyserver.sh start

Starting historyserver daemon on host node4.

# ./standalone-job.sh start

Starting standalonejob daemon on host node4.

Note: starting the historyserver depends on Hadoop.

--Script that starts the whole cluster in one step:

# ./start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host node4.

Starting taskexecutor daemon on host node4.

# ./stop-cluster.sh

--Script parameters:

# ./historyserver.sh

Unknown daemon ''. Usage: flink-daemon.sh (start|stop|stop-all) (taskexecutor|zookeeper|historyserver|standalonesession|standalonejob) [args].

# ./jobmanager.sh

Usage: jobmanager.sh ((start|start-foreground) [host] [webui-port])|stop|stop-all

# ./taskmanager.sh

Usage: taskmanager.sh (start|start-foreground|stop|stop-all)

# ./standalone-job.sh

Usage: standalone-job.sh ((start|start-foreground))|stop [args]

--Available commands (contents of the bin directory):

# ll

total 128

-rwxrwxrwx 1 root root 28869 Dec 11 23:30 config.sh

-rwxrwxrwx 1 root root 2224 Dec 11 20:39 flink

-rwxrwxrwx 1 root root 1271 Dec 11 20:39 flink.bat

-rwxrwxrwx 1 root root 2774 Dec 11 23:30 flink-console.sh

-rwxrwxrwx 1 root root 6358 Dec 11 23:30 flink-daemon.sh

-rwxrwxrwx 1 root root 1564 Dec 11 23:30 historyserver.sh

-rwxrwxrwx 1 root root 2891 Dec 11 23:30 jobmanager.sh

-rwxrwxrwx 1 root root 1802 Dec 11 20:39 mesos-appmaster-job.sh

-rwxrwxrwx 1 root root 1834 Dec 11 23:30 mesos-appmaster.sh

-rwxrwxrwx 1 root root 1888 Dec 11 23:30 mesos-taskmanager.sh

-rwxrwxrwx 1 root root 1164 Dec 11 20:39 pyflink.bat

-rwxrwxrwx 1 root root 1107 Dec 11 20:39 pyflink.sh

-rwxrwxrwx 1 root root 1182 Dec 11 20:39 pyflink-stream.sh

-rwxrwxrwx 1 root root 3434 Dec 11 20:40 sql-client.sh

-rwxrwxrwx 1 root root 2533 Dec 11 23:30 standalone-job.sh

-rwxrwxrwx 1 root root 3364 Dec 11 20:39 start-cluster.bat

-rwxrwxrwx 1 root root 1836 Dec 11 20:39 start-cluster.sh

-rwxrwxrwx 1 root root 2960 Dec 14 23:45 start-scala-shell.sh

-rwxrwxrwx 1 root root 1854 Dec 11 20:39 start-zookeeper-quorum.sh

-rwxrwxrwx 1 root root 1616 Dec 11 20:39 stop-cluster.sh

-rwxrwxrwx 1 root root 1845 Dec 11 20:39 stop-zookeeper-quorum.sh

-rwxrwxrwx 1 root root 3845 Dec 11 23:30 taskmanager.sh

-rwxrwxrwx 1 root root 1674 Dec 11 20:39 yarn-session.sh

-rwxrwxrwx 1 root root 2281 Dec 11 20:39 zookeeper.sh

--Log files after startup:

# ls -l

total 48

-rw-r--r-- 1 root root 6093 Dec 25 10:06 flink-root-client-node4.log

-rw-r--r-- 1 root root 2749 Dec 25 10:10 flink-root-historyserver-0-node4.log

-rw-r--r-- 1 root root 693 Dec 25 10:10 flink-root-historyserver-0-node4.out

-rw-r--r-- 1 root root 3868 Dec 25 10:11 flink-root-standalonejob-0-node4.log

-rw-r--r-- 1 root root 1422 Dec 25 10:11 flink-root-standalonejob-0-node4.out

-rw-r--r-- 1 root root 11020 Dec 25 09:59 flink-root-standalonesession-0-node4.log

-rw-r--r-- 1 root root 0 Dec 25 09:59 flink-root-standalonesession-0-node4.out

-rw-r--r-- 1 root root 11636 Dec 25 09:59 flink-root-taskexecutor-0-node4.log

-rw-r--r-- 1 root root 0 Dec 25 09:59 flink-root-taskexecutor-0-node4.out

--Configuration file:

# cat /usr/local/flink/conf/flink-conf.yaml

################################################################################

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

################################################################################

#==============================================================================

# Common

#==============================================================================

# The external address of the host on which the JobManager runs and can be

# reached by the TaskManagers and any clients which want to connect. This setting

# is only used in Standalone mode and may be overwritten on the JobManager side

# by specifying the --host parameter of the bin/jobmanager.sh executable.

# In high availability mode, if you use the bin/start-cluster.sh script and setup

# the conf/masters file, this will be taken care of automatically. Yarn/Mesos

# automatically configure the host name based on the hostname of the node where the

# JobManager runs.

jobmanager.rpc.address: localhost

# The RPC port where the JobManager is reachable.

jobmanager.rpc.port: 6123

# The heap size for the JobManager JVM

jobmanager.heap.size: 1024m

# The heap size for the TaskManager JVM

taskmanager.heap.size: 1024m

# The number of task slots that each TaskManager offers. Each slot runs one parallel pipeline.

taskmanager.numberOfTaskSlots: 1

# The parallelism used for programs that did not specify and other parallelism.

parallelism.default: 1

# The default file system scheme and authority.

#

# By default file paths without scheme are interpreted relative to the local

# root file system 'file:///'. Use this to override the default and interpret

# relative paths relative to a different file system,

# for example 'hdfs://mynamenode:12345'

#

# fs.default-scheme

#==============================================================================

# High Availability

#==============================================================================

# The high-availability mode. Possible options are 'NONE' or 'zookeeper'.

#

# high-availability: zookeeper

# The path where metadata for master recovery is persisted. While ZooKeeper stores

# the small ground truth for checkpoint and leader election, this location stores

# the larger objects, like persisted dataflow graphs.

#

# Must be a durable file system that is accessible from all nodes

# (like HDFS, S3, Ceph, nfs, ...)

#

# high-availability.storageDir: hdfs:///flink/ha/

# The list of ZooKeeper quorum peers that coordinate the high-availability

# setup. This must be a list of the form:

# "host1:clientPort,host2:clientPort,..." (default clientPort: 2181)

#

# high-availability.zookeeper.quorum: localhost:2181

# ACL options are based on https://zookeeper.apache.org/doc/r3.1.2/zookeeperProgrammers.html#sc_BuiltinACLSchemes

# It can be either "creator" (ZOO_CREATE_ALL_ACL) or "open" (ZOO_OPEN_ACL_UNSAFE)

# The default value is "open" and it can be changed to "creator" if ZK security is enabled

#

# high-availability.zookeeper.client.acl: open

#==============================================================================

# Fault tolerance and checkpointing

#==============================================================================

# The backend that will be used to store operator state checkpoints if

# checkpointing is enabled.

#

# Supported backends are 'jobmanager', 'filesystem', 'rocksdb', or the

# <class-name-of-factory>.

#

# state.backend: filesystem

# Directory for checkpoints filesystem, when using any of the default bundled

# state backends.

#

# state.checkpoints.dir: hdfs://namenode-host:port/flink-checkpoints

# Default target directory for savepoints, optional.

#

# state.savepoints.dir: hdfs://namenode-host:port/flink-checkpoints

# Flag to enable/disable incremental checkpoints for backends that

# support incremental checkpoints (like the RocksDB state backend).

#

# state.backend.incremental: false

#==============================================================================

# Web Frontend

#==============================================================================

# The address under which the web-based runtime monitor listens.

#

web.address: 0.0.0.0

# The port under which the web-based runtime monitor listens.

# A value of -1 deactivates the web server.

rest.port: 8081

# Flag to specify whether job submission is enabled from the web-based

# runtime monitor. Uncomment to disable.

#web.submit.enable: false

#==============================================================================

# Advanced

#==============================================================================

# Override the directories for temporary files. If not specified, the

# system-specific Java temporary directory (java.io.tmpdir property) is taken.

#

# For framework setups on Yarn or Mesos, Flink will automatically pick up the

# containers' temp directories without any need for configuration.

#

# Add a delimited list for multiple directories, using the system directory

# delimiter (colon ':' on unix) or a comma, e.g.:

# /data1/tmp:/data2/tmp:/data3/tmp

#

# Note: Each directory entry is read from and written to by a different I/O

# thread. You can include the same directory multiple times in order to create

# multiple I/O threads against that directory. This is for example relevant for

# high-throughput RAIDs.

#

# io.tmp.dirs: /tmp

# Specify whether TaskManager's managed memory should be allocated when starting

# up (true) or when memory is requested.

#

# We recommend to set this value to 'true' only in setups for pure batch

# processing (DataSet API). Streaming setups currently do not use the TaskManager's

# managed memory: The 'rocksdb' state backend uses RocksDB's own memory management,

# while the 'memory' and 'filesystem' backends explicitly keep data as objects

# to save on serialization cost.

#

# taskmanager.memory.preallocate: false

# The classloading resolve order. Possible values are 'child-first' (Flink's default)

# and 'parent-first' (Java's default).

#

# Child first classloading allows users to use different dependency/library

# versions in their application than those in the classpath. Switching back

# to 'parent-first' may help with debugging dependency issues.

#

# classloader.resolve-order: child-first

# The amount of memory going to the network stack. These numbers usually need

# no tuning. Adjusting them may be necessary in case of an "Insufficient number

# of network buffers" error. The default min is 64MB, teh default max is 1GB.

#

# taskmanager.network.memory.fraction: 0.1

# taskmanager.network.memory.min: 64mb

# taskmanager.network.memory.max: 1gb

#==============================================================================

# Flink Cluster Security Configuration

#==============================================================================

# Kerberos authentication for various components - Hadoop, ZooKeeper, and connectors -

# may be enabled in four steps:

# 1. configure the local krb5.conf file

# 2. provide Kerberos credentials (either a keytab or a ticket cache w/ kinit)

# 3. make the credentials available to various JAAS login contexts

# 4. configure the connector to use JAAS/SASL

# The below configure how Kerberos credentials are provided. A keytab will be used instead of

# a ticket cache if the keytab path and principal are set.

# security.kerberos.login.use-ticket-cache: true

# security.kerberos.login.keytab: /path/to/kerberos/keytab

# security.kerberos.login.principal: flink-user

# The configuration below defines which JAAS login contexts

# security.kerberos.login.contexts: Client,KafkaClient

#==============================================================================

# ZK Security Configuration

#==============================================================================

# Below configurations are applicable if ZK ensemble is configured for security

# Override below configuration to provide custom ZK service name if configured

# zookeeper.sasl.service-name: zookeeper

# The configuration below must match one of the values set in "security.kerberos.login.contexts"

# zookeeper.sasl.login-context-name: Client

#==============================================================================

# HistoryServer

#==============================================================================

# The HistoryServer is started and stopped via bin/historyserver.sh (start|stop)

# Directory to upload completed jobs to. Add this directory to the list of

# monitored directories of the HistoryServer as well (see below).

#jobmanager.archive.fs.dir: hdfs:///completed-jobs/

# The address under which the web-based HistoryServer listens.

#historyserver.web.address: 0.0.0.0

# The port under which the web-based HistoryServer listens.

#historyserver.web.port: 8082

# Comma separated list of directories to monitor for completed jobs.

#historyserver.archive.fs.dir: hdfs:///completed-jobs/

# Interval in milliseconds for refreshing the monitored directories.

#historyserver.archive.fs.refresh-interval: 10000

--Ports:

jobmanager 6123

rest 8081

historyserver 8082
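The ports listed above come from flink-conf.yaml. As a rough sketch, a small helper can read the effective value of a key from that file; conf_get is a hypothetical helper written for this post, not part of Flink, and the fallback values are supplied by the caller.

```shell
# conf_get KEY FILE [DEFAULT]
# Prints the value of the last uncommented "key: value" line for KEY,
# or DEFAULT when the key is absent or commented out.
conf_get() {
  awk -v key="$1" -v def="${3-}" '
    /^[[:space:]]*#/ { next }              # skip comment lines
    {
      idx = index($0, ":")                 # split at the first colon only
      if (idx == 0) next
      k = substr($0, 1, idx - 1)
      v = substr($0, idx + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)       # trim whitespace around key
      gsub(/^[ \t]+|[ \t]+$/, "", v)       # trim whitespace around value
      if (k == key) found = v
    }
    END { print (found != "" ? found : def) }
  ' "$2"
}

# Usage, only if the config file from this walkthrough exists:
conf=/usr/local/flink/conf/flink-conf.yaml
if [ -f "$conf" ]; then
  echo "jobmanager.rpc.port    = $(conf_get jobmanager.rpc.port "$conf" 6123)"
  echo "rest.port              = $(conf_get rest.port "$conf" 8081)"
  echo "historyserver.web.port = $(conf_get historyserver.web.port "$conf" 8082)"
fi
```

Splitting at the first colon only keeps values such as hdfs:///flink/ha/ intact.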

--Check the Java processes:

# jps

8093 TaskManagerRunner

21054 Jps

7535 StandaloneSessionClusterEntrypoint

On this single host, both the TaskManagerRunner and StandaloneSessionClusterEntrypoint processes are running.
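The same check can be scripted. The sketch below reads jps output on standard input and succeeds only if both process names seen above are present; flink_daemons_running is a hypothetical helper name.

```shell
# Reads `jps` output on stdin; returns 0 only if both local-mode
# daemons appear as the second column of some line.
flink_daemons_running() {
  jps_output=$(cat)
  for name in StandaloneSessionClusterEntrypoint TaskManagerRunner; do
    if ! printf '%s\n' "$jps_output" |
         awk -v n="$name" '$2 == n { f = 1 } END { exit !f }'; then
      echo "missing: $name" >&2
      return 1
    fi
  done
  return 0
}

# Usage, only when jps is available on this host:
if command -v jps >/dev/null 2>&1 && jps | flink_daemons_running 2>/dev/null; then
  echo "local cluster looks up"
fi
```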

--Listening ports after startup:

# netstat -nultp | grep -i 8081

tcp6 0 0 :::8081 :::* LISTEN 7535/java

# netstat -nultp | grep -i 6123

tcp6 0 0 :::6123 :::* LISTEN 7535/java
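Instead of grepping by eye, the netstat output can be checked in a script. This is a sketch with a hypothetical helper, port_listening, that reads netstat-style lines on standard input and matches the port only at the end of an address field on a LISTEN line.

```shell
# port_listening PORT
# Reads `netstat -nltp` style output on stdin; returns 0 if some
# LISTEN line has an address field ending in ":PORT".
port_listening() {
  awk -v port="$1" '
    /LISTEN/ {
      for (i = 1; i <= NF; i++)
        if ($i ~ (":" port "$")) found = 1
    }
    END { exit !found }'
}

# Example (run on the Flink host):
#   netstat -nltp | port_listening 8081 && echo "REST port is up"
#   netstat -nltp | port_listening 6123 && echo "JobManager RPC port is up"
```

Anchoring the match at the end of the field avoids false hits such as port 808 matching 8081.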

--Processes:

# ps -ef | grep -i flink

root 7535 1 1 09:59 pts/2 00:00:15 java -Xms1024m -Xmx1024m -Dlog.file=/usr/local/flink/log/flink-root-standalonesession-0-node4.log -Dlog4j.configuration=file:/usr/local/flink/conf/log4j.properties -Dlogback.configurationFile=file:/usr/local/flink/conf/logback.xml -classpath /usr/local/flink/lib/flink-python_2.12-1.7.1.jar:/usr/local/flink/lib/log4j-1.2.17.jar:/usr/local/flink/lib/slf4j-log4j12-1.7.15.jar:/usr/local/flink/lib/flink-dist_2.12-1.7.1.jar::: org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint --configDir /usr/local/flink/conf --executionMode cluster

root 8093 1 1 09:59 pts/2 00:00:12 java -XX:+UseG1GC -Xms922M -Xmx922M -XX:MaxDirectMemorySize=8388607T -Dlog.file=/usr/local/flink/log/flink-root-taskexecutor-0-node4.log -Dlog4j.configuration=file:/usr/local/flink/conf/log4j.properties -Dlogback.configurationFile=file:/usr/local/flink/conf/logback.xml -classpath /usr/local/flink/lib/flink-python_2.12-1.7.1.jar:/usr/local/flink/lib/log4j-1.2.17.jar:/usr/local/flink/lib/slf4j-log4j12-1.7.15.jar:/usr/local/flink/lib/flink-dist_2.12-1.7.1.jar::: org.apache.flink.runtime.taskexecutor.TaskManagerRunner --configDir /usr/local/flink/conf
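The TaskManager JVM above runs with -Xms922M -Xmx922M although taskmanager.heap.size is 1024m. A plausible explanation, stated here as an assumption rather than taken from the Flink source, is that the startup script subtracts the network buffer memory from the configured size, using the default taskmanager.network.memory.fraction of 0.1. The log line "Allocated 102 MB for network buffer pool" is consistent with this. The arithmetic, with illustrative variable names:

```shell
# Assumption: JVM heap = configured size minus network buffer memory,
# where network memory = size * fraction (0.1), rounded down, and the
# result lies within the configured min (64mb) and max (1gb).
total_mb=1024            # taskmanager.heap.size
fraction_percent=10      # taskmanager.network.memory.fraction: 0.1
network_mb=$(( total_mb * fraction_percent / 100 ))
heap_mb=$(( total_mb - network_mb ))
echo "network=${network_mb}MB heap=${heap_mb}MB"
# prints: network=102MB heap=922MB
```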

--Log files:

# ll

total 48

-rw-r--r-- 1 root root 6093 Dec 25 10:06 flink-root-client-node4.log

-rw-r--r-- 1 root root 2749 Dec 25 10:10 flink-root-historyserver-0-node4.log

-rw-r--r-- 1 root root 693 Dec 25 10:10 flink-root-historyserver-0-node4.out

-rw-r--r-- 1 root root 3868 Dec 25 10:11 flink-root-standalonejob-0-node4.log

-rw-r--r-- 1 root root 1422 Dec 25 10:11 flink-root-standalonejob-0-node4.out

-rw-r--r-- 1 root root 11020 Dec 25 09:59 flink-root-standalonesession-0-node4.log

-rw-r--r-- 1 root root 0 Dec 25 09:59 flink-root-standalonesession-0-node4.out

-rw-r--r-- 1 root root 11636 Dec 25 09:59 flink-root-taskexecutor-0-node4.log

-rw-r--r-- 1 root root 0 Dec 25 09:59 flink-root-taskexecutor-0-node4.out

--View the TaskManager log:

# cat flink-root-taskexecutor-0-node4.log

2018-12-25 09:59:53,282 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - --------------------------------------------------------------------------------

2018-12-25 09:59:53,284 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Starting TaskManager (Version: 1.7.1, Rev:89eafb4, Date:14.12.2018 @ 15:48:34 GMT)

2018-12-25 09:59:53,284 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - OS current user: root

2018-12-25 09:59:53,284 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Current Hadoop/Kerberos user:

2018-12-25 09:59:53,284 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - JVM: Java HotSpot(TM) 64-Bit Server VM - Oracle Corporation - 1.8/25.172-b11

2018-12-25 09:59:53,284 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Maximum heap size: 922 MiBytes

2018-12-25 09:59:53,285 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - JAVA_HOME: (not set)

2018-12-25 09:59:53,285 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - No Hadoop Dependency available

2018-12-25 09:59:53,285 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - JVM Options:

2018-12-25 09:59:53,285 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - -XX:+UseG1GC

2018-12-25 09:59:53,285 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - -Xms922M

2018-12-25 09:59:53,285 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - -Xmx922M

2018-12-25 09:59:53,285 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - -XX:MaxDirectMemorySize=8388607T

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - -Dlog.file=/usr/local/flink/log/flink-root-taskexecutor-0-node4.log

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - -Dlog4j.configuration=file:/usr/local/flink/conf/log4j.properties

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - -Dlogback.configurationFile=file:/usr/local/flink/conf/logback.xml

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Program Arguments:

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - --configDir

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - /usr/local/flink/conf

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Classpath: /usr/local/flink/lib/flink-python_2.12-1.7.1.jar:/usr/local/flink/lib/log4j-1.2.17.jar:/usr/local/flink/lib/slf4j-log4j12-1.7.15.jar:/usr/local/flink/lib/flink-dist_2.12-1.7.1.jar:::

2018-12-25 09:59:53,286 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - --------------------------------------------------------------------------------

2018-12-25 09:59:53,288 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Registered UNIX signal handlers for [TERM, HUP, INT]

2018-12-25 09:59:53,292 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Maximum number of open file descriptors is 131072.

2018-12-25 09:59:53,306 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.rpc.address, localhost

2018-12-25 09:59:53,306 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.rpc.port, 6123

2018-12-25 09:59:53,307 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.heap.size, 1024m

2018-12-25 09:59:53,307 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: taskmanager.heap.size, 1024m

2018-12-25 09:59:53,307 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: taskmanager.numberOfTaskSlots, 1

2018-12-25 09:59:53,307 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: parallelism.default, 1

2018-12-25 09:59:53,308 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: rest.port, 8081

2018-12-25 09:59:53,316 INFO org.apache.flink.core.fs.FileSystem - Hadoop is not in the classpath/dependencies. The extended set of supported File Systems via Hadoop is not available.

2018-12-25 09:59:53,340 INFO org.apache.flink.runtime.security.modules.HadoopModuleFactory - Cannot create Hadoop Security Module because Hadoop cannot be found in the Classpath.

2018-12-25 09:59:53,364 INFO org.apache.flink.runtime.security.SecurityUtils - Cannot install HadoopSecurityContext because Hadoop cannot be found in the Classpath.

2018-12-25 09:59:53,944 WARN org.apache.flink.configuration.Configuration - Config uses deprecated configuration key 'jobmanager.rpc.address' instead of proper key 'rest.address'

2018-12-25 09:59:53,949 INFO org.apache.flink.runtime.util.LeaderRetrievalUtils - Trying to select the network interface and address to use by connecting to the leading JobManager.

2018-12-25 09:59:53,950 INFO org.apache.flink.runtime.util.LeaderRetrievalUtils - TaskManager will try to connect for 10000 milliseconds before falling back to heuristics

2018-12-25 09:59:53,954 INFO org.apache.flink.runtime.net.ConnectionUtils - Retrieved new target address localhost/127.0.0.1:6123.

2018-12-25 09:59:53,969 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - TaskManager will use hostname/address 'node4' (172.16.1.92) for communication.

2018-12-25 09:59:53,972 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcServiceUtils - Trying to start actor system at node4:0

2018-12-25 09:59:54,552 INFO akka.event.slf4j.Slf4jLogger - Slf4jLogger started

2018-12-25 09:59:54,663 INFO akka.remote.Remoting - Starting remoting

2018-12-25 09:59:54,840 INFO akka.remote.Remoting - Remoting started; listening on addresses :[akka.tcp://flink@node4:37498]

2018-12-25 09:59:54,859 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcServiceUtils - Actor system started at akka.tcp://flink@node4:37498

2018-12-25 09:59:54,864 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Trying to start actor system at node4:0

2018-12-25 09:59:54,889 INFO akka.event.slf4j.Slf4jLogger - Slf4jLogger started

2018-12-25 09:59:54,901 INFO akka.remote.Remoting - Starting remoting

2018-12-25 09:59:54,911 INFO akka.remote.Remoting - Remoting started; listening on addresses :[akka.tcp://flink-metrics@node4:38979]

2018-12-25 09:59:54,912 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Actor system started at akka.tcp://flink-metrics@node4:38979

2018-12-25 09:59:54,926 INFO org.apache.flink.runtime.metrics.MetricRegistryImpl - No metrics reporter configured, no metrics will be exposed/reported.

2018-12-25 09:59:54,934 INFO org.apache.flink.runtime.blob.PermanentBlobCache - Created BLOB cache storage directory /tmp/blobStore-21e2a4f4-e316-4c22-888c-9f0fe630c131

2018-12-25 09:59:54,937 INFO org.apache.flink.runtime.blob.TransientBlobCache - Created BLOB cache storage directory /tmp/blobStore-f2095fc5-0582-44b3-865d-ed46290b27a4

2018-12-25 09:59:54,938 INFO org.apache.flink.runtime.taskexecutor.TaskManagerRunner - Starting TaskManager with ResourceID: 1f41e6a42170b7023adffffbc2737f84

2018-12-25 09:59:54,942 INFO org.apache.flink.runtime.io.network.netty.NettyConfig - NettyConfig [server address: node4/172.16.1.92, server port: 0, ssl enabled: false, memory segment size (bytes): 32768, transport type: NIO, number of server threads: 1 (manual), number of client threads: 1 (manual), server connect backlog: 0 (use Netty's default), client connect timeout (sec): 120, send/receive buffer size (bytes): 0 (use Netty's default)]

2018-12-25 09:59:55,002 INFO org.apache.flink.runtime.taskexecutor.TaskManagerServices - Temporary file directory '/tmp': total 495 GB, usable 351 GB (70.91% usable)

2018-12-25 09:59:55,133 INFO org.apache.flink.runtime.io.network.buffer.NetworkBufferPool - Allocated 102 MB for network buffer pool (number of memory segments: 3278, bytes per segment: 32768).

2018-12-25 09:59:55,190 INFO org.apache.flink.runtime.query.QueryableStateUtils - Could not load Queryable State Client Proxy. Probable reason: flink-queryable-state-runtime is not in the classpath. To enable Queryable State, please move the flink-queryable-state-runtime jar from the opt to the lib folder.

2018-12-25 09:59:55,190 INFO org.apache.flink.runtime.query.QueryableStateUtils - Could not load Queryable State Server. Probable reason: flink-queryable-state-runtime is not in the classpath. To enable Queryable State, please move the flink-queryable-state-runtime jar from the opt to the lib folder.

2018-12-25 09:59:55,191 INFO org.apache.flink.runtime.io.network.NetworkEnvironment - Starting the network environment and its components.

2018-12-25 09:59:55,232 INFO org.apache.flink.runtime.io.network.netty.NettyClient - Successful initialization (took 38 ms).

2018-12-25 09:59:55,287 INFO org.apache.flink.runtime.io.network.netty.NettyServer - Successful initialization (took 55 ms). Listening on SocketAddress /172.16.1.92:44140.

2018-12-25 09:59:55,288 INFO org.apache.flink.runtime.taskexecutor.TaskManagerServices - Limiting managed memory to 0.7 of the currently free heap space (641 MB), memory will be allocated lazily.

2018-12-25 09:59:55,292 INFO org.apache.flink.runtime.io.disk.iomanager.IOManager - I/O manager uses directory /tmp/flink-io-35c22c33-a856-4bfa-aa91-dcb106325bfd for spill files.

2018-12-25 09:59:55,362 INFO org.apache.flink.runtime.taskexecutor.TaskManagerConfiguration - Messages have a max timeout of 10000 ms

2018-12-25 09:59:55,374 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService - Starting RPC endpoint for org.apache.flink.runtime.taskexecutor.TaskExecutor at akka://flink/user/taskmanager_0 .

2018-12-25 09:59:55,390 INFO org.apache.flink.runtime.taskexecutor.JobLeaderService - Start job leader service.

2018-12-25 09:59:55,391 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor - Connecting to ResourceManager akka.tcp://flink@localhost:6123/user/resourcemanager(00000000000000000000000000000000).

2018-12-25 09:59:55,391 INFO org.apache.flink.runtime.filecache.FileCache - User file cache uses directory /tmp/flink-dist-cache-997e7b7b-16af-4254-a6e6-5fe99f4b365c

2018-12-25 09:59:55,624 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor - Resolved ResourceManager address, beginning registration

2018-12-25 09:59:55,624 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor - Registration at ResourceManager attempt 1 (timeout=100ms)

2018-12-25 09:59:55,699 INFO org.apache.flink.runtime.taskexecutor.TaskExecutor - Successful registration at resource manager akka.tcp://flink@localhost:6123/user/resourcemanager under registration id 346779405ab613a2bc2bb829e7fff6be.
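For a long log like this one, a quick per-level count helps spot warnings and errors. A minimal sketch, assuming the log4j layout shown above (date, time, level as the third field); log_level_counts is a hypothetical helper name.

```shell
# Reads Flink log lines on stdin and prints "LEVEL COUNT" per log level,
# taking the level from the third whitespace-separated field.
log_level_counts() {
  awk '$3 ~ /^(TRACE|DEBUG|INFO|WARN|ERROR)$/ { n[$3]++ }
       END { for (level in n) print level, n[level] }' | sort
}

# Usage, only if the log file from this walkthrough exists:
log=/usr/local/flink/log/flink-root-taskexecutor-0-node4.log
if [ -f "$log" ]; then
  log_level_counts < "$log"
  # Show the non-INFO lines themselves:
  awk '$3 == "WARN" || $3 == "ERROR"' "$log"
fi
```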

--You can also view the standalone session (JobManager) log:

# cat flink-root-standalonesession-0-node4.log

2018-12-25 09:59:33,139 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - --------------------------------------------------------------------------------

2018-12-25 09:59:33,140 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Starting StandaloneSessionClusterEntrypoint (Version: 1.7.1, Rev:89eafb4, Date:14.12.2018 @ 15:48:34 GMT)

2018-12-25 09:59:33,140 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - OS current user: root

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Current Hadoop/Kerberos user:

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - JVM: Java HotSpot(TM) 64-Bit Server VM - Oracle Corporation - 1.8/25.172-b11

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Maximum heap size: 981 MiBytes

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - JAVA_HOME: (not set)

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - No Hadoop Dependency available

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - JVM Options:

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Xms1024m

2018-12-25 09:59:33,141 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Xmx1024m

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Dlog.file=/usr/local/flink/log/flink-root-standalonesession-0-node4.log

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Dlog4j.configuration=file:/usr/local/flink/conf/log4j.properties

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Dlogback.configurationFile=file:/usr/local/flink/conf/logback.xml

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Program Arguments:

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - --configDir

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - /usr/local/flink/conf

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - --executionMode

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - cluster

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Classpath: /usr/local/flink/lib/flink-python_2.12-1.7.1.jar:/usr/local/flink/lib/log4j-1.2.17.jar:/usr/local/flink/lib/slf4j-log4j12-1.7.15.jar:/usr/local/flink/lib/flink-dist_2.12-1.7.1.jar:::

2018-12-25 09:59:33,142 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - --------------------------------------------------------------------------------

2018-12-25 09:59:33,144 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Registered UNIX signal handlers for [TERM, HUP, INT]

2018-12-25 09:59:33,160 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.rpc.address, localhost

2018-12-25 09:59:33,160 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.rpc.port, 6123

2018-12-25 09:59:33,161 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.heap.size, 1024m

2018-12-25 09:59:33,161 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: taskmanager.heap.size, 1024m

2018-12-25 09:59:33,161 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: taskmanager.numberOfTaskSlots, 1

2018-12-25 09:59:33,161 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: parallelism.default, 1

2018-12-25 09:59:33,162 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: rest.port, 8081

2018-12-25 09:59:33,245 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Starting StandaloneSessionClusterEntrypoint.

2018-12-25 09:59:33,245 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Install default filesystem.

2018-12-25 09:59:33,251 INFO org.apache.flink.core.fs.FileSystem - Hadoop is not in the classpath/dependencies. The extended set of supported File Systems via Hadoop is not available.

2018-12-25 09:59:33,260 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Install security context.

2018-12-25 09:59:33,269 INFO org.apache.flink.runtime.security.modules.HadoopModuleFactory - Cannot create Hadoop Security Module because Hadoop cannot be found in the Classpath.

2018-12-25 09:59:33,283 INFO org.apache.flink.runtime.security.SecurityUtils - Cannot install HadoopSecurityContext because Hadoop cannot be found in the Classpath.

2018-12-25 09:59:33,284 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Initializing cluster services.

2018-12-25 09:59:33,711 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcServiceUtils - Trying to start actor system at localhost:6123

2018-12-25 09:59:34,276 INFO akka.event.slf4j.Slf4jLogger - Slf4jLogger started

2018-12-25 09:59:34,379 INFO akka.remote.Remoting - Starting remoting

2018-12-25 09:59:34,723 INFO akka.remote.Remoting - Remoting started; listening on addresses :[akka.tcp://flink@localhost:6123]

2018-12-25 09:59:34,745 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcServiceUtils - Actor system started at akka.tcp://flink@localhost:6123

2018-12-25 09:59:34,766 WARN org.apache.flink.configuration.Configuration - Config uses deprecated configuration key 'jobmanager.rpc.address' instead of proper key 'rest.address'

2018-12-25 09:59:34,785 INFO org.apache.flink.runtime.blob.BlobServer - Created BLOB server storage directory /tmp/blobStore-decd5fe3-33d7-4dd4-b02a-a35d2d832cf3

2018-12-25 09:59:34,829 INFO org.apache.flink.runtime.blob.BlobServer - Started BLOB server at 0.0.0.0:32941 - max concurrent requests: 50 - max backlog: 1000

2018-12-25 09:59:34,845 INFO org.apache.flink.runtime.metrics.MetricRegistryImpl - No metrics reporter configured, no metrics will be exposed/reported.

2018-12-25 09:59:34,849 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Trying to start actor system at localhost:0

2018-12-25 09:59:34,891 INFO akka.event.slf4j.Slf4jLogger - Slf4jLogger started

2018-12-25 09:59:34,901 INFO akka.remote.Remoting - Starting remoting

2018-12-25 09:59:34,912 INFO akka.remote.Remoting - Remoting started; listening on addresses :[akka.tcp://flink-metrics@localhost:46387]

2018-12-25 09:59:34,913 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Actor system started at akka.tcp://flink-metrics@localhost:46387

2018-12-25 09:59:34,919 INFO org.apache.flink.runtime.dispatcher.FileArchivedExecutionGraphStore - Initializing FileArchivedExecutionGraphStore: Storage directory /tmp/executionGraphStore-8c5bbef1-dd8f-48e2-bfcd-1d8955b0af79, expiration time 3600000, maximum cache size 52428800 bytes.

2018-12-25 09:59:35,195 INFO org.apache.flink.runtime.blob.TransientBlobCache - Created BLOB cache storage directory /tmp/blobStore-9566b903-5b72-40af-bd6f-78f642b14a1d

2018-12-25 09:59:35,220 WARN org.apache.flink.configuration.Configuration - Config uses deprecated configuration key 'jobmanager.rpc.address' instead of proper key 'rest.address'

2018-12-25 09:59:35,222 WARN org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - Upload directory /tmp/flink-web-4369ddd5-0e9c-4af9-ad18-952e6a4685ff/flink-web-upload does not exist, or has been deleted externally. Previously uploaded files are no longer available.

2018-12-25 09:59:35,223 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - Created directory /tmp/flink-web-4369ddd5-0e9c-4af9-ad18-952e6a4685ff/flink-web-upload for file uploads.

2018-12-25 09:59:35,229 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - Starting rest endpoint.

2018-12-25 09:59:35,515 INFO org.apache.flink.runtime.webmonitor.WebMonitorUtils - Determined location of main cluster component log file: /usr/local/flink/log/flink-root-standalonesession-0-node4.log

2018-12-25 09:59:35,515 INFO org.apache.flink.runtime.webmonitor.WebMonitorUtils - Determined location of main cluster component stdout file: /usr/local/flink/log/flink-root-standalonesession-0-node4.out

2018-12-25 09:59:35,682 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - Rest endpoint listening at localhost:8081

2018-12-25 09:59:35,682 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - http://localhost:8081 was granted leadership with leaderSessionID=00000000-0000-0000-0000-000000000000

2018-12-25 09:59:35,682 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - Web frontend listening at http://localhost:8081.

2018-12-25 09:59:35,859 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService - Starting RPC endpoint for org.apache.flink.runtime.resourcemanager.StandaloneResourceManager at akka://flink/user/resourcemanager .

2018-12-25 09:59:35,879 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService - Starting RPC endpoint for org.apache.flink.runtime.dispatcher.StandaloneDispatcher at akka://flink/user/dispatcher .

2018-12-25 09:59:35,895 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager - ResourceManager akka.tcp://flink@localhost:6123/user/resourcemanager was granted leadership with fencing token 00000000000000000000000000000000

2018-12-25 09:59:35,895 INFO org.apache.flink.runtime.resourcemanager.slotmanager.SlotManager - Starting the SlotManager.

2018-12-25 09:59:35,910 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher - Dispatcher akka.tcp://flink@localhost:6123/user/dispatcher was granted leadership with fencing token 00000000-0000-0000-0000-000000000000

2018-12-25 09:59:35,914 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher - Recovering all persisted jobs.

2018-12-25 09:59:55,685 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager - Registering TaskManager with ResourceID 1f41e6a42170b7023adffffbc2737f84 (akka.tcp://flink@node4:37498/user/taskmanager_0) at ResourceManager
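
When scanning a startup log like the one above, it can help to pull out only the loaded configuration properties and the warnings. The following is a minimal sketch, assuming the line layout shown in the log (date, time, level, logger, message). The function names flink_loaded_config and flink_warnings are hypothetical helpers, not part of Flink.

```shell
# Print "key = value" for each configuration property reported in a log read from stdin.
# Assumes lines of the form "... Loading configuration property: <key>, <value>".
flink_loaded_config() {
  sed -n 's/^.*Loading configuration property: \([^,]*\), \(.*\)$/\1 = \2/p'
}

# Print only WARN-level lines; the level is assumed to be the third
# whitespace-separated field, as in the log above.
flink_warnings() {
  awk '$3 == "WARN"'
}

# Usage (log path as reported by WebMonitorUtils in the log above):
#   flink_loaded_config < /usr/local/flink/log/flink-root-standalonesession-0-node4.log
#   flink_warnings      < /usr/local/flink/log/flink-root-standalonesession-0-node4.log
```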

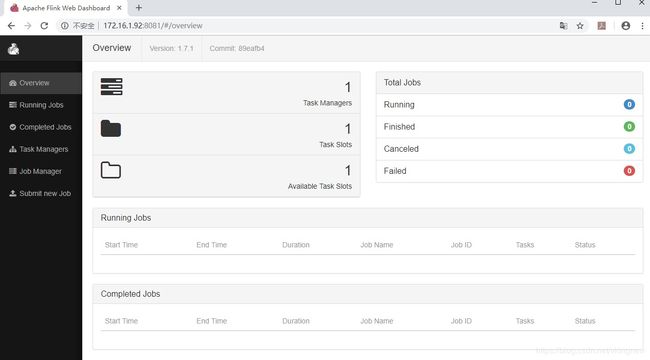

--Access through the web UI:

The web UI shows that only 1 task manager and 1 task slot are running.
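
The same figures can also be read from the command line. This is a minimal sketch, assuming the REST endpoint on port 8081 (the rest.port value in the log above) serves a /overview resource whose JSON carries taskmanagers and slots-total fields. The helper name json_int_field is hypothetical, and a real JSON parser such as jq would be more robust than this sed extraction.

```shell
# Extract an integer field from a flat JSON object read on stdin.
# Hypothetical helper for illustration; it does not handle nested or string values.
json_int_field() {
  sed -n 's/.*"'"$1"'":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Usage against a running local cluster (URL and field names are assumptions):
#   overview=$(curl -s http://localhost:8081/overview)
#   echo "$overview" | json_int_field taskmanagers
#   echo "$overview" | json_int_field slots-total
```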

--Create the input file and its contents:

# cat /opt/flink/hello.txt

Java flink java flink flink

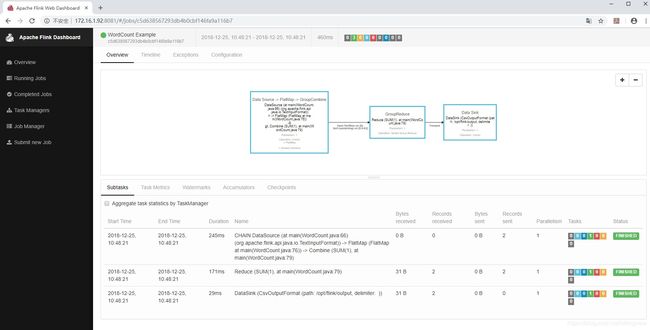

Run the example:

# flink run -m 172.16.1.92:8081 /usr/local/flink/examples/batch/WordCount.jar --input /opt/flink/hello.txt --output /opt/flink/output

Starting execution of program

Program execution finished

Job with JobID c5d638567293db4b0cbf146fa9a116b7 has finished.

Job Runtime: 460 ms

--View the results:

# cat /opt/flink/output

flink 3

java 2
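
As a quick cross-check of the counts above, the same tally can be reproduced with standard shell tools. This is only a sketch of what the example computes (lowercase the text, split on non-word characters, count each word), not of how Flink executes it. The function name local_wordcount is hypothetical.

```shell
# Count words from stdin: lowercase, split on non-word characters, tally per word.
local_wordcount() {
  tr '[:upper:]' '[:lower:]' |
    tr -cs '[:alnum:]_' '\n' |
    awk 'NF { count[$1]++ } END { for (w in count) print w, count[w] }' |
    sort
}

# Usage:
#   local_wordcount < /opt/flink/hello.txt
```

Because the text is lowercased first, "Java" and "java" fall into the same bucket, which is why the job reports java 2.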

Execution process:

--Streaming computation example:

# nc -l 9000

china

java

java

java

china

java

When done, press Ctrl+C to end nc (Ctrl+Z would only suspend it).
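
The steps above do not show the job that reads from port 9000. A minimal sketch follows, assuming the bundled SocketWindowWordCount example was used (the source does not say which job was run) and that Flink lives under /usr/local/flink. The FLINK_HOME variable and the submit_socket_wordcount wrapper are hypothetical. The job would have to be submitted from a second terminal while nc is still listening, before the words are typed.

```shell
# Assumption: Flink is unpacked under /usr/local/flink, as earlier in this guide.
FLINK_HOME="${FLINK_HOME:-/usr/local/flink}"

# Submit the bundled socket word-count example against a local text socket.
# Hypothetical wrapper; the port defaults to the one used with nc above.
submit_socket_wordcount() {
  local port="${1:-9000}"
  "${FLINK_HOME}/bin/flink" run \
    "${FLINK_HOME}/examples/streaming/SocketWindowWordCount.jar" \
    --port "${port}"
}

# Usage:
#   submit_socket_wordcount 9000
```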

View the output counts:

# tail -f /usr/local/flink/log/flink-root-taskexecutor-0-node4.out