LearnOpenCV学习——平均脸

原文地址: http://www.learnopencv.com/average-face-opencv-c-python-tutorial/

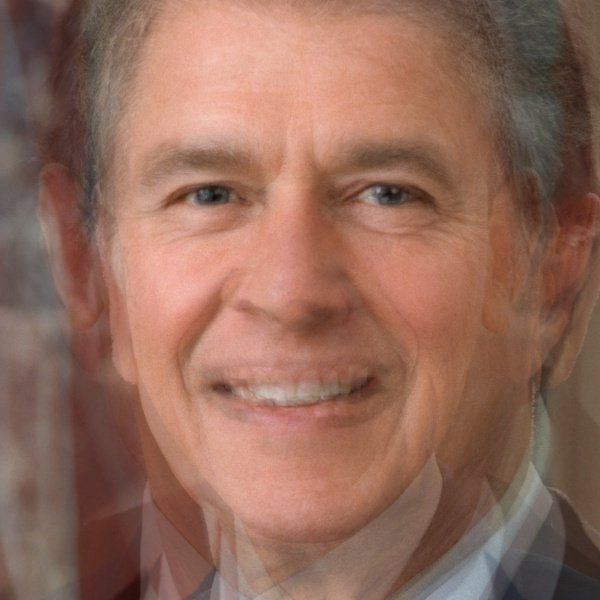

先看实验效果:最右边的人脸是由左边6幅人脸平均得到的。

Figure 2 : Average face of US Presidents : Carter to Obama.

实现过程:

1、人脸关键点检测:

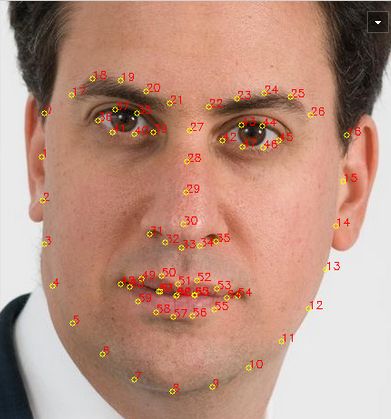

采用dlib的关键点检测器,获得人脸的68个关键点。

2、坐标变换:

由于输入图像的尺寸是大小不一的,人脸区域大小也不相同,所以要通过坐标变换,对人脸图像进行归一化操作。

在这里,我们把人脸区域warp成600*600大小,使得左眼(外眼角)位置在(180,200),右眼(外眼角)位置在(420,200)。

选择这两个位置的原因是:我们希望人脸中心在图像高度的1/3位置,并且两个眼睛保持水平,所以我们选择左眼角位置为( 0.3*width, height / 3 ),右眼角位置为(0.7*width , height / 3) 。

dlib关键点检测器,检测到的左外眼角是第36个点,右外眼角为第45个点,如下图:

利用这两个点计算图像的变换矩阵(similarity transform),该矩阵是一个2*3的矩阵,如下:

如果我们想对一个矩形进行变换,其中x、y方向的缩放因为分别为sx,sy,同时旋转一个角度 ,然后再在x方向平移tx, 在y方向平移ty, 则这样的一个变换矩阵可以写成:

第一列和第二列是缩放和旋转,第三列是平移。

Given, a point , the above similarity transform, moves it to point using the equation given below:

利用opencv的estimateRigidTransform方法,可以获得这样的变换矩阵,但遗憾的是,estimateRigidTransform至少需要三个点,所以我们需要构选第三个点,构造方法是用第三个点与已有的两个点构成等边三角形,这样第三个点的坐标为:

相似变换矩阵计算出来之的一,就可以用它来对图像和landmark进行变换。The image is transformed using warpAffine and the points are transformed using transform.

3、人脸对齐

经过步骤2之后,所有的图像都变成一样大小,并且两个眼睛的位置是保持一致。如果就这样进行平均脸计算的话,会得到下面这种效果,因为除了眼睛对齐了之外,其他点并没有对齐,所以会出现这种效果。

Figure 5 : Result of naive face averaging

其他点怎么对齐呢?我们已经知道输入图像68个点的位置,如果还能知道输出图像68个点的位置,那自然是很容易对齐的。遗憾的是,除了眼睛的位置我们可以事先定义之外,其他点的位置一般很难给出合理的定义。

解决办法是Delaunay Triangulation,具体如下:

(1)Calculate Mean Face Points

计算N张similarity transform之后的输出图像的所有关键点位置的平均值,即平均脸的第i个关键点位置,等于所有经过similarity transform之后的图像的第i个关键点位置的平均值。

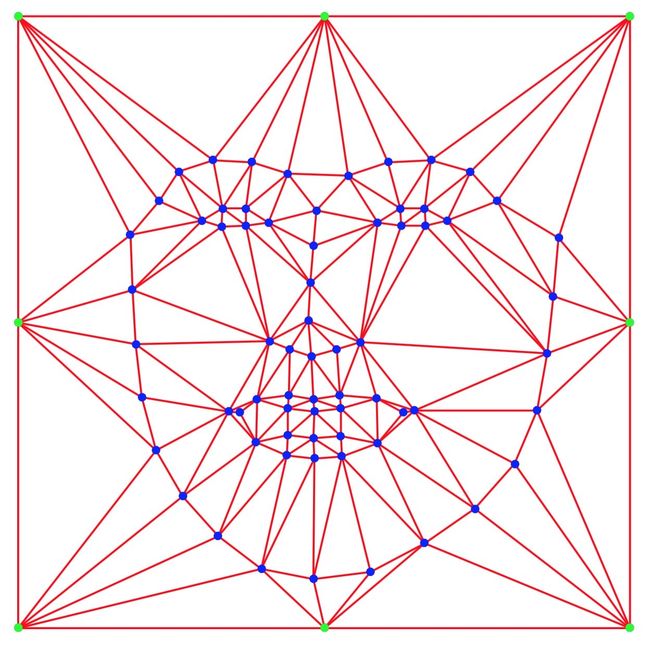

(2)Calculate Delaunay Triangulation

利用平均脸的68个关键点,以及图像边界的8个点,计算Delaunay Triangulation,如下图所示。The result of Delaunay triangulation is a list of triangles represented by the indices of points in the 76 points ( 68 face points + 8 boundary points ) array。

Figure 6 : Delaunay Triangulation of average landmark points.

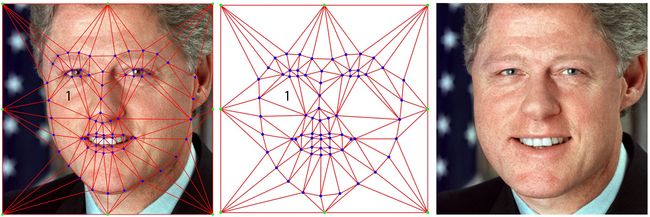

(3)Warp Triangles

对输入图像(similarity transform之后的图像)和平均脸分别计算Delaunay Triangulation,如图7的left image 和middle image,left image里面的triangle 1对应middle image里面的triangle 1,通过这两个三角形,可以计算一个从输入图像triangle 1到平均脸triangle 1的放射变换,从而把输入图像triangle 1里面的所有像素,变换成平均脸triangle 1的所有像素。其他triangle 也进行同样的操作,得到的结果就如right image所示。

Figure 7 : Image Warping based on Delaunay Triangulation.

The left image shows Delaunay triangles on the transformed input image.

The middle image shows the triangulation on the average landmarks.

The right image is simply the left image warped to the average face

4、人脸平均

经过warp之后,将所有人脸的对应像素的灰度值加起来求平均,即是平均脸图像。

原作者的实验效果:

Figure 8 : Facial Average of Mark Zuckerberg, Larry Page, Elon Musk and Jeff Bezos

Figure 9 : Facial Average of last four best actress winners : Brie Larson, Julianne Moore, Cate Blanchett and Jennifer Lawrence

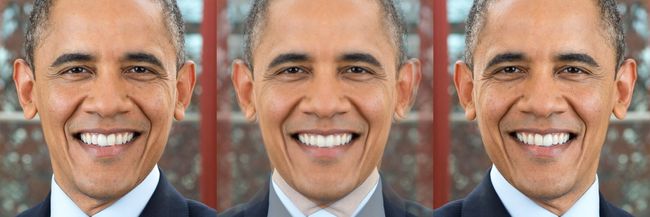

还有一个有趣的实验是,将Obama左右镜像的两张图像进行平均之后,得到一张对称脸,如下图:

Figure 10 : President Obama made symmetric (center) by averaging his image (left) with its mirror reflection (right).

完整代码:

#!/usr/bin/env python

# Copyright (c) 2016 Satya Mallick