TensorRT-5.1.5.0-SSD

文档:

https://docs.nvidia.com/deeplearning/sdk/tensorrt-archived/tensorrt-513

https://docs.nvidia.com/deeplearning/sdk/tensorrt-api/c_api/index.html

安装:

https://docs.nvidia.com/deeplearning/sdk/tensorrt-install-guide/index.html

https://docs.nvidia.com/deeplearning/sdk/tensorrt-install-guide/index.html#installing-tar

export CUDA_HOME=/usr/local/cuda

export PATH=/usr/local/cuda/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/boyun/NVIDIA/TensorRT-5.1.5.0/libsudo ./cuda_9.0.176_384.81_linux.run

sudo ./cuda_9.0.176.1_linux.run

sudo ./cuda_9.0.176.2_linux.run

sudo ./cuda_9.0.176.3_linux.run

sudo ./cuda_9.0.176.4_linux.run

cp cudnn-9.0-linux-x64-v7.5.1.10.solitairetheme8 cudnn-9.0-linux-x64-v7.5.1.10.tgz

sudo cp cuda/include/cudnn.h /usr/local/cuda-9.0/include/

sudo cp cuda/lib64/libcudnn* /usr/local/cuda-9.0/lib64/

cd /usr/local/cuda-9.0/lib64/

sudo rm -rf libcudnn.so.7

sudo rm -rf libcudnn.so

sudo ln -s libcudnn.so.7.5.1 libcudnn.so.7

sudo ln -s libcudnn.so.7 libcudnn.so

sudo gedit ~/.bashrc

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/boyun/NVIDIA/TensorRT-5.1.5.0/lib

cd /home/boyun/NVIDIA/TensorRT-5.1.5.0/python

sudo pip install tensorrt-5.1.5.0-cp27-none-linux_x86_64.whl

一些插件,比如使用TensorFlow的时候,所以我们都安装上:

cd /home/boyun/NVIDIA/TensorRT-5.1.5.0/uff

sudo pip install uff-0.6.3-py2.py3-none-any.whl

cd /home/boyun/NVIDIA/TensorRT-5.1.5.0/graphsurgeon

sudo pip install graphsurgeon-0.4.1-py2.py3-none-any.whl

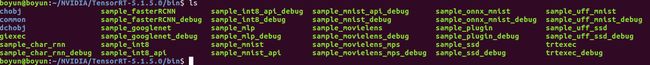

测试安装:

import tensorrt编译:

cd /home/boyun/NVIDIA/TensorRT-5.1.5.0/samples/

make -20

cd /home/boyun/NVIDIA/TensorRT-5.1.5.0/bin/

跑例子

./sample_googlenet

&&&& RUNNING TensorRT.sample_googlenet # ./sample_googlenet

[I] Building and running a GPU inference engine for GoogleNet

[I] Ran ./sample_googlenet with:

[I] Input(s): data

[I] Output(s): prob

&&&& PASSED TensorRT.sample_googlenet # ./sample_googlenet

测试时间

./trtexec

&&&& RUNNING TensorRT.trtexec # ./trtexec

Mandatory params:

--deploy= Caffe deploy file

OR --uff= UFF file

OR --onnx= ONNX Model file

OR --loadEngine= Load a saved engine

Mandatory params for UFF:

--uffInput=,C,H,W Input blob name and its dimensions for UFF parser (can be specified multiple times)

--output= Output blob name (can be specified multiple times)

Mandatory params for Caffe:

--output= Output blob name (can be specified multiple times)

Optional params:

--model= Caffe model file (default = no model, random weights used)

--batch=N Set batch size (default = 1)

--device=N Set cuda device to N (default = 0)

--iterations=N Run N iterations (default = 10)

--avgRuns=N Set avgRuns to N - perf is measured as an average of avgRuns (default=10)

--percentile=P For each iteration, report the percentile time at P percentage (0<=P<=100, with 0 representing min, and 100 representing max; default = 99.0%)

--workspace=N Set workspace size in megabytes (default = 16)

--safe Only test the functionality available in safety restricted flows.

--fp16 Run in fp16 mode (default = false). Permits 16-bit kernels

--int8 Run in int8 mode (default = false). Currently no support for ONNX model.

--verbose Use verbose logging (default = false)

--saveEngine= Save a serialized engine to file.

--loadEngine= Load a serialized engine from file.

--calib= Read INT8 calibration cache file. Currently no support for ONNX model.

--useDLACore=N Specify a DLA engine for layers that support DLA. Value can range from 0 to n-1, where n is the number of DLA engines on the platform.

--allowGPUFallback If --useDLACore flag is present and if a layer can't run on DLA, then run on GPU.

--useSpinWait Actively wait for work completion. This option may decrease multi-process synchronization time at the cost of additional CPU usage. (default = false)

--dumpOutput Dump outputs at end of test.

-h, --help Print usage

&&&& FAILED TensorRT.trtexec # ./trtexec

测试时间程序:请使用绝对路径

./trtexec --deploy=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.prototxt --output=prob --model=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.caffemodel

&&&& RUNNING TensorRT.trtexec # ./trtexec --deploy=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.prototxt --output=prob --model=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.caffemodel

[I] deploy: /home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.prototxt

[I] output: prob

[I] model: /home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.caffemodel

[I] Input "data": 3x224x224

[I] Output "prob": 1000x1x1

[I] Average over 10 runs is 1.42812 ms (host walltime is 1.55343 ms, 99% percentile time is 1.43962).

[I] Average over 10 runs is 1.42616 ms (host walltime is 1.51039 ms, 99% percentile time is 1.43974).

[I] Average over 10 runs is 1.42445 ms (host walltime is 1.60433 ms, 99% percentile time is 1.43638).

[I] Average over 10 runs is 1.42804 ms (host walltime is 1.5044 ms, 99% percentile time is 1.43565).

[I] Average over 10 runs is 1.42922 ms (host walltime is 1.49007 ms, 99% percentile time is 1.43565).

[I] Average over 10 runs is 1.42908 ms (host walltime is 1.59964 ms, 99% percentile time is 1.44486).

[I] Average over 10 runs is 1.43004 ms (host walltime is 1.47835 ms, 99% percentile time is 1.43974).

[I] Average over 10 runs is 1.4258 ms (host walltime is 1.60077 ms, 99% percentile time is 1.43667).

[I] Average over 10 runs is 1.42793 ms (host walltime is 1.48041 ms, 99% percentile time is 1.43872).

[I] Average over 10 runs is 1.4263 ms (host walltime is 1.618 ms, 99% percentile time is 1.43155).

&&&& PASSED TensorRT.trtexec # ./trtexec --deploy=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.prototxt --output=prob --model=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.caffemodel

将推理出的TensorRT的模型保存

mkdir saveEngine

./trtexec --deploy=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.prototxt --output=prob --model=/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/googlenet/googlenet.caffemodel --saveEngine=/home/boyun/NVIDIA/TensorRT-5.1.5.0/saveEngine/googlenet

注释:

sampleGoogleNet.cpp:

/*

* Copyright 1993-2019 NVIDIA Corporation. All rights reserved.

*

* NOTICE TO LICENSEE:

*

* This source code and/or documentation ("Licensed Deliverables") are

* subject to NVIDIA intellectual property rights under U.S. and

* international Copyright laws.

*

* These Licensed Deliverables contained herein is PROPRIETARY and

* CONFIDENTIAL to NVIDIA and is being provided under the terms and

* conditions of a form of NVIDIA software license agreement by and

* between NVIDIA and Licensee ("License Agreement") or electronically

* accepted by Licensee. Notwithstanding any terms or conditions to

* the contrary in the License Agreement, reproduction or disclosure

* of the Licensed Deliverables to any third party without the express

* written consent of NVIDIA is prohibited.

*

* NOTWITHSTANDING ANY TERMS OR CONDITIONS TO THE CONTRARY IN THE

* LICENSE AGREEMENT, NVIDIA MAKES NO REPRESENTATION ABOUT THE

* SUITABILITY OF THESE LICENSED DELIVERABLES FOR ANY PURPOSE. IT IS

* PROVIDED "AS IS" WITHOUT EXPRESS OR IMPLIED WARRANTY OF ANY KIND.

* NVIDIA DISCLAIMS ALL WARRANTIES WITH REGARD TO THESE LICENSED

* DELIVERABLES, INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY,

* NONINFRINGEMENT, AND FITNESS FOR A PARTICULAR PURPOSE.

* NOTWITHSTANDING ANY TERMS OR CONDITIONS TO THE CONTRARY IN THE

* LICENSE AGREEMENT, IN NO EVENT SHALL NVIDIA BE LIABLE FOR ANY

* SPECIAL, INDIRECT, INCIDENTAL, OR CONSEQUENTIAL DAMAGES, OR ANY

* DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS,

* WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS

* ACTION, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE

* OF THESE LICENSED DELIVERABLES.

*

* U.S. Government End Users. These Licensed Deliverables are a

* "commercial item" as that term is defined at 48 C.F.R. 2.101 (OCT

* 1995), consisting of "commercial computer software" and "commercial

* computer software documentation" as such terms are used in 48

* C.F.R. 12.212 (SEPT 1995) and is provided to the U.S. Government

* only as a commercial end item. Consistent with 48 C.F.R.12.212 and

* 48 C.F.R. 227.7202-1 through 227.7202-4 (JUNE 1995), all

* U.S. Government End Users acquire the Licensed Deliverables with

* only those rights set forth herein.

*

* Any use of the Licensed Deliverables in individual and commercial

* software must include, in the user documentation and internal

* comments to the code, the above Disclaimer and U.S. Government End

* Users Notice.

*/

//!

//! sampleGoogleNet.cpp

//! This file contains the implementation of the GoogleNet sample. It creates the network using

//! the GoogleNet caffe model.

//! It can be run with the following command line:

//! Command: ./sample_googlenet [-h or --help] [-d=/path/to/data/dir or --datadir=/path/to/data/dir]

//!

#include "argsParser.h"

#include "buffers.h"

#include "logger.h"

#include "common.h"

#include "NvCaffeParser.h"

#include "NvInfer.h"

#include

#include

#include

#include

#include

//yangninghua

#include "time.h"

const std::string gSampleName = "TensorRT.sample_googlenet";

//!

//! \brief The SampleGoogleNet class implements the GoogleNet sample

//!

//! \details It creates the network using a caffe model

//!

class SampleGoogleNet

{

template

using SampleUniquePtr = std::unique_ptr;

public:

SampleGoogleNet(const samplesCommon::CaffeSampleParams& params)

: mParams(params)

{

}

//!

//! \brief Function builds the network engine

//!

//构建网络引擎

bool build();

//!

//! \brief This function runs the TensorRT inference engine for this sample

//!

//运行TensorRT推理引擎

bool infer();

//!

//! \brief This function can be used to clean up any state created in the sample class

//!

//清空状态

bool teardown();

samplesCommon::CaffeSampleParams mParams;

private:

//用于运行网络的TensorRT引擎

std::shared_ptr mEngine = nullptr; //!< The TensorRT engine used to run the network

//!

//! \brief This function parses a Caffe model for GoogleNet and creates a TensorRT network

//!

//此功能解析GoogleNet的Caffe模型并创建TensorRT网络

void constructNetwork(SampleUniquePtr& builder, SampleUniquePtr& network, SampleUniquePtr& parser);

};

//!

//! \brief This function creates the network, configures the builder and creates the network engine

//!

//! \details This function creates the GoogleNet network by parsing the caffe model and builds

//! the engine that will be used to run GoogleNet (mEngine)

//!

//! \return Returns true if the engine was created successfully and false otherwise

//!

bool SampleGoogleNet::build()

{

//一个名为的全局TensorRT API方法 createInferBuilder(gLogger) 用于创建类型的对象 IBuilder

//创建IBuilder同iLogger作为输入参数

auto builder = SampleUniquePtr(nvinfer1::createInferBuilder(gLogger.getTRTLogger()));

if (!builder)

return false;

//一种叫做的方法 createNetwork 为iBuilder定义用于创建类型的对象 iNetworkDefinition'

//createNetwork()用于创建网络

auto network = SampleUniquePtr(builder->createNetwork());

if (!network)

return false;

//创建一个可用的解析器(Caffe,ONNX或UFF)

//ONNX: auto parser = nvonnxparser::createParser(*network, gLogger);

//Caffe: auto parser = nvcaffeparser1::createCaffeParser();

//UFF: auto parser = nvuffparser::createUffParser();

auto parser = SampleUniquePtr(nvcaffeparser1::createCaffeParser());

if (!parser)

return false;

//加载caffe模型

//解析模型文件

constructNetwork(builder, network, parser);

//一种叫做的方法buildCudaEngine()的IBuilder被调用来创建一个对象ICudaEngine类型

//创建TensorRT引擎

//可以选择将引擎序列化并转储到文件中。

mEngine = std::shared_ptr(builder->buildCudaEngine(*network), samplesCommon::InferDeleter());

if (!mEngine)

return false;

return true;

}

//!

//! \brief This function uses a caffe parser to create the googlenet Network and marks the

//! output layers

//!

//! \param network Pointer to the network that will be populated with the googlenet network

//!

//! \param builder Pointer to the engine builder

//!

void SampleGoogleNet::constructNetwork(SampleUniquePtr& builder, SampleUniquePtr& network, SampleUniquePtr& parser)

{

//对象iParser调用parse方法读取模型文件并填充TensorRT网络

//params.dataDirs.push_back("data/googlenet/");

//params.dataDirs.push_back("data/samples/googlenet/");

//params.prototxtFileName = "googlenet.prototxt";

//params.weightsFileName = "googlenet.caffemodel";

const nvcaffeparser1::IBlobNameToTensor* blobNameToTensor = parser->parse(

locateFile(mParams.prototxtFileName, mParams.dataDirs).c_str(),

locateFile(mParams.weightsFileName, mParams.dataDirs).c_str(),

*network,

nvinfer1::DataType::kFLOAT);

//params.outputTensorNames.push_back("prob");

for (auto& s : mParams.outputTensorNames)

network->markOutput(*blobNameToTensor->find(s.c_str()));

//params.batchSize = 4;

//params.dlaCore = args.useDLACore;

builder->setMaxBatchSize(mParams.batchSize);

builder->setMaxWorkspaceSize(16_MB);

//builder->setFp16Mode(true);

//builder->setInt8Mode(true);

samplesCommon::enableDLA(builder.get(), mParams.dlaCore);

}

//!

//! \brief This function runs the TensorRT inference engine for this sample

//!

//! \details This function is the main execution function of the sample. It allocates the buffer,

//! sets inputs and executes the engine.

//!

bool SampleGoogleNet::infer()

{

// Create RAII buffer manager object

samplesCommon::BufferManager buffers(mEngine, mParams.batchSize);

//IExecutionContext用于执行推理引擎

auto context = SampleUniquePtr(mEngine->createExecutionContext());

if (!context)

return false;

// Fetch host buffers and set host input buffers to all zeros

//获取主机缓冲区并将主机输入缓冲区设置为全零

for (auto& input : mParams.inputTensorNames)

{

const auto bufferSize = buffers.size(input);

if (bufferSize == samplesCommon::BufferManager::kINVALID_SIZE_VALUE)

{

gLogError << "input tensor missing: " << input << "\n";

exit(EXIT_FAILURE);

}

memset(buffers.getHostBuffer(input), 0, bufferSize);

}

// Memcpy from host input buffers to device input buffers

//Memcpy从主机输入缓冲区到设备输入缓冲区

buffers.copyInputToDevice();

clock_t start, finish;

double duration;

start = clock();

bool status = context->execute(mParams.batchSize, buffers.getDeviceBindings().data());

if (!status)

return false;

finish = clock();

duration = (double)(finish - start) / CLOCKS_PER_SEC;

cout<<"前向推理时间"<] [--useDLACore=]\n";

std::cout << "--help Display help information\n";

std::cout << "--datadir Specify path to a data directory, overriding the default. This option can be used multiple times to add multiple directories. If no data directories are given, the default is to use data/samples/googlenet/ and data/googlenet/" << std::endl;

std::cout << "--useDLACore=N Specify a DLA engine for layers that support DLA. Value can range from 0 to n-1, where n is the number of DLA engines on the platform." << std::endl;

}

int main(int argc, char** argv)

{

samplesCommon::Args args;

bool argsOK = samplesCommon::parseArgs(args, argc, argv);

if (args.help)

{

printHelpInfo();

return EXIT_SUCCESS;

}

if (!argsOK)

{

gLogError << "Invalid arguments" << std::endl;

printHelpInfo();

return EXIT_FAILURE;

}

auto sampleTest = gLogger.defineTest(gSampleName, argc, const_cast(argv));

//函数reportTestEnd与其对应

//&&&& RUNNING TensorRT.sample_googlenet # ./sample_googlenet

gLogger.reportTestStart(sampleTest);

//参数初始化

samplesCommon::CaffeSampleParams params = initializeSampleParams(args);

SampleGoogleNet sample(params);

gLogInfo << "Building and running a GPU inference engine for GoogleNet" << std::endl;

if (!sample.build())

{

return gLogger.reportFail(sampleTest);

}

if (!sample.infer())

{

return gLogger.reportFail(sampleTest);

}

if (!sample.teardown())

{

return gLogger.reportFail(sampleTest);

}

//[I] Ran ./sample_googlenet with:

gLogInfo << "Ran " << argv[0] << " with: " << std::endl;

std::stringstream ss;

//可能有多个输入,进行遍历

//[I] Input(s): data

ss << "Input(s): ";

for (auto& input : sample.mParams.inputTensorNames)

ss << input << " ";

gLogInfo << ss.str() << std::endl;

ss.str(std::string());

//可能有多个输出,进行遍历

//[I] Output(s): prob

ss << "Output(s): ";

for (auto& output : sample.mParams.outputTensorNames)

ss << output << " ";

gLogInfo << ss.str() << std::endl;

//reportTestEnd函数在reportPass中被调用

//&&&& PASSED TensorRT.sample_googlenet # ./sample_googlenet

return gLogger.reportPass(sampleTest);

}

接着修改SSD的例子:

https://github.com/NVIDIA/TensorRT/tree/release/5.1/samples/opensource/sampleSSD

下载SSD的权重和deploy.prototxt还有labelmap_voc.prototxt

https://drive.google.com/file/d/0BzKzrI_SkD1_WVVTSmQxU0dVRzA/view

里面有VGG_VOC0712_SSD_300x300_iter_120000.caffemodel和deploy.prototxt

https://github.com/intel/caffe/blob/master/data/VOC0712/labelmap_voc.prototxt

可以找到labelmap_voc.prototxt

mv deploy.prototxt /data/ssd/ssd.prototxt

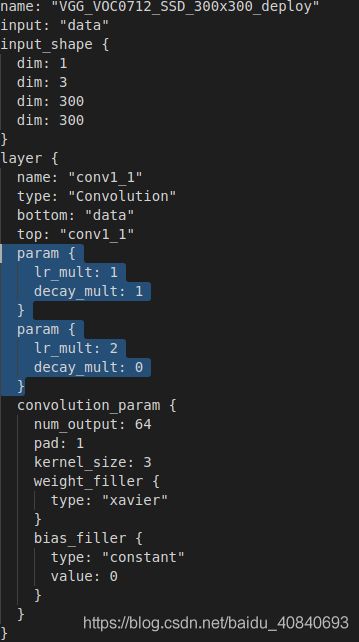

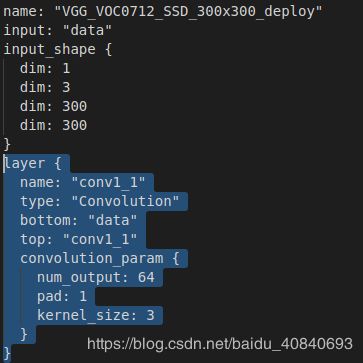

然后修改这个ssd.prototxt

首先:param,weight_filler,bias_filler参数全部不要(只做推理,要这没用)但是不去也行,我懒了抱歉

更改为:

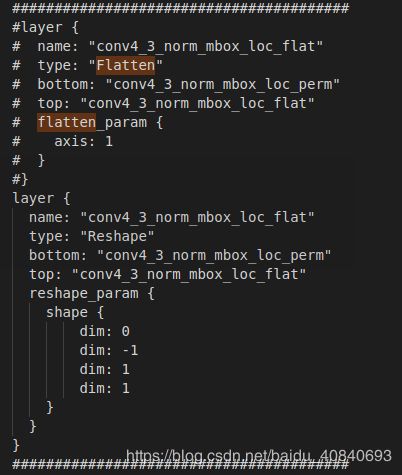

所有的Flatten改为Reshape这样

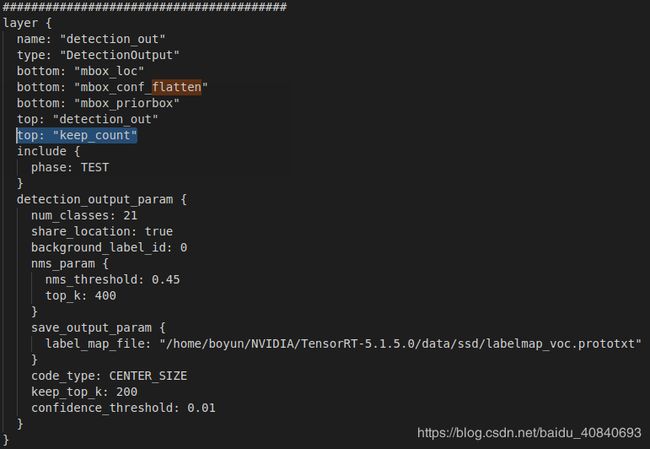

Update the detection_out layer to add the keep_count output as expected by the TensorRT DetectionOutput Plugin. top: "keep_count"

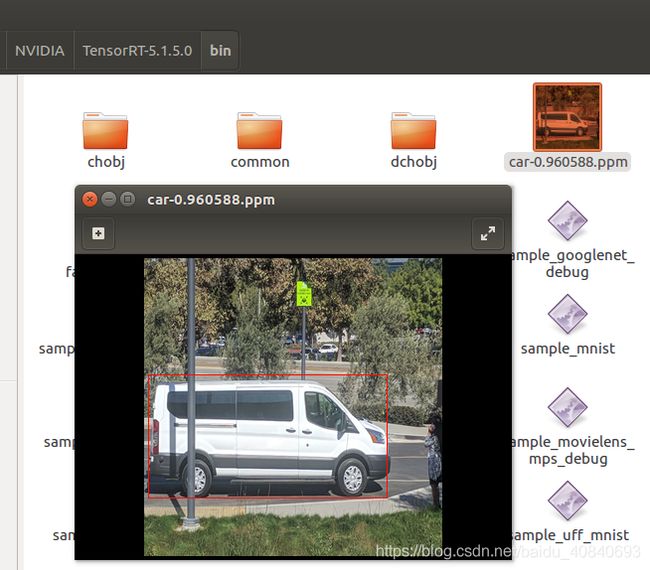

&&&& RUNNING TensorRT.sample_ssd # ./sample_ssd

[I] Begin parsing model...

[I] FP32 mode running...

[I] End parsing model...

[I] Begin building engine...

[I] End building engine...

[I] *** deserializing

[I] Image name:../../../data/ssd/bus.ppm, Label: car, confidence: 96.0588 xmin: 4.14485 ymin: 117.443 xmax: 244.102 ymax: 241.829

&&&& PASSED TensorRT.sample_ssd # ./sample_ssd生成的图保存在:

附上修改后的ssd.prototxt

name: "VGG_VOC0712_SSD_300x300_deploy"

input: "data"

input_shape {

dim: 1

dim: 3

dim: 300

dim: 300

}

layer {

name: "conv1_1"

type: "Convolution"

bottom: "data"

top: "conv1_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 64

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu1_1"

type: "ReLU"

bottom: "conv1_1"

top: "conv1_1"

}

layer {

name: "conv1_2"

type: "Convolution"

bottom: "conv1_1"

top: "conv1_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 64

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu1_2"

type: "ReLU"

bottom: "conv1_2"

top: "conv1_2"

}

layer {

name: "pool1"

type: "Pooling"

bottom: "conv1_2"

top: "pool1"

pooling_param {

pool: MAX

kernel_size: 2

stride: 2

}

}

layer {

name: "conv2_1"

type: "Convolution"

bottom: "pool1"

top: "conv2_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 128

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu2_1"

type: "ReLU"

bottom: "conv2_1"

top: "conv2_1"

}

layer {

name: "conv2_2"

type: "Convolution"

bottom: "conv2_1"

top: "conv2_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 128

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu2_2"

type: "ReLU"

bottom: "conv2_2"

top: "conv2_2"

}

layer {

name: "pool2"

type: "Pooling"

bottom: "conv2_2"

top: "pool2"

pooling_param {

pool: MAX

kernel_size: 2

stride: 2

}

}

layer {

name: "conv3_1"

type: "Convolution"

bottom: "pool2"

top: "conv3_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3_1"

type: "ReLU"

bottom: "conv3_1"

top: "conv3_1"

}

layer {

name: "conv3_2"

type: "Convolution"

bottom: "conv3_1"

top: "conv3_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3_2"

type: "ReLU"

bottom: "conv3_2"

top: "conv3_2"

}

layer {

name: "conv3_3"

type: "Convolution"

bottom: "conv3_2"

top: "conv3_3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu3_3"

type: "ReLU"

bottom: "conv3_3"

top: "conv3_3"

}

layer {

name: "pool3"

type: "Pooling"

bottom: "conv3_3"

top: "pool3"

pooling_param {

pool: MAX

kernel_size: 2

stride: 2

}

}

layer {

name: "conv4_1"

type: "Convolution"

bottom: "pool3"

top: "conv4_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 512

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu4_1"

type: "ReLU"

bottom: "conv4_1"

top: "conv4_1"

}

layer {

name: "conv4_2"

type: "Convolution"

bottom: "conv4_1"

top: "conv4_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 512

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu4_2"

type: "ReLU"

bottom: "conv4_2"

top: "conv4_2"

}

layer {

name: "conv4_3"

type: "Convolution"

bottom: "conv4_2"

top: "conv4_3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 512

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu4_3"

type: "ReLU"

bottom: "conv4_3"

top: "conv4_3"

}

layer {

name: "pool4"

type: "Pooling"

bottom: "conv4_3"

top: "pool4"

pooling_param {

pool: MAX

kernel_size: 2

stride: 2

}

}

layer {

name: "conv5_1"

type: "Convolution"

bottom: "pool4"

top: "conv5_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 512

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

dilation: 1

}

}

layer {

name: "relu5_1"

type: "ReLU"

bottom: "conv5_1"

top: "conv5_1"

}

layer {

name: "conv5_2"

type: "Convolution"

bottom: "conv5_1"

top: "conv5_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 512

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

dilation: 1

}

}

layer {

name: "relu5_2"

type: "ReLU"

bottom: "conv5_2"

top: "conv5_2"

}

layer {

name: "conv5_3"

type: "Convolution"

bottom: "conv5_2"

top: "conv5_3"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 512

pad: 1

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

dilation: 1

}

}

layer {

name: "relu5_3"

type: "ReLU"

bottom: "conv5_3"

top: "conv5_3"

}

layer {

name: "pool5"

type: "Pooling"

bottom: "conv5_3"

top: "pool5"

pooling_param {

pool: MAX

kernel_size: 3

stride: 1

pad: 1

}

}

layer {

name: "fc6"

type: "Convolution"

bottom: "pool5"

top: "fc6"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 1024

pad: 6

kernel_size: 3

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

dilation: 6

}

}

layer {

name: "relu6"

type: "ReLU"

bottom: "fc6"

top: "fc6"

}

layer {

name: "fc7"

type: "Convolution"

bottom: "fc6"

top: "fc7"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 1024

kernel_size: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "relu7"

type: "ReLU"

bottom: "fc7"

top: "fc7"

}

layer {

name: "conv6_1"

type: "Convolution"

bottom: "fc7"

top: "conv6_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 0

kernel_size: 1

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv6_1_relu"

type: "ReLU"

bottom: "conv6_1"

top: "conv6_1"

}

layer {

name: "conv6_2"

type: "Convolution"

bottom: "conv6_1"

top: "conv6_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 512

pad: 1

kernel_size: 3

stride: 2

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv6_2_relu"

type: "ReLU"

bottom: "conv6_2"

top: "conv6_2"

}

layer {

name: "conv7_1"

type: "Convolution"

bottom: "conv6_2"

top: "conv7_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 128

pad: 0

kernel_size: 1

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv7_1_relu"

type: "ReLU"

bottom: "conv7_1"

top: "conv7_1"

}

layer {

name: "conv7_2"

type: "Convolution"

bottom: "conv7_1"

top: "conv7_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 1

kernel_size: 3

stride: 2

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv7_2_relu"

type: "ReLU"

bottom: "conv7_2"

top: "conv7_2"

}

layer {

name: "conv8_1"

type: "Convolution"

bottom: "conv7_2"

top: "conv8_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 128

pad: 0

kernel_size: 1

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv8_1_relu"

type: "ReLU"

bottom: "conv8_1"

top: "conv8_1"

}

layer {

name: "conv8_2"

type: "Convolution"

bottom: "conv8_1"

top: "conv8_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 0

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv8_2_relu"

type: "ReLU"

bottom: "conv8_2"

top: "conv8_2"

}

layer {

name: "conv9_1"

type: "Convolution"

bottom: "conv8_2"

top: "conv9_1"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 128

pad: 0

kernel_size: 1

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv9_1_relu"

type: "ReLU"

bottom: "conv9_1"

top: "conv9_1"

}

layer {

name: "conv9_2"

type: "Convolution"

bottom: "conv9_1"

top: "conv9_2"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 256

pad: 0

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv9_2_relu"

type: "ReLU"

bottom: "conv9_2"

top: "conv9_2"

}

layer {

name: "conv4_3_norm"

type: "Normalize"

bottom: "conv4_3"

top: "conv4_3_norm"

norm_param {

across_spatial: false

scale_filler {

type: "constant"

value: 20

}

channel_shared: false

}

}

layer {

name: "conv4_3_norm_mbox_loc"

type: "Convolution"

bottom: "conv4_3_norm"

top: "conv4_3_norm_mbox_loc"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 16

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv4_3_norm_mbox_loc_perm"

type: "Permute"

bottom: "conv4_3_norm_mbox_loc"

top: "conv4_3_norm_mbox_loc_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv4_3_norm_mbox_loc_flat"

# type: "Flatten"

# bottom: "conv4_3_norm_mbox_loc_perm"

# top: "conv4_3_norm_mbox_loc_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv4_3_norm_mbox_loc_flat"

type: "Reshape"

bottom: "conv4_3_norm_mbox_loc_perm"

top: "conv4_3_norm_mbox_loc_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv4_3_norm_mbox_conf"

type: "Convolution"

bottom: "conv4_3_norm"

top: "conv4_3_norm_mbox_conf"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 84

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv4_3_norm_mbox_conf_perm"

type: "Permute"

bottom: "conv4_3_norm_mbox_conf"

top: "conv4_3_norm_mbox_conf_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv4_3_norm_mbox_conf_flat"

# type: "Flatten"

# bottom: "conv4_3_norm_mbox_conf_perm"

# top: "conv4_3_norm_mbox_conf_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv4_3_norm_mbox_conf_flat"

type: "Reshape"

bottom: "conv4_3_norm_mbox_conf_perm"

top: "conv4_3_norm_mbox_conf_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv4_3_norm_mbox_priorbox"

type: "PriorBox"

bottom: "conv4_3_norm"

bottom: "data"

top: "conv4_3_norm_mbox_priorbox"

prior_box_param {

min_size: 30.0

max_size: 60.0

aspect_ratio: 2

flip: true

clip: false

variance: 0.1

variance: 0.1

variance: 0.2

variance: 0.2

step: 8

offset: 0.5

}

}

layer {

name: "fc7_mbox_loc"

type: "Convolution"

bottom: "fc7"

top: "fc7_mbox_loc"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 24

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "fc7_mbox_loc_perm"

type: "Permute"

bottom: "fc7_mbox_loc"

top: "fc7_mbox_loc_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "fc7_mbox_loc_flat"

# type: "Flatten"

# bottom: "fc7_mbox_loc_perm"

# top: "fc7_mbox_loc_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "fc7_mbox_loc_flat"

type: "Reshape"

bottom: "fc7_mbox_loc_perm"

top: "fc7_mbox_loc_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "fc7_mbox_conf"

type: "Convolution"

bottom: "fc7"

top: "fc7_mbox_conf"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 126

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "fc7_mbox_conf_perm"

type: "Permute"

bottom: "fc7_mbox_conf"

top: "fc7_mbox_conf_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "fc7_mbox_conf_flat"

# type: "Flatten"

# bottom: "fc7_mbox_conf_perm"

# top: "fc7_mbox_conf_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "fc7_mbox_conf_flat"

type: "Reshape"

bottom: "fc7_mbox_conf_perm"

top: "fc7_mbox_conf_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "fc7_mbox_priorbox"

type: "PriorBox"

bottom: "fc7"

bottom: "data"

top: "fc7_mbox_priorbox"

prior_box_param {

min_size: 60.0

max_size: 111.0

aspect_ratio: 2

aspect_ratio: 3

flip: true

clip: false

variance: 0.1

variance: 0.1

variance: 0.2

variance: 0.2

step: 16

offset: 0.5

}

}

layer {

name: "conv6_2_mbox_loc"

type: "Convolution"

bottom: "conv6_2"

top: "conv6_2_mbox_loc"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 24

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv6_2_mbox_loc_perm"

type: "Permute"

bottom: "conv6_2_mbox_loc"

top: "conv6_2_mbox_loc_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv6_2_mbox_loc_flat"

# type: "Flatten"

# bottom: "conv6_2_mbox_loc_perm"

# top: "conv6_2_mbox_loc_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv6_2_mbox_loc_flat"

type: "Reshape"

bottom: "conv6_2_mbox_loc_perm"

top: "conv6_2_mbox_loc_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv6_2_mbox_conf"

type: "Convolution"

bottom: "conv6_2"

top: "conv6_2_mbox_conf"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 126

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv6_2_mbox_conf_perm"

type: "Permute"

bottom: "conv6_2_mbox_conf"

top: "conv6_2_mbox_conf_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv6_2_mbox_conf_flat"

# type: "Flatten"

# bottom: "conv6_2_mbox_conf_perm"

# top: "conv6_2_mbox_conf_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv6_2_mbox_conf_flat"

type: "Reshape"

bottom: "conv6_2_mbox_conf_perm"

top: "conv6_2_mbox_conf_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv6_2_mbox_priorbox"

type: "PriorBox"

bottom: "conv6_2"

bottom: "data"

top: "conv6_2_mbox_priorbox"

prior_box_param {

min_size: 111.0

max_size: 162.0

aspect_ratio: 2

aspect_ratio: 3

flip: true

clip: false

variance: 0.1

variance: 0.1

variance: 0.2

variance: 0.2

step: 32

offset: 0.5

}

}

layer {

name: "conv7_2_mbox_loc"

type: "Convolution"

bottom: "conv7_2"

top: "conv7_2_mbox_loc"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 24

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv7_2_mbox_loc_perm"

type: "Permute"

bottom: "conv7_2_mbox_loc"

top: "conv7_2_mbox_loc_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv7_2_mbox_loc_flat"

# type: "Flatten"

# bottom: "conv7_2_mbox_loc_perm"

# top: "conv7_2_mbox_loc_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv7_2_mbox_loc_flat"

type: "Reshape"

bottom: "conv7_2_mbox_loc_perm"

top: "conv7_2_mbox_loc_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv7_2_mbox_conf"

type: "Convolution"

bottom: "conv7_2"

top: "conv7_2_mbox_conf"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 126

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv7_2_mbox_conf_perm"

type: "Permute"

bottom: "conv7_2_mbox_conf"

top: "conv7_2_mbox_conf_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv7_2_mbox_conf_flat"

# type: "Flatten"

# bottom: "conv7_2_mbox_conf_perm"

# top: "conv7_2_mbox_conf_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv7_2_mbox_conf_flat"

type: "Reshape"

bottom: "conv7_2_mbox_conf_perm"

top: "conv7_2_mbox_conf_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv7_2_mbox_priorbox"

type: "PriorBox"

bottom: "conv7_2"

bottom: "data"

top: "conv7_2_mbox_priorbox"

prior_box_param {

min_size: 162.0

max_size: 213.0

aspect_ratio: 2

aspect_ratio: 3

flip: true

clip: false

variance: 0.1

variance: 0.1

variance: 0.2

variance: 0.2

step: 64

offset: 0.5

}

}

layer {

name: "conv8_2_mbox_loc"

type: "Convolution"

bottom: "conv8_2"

top: "conv8_2_mbox_loc"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 16

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv8_2_mbox_loc_perm"

type: "Permute"

bottom: "conv8_2_mbox_loc"

top: "conv8_2_mbox_loc_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv8_2_mbox_loc_flat"

# type: "Flatten"

# bottom: "conv8_2_mbox_loc_perm"

# top: "conv8_2_mbox_loc_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv8_2_mbox_loc_flat"

type: "Reshape"

bottom: "conv8_2_mbox_loc_perm"

top: "conv8_2_mbox_loc_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv8_2_mbox_conf"

type: "Convolution"

bottom: "conv8_2"

top: "conv8_2_mbox_conf"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 84

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv8_2_mbox_conf_perm"

type: "Permute"

bottom: "conv8_2_mbox_conf"

top: "conv8_2_mbox_conf_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv8_2_mbox_conf_flat"

# type: "Flatten"

# bottom: "conv8_2_mbox_conf_perm"

# top: "conv8_2_mbox_conf_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv8_2_mbox_conf_flat"

type: "Reshape"

bottom: "conv8_2_mbox_conf_perm"

top: "conv8_2_mbox_conf_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv8_2_mbox_priorbox"

type: "PriorBox"

bottom: "conv8_2"

bottom: "data"

top: "conv8_2_mbox_priorbox"

prior_box_param {

min_size: 213.0

max_size: 264.0

aspect_ratio: 2

flip: true

clip: false

variance: 0.1

variance: 0.1

variance: 0.2

variance: 0.2

step: 100

offset: 0.5

}

}

layer {

name: "conv9_2_mbox_loc"

type: "Convolution"

bottom: "conv9_2"

top: "conv9_2_mbox_loc"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 16

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv9_2_mbox_loc_perm"

type: "Permute"

bottom: "conv9_2_mbox_loc"

top: "conv9_2_mbox_loc_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv9_2_mbox_loc_flat"

# type: "Flatten"

# bottom: "conv9_2_mbox_loc_perm"

# top: "conv9_2_mbox_loc_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv9_2_mbox_loc_flat"

type: "Reshape"

bottom: "conv9_2_mbox_loc_perm"

top: "conv9_2_mbox_loc_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv9_2_mbox_conf"

type: "Convolution"

bottom: "conv9_2"

top: "conv9_2_mbox_conf"

param {

lr_mult: 1

decay_mult: 1

}

param {

lr_mult: 2

decay_mult: 0

}

convolution_param {

num_output: 84

pad: 1

kernel_size: 3

stride: 1

weight_filler {

type: "xavier"

}

bias_filler {

type: "constant"

value: 0

}

}

}

layer {

name: "conv9_2_mbox_conf_perm"

type: "Permute"

bottom: "conv9_2_mbox_conf"

top: "conv9_2_mbox_conf_perm"

permute_param {

order: 0

order: 2

order: 3

order: 1

}

}

########################################

#layer {

# name: "conv9_2_mbox_conf_flat"

# type: "Flatten"

# bottom: "conv9_2_mbox_conf_perm"

# top: "conv9_2_mbox_conf_flat"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "conv9_2_mbox_conf_flat"

type: "Reshape"

bottom: "conv9_2_mbox_conf_perm"

top: "conv9_2_mbox_conf_flat"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "conv9_2_mbox_priorbox"

type: "PriorBox"

bottom: "conv9_2"

bottom: "data"

top: "conv9_2_mbox_priorbox"

prior_box_param {

min_size: 264.0

max_size: 315.0

aspect_ratio: 2

flip: true

clip: false

variance: 0.1

variance: 0.1

variance: 0.2

variance: 0.2

step: 300

offset: 0.5

}

}

layer {

name: "mbox_loc"

type: "Concat"

bottom: "conv4_3_norm_mbox_loc_flat"

bottom: "fc7_mbox_loc_flat"

bottom: "conv6_2_mbox_loc_flat"

bottom: "conv7_2_mbox_loc_flat"

bottom: "conv8_2_mbox_loc_flat"

bottom: "conv9_2_mbox_loc_flat"

top: "mbox_loc"

concat_param {

axis: 1

}

}

layer {

name: "mbox_conf"

type: "Concat"

bottom: "conv4_3_norm_mbox_conf_flat"

bottom: "fc7_mbox_conf_flat"

bottom: "conv6_2_mbox_conf_flat"

bottom: "conv7_2_mbox_conf_flat"

bottom: "conv8_2_mbox_conf_flat"

bottom: "conv9_2_mbox_conf_flat"

top: "mbox_conf"

concat_param {

axis: 1

}

}

layer {

name: "mbox_priorbox"

type: "Concat"

bottom: "conv4_3_norm_mbox_priorbox"

bottom: "fc7_mbox_priorbox"

bottom: "conv6_2_mbox_priorbox"

bottom: "conv7_2_mbox_priorbox"

bottom: "conv8_2_mbox_priorbox"

bottom: "conv9_2_mbox_priorbox"

top: "mbox_priorbox"

concat_param {

axis: 2

}

}

layer {

name: "mbox_conf_reshape"

type: "Reshape"

bottom: "mbox_conf"

top: "mbox_conf_reshape"

reshape_param {

shape {

dim: 0

dim: -1

dim: 21

}

}

}

layer {

name: "mbox_conf_softmax"

type: "Softmax"

bottom: "mbox_conf_reshape"

top: "mbox_conf_softmax"

softmax_param {

axis: 2

}

}

########################################

#layer {

# name: "mbox_conf_flatten"

# type: "Flatten"

# bottom: "mbox_conf_softmax"

# top: "mbox_conf_flatten"

# flatten_param {

# axis: 1

# }

#}

layer {

name: "mbox_conf_flatten"

type: "Reshape"

bottom: "mbox_conf_softmax"

top: "mbox_conf_flatten"

reshape_param {

shape {

dim: 0

dim: -1

dim: 1

dim: 1

}

}

}

########################################

layer {

name: "detection_out"

type: "DetectionOutput"

bottom: "mbox_loc"

bottom: "mbox_conf_flatten"

bottom: "mbox_priorbox"

top: "detection_out"

top: "keep_count"

include {

phase: TEST

}

detection_output_param {

num_classes: 21

share_location: true

background_label_id: 0

nms_param {

nms_threshold: 0.45

top_k: 400

}

save_output_param {

label_map_file: "/home/boyun/NVIDIA/TensorRT-5.1.5.0/data/ssd/labelmap_voc.prototxt"

}

code_type: CENTER_SIZE

keep_top_k: 200

confidence_threshold: 0.01

}

}

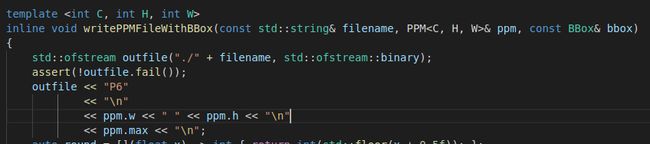

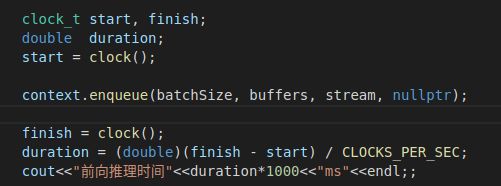

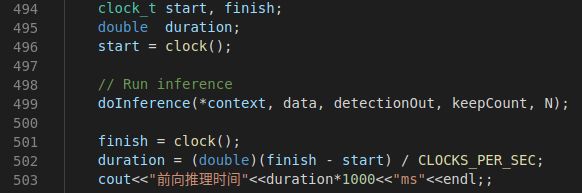

测试时间:

#include "time.h"

clock_t start, finish;

double duration;

start = clock();

context.enqueue(batchSize, buffers, stream, nullptr);

finish = clock();

duration = (double)(finish - start) / CLOCKS_PER_SEC;

cout<<"前向推理时间"<

&&&& RUNNING TensorRT.sample_ssd # ./sample_ssd

[I] Begin parsing model...

[I] FP32 mode running...

[I] End parsing model...

[I] Begin building engine...

[I] End building engine...

[I] *** deserializing

前向推理时间0.332ms

[I] Image name:../../../data/ssd/bus.ppm, Label: car, confidence: 96.0588 xmin: 4.14485 ymin: 117.443 xmax: 244.102 ymax: 241.829

&&&& PASSED TensorRT.sample_ssd # ./sample_ssd如果改变一个位置:

&&&& RUNNING TensorRT.sample_ssd # ./sample_ssd

[I] Begin parsing model...

[I] FP32 mode running...

[I] End parsing model...

[I] Begin building engine...

[I] End building engine...

[I] *** deserializing

前向推理时间11.626ms

[I] Image name:../../../data/ssd/bus.ppm, Label: car, confidence: 96.0587 xmin: 4.14484 ymin: 117.443 xmax: 244.102 ymax: 241.829

&&&& PASSED TensorRT.sample_ssd # ./sample_ssd我的显卡是GTX TiTAN X 12G不是Nvidia TiTan X 12G

所以1080ti可能比这个更快

caffe-cuda9.0-cudnn7.5

./build/tools/caffe time -model=/home/boyun/code/caffe-ssd/ssd/deploy.prototxt --weights=/home/boyun/code/caffe-ssd/ssd/VGG_VOC0712_SSD_300x300_iter_120000.caffemodel --iterations=200 -gpu 0I0714 13:52:09.150904 14202 caffe.cpp:412] Average Forward pass: 30.3141 ms.

I0714 13:52:09.150909 14202 caffe.cpp:414] Average Backward pass: 26.9677 ms.

I0714 13:52:09.150918 14202 caffe.cpp:416] Average Forward-Backward: 57.478 ms.

I0714 13:52:09.150923 14202 caffe.cpp:418] Total Time: 11495.6 ms.

I0714 13:52:09.150928 14202 caffe.cpp:419] *** Benchmark ends ***下载:

https://developer.nvidia.com/nvidia-tensorrt-5x-download

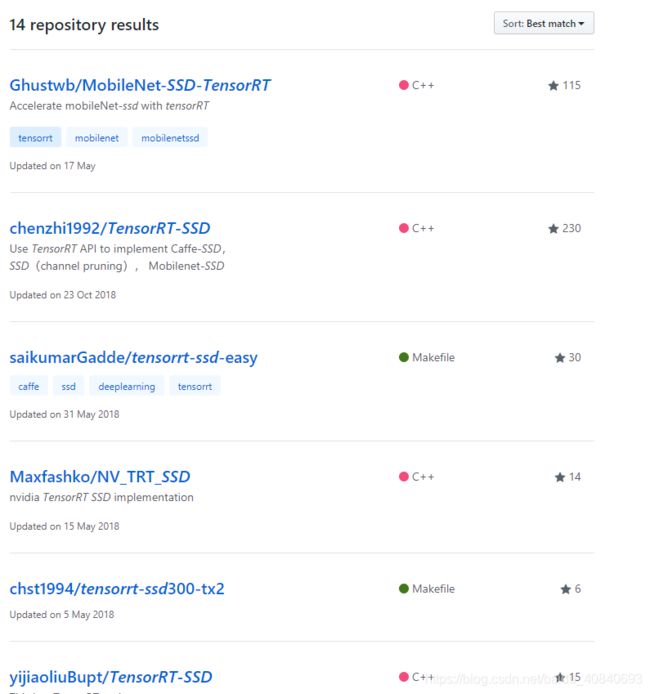

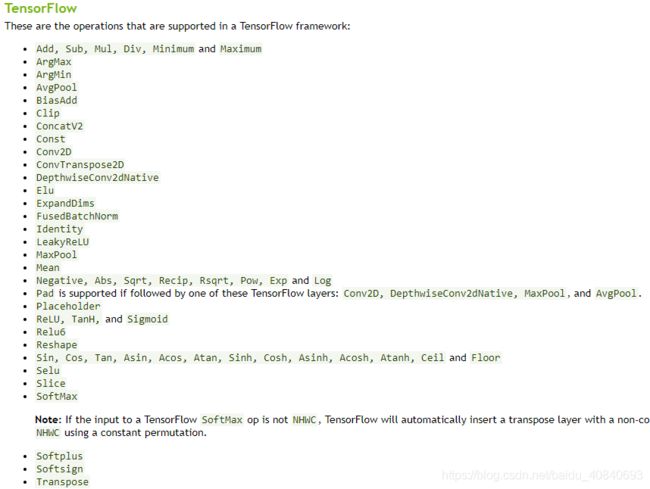

一些示例:

Caffe版yolov3+tensorRT:

https://www.jianshu.com/p/e78c5c321248?tdsourcetag=s_pcqq_aiomsg

https://github.com/C-H-D/tensorRT-Caffe

在Caffe中调用TensorRT提供的MNIST model

https://blog.csdn.net/fengbingchun/article/details/78606228

mobileNet-ssd使用tensorRT部署

https://blog.csdn.net/qq_17278169/article/details/82971983

TensorRT基于caffe模型加速MobileNet SSD

https://blog.csdn.net/qq_22764813/article/details/84544409

贤者之路, Caffe转TensorRT

https://blog.csdn.net/chanzhennan/article/details/87085754

caffe-ssd网络模型 tensorRT加速

http://www.pianshen.com/article/4373405601/

TensorRT 的 C++ API 使用详解

https://blog.csdn.net/u010552731/article/details/89501819

Use TensorRT API to implement Caffe-SSD, SSD(channel pruning), Mobilenet-SSD

https://github.com/chenzhi1992/TensorRT-SSD

TensorRT-Mobilenet-SSD can run 50fps on jetson tx2

https://github.com/Ghustwb/MobileNet-SSD-TensorRT

TensorRT 3.0 RC run SSD error in DetectionOutput layer

https://devtalk.nvidia.com/default/topic/1025153/gpu-accelerated-libraries/tensorrt-3-0-rc-run-ssd-error-in-detectionoutput-layer/post/5214393/#5214393

faster RCNN tensorRT代码

https://github.com/NVIDIA/TensorRT/tree/release/5.1/samples/opensource/sampleFasterRCNN

SSD tensorRT 代码

https://github.com/NVIDIA/TensorRT/tree/release/5.1/samples/opensource/sampleSSDgithub:TensorRT-SSD

https://github.com/onnx/onnx-tensorrt

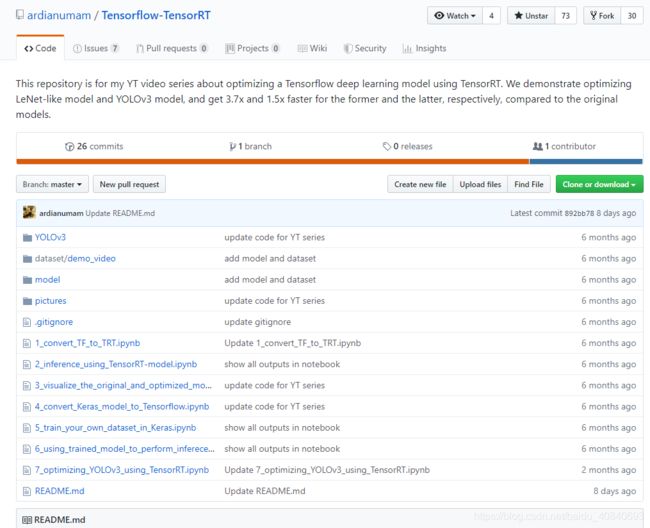

https://github.com/ardianumam/Tensorflow-TensorRT

视频:

https://www.youtube.com/watch?v=AIGOSz2tFP8&list=PLkRkKTC6HZMwdtzv3PYJanRtR6ilSCZ4f

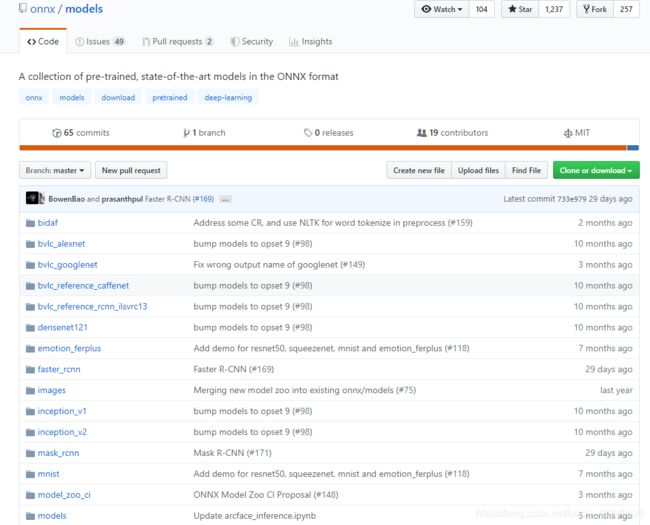

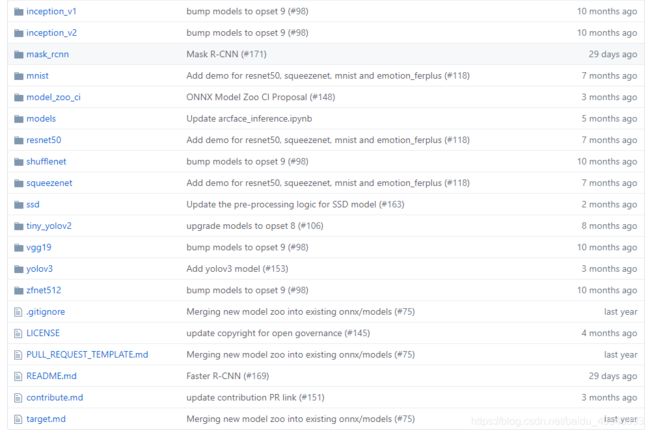

https://github.com/onnx/models

https://devblogs.nvidia.com/speed-up-inference-tensorrt/

How to Speed Up Deep Learning Inference Using TensorRT

https://developer.nvidia.com/tensorrt

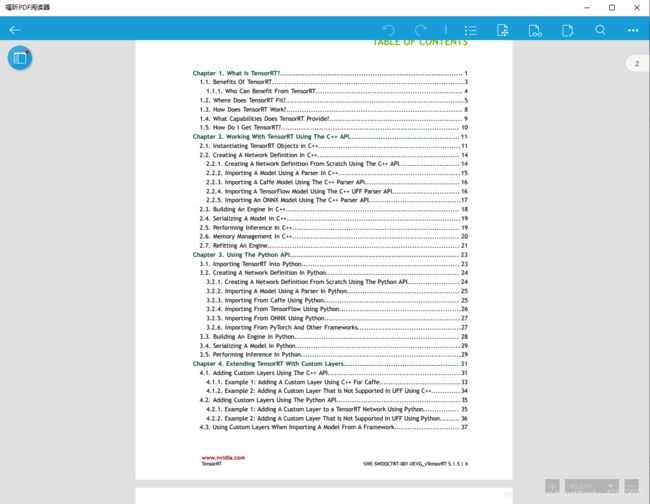

文档:

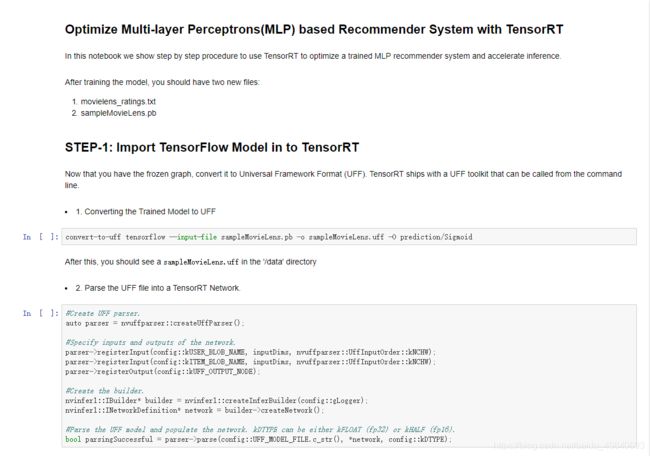

https://developer.download.nvidia.cn/compute/machine-learning/tensorrt/models/sampleMLP-notebook.html

https://docs.nvidia.com/deeplearning/sdk/pdf/TensorRT-Developer-Guide.pdf

https://docs.nvidia.com/deeplearning/sdk/tensorrt-archived/index.html

https://docs.nvidia.com/deeplearning/sdk/tensorrt-sample-support-guide/index.html#charRNN_sample