Raspberry Pi 4: Flame Detection with NCNN

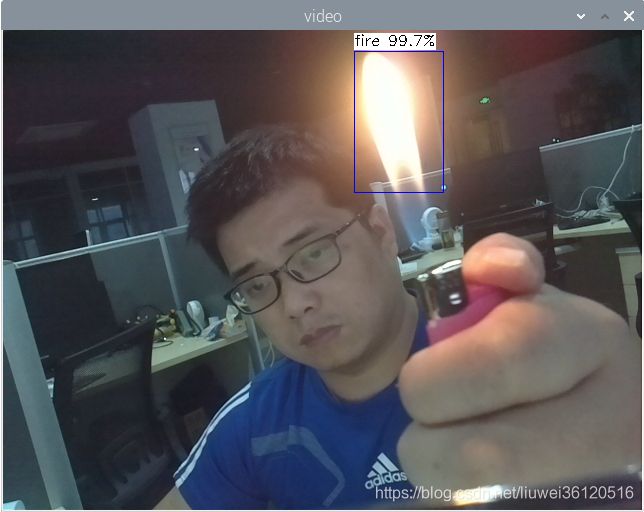

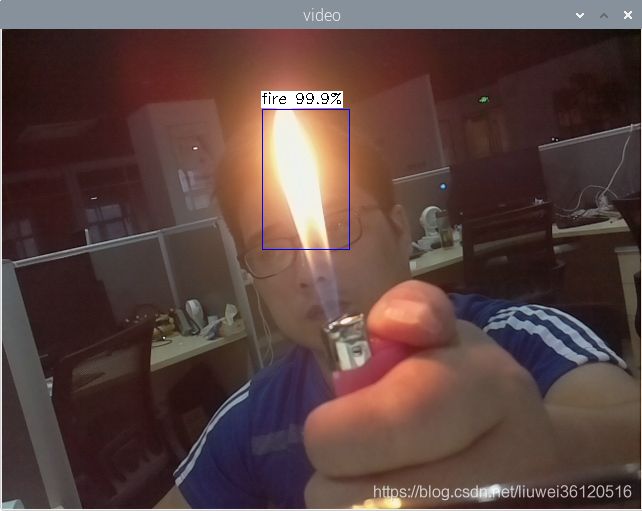

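In my two previous articles on flame detection, the results were not very good. To improve them, I collected more flame data and switched the network from yolov3-tiny to MobileNetV2-YOLOv3. Running inference on the Raspberry Pi with the NCNN framework, the results are much better than before, as shown in the images above.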

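Below are the steps I followed.

1. Flame dataset

Dataset links (Baidu Netdisk):

https://pan.baidu.com/s/1VypCAODfMvEexU-kDooSgw

Extraction code: feo2

Link: https://pan.baidu.com/s/1e32KPWI71PeFadUPmeEvHQ

Extraction code: ihsj

2. Training with darknet

If you need the cfg and model files used for training, contact me.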
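For training, darknet expects one label `.txt` file per image. Each line has the form `<class_id> <x_center> <y_center> <width> <height>`, and the four box values are normalized by the image width and height. The format of the flame annotations is not described here. Assuming they are stored as pixel corner coordinates (VOC-style xmin/ymin/xmax/ymax), a conversion like the following minimal sketch would produce those lines. The function name `toDarknetLabel` is hypothetical.

```cpp
#include <iomanip>
#include <sstream>
#include <string>

// Hypothetical helper: converts one pixel-space box (xmin, ymin, xmax, ymax),
// assumed to come from a VOC-style annotation, into one line of a darknet
// label file: "<class_id> <x_center> <y_center> <width> <height>", with the
// four box values normalized to [0, 1] by the image width and height.
std::string toDarknetLabel(int classId, double xmin, double ymin,
                           double xmax, double ymax,
                           int imgWidth, int imgHeight) {
    const double xCenter = (xmin + xmax) / 2.0 / imgWidth;
    const double yCenter = (ymin + ymax) / 2.0 / imgHeight;
    const double width = (xmax - xmin) / imgWidth;
    const double height = (ymax - ymin) / imgHeight;

    std::ostringstream line;
    line << classId << std::fixed << std::setprecision(6)
         << ' ' << xCenter << ' ' << yCenter
         << ' ' << width << ' ' << height;
    return line.str();
}
```

Darknet class ids start at 0, so for a single "fire" class the id is 0. For example, `toDarknetLabel(0, 100, 50, 300, 250, 400, 400)` returns `"0 0.500000 0.375000 0.500000 0.500000"`.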

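3. Deploying NCNN on the Raspberry Pi

NCNN provides official build instructions for the Raspberry Pi, and following them the build succeeds. First install the dependencies: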

```shell
sudo apt-get install git cmake
sudo apt-get install -y gfortran
sudo apt-get install -y libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler
sudo apt-get install --no-install-recommends libboost-all-dev
sudo apt-get install -y libgflags-dev libgoogle-glog-dev liblmdb-dev libatlas-base-dev
```

Then download NCNN:

```shell
git clone https://github.com/Tencent/ncnn.git
cd ncnn
```

Edit `CMakeLists.txt` and add the examples, benchmark and tools subdirectories:

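```cmake
add_subdirectory(examples)
add_subdirectory(benchmark)
add_subdirectory(tools)
```

Then build following the official documentation. The official pi3 toolchain file works as-is on Raspbian for the Raspberry Pi 4, and recent versions of NCNN enable OpenMP automatically: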

```shell
cd <ncnn-root-dir>
mkdir -p build
cd build
cmake -DCMAKE_TOOLCHAIN_FILE=../toolchains/pi3.toolchain.cmake -DPI3=ON ..
make -j4
```

4. Model conversion

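```shell
cd <ncnn-root-dir>
cd build
cd tools/darknet
# the trailing 1 is the merge_output option (merge all YOLO output layers into one)
./darknet2ncnn mobilenetV2-yolov3.cfg mobilenetV2-yolov3.weights mobilenetV2-yolov3.param mobilenetV2-yolov3.bin 1
```

5. Running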
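Before running the detector, a quick sanity check is to confirm that darknet2ncnn produced a valid `.param` file. An NCNN text `.param` file begins with the magic number `7767517`, followed by a line holding the layer count and the blob count. The following minimal sketch reads only that header. The names `ParamHeader` and `readParamHeader` are hypothetical, and the sketch does not check the `.bin` weights file at all.

```cpp
#include <fstream>
#include <string>

// Header of an NCNN text .param file: magic number 7767517 on the first
// line, then "<layer_count> <blob_count>" on the second line.
struct ParamHeader {
    bool valid = false;
    int layerCount = 0;
    int blobCount = 0;
};

// Hypothetical helper: reads and validates only the header of a .param file.
ParamHeader readParamHeader(const std::string& path) {
    ParamHeader header;
    std::ifstream in(path);
    int magic = 0;
    if (!(in >> magic) || magic != 7767517) {
        return header;  // missing file, or not an NCNN text .param file
    }
    if (!(in >> header.layerCount >> header.blobCount)) {
        return header;  // truncated header
    }
    header.valid = header.layerCount > 0 && header.blobCount > 0;
    return header;
}
```

For the file generated above, `readParamHeader("mobilenetV2-yolov3.param").valid` should be `true`. A `false` result suggests the conversion failed or the wrong path was passed.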

```shell
cd <ncnn-root-dir>
cd build
cd examples
./mobilenetV2-yolov3
```

6. Selected code

```cpp
#include "net.h"
#include "platform.h"
```
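After inference, the detections still have to be mapped back onto the camera frame. NCNN's own yolov3 example reads each output row as `[label, score, x1, y1, x2, y2]`, with coordinates normalized to [0, 1]. Assuming this model's output follows the same layout, the following minimal sketch filters rows by confidence and converts them to pixel boxes. `Detection` and `decodeDetections` are hypothetical names, and the rows are passed as one flat vector to keep the example free of NCNN dependencies.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// One detected flame in pixel coordinates.
struct Detection {
    int label;
    float score;
    float x, y, width, height;
};

// Clamps a value to [0, upper].
static float clampToRange(float value, float upper) {
    return std::min(std::max(value, 0.0f), upper);
}

// Hypothetical post-processing helper. Assumes each detection is a row of six
// floats [label, score, x1, y1, x2, y2] with normalized coordinates, stored
// back to back in `rows`. Rows scoring below `minScore` are dropped; the rest
// are scaled to the image size and clamped to the image borders.
std::vector<Detection> decodeDetections(const std::vector<float>& rows,
                                        int imgWidth, int imgHeight,
                                        float minScore) {
    const float w = static_cast<float>(imgWidth);
    const float h = static_cast<float>(imgHeight);
    std::vector<Detection> result;
    for (std::size_t i = 0; i + 6 <= rows.size(); i += 6) {
        const float* v = &rows[i];
        if (v[1] < minScore) continue;  // drop low-confidence boxes
        const float x1 = clampToRange(v[2] * w, w);
        const float y1 = clampToRange(v[3] * h, h);
        const float x2 = clampToRange(v[4] * w, w);
        const float y2 = clampToRange(v[5] * h, h);
        result.push_back(Detection{static_cast<int>(v[0]), v[1],
                                   x1, y1, x2 - x1, y2 - y1});
    }
    return result;
}
```

Clamping keeps boxes that extend past the frame drawable. In a real program, the six values per row would be read from the output `ncnn::Mat` (for example with `row(i)`) instead of from a flat vector.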

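7. Results analysis

The current results are fairly satisfactory, but each frame still takes about 0.3 s to process, so detection is not yet real-time. The next goals are to reach real-time detection and to try other inference frameworks such as MNN and TNN.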
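At about 0.3 s per frame, the detector runs at roughly 1 / 0.3 ≈ 3.3 FPS. A 25 FPS video rate would need about 40 ms per frame. To compare NCNN with MNN or TNN fairly, it helps to time the same per-frame work in the same way for each framework. The following minimal sketch does this with `std::chrono`. `processFrame` is a hypothetical callback standing in for one full detection pass (preprocessing, inference and post-processing).

```cpp
#include <chrono>
#include <functional>

// Average wall-clock time, in seconds, of one call to `processFrame`,
// measured over `frames` consecutive calls. `processFrame` is a hypothetical
// stand-in for one complete detection pass.
double averageFrameSeconds(const std::function<void()>& processFrame, int frames) {
    if (frames <= 0) return 0.0;
    using Clock = std::chrono::steady_clock;  // monotonic, suited to timing
    const auto start = Clock::now();
    for (int i = 0; i < frames; ++i) {
        processFrame();
    }
    const std::chrono::duration<double> elapsed = Clock::now() - start;
    return elapsed.count() / frames;
}

// Frames per second implied by an average per-frame time.
double framesPerSecond(double secondsPerFrame) {
    return secondsPerFrame > 0.0 ? 1.0 / secondsPerFrame : 0.0;
}
```

Averaging over many frames smooths out jitter. The first inference is often slower than the rest, so it may be worth discarding a few warm-up frames before measuring.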