[2019 CS224n] Assignment 1

Part 1: Count-Based Word Vectors

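- Pitfalls when downloading data with NLTK

- Part 1: Count-Based Word Vectors

- Imports

- Reading and preparing the data

- Building the vocabulary

- Building the co-occurrence matrix

- Dimensionality reduction

- Visualization

- Analyzing the co-occurrence plot after dimensionality reduction

- Part 2 (to be updated)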

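Pitfalls when downloading data with NLTK

Reuters: the Reuters corpus (business and financial news). It contains 10,788 news documents totaling about 1.3 million words, classified into 90 topics and split into a "training" set and a "test" set.

1. Running the code below kept failing to connect: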

import nltk

nltk.download('reuters')

[nltk_data] Error loading reuters:

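I had used nltk.download() before without any problems.

2. I then tried the following method:

It popped up:

That still did not work. The outcome depends heavily on the network connection: when I tried again just now, it unexpectedly succeeded.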

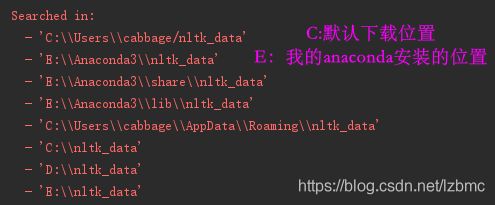

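3. Download from GitHub: nltk_data. This download is also slow and the page can take a long time to load. Be patient until the download dialog appears, then move the file into any one of the paths below.

Be sure to create a "corpora" folder first and put reuters.zip inside it. At first I placed the file directly under nltk_data and NLTK could not find it. Whether you need to unzip it depends on how you run the code: running in PyCharm worked for me without unzipping, but Jupyter Notebook raised an error until I unzipped it (No such file or directory: 'E:\nltk_data\corpora\reuters\test\14829').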
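To double-check where the manually downloaded file ended up, it can help to look in each directory NLTK searches for a `corpora/reuters.zip` or an unzipped `corpora/reuters` folder. The sketch below is only an illustration under assumptions: `locate_reuters` is a hypothetical helper (not part of NLTK), and the directories to search are passed in explicitly.

```python
import os


def locate_reuters(search_dirs):
    """Return the first path under search_dirs that holds the Reuters corpus.

    Looks for the zipped corpus (corpora/reuters.zip) or the unzipped
    folder (corpora/reuters). Returns None if neither exists.
    `locate_reuters` is a hypothetical helper, not an NLTK function.
    """
    for base in search_dirs:
        for name in ("reuters.zip", "reuters"):
            candidate = os.path.join(base, "corpora", name)
            if os.path.exists(candidate):
                return candidate
    return None


# Typical use (requires nltk to be installed):
#   import nltk
#   print(nltk.data.path)                  # directories NLTK searches
#   print(locate_reuters(nltk.data.path))  # None means the corpus was not found
```

If the helper returns `None` for every directory in `nltk.data.path`, the file is most likely sitting one level too high (directly under `nltk_data`), which matches the problem described above.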

Part 1: Count-Based Word Vectors

Imports

import sys

assert sys.version_info[0] == 3
assert sys.version_info[1] >= 5

# KeyedVectors maps entities (words, documents, images, ...) to vectors;
# each entity is identified by its string id.
from gensim.models import KeyedVectors
from gensim.test.utils import datapath
import pprint  # pretty-printer that makes output easier to read
import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = [10, 5]  # plt.rcParams sets global figure defaults such as size and resolution

import nltk

nltk.download('reuters')  # if this fails, download the corpus from GitHub as described above
from nltk.corpus import reuters  # the Reuters corpus

import numpy as np
import random
import scipy as sp
from sklearn.decomposition import TruncatedSVD
from sklearn.decomposition import PCA

START_TOKEN = '<START>'
END_TOKEN = '<END>'

np.random.seed(0)
random.seed(0)

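Reading and preparing the data

def read_corpus(category="crude"):
    """ Read files from the specified Reuter's category.
        Params:
            category (string): category name
        Return:
            list of lists, with words from each of the processed files
    """
    files = reuters.fileids(category)  # documents in the given category ("crude" by default)
    # Lowercase every document and add the start/end tokens at its beginning and end
    return [[START_TOKEN] + [w.lower() for w in list(reuters.words(f))] + [END_TOKEN] for f in files]

# pprint pretty-prints its argument:
# pprint.pprint(object, stream=None, indent=1, width=80, depth=None, *, compact=False)
# width: the desired maximum line width, 80 characters by default. Note: a single object longer than width is not split across lines; it simply exceeds the limit.
# compact: False by default. If False, each item of a sequence that does not fit within width is printed on its own line. If True, as many items as fit within width are placed on each line.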

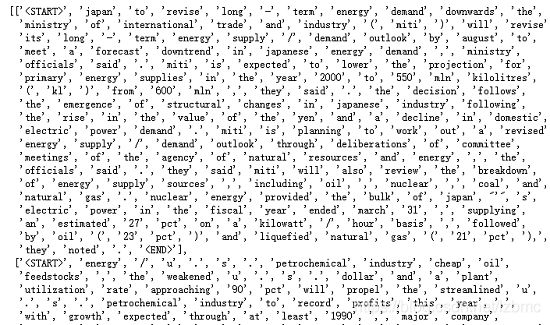

reuters_corpus = read_corpus()

pprint.pprint(reuters_corpus[:3], compact=True, width=100)
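The effect of `compact` is easiest to see on a list that is too long for one line. The following is a small sketch using made-up data (a list of 30 integers) and `pprint.pformat`, which returns the formatted string instead of printing it:

```python
import pprint

numbers = list(range(30))

# compact=False (the default): the list does not fit in 40 columns,
# so every element is placed on its own line.
expanded = pprint.pformat(numbers, width=40, compact=False)

# compact=True: as many elements as fit within 40 columns share a line.
compacted = pprint.pformat(numbers, width=40, compact=True)

print(expanded.count("\n") + 1)   # number of lines without compact
print(compacted.count("\n") + 1)  # number of lines with compact
```

For a corpus made of long token lists, `compact=True` keeps the printed preview much shorter, which is why it is used in the call above.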

Building the vocabulary

# Collect the distinct words that occur in the corpus and sort them.
def distinct_words(corpus):
    """ Determine a list of distinct words for the corpus.
        Params:
            corpus (list of list of strings): corpus of documents
        Return:
            corpus_words (list of strings): list of distinct words across the corpus, sorted (using python 'sorted' function)
            num_corpus_words (integer): number of distinct words across the corpus
    """
    corpus_words = []
    num_corpus_words = -1

    # ------------------
    # Write your implementation here.
    flattened_list = [word for every_list in corpus for word in every_list]  # flatten into a one-dimensional list
    corpus_words = sorted(set(flattened_list))  # set() removes duplicates, sorted() orders the words
    num_corpus_words = len(corpus_words)  # vocabulary size
    # ------------------

    return corpus_words, num_corpus_words

# Test
def test_one():
    # Define toy corpus
    test_corpus = ["START All that glitters isn't gold END".split(" "),
                   "START All's well that ends well END".split(" ")]
    test_corpus_words, num_corpus_words = distinct_words(test_corpus)

    # Correct answers
    ans_test_corpus_words = sorted(
        list(set(["START", "All", "ends", "that", "gold", "All's", "glitters", "isn't", "well", "END"])))
    ans_num_corpus_words = len(ans_test_corpus_words)

    # Test correct number of words
    assert (num_corpus_words == ans_num_corpus_words), "Incorrect number of distinct words. Correct: {}. Yours: {}" \
        .format(ans_num_corpus_words, num_corpus_words)

    # Test correct words
    assert (test_corpus_words == ans_test_corpus_words), "Incorrect corpus_words.\nCorrect: {}\nYours: {}".format(
        str(ans_test_corpus_words), str(test_corpus_words))

    # Print Success
    print("-" * 80)
    print("Passed All Tests!")
    print("-" * 80)
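The flatten, deduplicate, sort steps can be tried on the toy corpus from the test on their own. This is a minimal standalone sketch (the variable names are just for illustration); note that Python sorts strings by code point, so capitalized words come before lowercase ones:

```python
corpus = [
    "START All that glitters isn't gold END".split(" "),
    "START All's well that ends well END".split(" "),
]

# Flatten the documents, drop duplicates with a set, then sort.
vocabulary = sorted({word for document in corpus for word in document})

print(vocabulary)
# ['All', "All's", 'END', 'START', 'ends', 'glitters', 'gold', "isn't", 'that', 'well']
print(len(vocabulary))  # 10
```

This ordering is what determines the row and column positions of each word in the co-occurrence matrix built in the next step.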

Building the co-occurrence matrix

def compute_co_occurrence_matrix(corpus, window_size=4):
    """ Compute co-occurrence matrix for the given corpus and window_size (default of 4).

        Note: Each word in a document should be at the center of a window. Words near edges will have a smaller
              number of co-occurring words.

              For example, if we take the document "START All that glitters is not gold END" with window size of 4,
              "All" will co-occur with "START", "that", "glitters", "is", and "not".

        Params:
            corpus (list of list of strings): corpus of documents
            window_size (int): size of context window
        Return:
            M (numpy matrix of shape (number of corpus words, number of corpus words)):
                Co-occurrence matrix of word counts.
                The ordering of the words in the rows/columns should be the same as the ordering of the words given by the distinct_words function.
            word2Ind (dict): dictionary that maps word to index (i.e. row/column number) for matrix M.
    """
    words, num_words = distinct_words(corpus)
    M = None
    word2Ind = {}

    # ------------------
    # Write your implementation here.
    word2Ind = {k: v for (k, v) in zip(words, range(num_words))}
    # print(word2Ind)
    M = np.zeros((num_words, num_words))

    # Careful: every word has two indices, one in the vocabulary and one in the current document. Do not mix them up.
    for every_document in corpus:
        for doc_index, word in enumerate(every_document):  # each word of the document together with its position
            # print(doc_index, word)
            dict_index = word2Ind[word]  # index of the word in the vocabulary
            for j in range(doc_index - window_size, doc_index + window_size + 1):  # positions within window_size of the center word
                if 0 <= j < len(every_document) and j != doc_index:  # stay inside [0, len(doc)) and skip the center word itself
                    outer_index = word2Ind[every_document[j]]
                    M[dict_index, outer_index] += 1
    # ------------------

    # Instructor's solution
    # for document in corpus:
    #     len_doc = len(document)
    #     for index in range(0, len_doc):
    #         center_index = word2Ind[document[index]]
    #         for i in range(index - window_size, index + window_size + 1):
    #             if i >= 0 and i < len_doc and i != index:
    #                 outer_index = word2Ind[document[i]]
    #                 # print('Incrementing for',document[index],document[i])
    #                 M[center_index, outer_index] += 1.0

    return M, word2Ind

def test_two():
    # Define toy corpus and get student's co-occurrence matrix
    test_corpus = ["START All that glitters isn't gold END".split(" "),
                   "START All's well that ends well END".split(" ")]
    M_test, word2Ind_test = compute_co_occurrence_matrix(test_corpus, window_size=1)

    # Correct M and word2Ind
    M_test_ans = np.array(
        [[0., 0., 0., 1., 0., 0., 0., 0., 1., 0., ],
         [0., 0., 0., 1., 0., 0., 0., 0., 0., 1., ],
         [0., 0., 0., 0., 0., 0., 1., 0., 0., 1., ],
         [1., 1., 0., 0., 0., 0., 0., 0., 0., 0., ],
         [0., 0., 0., 0., 0., 0., 0., 0., 1., 1., ],
         [0., 0., 0., 0., 0., 0., 0., 1., 1., 0., ],
         [0., 0., 1., 0., 0., 0., 0., 1., 0., 0., ],
         [0., 0., 0., 0., 0., 1., 1., 0., 0., 0., ],
         [1., 0., 0., 0., 1., 1., 0., 0., 0., 1., ],
         [0., 1., 1., 0., 1., 0., 0., 0., 1., 0., ]]
    )
    word2Ind_ans = {'All': 0, "All's": 1, 'END': 2, 'START': 3, 'ends': 4, 'glitters': 5, 'gold': 6, "isn't": 7,
                    'that': 8, 'well': 9}

    # Test correct word2Ind
    assert (word2Ind_ans == word2Ind_test), "Your word2Ind is incorrect:\nCorrect: {}\nYours: {}" \
        .format(word2Ind_ans, word2Ind_test)

    # Test correct M shape (expected shape first, to match the order in the message)
    assert (M_test.shape == M_test_ans.shape), "M matrix has incorrect shape.\nCorrect: {}\nYours: {}" \
        .format(M_test_ans.shape, M_test.shape)

    # Test correct M values
    for w1 in word2Ind_ans.keys():
        idx1 = word2Ind_ans[w1]
        for w2 in word2Ind_ans.keys():
            idx2 = word2Ind_ans[w2]
            student = M_test[idx1, idx2]
            correct = M_test_ans[idx1, idx2]
            if student != correct:
                print("Correct M:")
                print(M_test_ans)
                print("Your M: ")
                print(M_test)
                raise AssertionError(
                    "Incorrect count at index ({}, {})=({}, {}) in matrix M. Yours has {} but should have {}."
                    .format(idx1, idx2, w1, w2, student, correct))

    # Print Success
    print("-" * 80)
    print("Passed All Tests!")
    print("-" * 80)

# test_two()
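To make the window logic concrete, here is a small standalone sketch that reproduces the example from the docstring. `context_words` is a hypothetical helper written for this illustration only; it uses slicing instead of the index loop but selects the same positions.

```python
from collections import Counter


def context_words(document, center, window_size):
    """Return the words within window_size positions of document[center]."""
    left = document[max(0, center - window_size):center]
    right = document[center + 1:center + 1 + window_size]
    return left + right


document = "START All that glitters is not gold END".split(" ")
print(context_words(document, 1, 4))
# ['START', 'that', 'glitters', 'is', 'not']

# Count every (center, neighbor) pair. Because the window is symmetric,
# the count for (a, b) always equals the count for (b, a).
counts = Counter()
for i, word in enumerate(document):
    for neighbor in context_words(document, i, 4):
        counts[(word, neighbor)] += 1

symmetric = all(counts[(b, a)] == n for (a, b), n in counts.items())
print(symmetric)  # True
```

The symmetry check is a cheap sanity test for the matrix as well: with a symmetric window, M should equal its transpose.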

Dimensionality reduction

def reduce_to_k_dim(M, k=2):
    """ Reduce a co-occurrence count matrix of dimensionality (num_corpus_words, num_corpus_words)
        to a matrix of dimensionality (num_corpus_words, k) using the following SVD function from Scikit-Learn:
            - http://scikit-learn.org/stable/modules/generated/sklearn.decomposition.TruncatedSVD.html

        Params:
            M (numpy matrix of shape (number of corpus words, number of corpus words)): co-occurrence matrix of word counts
            k (int): embedding size of each word after dimension reduction
        Return:
            M_reduced (numpy matrix of shape (number of corpus words, k)): matrix of k-dimensional word embeddings.
                    In terms of the SVD from math class, this actually returns U * S
    """
    n_iters = 10  # Use this parameter in your call to `TruncatedSVD`
    M_reduced = None
    print("Running Truncated SVD over %i words..." % (M.shape[0]))

    # ------------------
    # Write your implementation here.
    svd = TruncatedSVD(n_components=k, n_iter=n_iters, random_state=0)
    # svd.fit(M)  # fit the model on M
    # M_reduced = svd.transform(M)  # project M onto the k components
    M_reduced = svd.fit_transform(M)  # equivalent to the two lines above
    # print(M_reduced)  # matrix with k columns
    # ------------------

    print("Done.")
    return M_reduced

def test_three():
    # ---------------------
    # Run this sanity check
    # Note that this is not an exhaustive check for correctness
    # In fact we only check that your M_reduced has the right dimensions.
    # ---------------------

    # Define toy corpus and run student code
    test_corpus = ["START All that glitters isn't gold END".split(" "),
                   "START All's well that ends well END".split(" ")]
    M_test, word2Ind_test = compute_co_occurrence_matrix(test_corpus, window_size=1)
    M_test_reduced = reduce_to_k_dim(M_test, k=2)

    # Test proper dimensions
    assert (M_test_reduced.shape[0] == 10), "M_reduced has {} rows; should have {}".format(M_test_reduced.shape[0], 10)
    assert (M_test_reduced.shape[1] == 2), "M_reduced has {} columns; should have {}".format(M_test_reduced.shape[1], 2)

    # Print Success
    print("-" * 80)
    print("Passed All Tests!")
    print("-" * 80)

# test_three()
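The docstring says the reduced matrix is "U * S" in terms of the SVD. A rough way to see what that means is to compute a full SVD with NumPy on a tiny matrix and keep the top k components by hand. This sketch uses `np.linalg.svd` on made-up values instead of `TruncatedSVD`, so it only illustrates the math; `TruncatedSVD` uses a randomized solver by default, and its output may differ in sign or by small numerical error.

```python
import numpy as np

# Small symmetric matrix standing in for a co-occurrence matrix (made-up values).
M = np.array([[0., 2., 1.],
              [2., 0., 3.],
              [1., 3., 0.]])
k = 2

# Full SVD: M = U @ diag(S) @ Vt, with singular values in descending order.
U, S, Vt = np.linalg.svd(M)

# Keep the k largest singular values: each row is a k-dimensional embedding.
M_reduced = U[:, :k] * S[:k]

# The same embedding results from projecting M onto the top-k right singular vectors.
projected = M @ Vt[:k].T

print(M_reduced.shape)                     # (3, 2)
print(np.allclose(M_reduced, projected))   # True
```

In other words, each word's k-dimensional vector is its row of M expressed in the coordinates of the k strongest directions of the matrix.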

Visualization

# Visualize the embeddings after dimensionality reduction
def plot_embeddings(M_reduced, word2Ind, words):
    """
        Plot in a scatterplot the embeddings of the words specified in the list "words".
        NOTE: do not plot all the words listed in M_reduced / word2Ind.
        Include a label next to each point.

        Params:
            M_reduced (numpy matrix of shape (number of unique words in the corpus , k)): matrix of k-dimensional word embeddings
            word2Ind (dict): dictionary that maps word to indices for matrix M
            words (list of strings): words whose embeddings we want to visualize
    """
    # ------------------
    # Write your implementation here.
    # We need the x, y coordinates of every word.
    # The words come from `words`; the coordinates are the rows [x, y] of M_reduced.
    for word in words:
        x = M_reduced[word2Ind[word]][0]
        y = M_reduced[word2Ind[word]][1]
        plt.scatter(x, y, marker='x', color='red')  # marker: the marker style, 'o' by default
        # plt.text() adds a text annotation to the figure
        plt.text(x+0.0002, y+0.0002, word, fontsize=9)  # label offset by 0.0002 from (x, y); word is the label text, fontsize its size
    plt.show()
    # ------------------

def test_four():
    # The plot produced should look like the "test solution plot" depicted below.
    # ---------------------
    print("-" * 80)
    print("Outputted Plot:")

    M_reduced_plot_test = np.array([[1, 1], [-1, -1], [1, -1], [-1, 1], [0, 0]])
    word2Ind_plot_test = {'test1': 0, 'test2': 1, 'test3': 2, 'test4': 3, 'test5': 4}
    words = ['test1', 'test2', 'test3', 'test4', 'test5']
    plot_embeddings(M_reduced_plot_test, word2Ind_plot_test, words)

    print("-" * 80)

# test_four()

Analyzing the co-occurrence plot after dimensionality reduction

def plot_analysis():
    # -----------------------------
    # Run This Cell to Produce Your Plot
    # ------------------------------
    reuters_corpus = read_corpus()  # list of token lists, one per document
    M_co_occurrence, word2Ind_co_occurrence = compute_co_occurrence_matrix(reuters_corpus)  # co-occurrence matrix and word-to-index dict
    M_reduced_co_occurrence = reduce_to_k_dim(M_co_occurrence, k=2)  # reduce to 2 dimensions

    # Rescale (normalize) the rows (axis=1) to make them each of unit-length
    M_lengths = np.linalg.norm(M_reduced_co_occurrence, axis=1)  # one length per word: 8185 words give a 1-D array of 8185 values
    # print(M_reduced_co_occurrence.shape)  # (8185, 2)
    # print(M_lengths[:, np.newaxis].shape)  # column vector of shape (8185, 1)
    # NumPy broadcasting: https://jakevdp.github.io/PythonDataScienceHandbook/02.05-computation-on-arrays-broadcasting.html
    M_normalized = M_reduced_co_occurrence / M_lengths[:, np.newaxis]  # broadcasting

    words = ['barrels', 'bpd', 'ecuador', 'energy', 'industry', 'kuwait', 'oil', 'output', 'petroleum', 'venezuela']
    plot_embeddings(M_normalized, word2Ind_co_occurrence, words)

plot_analysis()
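The row normalization relies on broadcasting a column of lengths against the embedding matrix. Here is a tiny sketch with made-up 2-D embeddings showing the shapes involved:

```python
import numpy as np

# Three made-up 2-D embeddings, one per row.
embeddings = np.array([[3.0, 4.0],
                       [0.0, 2.0],
                       [1.0, 1.0]])

lengths = np.linalg.norm(embeddings, axis=1)      # shape (3,): one length per row
normalized = embeddings / lengths[:, np.newaxis]  # (3, 2) / (3, 1) broadcasts across the columns

print(lengths.shape, lengths[:, np.newaxis].shape)  # (3,) (3, 1)
print(normalized[0])                                # [0.6 0.8]
print(np.linalg.norm(normalized, axis=1))           # every row now has length 1
```

Without `np.newaxis`, the shapes (3, 2) and (3,) do not line up here and NumPy raises a broadcasting error. One caveat, stated as an assumption: a word whose reduced vector is all zeros would cause a division by zero, so a small guard may be needed for other corpora.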