Hive Reading Notes

文章目录

-

- hive cli

-

- hive执行日志的位置

- 指定主动使用本地模式

- 指定数据仓库目录

- hive查看和使用自定义及系统属性

- 数据类型

-

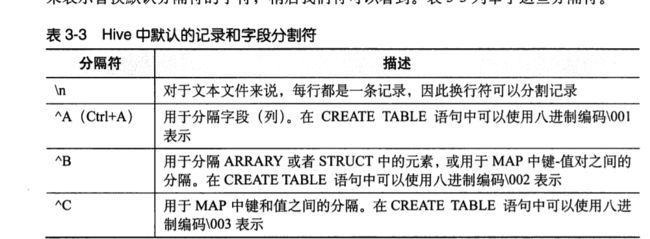

- 默认字段分隔符

- sql

-

- database

- table

-

- 指定分隔符

- 分区表

- 修改表

- 数据操作

-

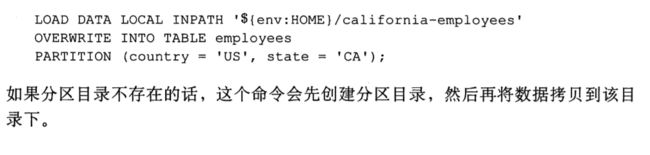

- 装载数据

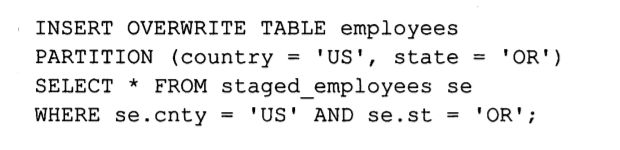

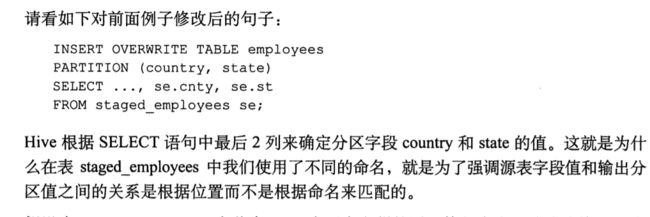

- 静态分区和动态分区

- 从查询结果创建表

- 导出数据

- 数据查询

-

- 查询是用正则表达式rlike

- 排序order,sort的区别

- cast 强制转换

- 数据抽样,分桶

- 视图

-

-

- 区别

-

- 索引

- 调优

-

- explain

- join优化

- 本地模式

- 并行优化

- 严格模式

- 调整mapper和reducer的数量

- JVM重用

- 索引

- 分区

- 推测执行

- 单个MR中多个group by

- 虚拟列

- 压缩

-

- 常用压缩格式

- 配置

- 测试

- 开发

-

- 日志

- debug

- 函数

-

- 内置函数

- 查看函数

- 调用函数

- 表生成函数UDTF

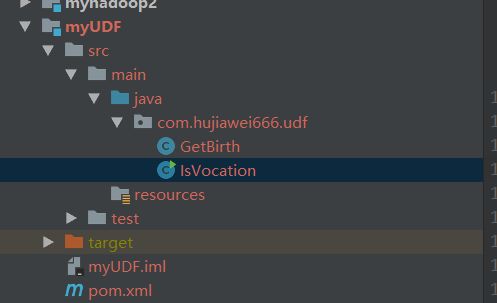

- UDF

-

- 标准类型

- 复杂类型

- UDAF

- UDTF

- 宏

- stream

-

- 使用内置的cat,cut,sed转换

- 使用自定义脚本

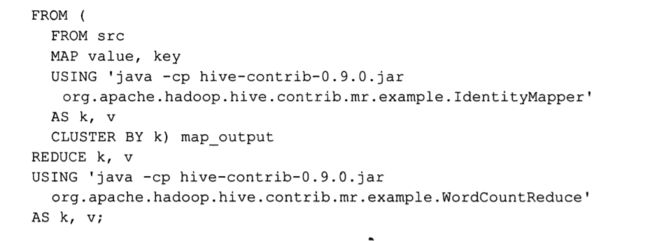

- wordcount

- 自定义记录格式

-

- 文本格式

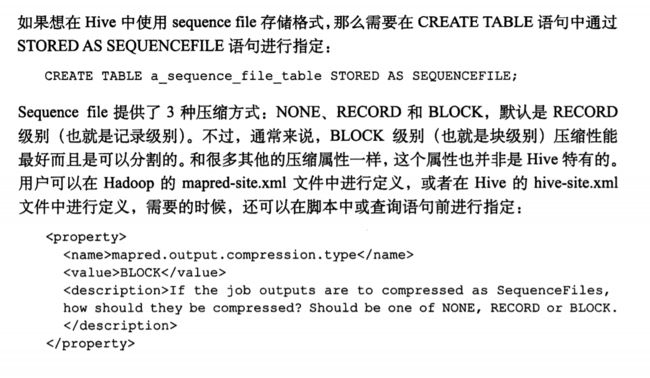

- sequenceFile

-

- RCFile

- 自定义输入格式

- 使用正则表达式过滤日志

- xml

- json

- 附录1 内置函数大全

-

- 数学函数

- 集合函数

- 类型转换函数

- 日期函数

- 条件函数

- 字符函数

- 聚合函数

- 表生成函数

- 附录3 链接

- 附录4 日志样例

Hive CLI

Location of Hive execution logs

Log configuration file: $HIVE_HOME/conf/hive-log4j2.properties

property.hive.log.dir = ${sys:java.io.tmpdir}/${sys:user.name}

The value of the variable can be queried from the Hive CLI:

hive> set system:java.io.tmpdir;

system:java.io.tmpdir=/tmp

-- default log location for the root user

/tmp/root/hive.log

Explicitly enabling local mode

Specifying the warehouse directory

Viewing and using custom and system properties

-- prints all properties

hive>set;

hive>set env:HOME;

hive> set hivevar:col_name=name;

hive> set col_name;

hive> create table test3(id int,${env:LOGNAME} string);

hive> create table test2(id int,${hivevar:col_name} string);

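-- add a configuration property at startup

-- show the current database in the prompt

hive --hiveconf hive.cli.print.current.db=true

hive (default)> set hiveconf:hive.cli.print.current.db;

hiveconf:hive.cli.print.current.db=true

-- turn the display off again

hive (default)> set hiveconf:hive.cli.print.current.db=false;

hive>

-- show system properties; unlike env variables, system properties can be both read and written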

hive> set system:user.name;

system:user.name=root

hive> set system:myname=hujiawei;

hive> set system:myname;

system:myname=hujiawei

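# run a single command; -S is silent mode, which suppresses messages such as OK

hive -S -e 'show tables;'

hive -S -e 'set'|grep warehouse;

# create the src table and load data into it

create table src(s String);

echo "one row">/tmp/myfile

hive -e "LOAD DATA LOCAL INPATH '/tmp/myfile' into table src"

# run a SQL file

hive -f test.hql

# run a file from inside the Hive CLI

source /root/test/test.hql;

# the Hive CLI loads $HOME/.hiverc automatically at startup; create the file if it does not exist

set hive.cli.print.current.db=true;

set hive.exec.mode.local.auto=true;

# hive -i loads the specified file at startup

All of Hive's default properties are listed explicitly in

/opt/install/hadoop/apache-hive-2.3.6-bin/conf/hive-default.xml.template

- To run a shell command inside Hive: !pwd; to run a Hadoop command: dfs -ls /

- Hive scripts use the same comment syntax as SQL: --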

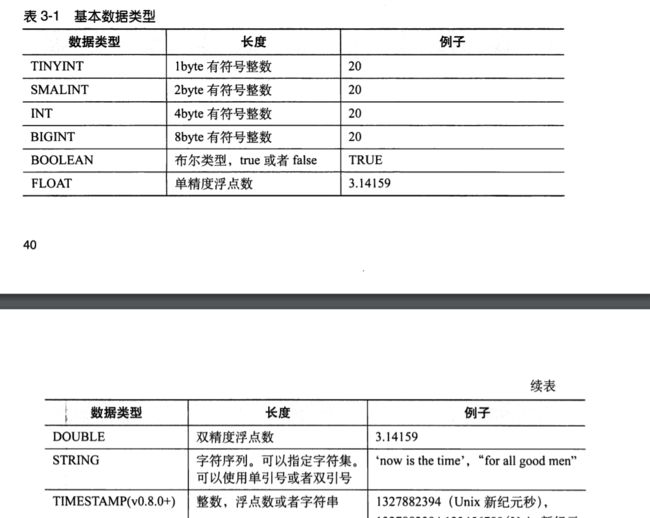

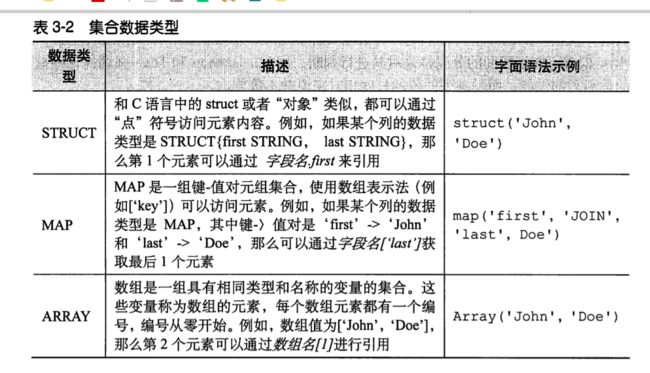

Data types

create table employes(

name string,

salary float,

subordinates array<string>,

deductions map<String,float>,

address struct<street:string,city:string,state:string,zip:INT>

);

Default field delimiters

create table employes(

name string,

salary float,

subordinates array<string>,

deductions map<String,float>,

address struct<street:string,city:string,state:string,zip:INT>

)

row format delimited

fields terminated by '\001'

collection items terminated by '\002'

map keys terminated by '\003'

lines terminated by '\n'

stored as textfile;

SQL

database

show databases like 't*';

create database test;

drop database test;

desc database test;

-- in HDFS a Hive database is a directory whose name ends in .db, and each table is a directory named after the table; the location can be set at creation time

hive> create database test location '/test/test.db';

dfs -ls -R /test

-- drop a database that still contains tables

drop database test cascade;

-- add a description to a database

create database test comment 'this is a test db';

-- add database properties

create database test comment 'this is a test db' with dbproperties('creator'='hujiawie','date'='2019年12月10日');

-- view the properties

desc database extended test;

-- modify database properties; properties can be added or changed but not removed

alter database test set dbproperties('creators'='laohu');

table

create table employes(

name string,

salary float,

subordinates array<string>,

deductions map<String,float>,

address struct<street:string,city:string,state:string,zip:INT>

) location '/test/employes';

-- copy a table's schema

hive> create table employees like employes;

hive> show tables in mydb;

Specifying delimiters

hive> create table t1(

> id int

> ,name string

> ,hobby array<string>

> ,add map<String,string>

> )

> partitioned by (pt_d string)

> row format delimited

> fields terminated by ','

> collection items terminated by '-'

> map keys terminated by ':'

> ;
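To make the three delimiter clauses concrete, here is a minimal Java sketch that splits one text row the way the DDL above describes: ',' between fields, '-' between collection items (including map entries), and ':' between a map key and its value. The class `DelimitedRowSketch` and its `Row` type are hypothetical helpers written for illustration only; they are not part of Hive, and Hive's own SerDe handles escaping and nulls that this sketch ignores.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper (not part of Hive) that models how a t1 text row is split. */
public class DelimitedRowSketch {

    /** One parsed row: id, name, hobby array and add map. */
    public static final class Row {
        public final int id;
        public final String name;
        public final List<String> hobby;
        public final Map<String, String> add;

        Row(int id, String name, List<String> hobby, Map<String, String> add) {
            this.id = id;
            this.name = name;
            this.hobby = hobby;
            this.add = add;
        }
    }

    public static Row parse(String line) {
        // fields terminated by ','
        String[] fields = line.split(",", -1);
        int id = Integer.parseInt(fields[0]);
        String name = fields[1];

        // collection items terminated by '-'
        List<String> hobby = new ArrayList<>();
        for (String item : fields[2].split("-")) {
            hobby.add(item);
        }

        // map entries are separated by the collection delimiter;
        // inside an entry the key and value are separated by ':'
        Map<String, String> add = new LinkedHashMap<>();
        for (String entry : fields[3].split("-")) {
            String[] kv = entry.split(":", 2);
            add.put(kv[0], kv.length > 1 ? kv[1] : null);
        }
        return new Row(id, name, hobby, add);
    }

    public static void main(String[] args) {
        Row r = parse("1,xiaoming,book-TV-code,beijing:chaoyang-shagnhai:pudong");
        System.out.println(r.id + " " + r.name + " " + r.hobby + " " + r.add);
    }
}
```

The input string in `main` has the same shape as the sample rows used in these notes; a row with fewer than four fields would make this sketch throw, whereas Hive would fill the missing columns with NULL.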

Partitioned tables

hive> create table t1(

> id int

> ,name string

> ,hobby array<string>

> ,add map<String,string>

> )

> partitioned by (pt_d string)

> row format delimited

> fields terminated by ','

> collection items terminated by '-'

> map keys terminated by ':'

> ;

-- load data

1,xiaoming,book-TV-code,beijing:chaoyang-shagnhai:pudong

2,lilei,book-code,nanjing:jiangning-taiwan:taibei

3,lihua,music-book,heilongjiang:haerbin

load data local inpath '/root/test/myfile' overwrite into table t1 partition ( pt_d = '201701');

-- load data into another partition

1 xiaoming ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 000000

2 lilei ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 000000

3 lihua ["music","book"] {"heilongjiang":"haerbin"} 000000

1 xiaoming ["book","TV","code"] {"beijing":"chaoyang","shagnhai":"pudong"} 201701

2 lilei ["book","code"] {"nanjing":"jiangning","taiwan":"taibei"} 201701

3 lihua ["music","book"] {"heilongjiang":"haerbin"} 201701

load data local inpath '/root/test/myfile2' overwrite into table t1 partition ( pt_d = '000000');

-- inspect the directories and files in HDFS

hive> dfs -ls -R /user/hive/warehouse/mydb.db;

drwxr-xr-x - root supergroup 0 2019-12-11 10:56 /user/hive/warehouse/mydb.db/employes

drwxr-xr-x - root supergroup 0 2019-12-11 11:04 /user/hive/warehouse/mydb.db/t1

drwxr-xr-x - root supergroup 0 2019-12-11 11:04 /user/hive/warehouse/mydb.db/t1/pt_d=000000

-rwxr-xr-x 1 root supergroup 474 2019-12-11 11:04 /user/hive/warehouse/mydb.db/t1/pt_d=000000/myfile2

drwxr-xr-x - root supergroup 0 2019-12-11 11:02 /user/hive/warehouse/mydb.db/t1/pt_d=201701

-rwxr-xr-x 1 root supergroup 147 2019-12-11 11:02 /user/hive/warehouse/mydb.db/t1/pt_d=201701/myfile

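-- adding a partition creates the corresponding directory

hive> alter table t1 add partition(pt_d ='3333');

-- dropping a partition deletes its files (for an external table the files are kept, and the partition can be restored with msck repair table table_name)

alter table t1 drop partition (pt_d = '201701');

-- note that a partition is really just another column; Hive uses it like an index and stores each value in its own directory, which improves performance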

hive> desc extended t1;

id int

name string

hobby array<string>

add map<string,string>

pt_d string

# Partition Information

# col_name data_type comment

pt_d string

# list the partitions

hive> show partitions t1;

pt_d=000000

pt_d=3333

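Altering tables

-- rename a table

hive> alter table employes rename to employees;

-- change a partition's location (this had no effect in this test)

hive> alter table t1 partition(pt_d=3333) set location "hdfs://localhost:9000/user/hive/warehouse/mydb.db/t1/pt_d=4444";

-- change a column: rename it or move it; "after <column>" places it after that column, and "first" moves it to the first position

-- reordering columns requires the columns involved to have the same type

hive> create table src (c1 string,c2 string);

hive> alter table src change column c1 c3 string comment 'test' after c2;

-- add a column

hive> alter table src add columns(c4 string comment 'column4');

-- drop columns by replacing the whole column list

hive> alter table src replace columns(cl1 string,cl2 string);

-- set table properties

hive> alter table src set tblproperties('name'='hujiawei');

-- view table properties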

hive> show tblproperties src;

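Data manipulation

Loading data

- local copies the file from the local filesystem; without local the file is moved within HDFS (a move cannot cross clusters)

- overwrite replaces the table's existing data; without it the loaded data is appended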

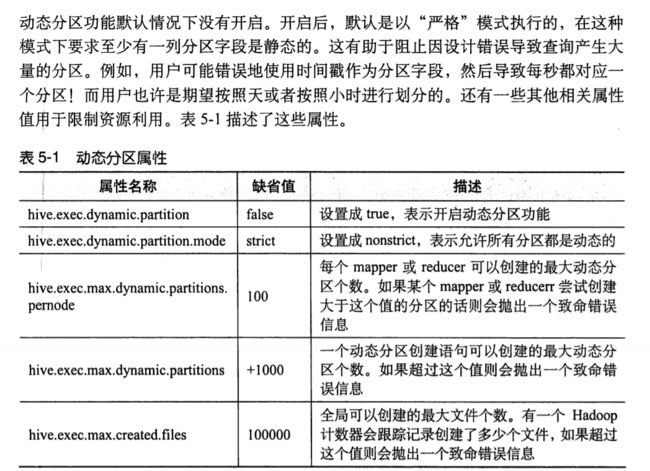

Static and dynamic partitions

- Static partition, method 1:

- Static partition, method 2:

- Dynamic partition

Creating a table from query results

create table test as select c1,c2 from src;

-- or create an empty table with the same schema using like

hive> create table test2 like test;

Note: when a table is created with create table ... as select and src was itself loaded from an external file, the execution log shows data files being moved into place:

exec.FileSinkOperator: Moving tmp dir: hdfs://localhost:9000/user/hive/warehouse/src/.hive-staging_hive_2019-12-10_16-11-45_214_2063272320733291437-1/_tmp.-ext-10002 to: hdfs://localhost:9000/user/hive/warehouse/src/.hive-staging_hive_2019-12-10_16-11-45_214_2063272320733291437-1/-ext-10002

Exporting data

-- 1: insert overwrite local directory

hive> from test t

> insert overwrite local directory '/root/test/'

> select * ;

hive> ! ls /root/test;

000000_0

hive> ! cat /root/test/000000_0;

20191212

20180112

20190212

20190312

20190712

--2

hive> from test t

> insert overwrite local directory '/root/test/'

> select * ;

-- 3: copy the files directly out of HDFS

# copy to the local filesystem

hadoop fs -get 'hdfs://localhost:9000/user/hive/warehouse/test/data' .

# copy to another directory in HDFS

hadoop fs -cp 'hdfs://localhost:9000/user/hive/warehouse/test/data' '/test/'

Querying data

Regular-expression matching with rlike

-- Hive uses Java regular expressions

select * from src a where a.s rlike '^a.*';
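Because rlike is backed by Java regular expressions, its behavior can be tried out in plain Java. The sketch below is a model, not Hive code: `RlikeSketch` is a hypothetical class, and it assumes rlike succeeds when the pattern is found anywhere in the value (`Matcher.find()`), which is why the query above anchors the pattern with `^`. Hive returns NULL when either argument is NULL; the sketch represents that as false.

```java
import java.util.regex.Pattern;

/** Hypothetical model of "value rlike regex" using java.util.regex. */
public class RlikeSketch {

    public static boolean rlike(String value, String regex) {
        if (value == null || regex == null) {
            return false; // Hive yields NULL here; modeled as false in this sketch
        }
        // find() succeeds if any part of the value matches the pattern
        return Pattern.compile(regex).matcher(value).find();
    }

    public static void main(String[] args) {
        System.out.println(rlike("apple", "^a.*"));
        System.out.println(rlike("banana", "^a.*"));
        System.out.println(rlike("banana", "an"));
    }
}
```

The third call shows the practical consequence of substring matching: an unanchored pattern such as `an` matches `banana` even though it does not cover the whole string.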

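order by vs. sort by

-- order by behaves like Oracle's order by: it is a global sort, which is slow on large data

select * from test a order by a.id1;

-- sort by sorts the output of each reducer separately, so the combined output of several reducers is not necessarily in order

hive> select * from test a sort by a.id1;

-- distribute by sends all rows with the same value of a column to the same reducer, which is useful when sorting on several columns

hive> select * from test a distribute by a.id1 sort by a.id1,a.id2;

-- cluster by is shorthand for distribute by plus sort by on the same column

hive> select * from test a cluster by a.id1;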
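The difference between a per-reducer sort and a global sort is easy to see in a small simulation. The following Java sketch is only a model of distribute by plus sort by: `SortBySketch` is a hypothetical class, and hashing an int to itself and taking it modulo the reducer count is an assumption made for illustration, not a statement about Hive's partitioner.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical model of "distribute by x sort by x" over integer keys. */
public class SortBySketch {

    /** Sends each value to a reducer by hash, then sorts inside each reducer. */
    public static List<List<Integer>> distributeAndSort(List<Integer> values, int reducers) {
        List<List<Integer>> parts = new ArrayList<>();
        for (int i = 0; i < reducers; i++) {
            parts.add(new ArrayList<>());
        }
        for (int v : values) {
            // assumed partitioning rule: non-negative hash modulo reducer count
            int r = (Integer.hashCode(v) & Integer.MAX_VALUE) % reducers;
            parts.get(r).add(v);
        }
        for (List<Integer> p : parts) {
            Collections.sort(p); // each reducer sorts only its own rows
        }
        return parts;
    }

    /** Concatenates the reducer outputs in reducer order. */
    public static List<Integer> concat(List<List<Integer>> parts) {
        List<Integer> all = new ArrayList<>();
        for (List<Integer> p : parts) {
            all.addAll(p);
        }
        return all;
    }
}
```

With the values 5, 2, 4, 1, 3 and two reducers, the reducers end up holding [2, 4] and [1, 3, 5]: each list is sorted, equal keys always land in the same reducer, yet the concatenated result [2, 4, 1, 3, 5] is not globally ordered. Only order by, which funnels everything through a single sort, gives a total order.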

Type conversion with cast

hive> select cast(a.id1 as float) from test a ;

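Sampling and bucketing

-- split the rows of test randomly into 2 buckets and take one of them

hive> select * from test tablesample(bucket 1 out of 2 on rand()) a;

-- split randomly into 3 buckets and take the second

hive> select * from test tablesample(bucket 2 out of 3 on rand());

-- bucket on a column's values instead of randomly

hive> select * from test tablesample(bucket 2 out of 3 on id1);

-- note that the buckets are not split evenly, so they do not necessarily hold the same number of rows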
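Why the buckets come out uneven becomes clear once bucketing is viewed as hashing. The Java sketch below models `tablesample(bucket x out of y on col)` for an int column under two assumptions that are mine, not taken from these notes: an int hashes to its own value, and a row belongs to bucket `(hash & Integer.MAX_VALUE) % y` (0-based). `BucketSampleSketch` is a hypothetical class for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of tablesample(bucket x out of y on col) for an int column. */
public class BucketSampleSketch {

    public static List<Integer> sample(List<Integer> column, int x, int y) {
        if (x < 1 || x > y) {
            throw new IllegalArgumentException("need 1 <= x <= y");
        }
        List<Integer> picked = new ArrayList<>();
        for (int v : column) {
            // assumed rule: 0-based bucket number from the non-negative hash
            int bucket = (Integer.hashCode(v) & Integer.MAX_VALUE) % y;
            if (bucket == x - 1) {
                picked.add(v);
            }
        }
        return picked;
    }
}
```

Applied to the id1 values of the six-row test table used in these notes (1, 88, 33, 1, 88, 33), bucket 2 out of 3 selects 1, 88, 1, 88, bucket 1 out of 3 selects 33, 33, and bucket 3 out of 3 is empty. The split depends entirely on how the values hash, so equal-sized buckets are not guaranteed.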

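Views

Differences

- Logical views vs. materialized views

Hive (as of the 2.3.6 release used in these notes) does not support materialized views. It merges the view's defining statement with the query and plans the combined statement, so a view is purely logical and only serves to simplify queries.

- No column-level access control through views

In Oracle a view can expose selected columns as a way of controlling access: a user needs no query privilege on the ta_staff table, only on the view, and sees just those columns. Hive does not support restricting columns this way, because a user must have query permission (file access) on the underlying table before the view can be read. A Hive view can, however, restrict rows with a where clause.

hive> select * from test;

OK

1 2 3

88 77 66

33 22 11

1 2 3

88 77 66

33 22 11

Time taken: 0.123 seconds, Fetched: 6 row(s)

For questions about Hive users, roles and groups, see csdn1.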

Indexes

- Creating an index

hive> create index test1_index on table test(id1) as

> 'org.apache.hadoop.hive.ql.index.compact.CompactIndexHandler'

> with deferred rebuild

> in table test_index_table;

-- bitmap indexes suit columns with few distinct values

create index index_test_2 on table test(id2) as 'BITMAP' with deferred rebuild in table test_index2_table;

- Dropping an index

hive> drop index test1_index on test;

- Listing indexes

hive> show formatted index on test;

- Rebuilding an index

alter index test1_index on test rebuild;

- Custom indexes

Implement Hive's index handler interface, package it, add the jar, and name the class with as when creating the index. For details see cwiki2.

Tuning

explain

explain shows how a query is translated into MapReduce stages.

explain select sum(id1) from test;

OK

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 depends on stages: Stage-1

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: test

Statistics: Num rows: 6 Data size: 42 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: id1 (type: int)

outputColumnNames: id1

Statistics: Num rows: 6 Data size: 42 Basic stats: COMPLETE Column stats: NONE

Group By Operator

aggregations: sum(id1)

mode: hash

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

Reduce Output Operator

sort order:

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

value expressions: _col0 (type: bigint)

Reduce Operator Tree:

Group By Operator

aggregations: sum(VALUE._col0)

mode: mergepartial

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink

Time taken: 0.31 seconds, Fetched: 44 row(s)

explain extended returns more detailed information:

explain extended select sum(id1) from test;

OK

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 depends on stages: Stage-1

STAGE PLANS:

Stage: Stage-1

Map Reduce

Map Operator Tree:

TableScan

alias: test

Statistics: Num rows: 6 Data size: 42 Basic stats: COMPLETE Column stats: NONE

GatherStats: false

Select Operator

expressions: id1 (type: int)

outputColumnNames: id1

Statistics: Num rows: 6 Data size: 42 Basic stats: COMPLETE Column stats: NONE

Group By Operator

aggregations: sum(id1)

mode: hash

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

Reduce Output Operator

null sort order:

sort order:

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

tag: -1

value expressions: _col0 (type: bigint)

auto parallelism: false

Path -> Alias:

hdfs://localhost:9000/user/hive/warehouse/test [test]

Path -> Partition:

hdfs://localhost:9000/user/hive/warehouse/test

Partition

base file name: test

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

properties:

COLUMN_STATS_ACCURATE {"BASIC_STATS":"true"}

bucket_count -1

column.name.delimiter ,

columns id1,id2,id3

columns.comments

columns.types int:int:int

file.inputformat org.apache.hadoop.mapred.TextInputFormat

file.outputformat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

location hdfs://localhost:9000/user/hive/warehouse/test

name default.test

numFiles 4

numRows 6

rawDataSize 42

serialization.ddl struct test { i32 id1, i32 id2, i32 id3}

serialization.format 1

serialization.lib org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

totalSize 48

transient_lastDdlTime 1576480718

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

properties:

COLUMN_STATS_ACCURATE {"BASIC_STATS":"true"}

bucket_count -1

column.name.delimiter ,

columns id1,id2,id3

columns.comments

columns.types int:int:int

file.inputformat org.apache.hadoop.mapred.TextInputFormat

file.outputformat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

location hdfs://localhost:9000/user/hive/warehouse/test

name default.test

numFiles 4

numRows 6

rawDataSize 42

serialization.ddl struct test { i32 id1, i32 id2, i32 id3}

serialization.format 1

serialization.lib org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

totalSize 48

transient_lastDdlTime 1576480718

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: default.test

name: default.test

Truncated Path -> Alias:

/test [test]

Needs Tagging: false

Reduce Operator Tree:

Group By Operator

aggregations: sum(VALUE._col0)

mode: mergepartial

outputColumnNames: _col0

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

GlobalTableId: 0

directory: hdfs://localhost:9000/tmp/hive/root/8d35f95d-893e-40ee-b831-6177341c7acb/hive_2019-12-17_11-12-25_359_70780381862038030-1/-mr-10001/.hive-staging_hive_2019-12-17_11-12-25_359_70780381862038030-1/-ext-10002

NumFilesPerFileSink: 1

Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE

Stats Publishing Key Prefix: hdfs://localhost:9000/tmp/hive/root/8d35f95d-893e-40ee-b831-6177341c7acb/hive_2019-12-17_11-12-25_359_70780381862038030-1/-mr-10001/.hive-staging_hive_2019-12-17_11-12-25_359_70780381862038030-1/-ext-10002/

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

properties:

columns _col0

columns.types bigint

escape.delim \

hive.serialization.extend.additional.nesting.levels true

serialization.escape.crlf true

serialization.format 1

serialization.lib org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

TotalFiles: 1

GatherStats: false

MultiFileSpray: false

Stage: Stage-0

Fetch Operator

limit: -1

Processor Tree:

ListSink

Time taken: 0.324 seconds, Fetched: 119 row(s)

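Join optimization

Put the large table on the right side of the join and the small table on the left.

Reason 1: the small table can be cached in memory, and each record of the large table is then matched against it.

The actual reason: for every duplicate join key in the table written on the left side of the join, the engine performs one extra round of processing.

For details see csdn3.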
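The first reason (buffer the small side, stream the large side) can be sketched in a few lines of Java. This is a simplified model of the idea rather than Hive's join implementation; `SmallTableJoinSketch` is a hypothetical class, rows are modeled as two-element string arrays of key and value, and only an inner join is shown.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical model: buffer the small table in memory, stream the large one. */
public class SmallTableJoinSketch {

    /** Inner join on element 0 of each row; emits "key,smallValue,bigValue". */
    public static List<String> join(List<String[]> small, List<String[]> big) {
        // build phase: memory use grows with the size of the buffered (small) table
        Map<String, List<String[]>> buffered = new HashMap<>();
        for (String[] s : small) {
            buffered.computeIfAbsent(s[0], k -> new ArrayList<>()).add(s);
        }
        // probe phase: each row of the large table is looked at once and never stored
        List<String> out = new ArrayList<>();
        for (String[] b : big) {
            List<String[]> matches = buffered.get(b[0]);
            if (matches == null) {
                continue;
            }
            for (String[] s : matches) {
                out.add(b[0] + "," + s[1] + "," + b[1]);
            }
        }
        return out;
    }
}
```

The design point is that only the buffered side has to fit in memory, so the cost of getting the order wrong grows with the size (and, per the note above, the number of duplicate keys) of whichever table ends up on the buffered side.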

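Local mode

Only helps with small data sets, so it has little practical value.

Parallel execution

Stages of a job that do not depend on each other can run in parallel (hive.exec.parallel=true), which shortens the job.

Strict mode

In strict mode (hive.mapred.mode=strict):

- a query on a partitioned table must filter on a partition in its where clause

- order by must be accompanied by limit

- Cartesian products are disabled

Adjusting the number of mappers and reducers

Tune the numbers according to the count and size of the input and output files.

JVM reuse

Reusing JVMs cuts the cost of starting and tearing down a JVM for every new task. The drawback is that the longest-running task holds its slot for a long time, which can block other work.

Indexes

As in Oracle, a filter on an indexed column can speed up a query considerably, but maintaining the index takes time and it has to be rebuilt.

Partitions

Partitioning gives a clear improvement, but too many partitions create too many files for the NameNode to track and can exhaust its memory.

Speculative execution

In a distributed cluster, program bugs (including bugs in Hadoop itself), unbalanced load or uneven resource distribution can make the tasks of one job run at different speeds. Some tasks may be markedly slower than the rest (for example, one task is at 50% while all the others have finished), and such tasks slow down the whole job. To avoid this, Hadoop uses speculative execution: following certain rules it identifies the straggling tasks and launches a backup task for each one, which processes the same data in parallel with the original task. The result of whichever task finishes successfully first is used.

If the input is large and the map or reduce tasks are long-running anyway, the resources wasted by speculative execution can be very large.

Multiple group by in a single MapReduce job

A setting controls whether this feature is enabled; when it is, several group by operations can be assembled into a single MapReduce job.

Virtual columns

Used to diagnose results; enabled through configuration parameters.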

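Compression

Common compression formats

Compression saves disk space and speeds up file transfer, at the cost of CPU time spent compressing and decompressing.

- For large volumes of data that are rarely computed on, gzip is the usual choice (a higher compression ratio than LZO or Snappy, but the slowest to compress and decompress)

- For smaller volumes of data that are computed on frequently, LZO or Snappy is the usual choice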

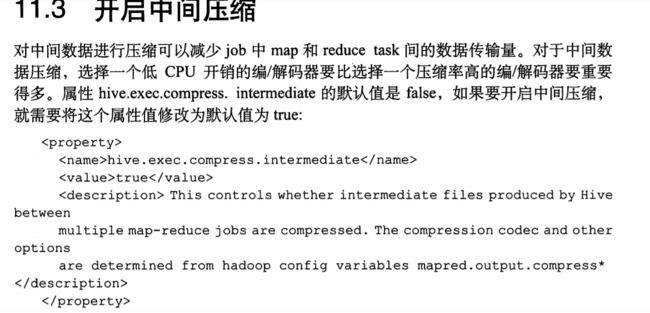

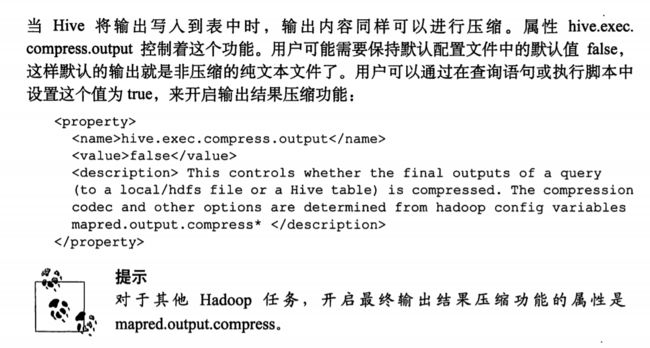
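The space-for-CPU trade-off can be observed without a cluster. The sketch below uses the JDK's `java.util.zip` gzip streams, not Hadoop's `GzipCodec`, so it only illustrates the format; `GzipSketch` is a hypothetical class name.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

/** Hypothetical helper that gzips and gunzips a byte array in memory. */
public class GzipSketch {

    public static byte[] compress(byte[] raw) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(buf)) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // closing the gzip stream above flushes the trailer into buf
        return buf.toByteArray();
    }

    public static byte[] decompress(byte[] packed) {
        try (GZIPInputStream gz = new GZIPInputStream(new ByteArrayInputStream(packed))) {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] chunk = new byte[4096];
            int n;
            while ((n = gz.read(chunk)) != -1) {
                out.write(chunk, 0, n);
            }
            return out.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Highly repetitive input, such as thousands of identical tab-separated rows, shrinks dramatically, while a file of only a few dozen bytes gains little because the gzip header and trailer take up a fixed number of bytes. Timing `compress` and `decompress` on realistic data is a simple way to gauge the CPU side of the trade-off.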

Configuration

- Enabling Hadoop compression codecs

Add the following to /opt/install/hadoop/hadoop-2.7.1/etc/hadoop/core-site.xml:

<property>
  <name>io.compression.codecs</name>
  <value>org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.SnappyCodec</value>
</property>

-- after this change the available codecs show up in Hive without restarting Hadoop
hive> set io.compression.codecs;
io.compression.codecs=org.apache.hadoop.io.compress.DefaultCodec,org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.BZip2Codec,org.apache.hadoop.io.compress.SnappyCodec

- Enabling intermediate compression

- Enabling compression of the final output

- Using sequence files

Testing

- Using intermediate compression

hive> set hive.exec.compress.intermediate=true;
hive> create table interemediate_com_om row format delimited fields terminated by '\t' as select * from test;
Automatically selecting local only mode for query
WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
Query ID = root_20191218101653_df7baa3b-9570-40a7-b480-be91907bfb1e
Total jobs = 3
Launching Job 1 out of 3
Number of reduce tasks is set to 0 since there's no reduce operator
Job running in-process (local Hadoop)
2019-12-18 10:17:03,294 Stage-1 map = 0%, reduce = 0%
Ended Job = job_local1362494287_0001
Stage-4 is selected by condition resolver.
Stage-3 is filtered out by condition resolver.
Stage-5 is filtered out by condition resolver.
Moving data to directory hdfs://localhost:9000/user/hive/warehouse/.hive-staging_hive_2019-12-18_10-16-53_073_1921170228225164888-1/-ext-10002
Moving data to directory hdfs://localhost:9000/user/hive/warehouse/interemediate_com_om
MapReduce Jobs Launched:
Stage-Stage-1: HDFS Read: 48 HDFS Write: 132 SUCCESS
Total MapReduce CPU Time Spent: 0 msec
OK
Time taken: 11.773 seconds
hive> dfs -ls hdfs://localhost:9000/user/hive/warehouse/interemediate_com_om;
Found 1 items
-rwxr-xr-x 1 root supergroup 48 2019-12-18 10:17 hdfs://localhost:9000/user/hive/warehouse/interemediate_com_om/000000_0
hive> dfs -cat hdfs://localhost:9000/user/hive/warehouse/interemediate_com_om/*;
1 2 3
88 77 66
33 22 11
1 2 3
88 77 66
33 22 11
-- the final result is still plain text

- Compressing the final output with gzip

Hadoop configuration:

<property>
  <name>mapred.output.compress</name>
  <value>true</value>
</property>
<property>
  <name>mapred.compress.map.output</name>
  <value>true</value>
</property>
<property>
  <name>mapred.output.compression.codec</name>
  <value>org.apache.hadoop.io.compress.GzipCodec</value>
</property>

Then in Hive:

-- check the compression codec
hive> set mapred.output.compression.codec;
mapred.output.compression.codec=org.apache.hadoop.io.compress.GzipCodec
-- check whether output files are compressed
hive> set hive.exec.compress.output;
hive.exec.compress.output=false
hive> set hive.exec.compress.output=true;
-- run the statement
hive> create table final_com_on_gz2 row format delimited fields terminated by '\t' as select * from test;
Automatically selecting local only mode for query
WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
Query ID = root_20191218111200_4d4e7160-4f68-451c-86cf-9cb5c8b54129
Total jobs = 3
Launching Job 1 out of 3
Number of reduce tasks is set to 0 since there's no reduce operator
Job running in-process (local Hadoop)
2019-12-18 11:12:12,231 Stage-1 map = 100%, reduce = 0%
Ended Job = job_local1413027372_0001
Stage-4 is selected by condition resolver.
Stage-3 is filtered out by condition resolver.
Stage-5 is filtered out by condition resolver.
Moving data to directory hdfs://localhost:9000/user/hive/warehouse/.hive-staging_hive_2019-12-18_11-12-00_983_5336074740355100025-1/-ext-10002
Moving data to directory hdfs://localhost:9000/user/hive/warehouse/final_com_on_gz2
MapReduce Jobs Launched:
Stage-Stage-1: HDFS Read: 48 HDFS Write: 127 SUCCESS
Total MapReduce CPU Time Spent: 0 msec
OK
Time taken: 12.716 seconds
hive> dfs -ls hdfs://localhost:9000/user/hive/warehouse/final_com_on_gz2;
Found 1 items
-rwxr-xr-x 1 root supergroup 47 2019-12-18 11:12 hdfs://localhost:9000/user/hive/warehouse/final_com_on_gz2/000000_0.gz
-- the .gz file can be copied to the local filesystem with hadoop fs -get and then viewed with zcat

- Writing the output as a sequence file

-- check the compression settings
hive> set mapred.output.compression.codec;
mapred.output.compression.codec=org.apache.hadoop.io.compress.GzipCodec
hive> set hive.exec.compress.output;
hive.exec.compress.output=false
-- compress the result
hive> set hive.exec.compress.output=true;
hive> set mapred.output.compression.type;
mapred.output.compression.type=RECORD
-- use BLOCK compression for the sequence file
hive> set mapred.output.compression.type=BLOCK;
-- run the statement
hive> create table final_comp_on_gz_seq row format delimited fields terminated by '\t' stored as sequencefile as select * from test;
Automatically selecting local only mode for query
WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
Query ID = root_20191223091402_98a1b33e-d0d1-4b5b-93a7-13b108e9ae59
Total jobs = 3
Launching Job 1 out of 3
Number of reduce tasks is set to 0 since there's no reduce operator
Job running in-process (local Hadoop)
2019-12-23 09:14:13,261 Stage-1 map = 100%, reduce = 0%
Ended Job = job_local1832209723_0001
Stage-4 is selected by condition resolver.
Stage-3 is filtered out by condition resolver.
Stage-5 is filtered out by condition resolver.
Moving data to directory hdfs://localhost:9000/user/hive/warehouse/.hive-staging_hive_2019-12-23_09-14-02_976_8408522203594088276-1/-ext-10002
Moving data to directory hdfs://localhost:9000/user/hive/warehouse/final_comp_on_gz_seq
MapReduce Jobs Launched:
Stage-Stage-1: HDFS Read: 48 HDFS Write: 355 SUCCESS
Total MapReduce CPU Time Spent: 0 msec
OK
Time taken: 11.52 seconds
-- check the file
hive> dfs -ls -R /user/hive/warehouse/final_comp_on_gz_seq;
-rwxr-xr-x 1 root supergroup 271 2019-12-23 09:14 /user/hive/warehouse/final_comp_on_gz_seq/000000_0
-- the file is compressed binary, so cat cannot display it
hive> dfs -cat /user/hive/warehouse/final_comp_on_gz_seq/*;
SEQorg.apache.hadoop.io.BytesWritableorg.apache.hadoop.io.Textorg.apache.hadoop.io.compress.GzipCodeckQ=kQ=
-- a sequence file can be read with -text
hive> dfs -text /user/hive/warehouse/final_comp_on_gz_seq/*;
1 2 3
88 77 66
33 22 11
1 2 3
88 77 66
33 22 11

- Archiving

Archiving merges many files into one, which reduces the pressure on the NameNode.

-- create a partitioned test table
create table hive_text(line string) partitioned by (folder string);
-- load data
hive> ! ls ${env:HIVE_HOME};
bin binary-package-licenses conf derby.log examples hcatalog jdbc lib LICENSE metastore_db NOTICE RELEASE_NOTES.txt scripts
hive> alter table hive_text add partition (folder='docs');
hive> load data local inpath '${env:HIVE_HOME}/NOTICE' into table hive_text partition (folder='docs');
Loading data to table default.hive_text partition (folder=docs)
OK
Time taken: 1.158 seconds
hive> load data local inpath '${env:HIVE_HOME}/RELEASE_NOTES.txt' into table hive_text partition (folder='docs');
Loading data to table default.hive_text partition (folder=docs)
OK
Time taken: 0.931 seconds
hive> select * from hive_text;
-- allow archiving
set hive.archive.enabled=true;
-- archive the partition directory
hive> alter table hive_text archive partition (folder='docs');
intermediate.archived is hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs_INTERMEDIATE_ARCHIVED
intermediate.original is hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs_INTERMEDIATE_ORIGINAL
Creating data.har for hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs
in hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs/.hive-staging_hive_2019-12-23_10-08-32_249_9145301772833183402-1/-ext-10000/partlevel
Please wait... (this may take a while)
Moving hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs/.hive-staging_hive_2019-12-23_10-08-32_249_9145301772833183402-1/-ext-10000/partlevel to hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs_INTERMEDIATE_ARCHIVED
Moving hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs to hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs_INTERMEDIATE_ORIGINAL
Moving hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs_INTERMEDIATE_ARCHIVED to hdfs://localhost:9000/user/hive/warehouse/hive_text/folder=docs
OK
Time taken: 25.028 seconds
-- if archiving fails, check the Hive execution log for an error like this one:
2019-12-23T10:03:40,679 ERROR [07dc3327-de2f-4586-9b96-229aad0d6bc3 main] exec.DDLTask: java.lang.NoClassDefFoundError: org/apache/hadoop/tools/HadoopArchives
-- the cause is that Hadoop's archive jar must be copied into Hive's lib directory; for the Hadoop 2.7.1 installation used here the jar is found and copied as follows:
cd $HADOOP_HOME; find . -name 'hadoop*archive*.jar'
./share/hadoop/tools/lib/hadoop-archives-2.7.1.jar
cp ./share/hadoop/tools/lib/hadoop-archives-2.7.1.jar $HIVE_HOME/lib

Development

Logging

- Start the Hive CLI with debug logging

hive -hiveconf hive.root.logger=DEBUG,console

debug

ecs-d0b0:~ # hive --help --debug

Allows to debug Hive by connecting to it via JDI API

Usage: hive --debug[:comma-separated parameters list]

Parameters:

recursive=<y|n> Should child JVMs also be started in debug mode. Default: y

port=<port_number> Port on which main JVM listens for debug connection. Default: 8000

mainSuspend=<y|n> Should main JVM wait with execution for the debugger to connect. Default: y

childSuspend=<y|n> Should child JVMs wait with execution for the debugger to connect. Default: n

swapSuspend Swaps suspend options between main an child JVMs

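Functions

Built-in functions

See Appendix 1 (built-in function reference).

Listing functions

-- list the available functions

hive> show functions;

-- show the details of a function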

hive> desc function concat;

hive> desc function extended concat;

Calling functions

-- without a table

hive> select concat('1','2') ;

-- with a table

hive> select concat('1','2') from src;

Table-generating functions (UDTF)

-- build a table

hive> create table test(id int,arr array<int>);

hive> insert into test select 1,array(11,12,13);

hive> insert into test select 2,array(21,22,23);

-- the array and explode functions

hive> select explode(array(1,2,3));

OK

1

2

3

-- explode cannot be used alongside other columns in the select list

hive> select id ,explode(arr) from test;

--FAILED: SemanticException [Error 10081]: UDTF's are not supported outside the SELECT clause, nor nested in expressions

-- but it can be combined with lateral view

hive> select id ,ex_arr from test lateral view explode(arr) subView as ex_arr ;

1 11

1 12

1 13

2 21

2 22

2 23

UDF

Standard types

- Create a Maven project in IDEA

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <parent>
    <artifactId>parent</artifactId>
    <groupId>com.hujiawei666</groupId>
    <version>1.0-SNAPSHOT</version>
  </parent>
  <modelVersion>4.0.0</modelVersion>
  <artifactId>myUDF</artifactId>
  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.12</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>2.7.1</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.hive</groupId>
      <artifactId>hive-cli</artifactId>
      <version>2.3.6</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.hive</groupId>
      <artifactId>hive-exec</artifactId>
      <version>2.3.6</version>
    </dependency>
  </dependencies>
  <build>
    <finalName>myUDF</finalName>
  </build>
</project>

- Write the Java class

package com.hujiawei666.udf;

import org.apache.hadoop.hive.ql.exec.UDF;

import java.text.SimpleDateFormat;

import java.util.Date;

/**

* @author hujw

* date: 2019/12/13 11:17

**/

public class IsVocation extends UDF {

private SimpleDateFormat df;

private int minLength = 6;

private int[] volcation_mon_arr;

public IsVocation() {

df = new SimpleDateFormat("yyyyMM");

volcation_mon_arr = new int[]{

1, 2, 7, 8

};

}

public boolean evaluate(String dateString) {

int month = getMonth(dateString);

if (month == 0) {

return false;

} else {

for (int i = 0; i < volcation_mon_arr.length; i++) {

if (volcation_mon_arr[i] == month) {

return true;

}

}

}

return false;

}

private int getMonth(String dateString) {

if (dateString == null || dateString.length() < minLength) {

return 0;

}

try {

String monStr = dateString.substring(0, 6);

Date mon = df.parse(monStr);

if (mon != null) {

int month = mon.getMonth() + 1;

return month;

}

} catch (Exception e) {

System.out.println("dateString " + dateString + " is not a valid format");

e.printStackTrace();

}

return 0;

}

public static void main(String[] args) {

System.out.println(new IsVocation().evaluate("20191207"));

System.out.println(new IsVocation().evaluate("20190135"));

System.out.println(new IsVocation().evaluate("20190255"));

System.out.println(new IsVocation().evaluate("20190211"));

System.out.println(new IsVocation().evaluate("20190311"));

}

}

-- create a sample table

hive> create table test(dt string);

hive> load data local inpath '/root/test/data' into table test;

ecs-d0b0:~ # cat test/data

20191212

20180112

20190212

20190312

20190712

hive> select * from test;

20191212

20180112

20190212

20190312

20190712

hive> add jar /root/myUdf.jar;

Added [/root/myUdf.jar] to class path

Added resources: [/root/myUdf.jar]

hive> create function is_vocation as 'com.hujiawei666.udf.IsVocation';

hive> select a.dt,is_vocation(a.dt) from test a;

20191212 false

20180112 true

20190212 true

20190312 false

20190712 true

- Loading the function from a jar stored in HDFS

ecs-d0b0:~ # hadoop fs -put isVocation.jar /user/

create function default.is_vocation3 as 'com.hujiawei666.udf.IsVocation' using jar 'hdfs://localhost:9000/user/isVocation.jar';

Complex types

Writing the function

package com.hujiawei666.udf;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.exec.UDFArgumentTypeException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDF;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDFUtils;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

/**

* @author hujw

* date: 2019/12/23 10:45

**/

public class MyNvl extends GenericUDF {

private GenericUDFUtils.ReturnObjectInspectorResolver returnOIResolver;

private ObjectInspector[] argumentsIOs;

@Override

public ObjectInspector initialize(ObjectInspector[] objectInspectors) throws UDFArgumentException {

argumentsIOs = objectInspectors;

if (objectInspectors.length != 2) {

throw new UDFArgumentException("the operator 'NVL' accepts 2 arguments");

}

returnOIResolver = new GenericUDFUtils.ReturnObjectInspectorResolver(true);

if (!(returnOIResolver.update(argumentsIOs[0]) &&

returnOIResolver.update(argumentsIOs[1])

)) {

throw new UDFArgumentTypeException(2, "the 1st and 2nd args of function should be the same type," +

"but they are different:" + argumentsIOs[0].getTypeName() +

" and " + argumentsIOs[1].getTypeName());

}

return returnOIResolver.get();

}

@Override

public Object evaluate(DeferredObject[] deferredObjects) throws HiveException {

Object retVal = returnOIResolver.convertIfNecessary(deferredObjects[0].get(), argumentsIOs[0]);

if (retVal == null) {

retVal = returnOIResolver.convertIfNecessary(deferredObjects[1].get(), argumentsIOs[1]);

}

return retVal;

}

@Override

public String getDisplayString(String[] strings) {

StringBuilder sb = new StringBuilder();

sb.append("if ");

sb.append(strings[0]);

sb.append(" is null returns ");

sb.append(strings[1]);

return sb.toString();

}

}

Creating the function

add jar /root/test/myUdf.jar;

create function mynvl as 'com.hujiawei666.udf.MyNvl';

hive> select mynvl(1,2) as c1,mynvl(null,2) as c2 ,mynvl('','aa') as c3,mynvl(null,'b');

OK

1 2 b

Time taken: 0.062 seconds, Fetched: 1 row(s)

UDAF

User-defined aggregate functions

package com.hujiawei666.udf;

import org.apache.hadoop.hive.ql.exec.Description;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.exec.UDFArgumentTypeException;

import org.apache.hadoop.hive.ql.parse.SemanticException;

import org.apache.hadoop.hive.ql.udf.generic.AbstractGenericUDAFResolver;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDAFEvaluator;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDAFMkCollectionEvaluator;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDAFParameterInfo;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.typeinfo.TypeInfo;

import org.slf4j.LoggerFactory;

/**

* @author hujw

* date: 2019/12/24 9:26

**/

@Description(name = "collect",value = "_FUNC_(X) -return a list of objects." +

"CAUTION will easily OOM on large data sets")

public class GenericUDAFCollect extends AbstractGenericUDAFResolver {

static final org.slf4j.Logger LOGGER = LoggerFactory.getLogger(GenericUDAFCollect.class);

public GenericUDAFCollect() {

}

@Override

public GenericUDAFEvaluator getEvaluator(GenericUDAFParameterInfo info) throws SemanticException {

if (info.isAllColumns()) {

throw new SemanticException("The specified syntax for UDAF invocation is invalid.");

} else {

return this.getEvaluator(info.getParameters());

}

}

@Override

public GenericUDAFEvaluator getEvaluator(TypeInfo[] info) throws SemanticException {

if (info.length != 1) {

throw new UDFArgumentTypeException(info.length - 1, "Exactly one argument is expected");

}

if (info[0].getCategory() != ObjectInspector.Category.PRIMITIVE) {

throw new UDFArgumentTypeException(0, "Only primitive type arguments are accepted but " +

info[0].getTypeName() + " was passed as parameter 1");

}

return new GenericUDAFMkListEvaluator();

}

}

package com.hujiawei666.udf;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDAFMkCollectionEvaluator;

import org.apache.hadoop.hive.serde2.objectinspector.*;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

/**

* @author hujw

* date: 2019/12/24 9:38

**/

public class GenericUDAFMkListEvaluator extends GenericUDAFMkCollectionEvaluator {

private PrimitiveObjectInspector inputOI;

private StandardListObjectInspector loi;

private StandardListObjectInspector internalMergeOI;

@Override

public ObjectInspector init(Mode m, ObjectInspector[] parameters) throws HiveException {

super.init(m, parameters);

if (m == Mode.PARTIAL1) {

inputOI = (PrimitiveObjectInspector) parameters[0];

return ObjectInspectorFactory.

getStandardListObjectInspector(

ObjectInspectorUtils.getStandardObjectInspector(inputOI));

} else {

if (!(parameters[0] instanceof StandardListObjectInspector)) {

inputOI = (PrimitiveObjectInspector) ObjectInspectorUtils.

getStandardObjectInspector(parameters[0]);

return ObjectInspectorFactory.getStandardListObjectInspector(inputOI);

} else {

internalMergeOI = (StandardListObjectInspector) parameters[0];

inputOI = (PrimitiveObjectInspector) internalMergeOI.getListElementObjectInspector();

loi = (StandardListObjectInspector) ObjectInspectorUtils.getStandardObjectInspector(internalMergeOI);

return loi;

}

}

}

static class MkArrayAggregationBuffer implements AggregationBuffer {

List<Object> container;

}

@Override

public void reset(AggregationBuffer agg) throws HiveException {

((MkArrayAggregationBuffer) agg).container = new ArrayList<>();

}

@Override

public AggregationBuffer getNewAggregationBuffer() throws HiveException {

MkArrayAggregationBuffer ret=new MkArrayAggregationBuffer();

reset(ret);

return ret;

}

//mapside

@Override

public void iterate(AggregationBuffer agg, Object[] parameters) throws HiveException {

assert parameters.length == 1;

Object p = parameters[0];

if (p != null) {

MkArrayAggregationBuffer myagg = (MkArrayAggregationBuffer)agg;

this.putIntoList(p, myagg);

}

}

@Override

public Object terminatePartial(AggregationBuffer agg) throws HiveException {

MkArrayAggregationBuffer myagg = (MkArrayAggregationBuffer)agg;

List<Object> ret = new ArrayList(myagg.container.size());

ret.addAll(myagg.container);

return ret;

}

@Override

public void merge(AggregationBuffer agg, Object partial) throws HiveException {

MkArrayAggregationBuffer myagg = (MkArrayAggregationBuffer)agg;

List<Object> partialResult = (ArrayList)this.internalMergeOI.getList(partial);

if (partialResult != null) {

Iterator var5 = partialResult.iterator();

while(var5.hasNext()) {

Object i = var5.next();

putIntoList(i, myagg);

}

}

}

@Override

public Object terminate(AggregationBuffer agg) throws HiveException {

MkArrayAggregationBuffer myagg = (MkArrayAggregationBuffer)agg;

List<Object> ret = new ArrayList(myagg.container.size());

ret.addAll(myagg.container);

return ret;

}

private void putIntoList(Object p, MkArrayAggregationBuffer agg) {

Object pCopy = ObjectInspectorUtils.copyToStandardObject(p, this.inputOI);

agg.container.add(pCopy);

}

}

Running it

hive> add jar /root/test/myUdf.jar;

hive> create function collect as 'com.hujiawei666.udf.GenericUDAFCollect';

hive> create table collection_test(name string,age int) row format delimited fields terminated by ' ';

ecs-d0b0:~/test # cat afile.txt

hujiawei 11

hujiawei 22

wangning 33

wangning 44

hive> load data local inpath '/root/test/afile.txt' into table collection_test;

hive> select * from collection_test;

OK

hujiawei 11

hujiawei 22

wangning 33

wangning 44

hive> select collect(name) from collection_test;

["hujiawei","hujiawei","wangning","wangning"]


hive> select concat_ws(',',collect(name)) from collection_test;

hujiawei,hujiawei,wangning,wangning

hive> desc function concat_ws;

concat_ws(separator, [string | array(string)]+) - returns the concatenation of the strings separated by the separator.

-- used together, the two give the effect of MySQL's group_concat

hive> select name,concat_ws(',',collect(cast(age as string))) from collection_test group by name;

hujiawei 11,22

wangning 33,44
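To make the group_concat behaviour concrete, here is a minimal Python sketch of what the query above computes. It is only an illustration: the function name `group_concat` is made up for this example, and the rows are the four lines of afile.txt. Hive itself gives no ordering guarantee for the collected values, whereas this sketch keeps input order.

```python
from collections import OrderedDict


def group_concat(rows, sep=","):
    """Group (name, age) rows by name and join the ages with sep."""
    groups = OrderedDict()
    for name, age in rows:
        # collect(): gather the values that belong to one key
        groups.setdefault(name, []).append(str(age))
    # concat_ws(): join each collected list into one string
    return [(name, sep.join(ages)) for name, ages in groups.items()]


rows = [("hujiawei", 11), ("hujiawei", 22), ("wangning", 33), ("wangning", 44)]
result = group_concat(rows)
print(result)
```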

UDTF

Table-generating functions: they can produce multiple columns and multiple rows.

- A simple example

package com.hujiawei666.udf;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.WritableConstantIntObjectInspector;
import org.apache.hadoop.io.IntWritable;

import java.util.ArrayList;

/**
 * @author hujw
 * date: 2019/12/25 9:24
 **/
public class GenericUDTFFor extends GenericUDTF {
    IntWritable start;
    IntWritable end;
    IntWritable inc;
    Object[] forwardObj = null;

    @Override
    public StructObjectInspector initialize(ObjectInspector[] argOIs) throws UDFArgumentException {
        start = ((WritableConstantIntObjectInspector) argOIs[0]).getWritableConstantValue();
        end = ((WritableConstantIntObjectInspector) argOIs[1]).getWritableConstantValue();
        if (argOIs.length == 3) {
            inc = ((WritableConstantIntObjectInspector) argOIs[2]).getWritableConstantValue();
        } else {
            inc = new IntWritable(1);
        }
        this.forwardObj = new Object[1];
        ArrayList<String> fieldNames = new ArrayList<>();
        ArrayList<ObjectInspector> fieldOIs = new ArrayList<>();
        fieldNames.add("col0");
        fieldOIs.add(
                PrimitiveObjectInspectorFactory.getPrimitiveJavaObjectInspector(
                        PrimitiveObjectInspector.PrimitiveCategory.INT)
        );
        return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);
    }

    @Override
    public void process(Object[] objects) throws HiveException {
        for (int i = start.get(); i < end.get(); i = i + inc.get()) {
            this.forwardObj[0] = Integer.valueOf(i);
            // forward() comes from the parent class; it emits the current row so the next one can start
            forward(forwardObj);
        }
    }

    @Override
    public void close() throws HiveException {
    }
}

hive> add jar /root/test/myUdf.jar;
Added [/root/test/myUdf.jar] to class path
Added resources: [/root/test/myUdf.jar]
hive> create function forx as 'com.hujiawei666.udf.GenericUDTFFor';
hive> select forx(1,5);
1
2
3
4
hive> select forx(1,100,10);
1
11
21
31
41
51
61
71
81
91

- The built-in parse_url_tuple function

It splits a URL into several parts.

hive> desc function parse_url_tuple;
parse_url_tuple(url, partname1, partname2, ..., partnameN) - extracts N (N>=1) parts from a URL.
It takes a URL and one or multiple partnames, and returns a tuple. All the input parameters and output column types are string.
hive> select parse_url_tuple('https://blog.csdn.net/qq467215628','HOST','PATH');
blog.csdn.net /qq467215628

- Returning multiple columns with a custom structure

The custom function book parses a string such as "20191225|Hive Programing Note|Hujiawei,Dukai" into one row with multiple columns, or into one column with multiple rows.

package com.hujiawei666.udf;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

import java.util.ArrayList;

/**
 * @author hujw
 * date: 2019/12/25 9:54
 **/
public class UDTFBook extends GenericUDTF {
    Object[] forwardObj = null;

    @Override
    public StructObjectInspector initialize(ObjectInspector[] argOIs) throws UDFArgumentException {
        ArrayList<String> fieldNames = new ArrayList<>();
        ArrayList<ObjectInspector> fieldOIs = new ArrayList<>();
        fieldNames.add("isbn");
        fieldOIs.add(PrimitiveObjectInspectorFactory.getPrimitiveJavaObjectInspector(PrimitiveObjectInspector.PrimitiveCategory.INT));
        fieldNames.add("title");
        fieldOIs.add(PrimitiveObjectInspectorFactory.getPrimitiveJavaObjectInspector(PrimitiveObjectInspector.PrimitiveCategory.STRING));
        fieldNames.add("author");
        fieldOIs.add(PrimitiveObjectInspectorFactory.getPrimitiveJavaObjectInspector(PrimitiveObjectInspector.PrimitiveCategory.STRING));
        forwardObj = new Object[3];
        return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);
    }

    @Override
    public void process(Object[] objects) throws HiveException {
        String parts = objects[0].toString();
        // split on the literal '|' character
        String[] part = parts.split("\\|");
        forwardObj[0] = Integer.parseInt(part[0]);
        forwardObj[1] = part[1];
        forwardObj[2] = part[2];
        forward(forwardObj);
    }

    @Override
    public void close() throws HiveException {
    }
}

create table book(str string);
insert into book values('20191225|Hive Programing Note|Hujiawei,Dukai');
add jar /root/test/myUdf.jar;
create function book as 'com.hujiawei666.udf.UDTFBook';
hive> select book(str) from book;
20191225 Hive Programing Note Hujiawei,Dukai
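The two output shapes mentioned for the book function (one row with several columns, or one column with several rows) can be sketched in plain Python. This is a rough illustration, not the UDTF itself; `parse_book` and `explode_authors` are hypothetical helper names introduced here.

```python
def parse_book(record):
    """One row, several columns: split 'isbn|title|authors' on the pipe."""
    isbn, title, authors = record.split("|")
    return (int(isbn), title, authors)


def explode_authors(record):
    """One column, several rows: emit one single-column row per author."""
    _, _, authors = record.split("|")
    return [(author,) for author in authors.split(",")]


record = "20191225|Hive Programing Note|Hujiawei,Dukai"
row = parse_book(record)
author_rows = explode_authors(record)
print(row)
print(author_rows)
```

In a real UDTF the second shape would correspond to calling forward() once per author instead of once per input record.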

Macros

hive> create temporary macro sigmod (x double)1.0/(1.0+exp(-x));

hive> select sigmod(2);

0.8807970779778823

hive> create temporary macro aa (x double) x*x;

hive> select aa(2);

4.0
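As a quick cross-check of the two macros, the same expressions can be evaluated in Python. This is only a sketch; the function names are chosen here and are not part of Hive. The sigmoid value should agree with the Hive result above up to floating-point rounding.

```python
import math


def sigmoid(x):
    """Same expression as the macro body: 1.0 / (1.0 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def square(x):
    """Same expression as the second macro: x * x."""
    return x * x


print(sigmoid(2.0))
print(square(2.0))
```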

stream

Transforming with the built-in cat, cut and sed

hive> create table aa(c1 int,c2 int);

hive> select * from aa;

OK

1 2

3 4

hive> select transform(c1,c2) using '/bin/cat' as newA,newB from aa;

1 2

3 4

-- cast the output columns to specific types

hive> select transform(c1,c2) using '/bin/cat' as (newA int,newB double) from aa;

1 2.0

3 4.0

-- string replacement

hive> select transform(c1,c2) using '/bin/sed s/2/x/' from aa;

1 x

3 4
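The behaviour of transform can be pictured as follows: each row is written to the script as one tab-separated text line, and each line the script prints is split on tabs back into columns. The sketch below imitates that in Python under those assumptions; `transform` and `sed_first_2_to_x` are hypothetical names, and no external process is started.

```python
import re


def transform(rows, line_filter):
    """Serialize rows as tab-separated lines, filter them, parse the result."""
    out = []
    for row in rows:
        line = "\t".join(str(col) for col in row)
        out.append(tuple(line_filter(line).split("\t")))
    return out


def sed_first_2_to_x(line):
    # Stand-in for `sed s/2/x/`: replace only the first "2" on each line.
    return re.sub("2", "x", line, count=1)


rows = [(1, 2), (3, 4)]
cat_result = transform(rows, lambda line: line)  # '/bin/cat' passes lines through unchanged
sed_result = transform(rows, sed_first_2_to_x)
print(cat_result)
print(sed_result)
```

Because everything crosses the pipe as text, the columns come back as strings unless the AS clause declares types, which is what the `(newA int,newB double)` form above does.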

Using custom scripts

- Create ctof.sh to convert Celsius to Fahrenheit

#!/usr/bin/env bash

while read line; do

res=`echo "scale=2;((9/5)*$line)+32"|bc`

echo $res

done

Load it into Hive

hive> add file /root/test/ctof.sh;

hive> select transform(c1) using 'ctof.sh' as newA from aa;

33.80

37.40
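For comparison, the same conversion as ctof.sh can be written in Python. This is a sketch with a made-up function name; it formats to two decimals to mirror the `scale=2` setting passed to bc.

```python
def c_to_f(celsius):
    """Convert Celsius to Fahrenheit: F = (9/5) * C + 32."""
    return 9.0 / 5.0 * celsius + 32.0


lines = ["1", "3"]  # the c1 values of table aa, as they arrive on stdin
converted = ["%.2f" % c_to_f(float(line)) for line in lines]
print(converted)
```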

- Splitting strings with a Perl script

afile.txt

ecs-d0b0:~/test # cat afile.txt

k1=v1,k2=v2

k3=v3,k4=v4,k5=v5

split_kv.pl

#!/usr/bin/perl

while (<STDIN>) { ... }

hive> create table tb_split(line string);

hive> load data local inpath '/root/test/afile.txt' into table tb_split;

hive> select * from tb_split;

k1=v1,k2=v2

k3=v3,k4=v4,k5=v5

hive> select transform(line) using 'perl /root/test/split_kv.pl' as (key,value) from tb_split;

k1 v1

k2 v2

k3 v3

k4 v4

k5 v5
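The logic that split_kv.pl has to implement follows from the input and output shown: split each line on commas, then split each item on the equals sign. A minimal Python sketch of that logic (an illustration written for these notes, not the original Perl) could look like this:

```python
def split_kv(line):
    """Turn 'k1=v1,k2=v2' into [('k1', 'v1'), ('k2', 'v2')]."""
    pairs = []
    for item in line.strip().split(","):
        key, value = item.split("=", 1)
        pairs.append((key, value))
    return pairs


lines = ["k1=v1,k2=v2", "k3=v3,k4=v4,k5=v5"]
rows = [pair for line in lines for pair in split_kv(line)]
for key, value in rows:
    # A streaming script would print key and value separated by a tab.
    print("%s\t%s" % (key, value))
```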

Compiled C programs can be run the same way

#include ...

gcc test.c -o getValue

hive> select transform(line) using '/root/test/getValue' as newLine from tb_split;

k1=v1

k2=v2

k3=v3

k4=v4

k5=v5

Emulating an aggregate function with Perl

sum.pl

#!/usr/bin/perl

my $sum=0;

while (<STDIN>) { ... }

hive> select * from tb_sum;

1

2

3

2

3

4

hive> ADD FILE /root/test/sum.pl;

hive> select transform(num) using 'perl sum.pl' as sum from tb_sum;

15
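The aggregate effect comes from keeping a running total across all input lines and printing once at the end. A small Python sketch of that idea (the function name is made up here) is shown below. One caveat to keep in mind: the script only sees the rows handed to its own process, so a single global total presumably relies on all rows reaching one process.

```python
def sum_lines(lines):
    """Add up one integer per input line, as a streaming sum script would."""
    total = 0
    for line in lines:
        total += int(line.strip())
    return total


total = sum_lines(["1", "2", "3", "2", "3", "4"])  # the rows of tb_sum
print(total)
```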

wordcount

- Counting word frequencies directly in Hive

man sh > sh.txt

hive> desc test;
line string
hive> load data local inpath '/root/test/sh.txt' into table test;
hive> create table word as select word,count(1) as count from (select explode(split(line,' ')) as word from test) w group by word order by word;
hive> select * from word a where a.count >100;
 5829
If 110
a 113
and 128
be 221
command 151
current 110
cursor 105
is 128
of 239
shall 248
the 927
to 172

- Running Python scripts through transform

mapper.py

import sys

for line in sys.stdin:
    words = line.strip().split()
    for word in words:
        print("%s\t1" % word.lower())

reducer.py

import sys

(last_key, last_count) = (None, 0)
for line in sys.stdin:
    (key, count) = line.strip().split("\t")
    # Assumes identical words arrive next to each other, i.e. the input is grouped; cluster by provides this
    if last_key and last_key != key:
        print("%s\t%d" % (last_key, last_count))
        (last_key, last_count) = (key, int(count))
    else:
        last_key = key
        last_count += int(count)
if last_key:
    print("%s\t%d" % (last_key, last_count))

Testing the scripts

ecs-d0b0:~/test # echo 'new new old'|python mapper.py |python reducer.py
new 2
old 1

Running in Hive

-- note the cluster by here: it groups identical words together
from (
from test
select transform(line) using 'python /root/test/mapper.py' as word,count
cluster by word) wc
insert overwrite table word_count
select transform(word,count) using 'python /root/test/reducer.py' as word,count;

hive> select * from word_count where count>100;
a 128
and 128
be 221
command 163
current 110
cursor 105
if 132
in 109
is 128
of 240
shall 248
the 994
to 172

-- use distribute by and sort by in place of cluster by
from (
from test
select transform(line) using 'python /root/test/mapper.py' as word,count
distribute by word sort by word desc) wc
insert overwrite table word_count
select transform(word,count) using 'python /root/test/reducer.py' as word,count;
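The mapper, the grouping step and the reducer can be tried end to end without Hive. The sketch below is an in-memory approximation: `sorted` stands in for `cluster by word`, and the function names are chosen for this example only.

```python
from itertools import groupby


def mapper(lines):
    """Emit (word, 1) for every whitespace-separated word, lower-cased."""
    for line in lines:
        for word in line.strip().split():
            yield (word.lower(), 1)


def reducer(pairs):
    """Sum the counts per word; pairs must already be grouped by word."""
    for key, group in groupby(pairs, key=lambda kv: kv[0]):
        yield (key, sum(count for _, count in group))


def word_count(lines):
    # Sorting by word plays the role of CLUSTER BY: equal words end up adjacent.
    clustered = sorted(mapper(lines), key=lambda kv: kv[0])
    return list(reducer(clustered))


counts = word_count(["new new old"])
print(counts)
```

Skipping the sort would break the reducer in the same way that omitting cluster by would in Hive, since equal words would no longer be adjacent.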

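Custom record formats

Text format

A human-readable text format: delimiters and similar settings can be customized, and the content is easy to inspect with tools such as cat, sed and vi, but it takes up more space.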

sequenceFile

A binary-encoded format that supports compression. Hive can read and write SequenceFiles directly, and dfs -text makes it easy to view a file's content.

RCFile

A column-oriented layout; the table used here is a simple key-value pair.

Example:

hive> select * from test;

11 12

21 22

hive> create table test_col(k int,v int) row format serde 'org.apache.hadoop.hive.serde2.columnar.ColumnarSerDe' stored as inputformat 'org.apache.hadoop.hive.ql.io.RCFileInputFormat' outputformat 'org.apache.hadoop.hive.ql.io.RCFileOutputFormat';

hive> from test insert overwrite table test_col select c1,c2;

hive> select * from test_col;

11 12

21 22

ecs-d0b0:~ # hive --service rcfilecat 'hdfs://localhost:9000/user/hive/warehouse/test_col/000000_0'

11 12

21 22

Custom input format

A custom InputFormat can be used to create a dual table that returns exactly one row, no matter how much data it holds.

package com.hujiawei666.hive;

import org.apache.hadoop.mapred.*;

import java.io.IOException;

/**

* @author hujw

* date: 2019/12/26 9:18

**/

public class DualInputFormat3 implements InputFormat {

@Override

public InputSplit[] getSplits(JobConf jobConf, int i) throws IOException {

InputSplit[] splits=new DualInputSplit[1];

splits[0]=new DualInputSplit();

return splits;

}

@Override

public RecordReader getRecordReader(InputSplit inputSplit, JobConf jobConf, Reporter reporter) throws IOException {

return new DualRecordReader(jobConf, inputSplit);

}

}

package com.hujiawei666.hive;

import org.apache.hadoop.mapred.InputSplit;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

/**

* @author hujw

* date: 2019/12/26 9:20

**/

public class DualInputSplit implements InputSplit {

@Override

public long getLength() throws IOException {

return 1;

}

@Override

public String[] getLocations() throws IOException {

return new String[]{

"localhost"};

}

@Override

public void write(DataOutput dataOutput) throws IOException {

}

@Override

public void readFields(DataInput dataInput) throws IOException {

}

}

package com.hujiawei666.hive;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapred.InputSplit;

import org.apache.hadoop.mapred.JobConf;

import org.apache.hadoop.mapred.RecordReader;

import java.io.IOException;

/**

* @author hujw

* date: 2019/12/26 9:21

**/

public class DualRecordReader implements RecordReader {

boolean hasNext =true;

public DualRecordReader(JobConf jc, InputSplit s) {

}

public DualRecordReader() {

}

/**

* Returns a single row only: the first call yields a row, every later call yields none

* @param o

* @param o2

* @return

* @throws IOException

*/

@Override

public boolean next(Object o, Object o2) throws IOException {

if (hasNext) {

hasNext = false;

return true;

} else {

return hasNext;

}

}

@Override

public Object createKey() {

return new Text("");

}

@Override

public Object createValue() {

return new Text("");

}

@Override

public long getPos() throws IOException {

return 0;

}

@Override

public void close() throws IOException {

}

@Override

public float getProgress() throws IOException {

if (hasNext) {

return 0.0f;

} else {

return 1.0f;

}

}

}

hive> add jar /root/test/myUdf3.jar;

hive> create table dual(fake String) stored as inputformat 'com.hujiawei666.hive.DualInputFormat3' outputformat 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat';

-- insert two rows

hive> insert into dual values("");

hive> insert into dual values("");

-- arithmetic returns exactly one row, with no need for limit

hive> select 1+1 from dual;

2

-- selecting all data shows nothing either

hive> select * from dual;

OK

Time taken: 0.172 seconds, Fetched: 1 row(s)

-- count still reports two

hive> select count(1) from dual;

OK

2

Time taken: 0.153 seconds, Fetched: 1 row(s)
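The one-row behaviour comes entirely from the record reader's next method: it reports a record on the first call and none afterwards. A stripped-down Python model of that state machine (a hypothetical `DualReader` class that only mirrors the `hasNext` flag of DualRecordReader) looks like this:

```python
class DualReader:
    """Model of a reader that yields exactly one record."""

    def __init__(self):
        self.has_next = True

    def next(self):
        # First call: report a record and flip the flag. Later calls: nothing left.
        if self.has_next:
            self.has_next = False
            return True
        return False


reader = DualReader()
results = [reader.next() for _ in range(3)]
print(results)
```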

Filtering logs with regular expressions

Take an excerpt of a log file; for a sample see [Appendix 4](#附录4 日志样例)

The regular expression is:

(\[\w+\]) ([\d+-:\. ]+) (\[[\w\d-]*\]) - ([\.\w+]*) - ([\.\w+]*) - ([=><]*) ( *\w+: )(.*$)

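In Hive, use the regular expression to load the log into a table, one field per column

-- note that the expression here must match what Java would run, so every \ is written as \\ (escaped twice)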

create table tb_log(

level string,

time string,

proc_id string,

class string,

log_class string,

direct string,

method string,

result string

)row format serde 'org.apache.hadoop.hive.contrib.serde2.RegexSerDe'

with serdeproperties(

"input.regex"="(\\[\\w+\\]) ([\\d+-:\\. ]+) (\\[[\\w\\d-]*\\]) - ([\\.\\w+]*) - ([\\.\\w+]*) - ([=><]*) ( *\\w+: )(.*$)",

"output.format.string"="%1$s %2$s %3$s %4$s %5$s %6$s %7$s %8$s"

)

stored as textfile;

load data local inpath '/root/test/test.log' into table tb_log;

hive> select time from tb_log;

2019-11-11 15:03:05.152

2019-11-11 15:03:05.152

2019-11-11 15:03:05.162

...
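The double-escaping rule and the eight capture groups can be checked outside Hive. The sketch below does so in Python under two assumptions: the log line is invented for illustration (the real sample lives in Appendix 4), and Python's `re` module treats this particular pattern the same way as Java's regex engine.

```python
import re

# The pattern exactly as written inside the Hive DDL string (backslashes doubled).
hive_literal = (r"(\\[\\w+\\]) ([\\d+-:\\. ]+) (\\[[\\w\\d-]*\\]) - ([\\.\\w+]*)"
                r" - ([\\.\\w+]*) - ([=><]*) ( *\\w+: )(.*$)")

# One round of unescaping turns each \\ into \, which is what the regex engine sees.
pattern = hive_literal.replace("\\\\", "\\")

# Hypothetical log line, made up for this sketch.
sample = ("[INFO] 2019-11-11 15:03:05.152 [main-1] - com.example.Foo"
          " - com.example.Log - => method: ok")

columns = ["level", "time", "proc_id", "class", "log_class", "direct", "method", "result"]
match = re.match(pattern, sample)
row = dict(zip(columns, match.groups()))
print(row["time"])
```

Each capture group maps to one column of tb_log in order, which is why the table declares exactly eight columns.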

xml

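Examples of the xpath functions:

hive> select xpath('<a><b id="foo">b1</b><b id="bar">b2</b></a>','//@id');

["foo","bar"]

hive> select xpath('<a><b class="bb">b1</b><b>b2</b><b>b3</b><c class="bb">c1</c><c>c2</c></a>','a/*[@class="bb"]/text()');

["b1","c1"]

hive> select xpath_int('<a><b>2</b><c>4</c></a>','a/b+a/c');

6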

6

For XPath syntax see https://www.w3school.com.cn/xpath/xpath_syntax.asp

json

Hive ships with a JSON SerDe; to use it, either add the configuration below or run add jar

/opt/install/hadoop/apache-hive-2.3.6-bin/conf/hive-site.xml

<property>

<name>hive.aux.jars.path</name>

<value>/opt/install/hadoop/apache-hive-2.3.6-bin/lib/hive-hcatalog-core-2.3.6.jar</value>

</property>

{

"name": "tom",

"sex": 1,

"age": 22

}

hs -put test.json /user/testJson/

create external table json_param(

name string,

sex int,

age int

)row format serde 'org.apache.hive.hcatalog.data.JsonSerDe'

with serdeproperties(

"name"="$.name",

"sex"="$.sex",

"age"="$.age"

)location '/user/testJson';

select * from json_param
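Conceptually the SerDe parses each JSON record and picks out the keys that match the table's column names. A minimal Python sketch of that mapping follows; `to_row` is a hypothetical helper, and it assumes one JSON object per line of input, which as far as I know is also what the SerDe expects, so the pretty-printed object above would need to sit on a single line in test.json.

```python
import json


def to_row(json_line, columns=("name", "sex", "age")):
    """Parse one JSON record and return its values in column order."""
    record = json.loads(json_line)
    # Keys missing from the record become None, much like NULL in a table.
    return tuple(record.get(col) for col in columns)


row = to_row('{"name": "tom", "sex": 1, "age": 22}')
print(row)
```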

Appendix 1: Built-in function reference

See: https://blog.csdn.net/TheRa1nMan/article/details/89408718

Math functions

| Return Type | Name (Signature) | Description |
|---|---|---|
| DOUBLE | round(DOUBLE a) | Returns the rounded BIGINT value of a. |
| DOUBLE | round(DOUBLE a, INT d) | Returns a rounded to d decimal places. |
| DOUBLE | bround(DOUBLE a) | Returns the rounded BIGINT value of a using HALF_EVEN rounding mode (as of Hive 1.3.0, 2.0.0). Also known as Gaussian rounding or bankers' rounding: 1 to 4 round down, 6 to 9 round up, and 5 rounds to whichever neighbour is even. Example: bround(2.5) = 2, bround(3.5) = 4. |
| DOUBLE | bround(DOUBLE a, INT d) | Returns a rounded to d decimal places using HALF_EVEN rounding mode (as of Hive 1.3.0, 2.0.0). Example: bround(8.25, 1) = 8.2, bround(8.35, 1) = 8.4. |
| BIGINT | floor(DOUBLE a) | Returns the maximum BIGINT value that is equal to or less than a, i.e. the nearest integer to the left on the number line. Example: floor(6.10) = 6, floor(-3.4) = -4. |
| BIGINT | ceil(DOUBLE a), ceiling(DOUBLE a) | Returns the minimum BIGINT value that is equal to or greater than a. Example: ceil(6) = 6, ceil(6.1) = ceil(6.9) = 7. |
| DOUBLE | rand(), rand(INT seed) | Returns a random number (that changes from row to row) that is distributed uniformly from 0 to 1. Specifying the seed will make sure the generated random number sequence is deterministic. |
| DOUBLE | exp(DOUBLE a), exp(DECIMAL a) | Returns e^a where e is the base of the natural logarithm. Decimal version added in Hive 0.13.0. |
| DOUBLE | ln(DOUBLE a), ln(DECIMAL a) | Returns the natural logarithm of the argument a. Decimal version added in Hive 0.13.0. |
| DOUBLE | log10(DOUBLE a), log10(DECIMAL a) | Returns the base-10 logarithm of the argument a. Decimal version added in Hive 0.13.0. |
| DOUBLE | log2(DOUBLE a), log2(DECIMAL a) | Returns the base-2 logarithm of the argument a. Decimal version added in Hive 0.13.0. |
| DOUBLE | log(DOUBLE base, DOUBLE a), log(DECIMAL base, DECIMAL a) | Returns the base-base logarithm of the argument a. Decimal versions added in Hive 0.13.0. |
| DOUBLE | pow(DOUBLE a, DOUBLE p), power(DOUBLE a, DOUBLE p) | Returns a^p. |
| DOUBLE | sqrt(DOUBLE a), sqrt(DECIMAL a) | Returns the square root of a. Decimal version added in Hive 0.13.0. |
| STRING | bin(BIGINT a) | Returns the number in binary format (see http://dev.mysql.com/doc/refman/5.0/en/string-functions.html#function_bin). |
| STRING | hex(BIGINT a), hex(STRING a), hex(BINARY a) | If the argument is an INT or binary, hex returns the number as a STRING in hexadecimal format. Otherwise if the number is a STRING, it converts each character into its hexadecimal representation and returns the resulting STRING. (See http://dev.mysql.com/doc/refman/5.0/en/string-functions.html#function_hex, BINARY version as of Hive 0.12.0.) |
| BINARY | unhex(STRING a) | Inverse of hex. Interprets each pair of characters as a hexadecimal number and converts to the byte representation of the number. (BINARY version as of Hive 0.12.0, used to return a string.) |
| STRING | conv(BIGINT num, INT from_base, INT to_base), conv(STRING num, INT from_base, INT to_base) | Converts a number from a given base to another (see http://dev.mysql.com/doc/refman/5.0/en/mathematical-functions.html#function_conv). |
| DOUBLE | abs(DOUBLE a) | Returns the absolute value. |
| INT or DOUBLE | pmod(INT a, INT b), pmod(DOUBLE a, DOUBLE b) | Returns the positive value of a mod b. |
| DOUBLE | sin(DOUBLE a), sin(DECIMAL a) | Returns the sine of a (a is in radians). Decimal version added in Hive 0.13.0. |
| DOUBLE | asin(DOUBLE a), asin(DECIMAL a) | Returns the arc sin of a if -1<=a<=1 or NULL otherwise. Decimal version added in Hive 0.13.0. |
| DOUBLE | cos(DOUBLE a), cos(DECIMAL a) | Returns the cosine of a (a is in radians). Decimal version added in Hive 0.13.0. |
| DOUBLE | acos(DOUBLE a), acos(DECIMAL a) | Returns the arccosine of a if -1<=a<=1 or NULL otherwise. Decimal version added in Hive 0.13.0. |
| DOUBLE | tan(DOUBLE a), tan(DECIMAL a) | Returns the tangent of a (a is in radians). Decimal version added in Hive 0.13.0. |
| DOUBLE | atan(DOUBLE a), atan(DECIMAL a) | Returns the arctangent of a. Decimal version added in Hive 0.13.0. |
| DOUBLE | degrees(DOUBLE a), degrees(DECIMAL a) | Converts value of a from radians to degrees. Decimal version added in Hive 0.13.0. |
| DOUBLE | radians(DOUBLE a), radians(DECIMAL a) | Converts value of a from degrees to radians. Decimal version added in Hive 0.13.0. |
| INT or DOUBLE | positive(INT a), positive(DOUBLE a) | Returns a. |
| INT or DOUBLE | negative(INT a), negative(DOUBLE a) | Returns -a. |
| DOUBLE or INT | sign(DOUBLE a), sign(DECIMAL a) | Returns the sign of a as '1.0' (if a is positive) or '-1.0' (if a is negative), '0.0' otherwise. The decimal version returns INT instead of DOUBLE. Decimal version added in Hive 0.13.0. |
| DOUBLE | e() | Returns the value of e. |
| DOUBLE | pi() | Returns the value of pi. |
| BIGINT | factorial(INT a) | Returns the factorial of a (as of Hive 1.2.0). Valid a is [0..20]. |
| DOUBLE | cbrt(DOUBLE a) | Returns the cube root of a double value (as of Hive 1.2.0). |
| INT or BIGINT | shiftleft(TINYINT\|SMALLINT\|INT a, INT b), shiftleft(BIGINT a, INT b) | Bitwise left shift (as of Hive 1.2.0). Shifts a b positions to the left. Returns int for tinyint, smallint and int a. Returns bigint for bigint a. |
| INT or BIGINT | shiftright(TINYINT\|SMALLINT\|INT a, INT b), shiftright(BIGINT a, INT b) | Bitwise right shift (as of Hive 1.2.0). Shifts a b positions to the right. Returns int for tinyint, smallint and int a. Returns bigint for bigint a. |
| INT or BIGINT | shiftrightunsigned(TINYINT\|SMALLINT\|INT a, INT b), shiftrightunsigned(BIGINT a, INT b) | Bitwise unsigned right shift (as of Hive 1.2.0), like >>> in Java. Shifts a b positions to the right. Returns int for tinyint, smallint and int a. Returns bigint for bigint a. |
| T | greatest(T v1, T v2, …) | Returns the greatest value of the list of values (as of Hive 1.1.0). Fixed to return NULL when one or more arguments are NULL, and strict type restriction relaxed, consistent with ">" operator (as of Hive 2.0.0). |
| T | least(T v1, T v2, …) | Returns the least value of the list of values (as of Hive 1.1.0). Fixed to return NULL when one or more arguments are NULL, and strict type restriction relaxed, consistent with "<" operator (as of Hive 2.0.0). |
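The HALF_EVEN rule behind bround is easy to get wrong by intuition, so here is a small Python sketch that reproduces the four examples from the table with the standard `decimal` module. The function name `bround` is reused only for readability, and decimal strings are used as input on purpose, to sidestep binary floating-point representation; this is not a claim about how Hive implements it.

```python
from decimal import Decimal, ROUND_HALF_EVEN


def bround(value, places=0):
    """Round a decimal string half-to-even to the given number of places."""
    quantum = Decimal(1).scaleb(-places)  # 1, 0.1, 0.01, ...
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_EVEN)


examples = [bround("2.5"), bround("3.5"), bround("8.25", 1), bround("8.35", 1)]
print(examples)
```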

Collection functions

| Return Type | Name(Signature) | Description |
|---|---|---|
| int | size(Map<K,V>) | Returns the number of elements in the map type. |
| int | size(Array) | Returns the number of elements in the array type. |
| array | map_keys(Map<K,V>) | Returns an unordered array containing the keys of the input map. |
| array | map_values(Map<K,V>) | Returns an unordered array containing the values of the input map. |
| boolean | array_contains(Array, value) | Returns TRUE if the array contains value, otherwise FALSE. |
| array | sort_array(Array) | Sorts the input array in ascending order according to the natural ordering of the array elements and returns it (as of version 0.9.0). |

Type conversion functions

| Return Type | Name(Signature) | Description |
|---|---|---|
| binary | binary(string\|binary) | Casts the parameter into a binary. |
| `<type>` | `cast(expr as <type>)` | Converts the results of the expression expr to `<type>`. For example, cast('1' as BIGINT) will convert the string '1' to its integral representation. A null is returned if the conversion does not succeed. If cast(expr as boolean) Hive returns true for a non-empty string. |

日期函数

| Return Type | Name(Signature) | Description |

|---|---|---|

| string | from_unixtime(bigint unixtime[, string format]) | Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a string representing the timestamp of that moment in the current system time zone in the format of “1970-01-01 00:00:00”.将时间的秒值转换成format格式(format可为“yyyy-MM-dd hh:mm:ss”,“yyyy-MM-dd hh”,“yyyy-MM-dd hh:mm”等等)如from_unixtime(1250111000,“yyyy-MM-dd”) 得到2009-03-12 |

| bigint | unix_timestamp() | Gets current Unix timestamp in seconds.获取本地时区下的时间戳 |

| bigint | unix_timestamp(string date) | Converts time string in format yyyy-MM-dd HH:mm:ss to Unix timestamp (in seconds), using the default timezone and the default locale, return 0 if fail: unix_timestamp(‘2009-03-20 11:30:01’) = 1237573801将格式为yyyy-MM-dd HH:mm:ss的时间字符串转换成时间戳 如unix_timestamp(‘2009-03-20 11:30:01’) = 1237573801 |

| bigint | unix_timestamp(string date, string pattern) | Convert time string with given pattern (see [http://docs.oracle.com/javase/tutorial/i18n/format/simpleDateFormat.html]) to Unix time stamp (in seconds), return 0 if fail: unix_timestamp(‘2009-03-20’, ‘yyyy-MM-dd’) = 1237532400.将指定时间字符串格式字符串转换成Unix时间戳,如果格式不对返回0 如:unix_timestamp(‘2009-03-20’, ‘yyyy-MM-dd’) = 1237532400 |

| string | to_date(string timestamp) | Returns the date part of a timestamp string: to_date(“1970-01-01 00:00:00”) = “1970-01-01”.返回时间字符串的日期部分 |

| int | year(string date) | Returns the year part of a date or a timestamp string: year(“1970-01-01 00:00:00”) = 1970, year(“1970-01-01”) = 1970.返回时间字符串的年份部分 |

| int | quarter(date/timestamp/string) | Returns the quarter of the year for a date, timestamp, or string in the range 1 to 4 (as of Hive 1.3.0). Example: quarter(‘2015-04-08’) = 2.返回当前时间属性哪个季度 如quarter(‘2015-04-08’) = 2 |

| int | month(string date) | Returns the month part of a date or a timestamp string: month(“1970-11-01 00:00:00”) = 11, month(“1970-11-01”) = 11.返回时间字符串的月份部分 |

| int | day(string date) dayofmonth(date) | Returns the day part of a date or a timestamp string: day(“1970-11-01 00:00:00”) = 1, day(“1970-11-01”) = 1.返回时间字符串的天 |

| int | hour(string date) | Returns the hour of the timestamp: hour(‘2009-07-30 12:58:59’) = 12, hour(‘12:58:59’) = 12.返回时间字符串的小时 |

| int | minute(string date) | Returns the minute of the timestamp.返回时间字符串的分钟 |

| int | second(string date) | Returns the second of the timestamp.返回时间字符串的秒 |

| int | weekofyear(string date) | Returns the week number of a timestamp string: weekofyear(“1970-11-01 00:00:00”) = 44, weekofyear(“1970-11-01”) = 44.返回时间字符串位于一年中的第几个周内 如weekofyear(“1970-11-01 00:00:00”) = 44, weekofyear(“1970-11-01”) = 44 |

| int | datediff(string enddate, string startdate) | Returns the number of days from startdate to enddate: datediff(‘2009-03-01’, ‘2009-02-27’) = 2.计算开始时间startdate到结束时间enddate相差的天数 |

| string | date_add(string startdate, int days) | Adds a number of days to startdate: date_add(‘2008-12-31’, 1) = ‘2009-01-01’.从开始时间startdate加上days |

| string | date_sub(string startdate, int days) | Subtracts a number of days to startdate: date_sub(‘2008-12-31’, 1) = ‘2008-12-30’.从开始时间startdate减去days |

| timestamp | from_utc_timestamp(timestamp, string timezone) | Assumes given timestamp is UTC and converts to given timezone (as of Hive 0.8.0). For example, from_utc_timestamp(‘1970-01-01 08:00:00’,‘PST’) returns 1970-01-01 00:00:00.如果给定的时间戳并非UTC,则将其转化成指定的时区下时间戳 |

| timestamp | to_utc_timestamp(timestamp, string timezone) | Assumes given timestamp is in given timezone and converts to UTC (as of Hive 0.8.0). For example, to_utc_timestamp(‘1970-01-01 00:00:00’,‘PST’) returns 1970-01-01 08:00:00.如果给定的时间戳指定的时区下时间戳,则将其转化成UTC下的时间戳 |

| date | current_date | Returns the current date at the start of query evaluation (as of Hive 1.2.0). All calls of current_date within the same query return the same value.返回当前时间日期 |

| timestamp | current_timestamp | Returns the current timestamp at the start of query evaluation (as of Hive 1.2.0). All calls of current_timestamp within the same query return the same value. |

| string | add_months(string start_date, int num_months) | Returns the date that is num_months after start_date (as of Hive 1.1.0). start_date is a string, date or timestamp. num_months is an integer. The time part of start_date is ignored. If start_date is the last day of the month, or if the resulting month has fewer days than the day component of start_date, the result is the last day of the resulting month. Otherwise, the result has the same day component as start_date. |

| string | last_day(string date) | Returns the last day of the month to which the date belongs (as of Hive 1.1.0). date is a string in the format 'yyyy-MM-dd HH:mm:ss' or 'yyyy-MM-dd'. The time part (HH:mm:ss) of date is ignored. |

| string | next_day(string start_date, string day_of_week) | Returns the first date that is later than start_date and falls on day_of_week (as of Hive 1.2.0). start_date is a string, date or timestamp. day_of_week is the first 2 letters, the first 3 letters, or the full name of the day of the week (e.g. Mo, tue, FRIDAY). The time part of start_date is ignored. Example: next_day('2015-01-14', 'TU') = 2015-01-20, that is, the first Tuesday after 2015-01-14 is 2015-01-20. |

| string | trunc(string date, string format) | Returns the date truncated to the unit specified by format, i.e. the first day of that month or year (as of Hive 1.2.0). Supported formats: MONTH/MON/MM, YEAR/YYYY/YY. Examples: trunc('2015-03-17', 'MM') = 2015-03-01, trunc("2016-06-26", "MM") = 2016-06-01, trunc("2016-06-26", "YY") = 2016-01-01. |

| double | months_between(date1, date2) | Returns the number of months between date1 and date2 (as of Hive 1.2.0). If date1 is later than date2, the result is positive. If date1 is earlier than date2, the result is negative. If date1 and date2 are either the same day of the month or both the last day of their months, the result is always an integer. Otherwise the UDF calculates the fractional portion of the result based on a 31-day month and also considers the difference between the time components of date1 and date2. date1 and date2 can be a date, a timestamp, or a string in the format 'yyyy-MM-dd' or 'yyyy-MM-dd HH:mm:ss'. The result is rounded to 8 decimal places. Example: months_between('1997-02-28 10:30:00', '1996-10-30') = 3.94959677. |

| string | date_format(date/timestamp/string ts, string fmt) | Converts a date/timestamp/string to a string in the format specified by the date format fmt (as of Hive 1.2.0). Supported formats are the Java SimpleDateFormat formats: https://docs.oracle.com/javase/7/docs/api/java/text/SimpleDateFormat.html. The second argument fmt should be a constant. Examples: date_format('2015-04-08', 'y') = '2015', date_format("2016-06-22", "MM-dd") = 06-22. date_format can be used to implement other UDFs, e.g. dayname(date) is date_format(date, 'EEEE') and dayofyear(date) is date_format(date, 'D'). |
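
Several of the date functions above can be tried together in one query. The following is a minimal sketch, assuming Hive 1.2.0 or later (required by trunc, next_day and date_format according to the table) and a version that accepts SELECT without a FROM clause. The column aliases are made up for illustration, and the expected values in the comments are the ones quoted in the table, plus last_day for March 2015.

```sql
-- Date arithmetic and truncation on literal dates.
-- Aliases (days_between, day_after, ...) are illustrative names only.
SELECT
  datediff('2009-03-01', '2009-02-27') AS days_between,
  date_add('2008-12-31', 1)            AS day_after,
  date_sub('2008-12-31', 1)            AS day_before,
  trunc('2015-03-17', 'MM')            AS month_start,
  last_day('2015-03-17')               AS month_end,
  next_day('2015-01-14', 'TU')         AS next_tuesday,
  date_format('2015-04-08', 'y')       AS year_str;
-- Expected single row, in column order:
-- 2, 2009-01-01, 2008-12-30, 2015-03-01, 2015-03-31, 2015-01-20, 2015
```

Against real data, the literals would be replaced by a date or string column and a FROM clause added.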

Conditional functions

| Return Type | Name(Signature) | Description |

|---|---|---|

| T | if(boolean testCondition, T valueTrue, T valueFalseOrNull) | Returns valueTrue when testCondition is true, and valueFalseOrNull otherwise. valueTrue and valueFalseOrNull are of the same generic type T. |

| T | nvl(T value, T default_value) | Returns default_value if value is NULL, otherwise returns value (as of Hive 0.11). |

| T | COALESCE(T v1, T v2, …) | Returns the first v that is not NULL, or NULL if all v's are NULL. Example: COALESCE(NULL, 44, 55) = 44. |

| T | CASE a WHEN b THEN c [WHEN d THEN e]* [ELSE f] END | When a = b, returns c; when a = d, returns e; else returns f. Example: CASE 4 WHEN 5 THEN 5 WHEN 4 THEN 4 ELSE 3 END returns 4. |

| T | CASE WHEN a THEN b [WHEN c THEN d]* [ELSE e] END | When a = true, returns b; when c = true, returns d; else returns e. Examples: CASE WHEN 5>0 THEN 5 WHEN 4>0 THEN 4 ELSE 0 END returns 5; CASE WHEN 5<0 THEN 5 WHEN 4<0 THEN 4 ELSE 0 END returns 0. |

| boolean | isnull( a ) | Returns true if a is NULL and false otherwise. |

| boolean | isnotnull ( a ) | Returns true if a is not NULL and false otherwise. |
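
The conditional functions above are easiest to compare side by side. The query below is a minimal sketch, assuming a Hive version that accepts SELECT without a FROM clause. The aliases and the 'n/a' default are made up for illustration, and the CAST around NULL is only there to give nvl a typed argument.

```sql
-- NULL handling and branching on literal values.
-- Aliases (if_result, nvl_result, ...) are illustrative names only.
SELECT
  if(1 > 0, 'yes', 'no')                              AS if_result,
  nvl(CAST(NULL AS STRING), 'n/a')                    AS nvl_result,
  COALESCE(NULL, 44, 55)                              AS first_non_null,
  CASE 4 WHEN 5 THEN 5 WHEN 4 THEN 4 ELSE 3 END       AS simple_case,
  CASE WHEN 5 > 0 THEN 5 WHEN 4 > 0 THEN 4 ELSE 0 END AS searched_case,
  isnull(NULL)                                        AS is_null,
  isnotnull(NULL)                                     AS is_not_null;
-- Expected single row, in column order:
-- yes, n/a, 44, 4, 5, true, false
```

nvl covers the two-argument case, while COALESCE accepts any number of candidates and returns the first one that is not NULL.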

String functions

| Return Type | Name(Signature) | Description |

|---|---|---|

| int | ascii(string str) | Returns the numeric (ASCII) value of the first character of str. |

| string | base64(binary bin) | Converts the argument from binary to a Base64-encoded string (as of Hive 0.12.0). |

| string | concat(string\|binary A, string\|binary B…) | Returns the string or bytes resulting from concatenating, in order, the strings or bytes passed in as parameters. For example, concat('foo', 'bar') results in 'foobar'. Note that this function can take any number of input strings. |

| `array<struct<string,double>>` | context_ngrams(`array<array<string>>`, `array<string>`, int K, int pf) | Returns the top-k contextual N-grams from a set of tokenized sentences, given a string of "context". Similar to ngrams(), but the subsequences searched for must match a context array specified in advance. See StatisticsAndDataMining for more information. |

| string | concat_ws(string SEP, string A, string B…) | Like concat() above, but joins the arguments with the custom separator SEP. |

| string | concat_ws(string SEP, `array<string>`) | Like concat_ws() above, but takes an array of strings and joins its elements with SEP (as of Hive 0.9.0). |

| string | decode(binary bin, string charset) | Decodes the first argument into a string using the provided character set (one of 'US-ASCII', 'ISO-8859-1', 'UTF-8', 'UTF-16BE', 'UTF-16LE', 'UTF-16'). If either argument is null, the result is also null (as of Hive 0.12.0). |

| binary | encode(string src, string charset) | Encodes the first argument into a BINARY using the provided character set (one of 'US-ASCII', 'ISO-8859-1', 'UTF-8', 'UTF-16BE', 'UTF-16LE', 'UTF-16'). If either argument is null, the result is also null (as of Hive 0.12.0). |

| int | find_in_set(string str, string strList) | Returns the position of the first occurrence of str in strList, where strList is a comma-delimited string. Returns null if either argument is null. Returns 0 if str contains any commas or is not found. For example, find_in_set('ab', 'abc,b,ab,c,def') returns 3. |

| string | format_number(number x, int d) | Formats the number x to a format like '#,###,###.##', rounded to d decimal places, and returns the result as a string. If d is 0, the result is rounded and has no decimal point or fractional part (as of Hive 0.10.0; bug with float types fixed in Hive 0.14.0, decimal type support added in Hive 0.14.0). |

| string | get_json_object(string json_string, string path) | Extracts a JSON object from a JSON string based on the JSON path specified, and returns the JSON string of the extracted object. Returns null if the input JSON string is invalid. NOTE: The JSON path can only contain the characters [0-9a-z_], i.e. no upper-case or special characters. Also, the keys *cannot start with numbers.* This is due to restrictions on Hive column names. |

| boolean | in_file(string str, string filename) | Returns true if the string str appears as an entire line in the file filename. |

| int | instr(string str, string substr) | Returns the position of the first occurrence of substr in str. Returns null if either argument is null and returns 0 if substr cannot be found in str. Note that the position is not zero-based: the first character in str has index 1. |

| int | length(string A) | Returns the length of the string. |

| int | locate(string substr, string str[, int pos]) | Returns the position of the first occurrence of substr in str after position pos. |

| string | lower(string A) lcase(string A) | Returns the string resulting from converting all characters of A to lower case. For example, lower('fOoBaR') results in 'foobar'. |