Reproducing AlexNet with Keras

First, let's reproduce the AlexNet architecture in Keras:

```python
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Dense, Dropout, Flatten

model = Sequential()
# Conv1: 96 filters of 11x11 with stride 4 on a 227x227x3 input
model.add(Conv2D(96, (11, 11), strides=(4, 4), input_shape=(227, 227, 3), padding='valid', activation='relu', kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(3, 3), strides=(2, 2)))
# Conv2: 256 filters of 5x5
model.add(Conv2D(256, (5, 5), strides=(1, 1), padding='same', activation='relu', kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(3, 3), strides=(2, 2)))
# Conv3 to Conv5: 3x3 filters
model.add(Conv2D(384, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='uniform'))
model.add(Conv2D(384, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='uniform'))
model.add(Conv2D(256, (3, 3), strides=(1, 1), padding='same', activation='relu', kernel_initializer='uniform'))
model.add(MaxPooling2D(pool_size=(3, 3), strides=(2, 2)))
# Classifier: two 4096-unit fully connected layers with dropout, then a 1000-way softmax
model.add(Flatten())
model.add(Dense(4096, activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(4096, activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(1000, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='sgd', metrics=['accuracy'])
model.summary()
```

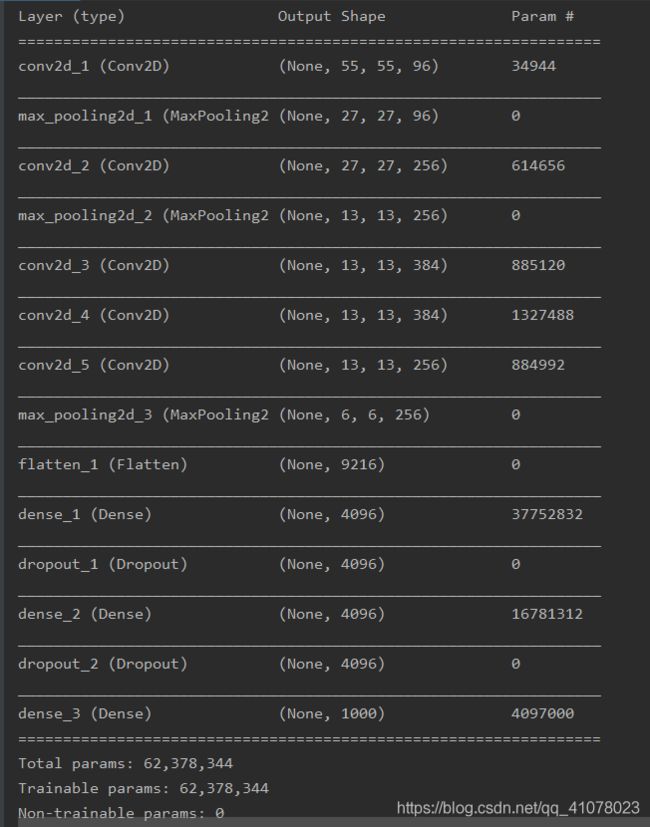

实现后打印的模型结构如下:
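As a check on the summary, the spatial size after each layer can be worked out by hand. With `padding='valid'` (the default for `MaxPooling2D`), a layer with kernel size k and stride s maps a width W to floor((W - k) / s) + 1. With `padding='same'` it produces ceil(W / s). The sketch below traces the 227×227 input through the layers of the `Sequential` model. `out_width` and `trace` are hypothetical helpers written for this illustration, not Keras APIs.

```python
import math


def out_width(width, kernel, stride, padding):
    """Spatial output size of a Conv2D/MaxPooling2D layer (hypothetical helper)."""
    if padding == "valid":
        return (width - kernel) // stride + 1
    if padding == "same":
        return math.ceil(width / stride)
    raise ValueError(f"unknown padding: {padding}")


# (name, kernel, stride, padding, out_channels) copied from the Sequential model;
# pooling layers keep the previous channel count, marked here with None.
LAYERS = [
    ("conv1", 11, 4, "valid", 96),
    ("pool1", 3, 2, "valid", None),
    ("conv2", 5, 1, "same", 256),
    ("pool2", 3, 2, "valid", None),
    ("conv3", 3, 1, "same", 384),
    ("conv4", 3, 1, "same", 384),
    ("conv5", 3, 1, "same", 256),
    ("pool3", 3, 2, "valid", None),
]


def trace(width, channels, layers):
    """Return [(name, width, channels), ...] describing the output of each layer."""
    shapes = []
    for name, kernel, stride, padding, out_ch in layers:
        width = out_width(width, kernel, stride, padding)
        channels = out_ch if out_ch is not None else channels
        shapes.append((name, width, channels))
    return shapes


shapes = trace(227, 3, LAYERS)
for name, w, c in shapes:
    print(f"{name}: {w}x{w}x{c}")
_, w, c = shapes[-1]
print("Flatten:", w * w * c)
```

It prints 55x55x96 after conv1 and 6x6x256 after the last pooling layer. The flattened length is 6 × 6 × 256 = 9216, which is the input size of the first `Dense(4096)` layer.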

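Next, we reproduce the AlexNet paper on CIFAR-10. CIFAR-10 images are only 32×32, so the network below takes a 32×32×3 input and uses `padding='same'` in every convolution and pooling layer. The code is as follows: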

```python
from keras.models import Model
from keras.datasets import cifar10
from keras.utils import to_categorical
from keras.layers import Input, Conv2D, Dense, MaxPooling2D, Dropout, Flatten
from keras import optimizers
from keras.preprocessing.image import ImageDataGenerator
from keras.callbacks import LearningRateScheduler, TensorBoard

batch_size = 64             # 64 images per batch
iterations = 782            # 782 batches per epoch
epochs = 300                # train for 300 epochs in total
num_classes = 10
log_filepath = './alexnet'  # directory where TensorBoard logs are written
DATA_FORMAT = 'channels_last'
```
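The value `iterations=782` is the number of batches of 64 images needed to cover the 50,000 CIFAR-10 training images once. The count is rounded up so that the final, partial batch is included:

```python
import math

num_train = 50000   # size of the CIFAR-10 training set
batch_size = 64

iterations = math.ceil(num_train / batch_size)              # 781.25 rounded up
last_batch = num_train - (iterations - 1) * batch_size      # images left for the final batch
print(iterations, last_batch)  # 782 16
```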

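```python
# Data preprocessing and learning-rate schedule
def color_preprocessing(x_train, x_test):
    """Standardize each RGB channel with a fixed per-channel mean and std."""
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    # Per-channel mean/std of the CIFAR-10 training images on the 0-255 scale
    mean = [125.307, 122.95, 113.865]
    std = [62.9932, 62.0887, 66.7048]
    for i in range(3):  # i indexes the channel (R, G, B)
        x_train[:, :, :, i] = (x_train[:, :, :, i] - mean[i]) / std[i]
        x_test[:, :, :, i] = (x_test[:, :, :, i] - mean[i]) / std[i]
    return x_train, x_test
```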
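`color_preprocessing` standardizes each channel as (x - mean) / std and applies the training-set statistics to `x_test` as well. The pure-Python sketch below shows how such per-channel statistics are computed and then applied, using a toy list of four RGB pixels with made-up values. `channel_stats` and `standardize` are hypothetical helpers. On real data you would compute the statistics once over `x_train`, for example with NumPy.

```python
import math


def channel_stats(pixels):
    """Per-channel mean and population std of a list of (R, G, B) tuples."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    stds = [math.sqrt(sum((p[c] - means[c]) ** 2 for p in pixels) / n) for c in range(3)]
    return means, stds


def standardize(pixels, means, stds):
    """Apply (x - mean) / std channel by channel, as color_preprocessing does."""
    return [tuple((p[c] - means[c]) / stds[c] for c in range(3)) for p in pixels]


# Toy "training set" of four RGB pixels (illustrative values only).
train = [(0, 50, 100), (100, 150, 200), (200, 250, 0), (100, 50, 100)]
means, stds = channel_stats(train)
train_std = standardize(train, means, stds)
print(means)  # [100.0, 125.0, 100.0]
```

After standardization every channel has mean 0 and standard deviation 1. A test set would be standardized with the same `means` and `stds`, not with its own statistics.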

```python
def scheduler(epoch):
    # Step decay: 0.01 for epochs 0-99, 0.001 for 100-199, 0.0001 from epoch 200 on
    if epoch < 100:
        return 0.01
    if epoch < 200:
        return 0.001
    return 0.0001
```
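`scheduler` is a piecewise-constant (step) decay. A slightly more general sketch, the hypothetical `step_decay`, takes the boundaries and rates as data so they are easier to change. It uses the standard-library `bisect` module. The function accepts the current learning rate as an optional second argument and ignores it, so it should also be usable with `LearningRateScheduler` in place of `scheduler`.

```python
from bisect import bisect_right


def step_decay(epoch, lr=None, *, boundaries=(100, 200), values=(0.01, 0.001, 0.0001)):
    """Piecewise-constant learning rate (hypothetical helper).

    values[0] applies for epoch < boundaries[0], values[i] for
    boundaries[i-1] <= epoch < boundaries[i], and values[-1] afterwards.
    The current learning rate `lr` is accepted but ignored.
    """
    if len(values) != len(boundaries) + 1:
        raise ValueError("need exactly one more value than boundaries")
    return values[bisect_right(boundaries, epoch)]


print([step_decay(e) for e in (0, 99, 100, 199, 200, 299)])
# [0.01, 0.01, 0.001, 0.001, 0.0001, 0.0001]
```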

```python
# Load the data
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
y_train = to_categorical(y_train, num_classes=num_classes)  # num_classes: total number of classes
y_test = to_categorical(y_test, num_classes)
x_train, x_test = color_preprocessing(x_train, x_test)
```
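`cifar10.load_data()` returns integer labels from 0 to 9 with shape `(N, 1)`. `to_categorical` turns them into one-hot rows so they match the 10-way softmax output and `categorical_crossentropy`. The pure-Python sketch below makes the same mapping explicit. `one_hot` is a hypothetical helper, not the Keras implementation, and it takes a flat list of labels.

```python
def one_hot(labels, num_classes):
    """Map integer class labels to one-hot lists, like keras.utils.to_categorical."""
    rows = []
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError(f"label {label} out of range for {num_classes} classes")
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows


# CIFAR-10 class 3 is "cat" and class 9 is "truck".
print(one_hot([3, 9], num_classes=10))
```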

```python
# Build the network
def alexnet(img_input, classes=10):
    x = Conv2D(96, (11, 11), strides=(4, 4), padding='same',
               activation='relu', kernel_initializer='uniform')(img_input)
    x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', data_format=DATA_FORMAT)(x)
    x = Conv2D(256, (5, 5), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', data_format=DATA_FORMAT)(x)
    x = Conv2D(384, (3, 3), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    x = Conv2D(384, (3, 3), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    x = Conv2D(256, (3, 3), strides=(1, 1), padding='same',
               activation='relu', kernel_initializer='uniform')(x)
    x = MaxPooling2D(pool_size=(3, 3), strides=(2, 2), padding='same', data_format=DATA_FORMAT)(x)
    x = Flatten()(x)
    x = Dense(4096, activation='relu')(x)
    x = Dropout(0.5)(x)
    x = Dense(4096, activation='relu')(x)
    x = Dropout(0.5)(x)
    out = Dense(classes, activation='softmax')(x)
    return out


img_input = Input(shape=(32, 32, 3))
output = alexnet(img_input)
model = Model(img_input, output)
model.summary()
```
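On 32×32 inputs the same stack shrinks much faster. With `padding='same'`, a Keras convolution or pooling layer outputs ceil(W / s) whatever its kernel size. The 11×11, stride-4 first layer therefore already reduces 32 to 8, and after the last pooling layer the feature map is 1×1×256. `Flatten` then feeds only 256 values into the first 4096-unit `Dense` layer. The sketch below traces the sizes. `same_out` is a hypothetical helper, and the layer list is copied from `alexnet()`.

```python
import math


def same_out(width, stride):
    """Output width of a Conv2D/MaxPooling2D layer with padding='same'."""
    return math.ceil(width / stride)


# (name, stride, out_channels) for the layers in alexnet(); None keeps the previous channels.
LAYERS = [
    ("conv1", 4, 96), ("pool1", 2, None),
    ("conv2", 1, 256), ("pool2", 2, None),
    ("conv3", 1, 384), ("conv4", 1, 384), ("conv5", 1, 256),
    ("pool3", 2, None),
]

width, channels = 32, 3
widths = []
for name, stride, out_ch in LAYERS:
    width = same_out(width, stride)
    channels = out_ch if out_ch is not None else channels
    widths.append(width)
    print(f"{name}: {width}x{width}x{channels}")
print("Flatten:", width * width * channels)
```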

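```python
# momentum: float >= 0. Accelerates SGD in the relevant direction and dampens oscillations.
# nesterov: boolean. Whether to apply Nesterov momentum.
# The initial learning rate is overridden by LearningRateScheduler at the start of every epoch.
sgd = optimizers.SGD(learning_rate=0.1, momentum=0.9, nesterov=True)
model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])
```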
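The optimizer is SGD with Nesterov momentum. The Keras `SGD` documentation describes the `nesterov=True` update as `velocity = momentum * velocity - learning_rate * g` followed by `w = w + momentum * velocity - learning_rate * g`. The scalar pure-Python sketch below isolates that rule on a made-up toy loss f(w) = w², whose gradient is 2w and whose minimum is at w = 0. `sgd_nesterov` is a hypothetical helper, not the Keras implementation.

```python
def sgd_nesterov(grad_fn, w, learning_rate=0.01, momentum=0.9, steps=500):
    """Scalar SGD with Nesterov momentum, following the update rule in the Keras SGD docs."""
    velocity = 0.0
    for _ in range(steps):
        g = grad_fn(w)
        velocity = momentum * velocity - learning_rate * g
        w = w + momentum * velocity - learning_rate * g
    return w


# Toy loss f(w) = w**2 with gradient 2w, starting from w = 1.0.
w_final = sgd_nesterov(lambda w: 2 * w, w=1.0)
print(w_final)  # very close to 0
```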

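```python
# Set up callbacks
# histogram_freq: frequency (in epochs) at which to compute activation and weight
# histograms for the layers of the model. If set to 0, histograms are not computed.
tb_cb = TensorBoard(log_dir=log_filepath, histogram_freq=0)  # visualize training and validation metrics over time
# schedule: a function that takes an epoch index (integer, counted from 0) as input
# and returns a learning rate (float) as output.
change_lr = LearningRateScheduler(scheduler)
cbks = [change_lr, tb_cb]  # passed to fit() during training

# Data augmentation
datagen = ImageDataGenerator(horizontal_flip=True,       # randomly flip images horizontally (probability 0.5)
                             width_shift_range=0.125,    # random horizontal shift, as a fraction of the width
                             height_shift_range=0.125,   # random vertical shift, as a fraction of the height
                             fill_mode='constant', cval=0.)  # 'constant': kkkkkkkk|abcd|kkkkkkkk (cval=k); pixels exposed by the shift are filled with 0
```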
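On 32×32 images, `width_shift_range=0.125` and `height_shift_range=0.125` allow shifts of up to 0.125 × 32 = 4 pixels in each direction. `fill_mode='constant', cval=0.` fills the exposed border with zeros. Keras samples continuous shifts and interpolates. The sketch below simplifies this to integer shifts on a 2-D list, applying the flip first and then the shift. `shift_and_flip` and `random_augment` are hypothetical helpers that illustrate what the augmentation does to an image.

```python
import random


def shift_and_flip(img, dx, dy, flip):
    """Shift a 2-D image (list of rows) by dx columns and dy rows, filling with 0.

    Positive dx moves content right and positive dy moves it down. If flip is
    True, the image is mirrored left-right before shifting. This is an
    integer-shift simplification of ImageDataGenerator, not its implementation.
    """
    h, w = len(img), len(img[0])
    if flip:
        img = [row[::-1] for row in img]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y - dy, x - dx
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = img[src_y][src_x]
    return out


def random_augment(img, shift_range=0.125, rng=random):
    """Random left-right flip (probability 0.5) plus an integer shift of up to shift_range * size."""
    h, w = len(img), len(img[0])
    max_dx, max_dy = int(shift_range * w), int(shift_range * h)
    return shift_and_flip(img,
                          dx=rng.randint(-max_dx, max_dx),
                          dy=rng.randint(-max_dy, max_dy),
                          flip=rng.random() < 0.5)


img = [[1, 2, 3],
       [4, 5, 6]]
print(shift_and_flip(img, dx=1, dy=0, flip=False))  # [[0, 1, 2], [0, 4, 5]]
print(shift_and_flip(img, dx=0, dy=0, flip=True))   # [[3, 2, 1], [6, 5, 4]]
```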

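```python
# Train the model
# fit_generator is deprecated in TensorFlow 2.x Keras; model.fit accepts the generator directly.
model.fit(datagen.flow(x_train, y_train, batch_size=batch_size),
          steps_per_epoch=iterations,
          epochs=epochs,
          callbacks=cbks,
          validation_data=(x_test, y_test))
model.save('alexnet.h5')
```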