Custom Serialization and Deserialization of Arbitrary Objects with the Flink Kafka Connector (KafkaSerializationSchema)

Custom serialization and deserialization with the Flink Kafka connector

Converting objects to and from String yourself for producing and consuming

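Once we have a rough understanding of Flink's kafka-connector and can produce and consume data of types such as String and JSON, the natural next question is how to produce and consume more complex objects, such as a custom object (a POJO class) or even an arbitrary Object.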

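We have seen that sending a String to Kafka is simple, and consuming a String from it is just as simple. So the first idea is to convert the object to a String and to restore the object from that String when we need it. In other words, instead of relying on Kafka's custom serialization and deserialization facilities, we stringify and de-stringify the object ourselves as needed. For example, we can define a toString and a fromString method in the data class. Note that the data class must implement Serializable: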

import com.ververica.flinktraining.exercises.datastream_java.utils.GeoUtils;

import org.joda.time.DateTime;

import org.joda.time.format.DateTimeFormat;

import org.joda.time.format.DateTimeFormatter;

import java.io.Serializable;

import java.util.Locale;

/**

* A TaxiRide is a taxi ride event. There are two types of events, a taxi ride start event and a

* taxi ride end event. The isStart flag specifies the type of the event.

*

* A TaxiRide consists of

* - the rideId of the event which is identical for start and end record

* - the type of the event (start or end)

* - the time of the event

* - the longitude of the start location

* - the latitude of the start location

* - the longitude of the end location

* - the latitude of the end location

* - the passengerCnt of the ride

* - the taxiId

* - the driverId

*

*/

public class TaxiRide implements Comparable<TaxiRide>, Serializable {

private static final DateTimeFormatter timeFormatter =

DateTimeFormat.forPattern("yyyy-MM-dd HH:mm:ss").withLocale(Locale.US).withZoneUTC();

public TaxiRide() {

this.startTime = new DateTime();

this.endTime = new DateTime();

}

public TaxiRide(long rideId, boolean isStart, DateTime startTime, DateTime endTime,

float startLon, float startLat, float endLon, float endLat,

short passengerCnt, long taxiId, long driverId) {

this.rideId = rideId;

this.isStart = isStart;

this.startTime = startTime;

this.endTime = endTime;

this.startLon = startLon;

this.startLat = startLat;

this.endLon = endLon;

this.endLat = endLat;

this.passengerCnt = passengerCnt;

this.taxiId = taxiId;

this.driverId = driverId;

}

public long rideId;

public boolean isStart;

public DateTime startTime;

public DateTime endTime;

public float startLon;

public float startLat;

public float endLon;

public float endLat;

public short passengerCnt;

public long taxiId;

public long driverId;

public String toString() {

StringBuilder sb = new StringBuilder();

sb.append(rideId).append(",");

sb.append(isStart ? "START" : "END").append(",");

sb.append(startTime.toString(timeFormatter)).append(",");

sb.append(endTime.toString(timeFormatter)).append(",");

sb.append(startLon).append(",");

sb.append(startLat).append(",");

sb.append(endLon).append(",");

sb.append(endLat).append(",");

sb.append(passengerCnt).append(",");

sb.append(taxiId).append(",");

sb.append(driverId);

return sb.toString();

}

public static TaxiRide fromString(String line) {

String[] tokens = line.split(",");

if (tokens.length != 11) {

throw new RuntimeException("Invalid record: " + line);

}

TaxiRide ride = new TaxiRide();

try {

ride.rideId = Long.parseLong(tokens[0]);

switch (tokens[1]) {

case "START":

ride.isStart = true;

ride.startTime = DateTime.parse(tokens[2], timeFormatter);

ride.endTime = DateTime.parse(tokens[3], timeFormatter);

break;

case "END":

ride.isStart = false;

ride.endTime = DateTime.parse(tokens[2], timeFormatter);

ride.startTime = DateTime.parse(tokens[3], timeFormatter);

break;

default:

throw new RuntimeException("Invalid record: " + line);

}

ride.startLon = tokens[4].length() > 0 ? Float.parseFloat(tokens[4]) : 0.0f;

ride.startLat = tokens[5].length() > 0 ? Float.parseFloat(tokens[5]) : 0.0f;

ride.endLon = tokens[6].length() > 0 ? Float.parseFloat(tokens[6]) : 0.0f;

ride.endLat = tokens[7].length() > 0 ? Float.parseFloat(tokens[7]) : 0.0f;

ride.passengerCnt = Short.parseShort(tokens[8]);

ride.taxiId = Long.parseLong(tokens[9]);

ride.driverId = Long.parseLong(tokens[10]);

} catch (NumberFormatException nfe) {

throw new RuntimeException("Invalid record: " + line, nfe);

}

return ride;

}

// sort by timestamp,

// putting START events before END events if they have the same timestamp

public int compareTo(TaxiRide other) {

if (other == null) {

return 1;

}

int compareTimes = Long.compare(this.getEventTime(), other.getEventTime());

if (compareTimes == 0) {

if (this.isStart == other.isStart) {

return 0;

}

else {

if (this.isStart) {

return -1;

}

else {

return 1;

}

}

}

else {

return compareTimes;

}

}

@Override

public boolean equals(Object other) {

return other instanceof TaxiRide &&

this.rideId == ((TaxiRide) other).rideId;

}

@Override

public int hashCode() {

return (int)this.rideId;

}

public long getEventTime() {

if (isStart) {

return startTime.getMillis();

}

else {

return endTime.getMillis();

}

}

public double getEuclideanDistance(double longitude, double latitude) {

if (this.isStart) {

return GeoUtils.getEuclideanDistance((float) longitude, (float) latitude, this.startLon, this.startLat);

} else {

return GeoUtils.getEuclideanDistance((float) longitude, (float) latitude, this.endLon, this.endLat);

}

}

}

Once objects and strings can be converted into each other, the data can be transferred directly with Kafka's simplest schema, new SimpleStringSchema():

// get the taxi ride data stream

val rides = env.addSource(rideSourceOrTest(new TaxiRideSource(input, maxDelay, speed)))

val filteredRides = rides

.filter(r => GeoUtils.isInNYC(r.startLon, r.startLat) && GeoUtils.isInNYC(r.endLon, r.endLat))

.map(ride=>{

ride.toString

})

val kafkaSink: FlinkKafkaProducer[String] = new FlinkKafkaProducer[String](

"47.107.X.X:9092",

"TaxiRide",

new SimpleStringSchema()

)

filteredRides.addSink(kafkaSink)

// print the filtered stream

printOrTest(filteredRides)

// run the cleansing pipeline

env.execute("Taxi Ride Cleansing")

The corresponding consumer:

val dataSource = new FlinkKafkaConsumer(

KAFKA_TOPIC,

new SimpleStringSchema(),

properties)

.setStartFromEarliest() // start consuming from the earliest offset

env.addSource(dataSource)

.map(ride=>TaxiRide.fromString(ride))

.print()

.setParallelism(1)

// execute program

env.execute("Flink Streaming—————KafkaSource")

Using a custom KafkaDeserializationSchema to get partition data

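When we use SimpleStringSchema, the result contains only the Kafka value and nothing else. Often we also need the Kafka topic or other metadata, which requires implementing the KafkaDeserializationSchema interface to define the structure of the returned data. The following code makes the Kafka consumer return a ConsumerRecord: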

import org.apache.flink.api.common.typeinfo.TypeHint;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.streaming.connectors.kafka.KafkaDeserializationSchema;
import org.apache.kafka.clients.consumer.ConsumerRecord;

import java.nio.charset.StandardCharsets;

public class ObjDeSerializationSchema implements KafkaDeserializationSchema<ConsumerRecord<String, String>> {

    @Override
    public boolean isEndOfStream(ConsumerRecord<String, String> nextElement) {
        // The Kafka stream is unbounded, so it never ends.
        return false;
    }

    @Override
    public ConsumerRecord<String, String> deserialize(ConsumerRecord<byte[], byte[]> record) throws Exception {
        // Decode key and value explicitly as UTF-8; either of them may be null.
        String key = record.key() == null ? null : new String(record.key(), StandardCharsets.UTF_8);
        String value = record.value() == null ? null : new String(record.value(), StandardCharsets.UTF_8);

        // Copy the metadata (topic, partition, offset, timestamp, ...) into a String-typed record.
        // Note: checksum() and this constructor are deprecated in newer Kafka client versions.
        return new ConsumerRecord<>(
                record.topic(),
                record.partition(),
                record.offset(),
                record.timestamp(),
                record.timestampType(),
                record.checksum(),
                record.serializedKeySize(),
                record.serializedValueSize(),
                key,
                value);
    }

    @Override
    public TypeInformation<ConsumerRecord<String, String>> getProducedType() {
        return TypeInformation.of(new TypeHint<ConsumerRecord<String, String>>() {});
    }
}

Using a custom KafkaDeserializationSchema to get the corresponding custom data class

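The previous section showed how to obtain partition and other metadata through a custom KafkaDeserializationSchema. In the same way, the following schema returns instances of your own data class directly, without converting them in a map function: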

import com.ververica.flinktraining.exercises.datastream_java.datatypes.TaxiRide;
import org.apache.flink.api.common.typeinfo.TypeHint;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.streaming.connectors.kafka.KafkaDeserializationSchema;
import org.apache.kafka.clients.consumer.ConsumerRecord;

import java.nio.charset.StandardCharsets;

public class TaxirideDeserializationSchema implements KafkaDeserializationSchema<TaxiRide> {

    @Override
    public boolean isEndOfStream(TaxiRide ride) {
        // The Kafka stream is unbounded, so it never ends.
        return false;
    }

    @Override
    public TaxiRide deserialize(ConsumerRecord<byte[], byte[]> record) throws Exception {
        // partition(), offset(), timestamp(), key() etc. are also available on the record if needed.
        if (record.value() == null) {
            // A record without a value cannot be parsed; returning null here
            // assumes the consumer skips such records.
            return null;
        }
        // Decode the CSV line as UTF-8 and reuse the parsing logic defined in TaxiRide.fromString,
        // which throws a RuntimeException for malformed lines.
        String line = new String(record.value(), StandardCharsets.UTF_8);
        return TaxiRide.fromString(line);
    }

    @Override
    public TypeInformation<TaxiRide> getProducedType() {
        return TypeInformation.of(new TypeHint<TaxiRide>() {});
    }
}

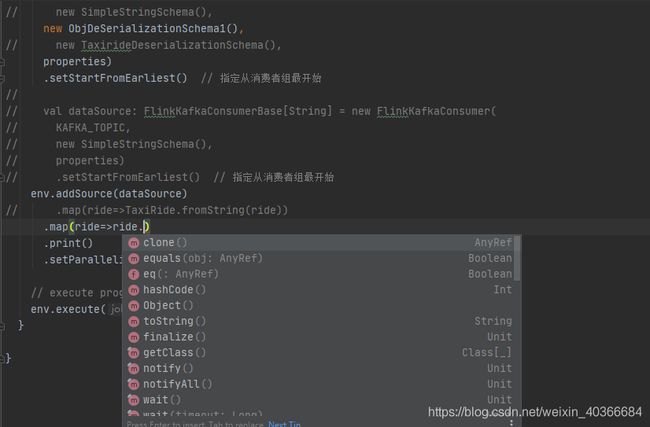

How it is used in the corresponding consumer:

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.api.java.utils.ParameterTool

import org.apache.flink.runtime.state.filesystem.FsStateBackend

import org.apache.flink.streaming.api.CheckpointingMode

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.connectors.kafka.{

FlinkKafkaConsumer, FlinkKafkaConsumerBase}

import com.ververica.flinktraining.exercises.datastream_java.datatypes.TaxiRide

import com.ververica.flinktraining.exercises.datastream_scala.connect.util.{

ObjDeSerializationSchema, ObjectDecoder, TaxirideDeserializationSchema}

import org.apache.kafka.clients.consumer.ConsumerRecord

/**

* @author Do

* @Date 2020/4/14 23:25

*/

object TestConsumer {

//test02: XXXX test1:Taxiride2byte TaxiRide:(XXX,XXX)

private val KAFKA_TOPIC: String = "TaxiRide"

def main(args: Array[String]) {

val params: ParameterTool = ParameterTool.fromArgs(args)

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

// // exactly-once semantics guarantee end-to-end data consistency within the application

// env.getCheckpointConfig.setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE)

// // enable checkpointing with an interval of 5s

// env.enableCheckpointing(5000) // checkpoint every 5000 msecs

// // set the StateBackend and the location where state data is stored

// env.setStateBackend(new FsStateBackend("file:///D:/Temp/checkpoint/flink/KafkaSource"))

val properties: Properties = new Properties()

properties.setProperty("bootstrap.servers", "47.107.X.X:9092")

properties.setProperty("group.id", "RideExercise")

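// Note: FlinkKafkaConsumer reads raw bytes and ignores these two settings; decoding is done by the deserialization schema passed below.

properties.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")

properties.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer")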

val dataSource = new FlinkKafkaConsumer(

KAFKA_TOPIC,

// new SimpleStringSchema(),

new TaxirideDeserializationSchema(),

properties)

.setStartFromEarliest() // start from the earliest offset (committed group offsets are ignored)

env.addSource(dataSource)

// check that records were converted to the expected object

.map(ride=>ride.driverId)

.print()

.setParallelism(1)

// execute program

env.execute("Flink Streaming—————KafkaSource")

}

}

Custom production of object data with KafkaSerializationSchema

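The POJO class can now be converted in both directions through Kafka, but note that when producing data, the TaxiRide instance is still converted to a string for serialization,

6317,START,2013-01-01 00:18:00,1970-01-01 00:00:00,-73.9633,40.766083,-74.008865,40.710953,1,2013005501,2013005498

and the TaxiRide object is then deserialized from that string on consumption. Is there a general way to produce and consume objects as data? We can convert the object to byte[] in the serialization schema and convert it back in the deserialization schema. Note that converting between byte[] and String along the way can fail with java.io.StreamCorruptedException: invalid stream header: EFBFBDEF. This happens because calling toString on the ByteArrayOutputStream decodes the bytes as text, and the first two bytes that ObjectOutputStream writes at the head of the object stream, (0xac)(0xed), are not valid characters. They are replaced by substitute characters: "?" in some charsets, which getBytes() turns into (0x3f)(0x3f), or U+FFFD in UTF-8, which getBytes() turns into (0xef)(0xbf)(0xbd), the bytes shown in the error message. Either way, the result is no longer a valid object stream header, so restoring the object fails. We should avoid this unnecessary conversion.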
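The header corruption described above can be reproduced without Kafka or Flink. The following is a minimal sketch using only the JDK; the class name StreamHeaderDemo and its helper methods are hypothetical and exist only for illustration, and UTF-8 is assumed as the charset of the String round trip.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.StreamCorruptedException;
import java.nio.charset.StandardCharsets;

public class StreamHeaderDemo {

    /** Java-serializes a value to a byte array. */
    public static byte[] serialize(Object value) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bos)) {
            out.writeObject(value);
        }
        return bos.toByteArray();
    }

    /** Pushes the bytes through a UTF-8 String and back (the conversion to avoid). */
    public static byte[] viaString(byte[] raw) {
        return new String(raw, StandardCharsets.UTF_8).getBytes(StandardCharsets.UTF_8);
    }

    /** Returns true if the bytes can still be read back as an object stream. */
    public static boolean readable(byte[] bytes) throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            in.readObject();
            return true;
        } catch (StreamCorruptedException e) {
            // Thrown when the stream header is not 0xACED.
            return false;
        }
    }

    /** Formats the first n bytes as upper-case hex. */
    public static String hex(byte[] bytes, int n) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < n; i++) {
            sb.append(String.format("%02X", bytes[i] & 0xFF));
        }
        return sb.toString();
    }

    public static void main(String[] args) throws Exception {
        byte[] raw = serialize("ride-6317");
        byte[] mangled = viaString(raw);
        System.out.println(hex(raw, 4));       // prints ACED0005
        System.out.println(hex(mangled, 4));   // prints EFBFBDEF
        System.out.println(readable(raw));     // prints true
        System.out.println(readable(mangled)); // prints false
    }
}
```

The intact stream starts with the magic bytes AC ED, while the stream that passed through a String starts with EF BF BD EF, the same four bytes reported in the exception message.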

Serialization:

import com.ververica.flinktraining.exercises.datastream_java.datatypes.TaxiRide;
import org.apache.flink.streaming.connectors.kafka.KafkaSerializationSchema;
import org.apache.kafka.clients.producer.ProducerRecord;

import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;

public class ObjSerializationSchema implements KafkaSerializationSchema<TaxiRide> {

    // Target Kafka topic for every record produced by this schema.
    private final String topic;

    public ObjSerializationSchema(String topic) {
        this.topic = topic;
    }

    @Override
    public ProducerRecord<byte[], byte[]> serialize(TaxiRide obj, Long timestamp) {
        // Java-serialize the object straight to byte[], with no intermediate String.
        try (ByteArrayOutputStream bos = new ByteArrayOutputStream();
             ObjectOutputStream os = new ObjectOutputStream(bos)) {
            os.writeObject(obj);
            os.flush();
            return new ProducerRecord<>(topic, bos.toByteArray());
        } catch (IOException e) {
            // Fail loudly instead of silently sending a record with a null value.
            throw new UncheckedIOException("Failed to serialize " + obj, e);
        }
    }
}

Deserialization:

import org.apache.flink.api.common.typeinfo.TypeHint;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.streaming.connectors.kafka.KafkaDeserializationSchema;
import org.apache.kafka.clients.consumer.ConsumerRecord;

import java.io.ByteArrayInputStream;
import java.io.ObjectInputStream;

public class ObjDeSerializationSchema1 implements KafkaDeserializationSchema<Object> {

    @Override
    public boolean isEndOfStream(Object nextElement) {
        // The Kafka stream is unbounded, so it never ends.
        return false;
    }

    @Override
    public Object deserialize(ConsumerRecord<byte[], byte[]> record) throws Exception {
        // partition(), offset(), timestamp(), key() etc. are also available on the record if needed.
        if (record.value() == null) {
            return null;
        }
        // Read the object directly from the raw value bytes, with no intermediate String.
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(record.value()))) {
            return in.readObject();
        } catch (Exception e) {
            // As in the original version, a corrupted record is reported and returned as null.
            e.printStackTrace();
            return null;
        }
    }

    @Override
    public TypeInformation<Object> getProducedType() {
        return TypeInformation.of(new TypeHint<Object>() {});
    }
}
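The byte[] conversion on both sides can be checked locally before wiring it into a producer and a consumer. The following is a minimal sketch with plain Java serialization; the class SerdeRoundTrip, its toBytes and fromBytes helpers, and the simplified Ride class are all hypothetical stand-ins (Ride takes the place of a POJO such as TaxiRide) and are not part of Flink or Kafka.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

public class SerdeRoundTrip {

    /** Hypothetical, simplified stand-in for a POJO such as TaxiRide. */
    public static class Ride implements Serializable {
        private static final long serialVersionUID = 1L;
        public long rideId;
        public boolean isStart;
        public float startLon;

        public Ride(long rideId, boolean isStart, float startLon) {
            this.rideId = rideId;
            this.isStart = isStart;
            this.startLon = startLon;
        }
    }

    /** Serializes any Serializable object to bytes (the producer side). */
    public static byte[] toBytes(Serializable obj) throws IOException {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bos)) {
            out.writeObject(obj);
        }
        return bos.toByteArray();
    }

    /** Restores an object from bytes and checks its type (the consumer side). */
    public static <T> T fromBytes(byte[] bytes, Class<T> type)
            throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            // Class.cast throws ClassCastException if the payload is of another type.
            return type.cast(in.readObject());
        }
    }

    public static void main(String[] args) throws Exception {
        Ride sent = new Ride(6317L, true, -73.9633f);
        Ride received = fromBytes(toBytes(sent), Ride.class);
        System.out.println(received.rideId + "," + received.isStart + "," + received.startLon);
    }
}
```

Because a schema typed as Object hands the downstream operators an untyped value, a checked cast like the one in fromBytes is one way to recover the concrete type before accessing its fields.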

With this, objects are successfully converted in both directions.