<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cn.edu.nwsuaf</groupId>

<artifactId>Flink-Demo</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<!-- Flink version -->

<flink.version>1.9.2</flink.version>

<!-- JDK version -->

<java.version>1.8</java.version>

<!-- Scala binary version (2.11) -->

<scala.binary.version>2.11</scala.binary.version>

<!-- Kafka version (0.11) -->

<kafka.version>0.11.0.0</kafka.version>

<maven.compiler.source>${java.version}</maven.compiler.source>

<maven.compiler.target>${java.version}</maven.compiler.target>

</properties>

<dependencies>

<!-- Apache Flink dependencies -->

<!-- These dependencies are provided, because they should not be packaged into the JAR file. -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-runtime-web -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-runtime-web_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- Add logging framework, to produce console output when running in the IDE. -->

<!-- These dependencies are excluded from the application JAR by default. -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.7</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.17</version>

<scope>runtime</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.projectlombok/lombok -->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>1.18.8</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>${kafka.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.54</version>

</dependency>

<!-- https://mvnrepository.com/artifact/mysql/mysql-connector-java -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.47</version>

</dependency>

</dependencies>

<!-- When you run the Job inside IDEA, this profile adds flink-java and flink-streaming-java with compile scope; it has no effect when you package the JAR. -->

<profiles>

<profile>

<id>add-dependencies-for-IDEA</id>

<activation>

<property>

<name>idea.version</name>

</property>

</activation>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>compile</scope>

</dependency>

</dependencies>

</profile>

</profiles>

<build>

<plugins>

<!-- Java Compiler -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>${java.version}</source>

<target>${java.version}</target>

</configuration>

</plugin>

<!-- Use the maven-shade plugin to build a fat JAR that contains all required dependencies -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.0.0</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<artifactSet>

<excludes>

<exclude>org.apache.flink:force-shading</exclude>

<exclude>com.google.code.findbugs:jsr305</exclude>

<exclude>org.slf4j:*</exclude>

<exclude>log4j:*</exclude>

</excludes>

</artifactSet>

<filters>

<filter>

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<transformers>

<transformer

implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">

<!-- Note: replace this with the main class of your own Job -->

<mainClass>SocketWordCount</mainClass>

</transformer>

</transformers>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>

DROP TABLE IF EXISTS `student`;

CREATE TABLE `student` (

`id` int(11) NOT NULL,

`name` varchar(255) DEFAULT NULL,

`age` int(11) DEFAULT NULL,

`sex` varchar(255) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

package batch.sink.mysql;

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

@Data

@NoArgsConstructor

@AllArgsConstructor

public class Student {

private int id;

private String name;

private int age;

private String sex;

}
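For readers who do not have Lombok set up in their IDE, the following is a rough hand-written sketch of what the three annotations on Student stand for: a no-argument constructor, an all-argument constructor, and the getters, setters, equals, hashCode and toString that @Data generates. The class name PlainStudent is hypothetical and exists only for this illustration; the code Lombok actually generates differs in detail.

```java
import java.util.Objects;

// Hypothetical plain-Java counterpart of the Lombok-annotated Student class.
final class PlainStudent {
    private int id;
    private String name;
    private int age;
    private String sex;

    // Counterpart of @NoArgsConstructor.
    PlainStudent() {
    }

    // Counterpart of @AllArgsConstructor.
    PlainStudent(int id, String name, int age, String sex) {
        this.id = id;
        this.name = name;
        this.age = age;
        this.sex = sex;
    }

    // Getters and setters, as @Data would provide them.
    public int getId() { return id; }
    public void setId(int id) { this.id = id; }
    public String getName() { return name; }
    public void setName(String name) { this.name = name; }
    public int getAge() { return age; }
    public void setAge(int age) { this.age = age; }
    public String getSex() { return sex; }
    public void setSex(String sex) { this.sex = sex; }

    // Value-based equality over all four fields.
    @Override
    public boolean equals(Object o) {
        if (this == o) {
            return true;
        }
        if (!(o instanceof PlainStudent)) {
            return false;
        }
        PlainStudent other = (PlainStudent) o;
        return id == other.id
                && age == other.age
                && Objects.equals(name, other.name)
                && Objects.equals(sex, other.sex);
    }

    @Override
    public int hashCode() {
        return Objects.hash(id, name, age, sex);
    }

    @Override
    public String toString() {
        return "PlainStudent(id=" + id + ", name=" + name + ", age=" + age + ", sex=" + sex + ")";
    }

    public static void main(String[] args) {
        System.out.println(new PlainStudent(1, "zhiwei_1", 20, "0"));
    }
}
```

The getters and setters are what the JDBC source and sink in this article rely on, and the no-argument constructor plus setters are what a JSON library typically needs to rebuild the object.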

- SourceFromMySQL is a custom Source class. It extends RichSourceFunction and implements its open, close, run, and cancel methods:

package batch.sink.mysql;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.source.RichSourceFunction;

import java.sql.*;

public class SourceFromMySQL extends RichSourceFunction<Student> {

private Connection connection = null;

private PreparedStatement ps = null;

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

connection = getConnection();

String sql = "select * from student";

ps = connection.prepareStatement(sql);

}

@Override

public void close() throws Exception {

super.close();

// Close the statement before the connection that created it.

if (ps != null) {

ps.close();

}

if (connection != null) {

connection.close();

}

}

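// Set to false by cancel() so that run() stops emitting rows.

private volatile boolean running = true;

@Override

public void run(SourceContext<Student> ctx) throws Exception {

// try-with-resources closes the ResultSet once all rows are emitted.

try (ResultSet resultSet = ps.executeQuery()) {

while (running && resultSet.next()) {

Student student = new Student();

student.setId(resultSet.getInt("id"));

student.setName(resultSet.getString("name"));

student.setAge(resultSet.getInt("age"));

student.setSex(resultSet.getString("sex"));

ctx.collect(student);

}

}

}

@Override

public void cancel() {

running = false;

}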

private Connection getConnection() throws ClassNotFoundException, SQLException {

String url = "jdbc:mysql://localhost:3306/test?useUnicode=true&characterEncoding=UTF-8";

String user = "root";

String pass = "123456";

// Let failures propagate so that open() fails fast instead of continuing with a null connection.

Class.forName("com.mysql.jdbc.Driver");

return DriverManager.getConnection(url, user, pass);

}

}
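Flink calls cancel() to ask a running source to stop, so run() usually checks a flag that cancel() clears. The sketch below shows that pattern with plain Java only: the Iterator stands in for the JDBC ResultSet and the Consumer stands in for SourceContext.collect. CancellableSourceSketch and its method signatures are assumptions made for illustration, not Flink API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical stand-in for a source function; not part of Flink.
final class CancellableSourceSketch {

    // volatile so that a cancel() from another thread is seen by the loop in run().
    private volatile boolean running = true;

    // Emits rows until the input is exhausted or cancel() has been called.
    void run(Iterator<String> rows, Consumer<String> out) {
        while (running && rows.hasNext()) {
            out.accept(rows.next());
        }
    }

    void cancel() {
        running = false;
    }

    // Cancels the source after two rows and returns what was emitted.
    static List<String> demo() {
        CancellableSourceSketch source = new CancellableSourceSketch();
        List<String> emitted = new ArrayList<>();
        Iterator<String> rows = Arrays.asList("a", "b", "c", "d").iterator();
        source.run(rows, row -> {
            emitted.add(row);
            if (emitted.size() == 2) {
                source.cancel();
            }
        });
        return emitted;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

For a bounded query such as `select * from student` the loop ends on its own once the rows run out, so the flag only matters when the job is cancelled while rows are still being read.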

package batch.sink.mysql;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class FlinkReadFromMySQL {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<Student> source = env.addSource(new SourceFromMySQL());

source.print("Data from MySQL");

env.execute("FlinkReadFromMySQL");

}

}

- Result

- Producing data to Kafka

package batch.sink.mysql;

import com.alibaba.fastjson.JSON;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

import java.util.Random;

public class KafkaUtils {

public static final String broker_list = "localhost:9092";

public static final String topic = "student";

public static void producerData() {

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", broker_list);

properties.setProperty("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

properties.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties);

// Reuse one Random instance instead of creating three per iteration.

Random random = new Random();

while (true) {

int id = random.nextInt(100);

String name = "zhiwei_" + id;

int age = random.nextInt(100);

String sex = String.valueOf(random.nextInt(2));

Student student = new Student(id, name, age, sex);

// Serialize once and reuse the JSON for both the record and the log line.

String json = JSON.toJSONString(student);

ProducerRecord<String, String> stringProducerRecord = new ProducerRecord<>(topic, json);

System.out.println("Sending data: " + json);

kafkaProducer.send(stringProducerRecord);

try {

Thread.sleep(3000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

public static void main(String[] args) {

producerData();

}

}

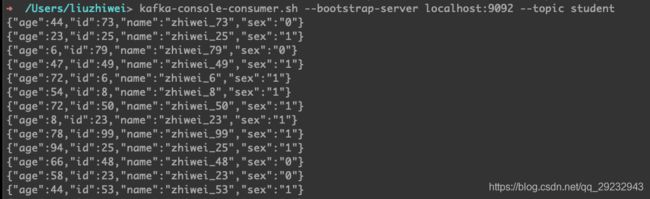

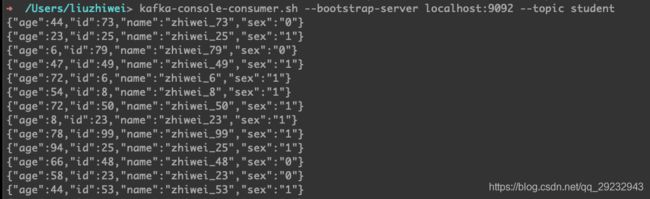
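The producer loop above sleeps between sends and never exits. If it should stop when its thread is interrupted, one common approach is to restore the interrupt flag in the catch block and test it in the loop condition. The following is a minimal sketch under that assumption, with a counter standing in for kafkaProducer.send; the class and method names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration of an interruptible send loop; no Kafka classes involved.
final class InterruptibleLoopSketch {

    // Loops until the current thread is interrupted, counting one "send" per iteration.
    static void sendUntilInterrupted(AtomicInteger sent, long sleepMillis) {
        while (!Thread.currentThread().isInterrupted()) {
            sent.incrementAndGet(); // stand-in for kafkaProducer.send(record)
            try {
                Thread.sleep(sleepMillis);
            } catch (InterruptedException e) {
                // sleep() clears the flag when it throws; set it again so the loop condition sees it.
                Thread.currentThread().interrupt();
            }
        }
    }

    // Starts the loop on a worker thread, interrupts it, and reports whether it stopped.
    static boolean stopsOnInterrupt() {
        AtomicInteger sent = new AtomicInteger();
        Thread worker = new Thread(() -> sendUntilInterrupted(sent, 50));
        worker.start();
        worker.interrupt();
        try {
            worker.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("stopped: " + stopsOnInterrupt());
    }
}
```

In a real producer the same exit path would also be the place to call close() on the KafkaProducer so that buffered records are flushed.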

- Kafka command-line consumer

- Checking the current consumer's consumption status

- Processing the data with Flink

package batch.sink.mysql;

import com.alibaba.fastjson.JSON;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer;

import java.util.Properties;

public class FlinkProcess {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", "localhost:9092");

properties.setProperty("group.id", "student");

properties.setProperty("auto.offset.reset", "earliest");

String topic = "student";

FlinkKafkaConsumer<String> kafkaConsumer = new FlinkKafkaConsumer<>(topic, new SimpleStringSchema(), properties);

DataStreamSource<String> streamSource = env.addSource(kafkaConsumer);

SingleOutputStreamOperator<Student> mapStudent = streamSource.map(new MapFunction<String, Student>() {

@Override

public Student map(String value) throws Exception {

Student student = JSON.parseObject(value, Student.class);

return student;

}

});

mapStudent.print("Consumed student");

mapStudent.addSink(new MySqlSink());

env.execute("FlinkProcess");

}

}

- Consumed data

- Custom deserialization schema for Student

package batch.sink.mysql;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.TypeReference;

import org.apache.flink.api.common.serialization.DeserializationSchema;

import org.apache.flink.api.common.typeinfo.TypeHint;

import org.apache.flink.api.common.typeinfo.TypeInformation;

import java.io.IOException;

import java.nio.charset.StandardCharsets;

public class StudentDeserializationSchema implements DeserializationSchema<Student> {

@Override

public Student deserialize(byte[] message) throws IOException {

// Decode explicitly as UTF-8 rather than with the platform default charset.

return JSON.parseObject(new String(message, StandardCharsets.UTF_8), new TypeReference<Student>() {

});

}

@Override

public boolean isEndOfStream(Student nextElement) {

return false;

}

@Override

public TypeInformation<Student> getProducedType() {

return TypeInformation.of(new TypeHint<Student>() {

});

}

}
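deserialize receives the raw bytes of a Kafka record, and `new String(byte[])` without a charset decodes them with the JVM's platform default. Kafka's StringSerializer encodes as UTF-8 by default, so decoding with an explicit UTF-8 charset keeps non-ASCII names intact on any machine. The following stdlib-only sketch shows the difference; the sample payload is made up and the class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical illustration of charset-aware decoding; no Flink or Kafka classes involved.
final class CharsetDecodeSketch {

    // Decodes a record payload the way a UTF-8 producer encoded it.
    static String decodeUtf8(byte[] message) {
        return new String(message, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Made-up payload with a non-ASCII name, written with Unicode escapes.
        String json = "{\"id\":1,\"name\":\"\u5f20\u4e09\"}";
        byte[] bytes = json.getBytes(StandardCharsets.UTF_8);

        // Round trip with the matching charset preserves the text.
        System.out.println(decodeUtf8(bytes).equals(json));

        // Decoding the same bytes with a different charset garbles the name.
        String wrong = new String(bytes, StandardCharsets.ISO_8859_1);
        System.out.println(wrong.equals(json));
    }
}
```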

package batch.sink.mysql;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.SQLException;

public class MySqlSink extends RichSinkFunction<Student> {

private Connection connection = null;

private PreparedStatement ps = null;

@Override

public void open(Configuration parameters) throws Exception {

super.open(parameters);

connection = getConnection();

String sql = "insert into student (id, name, age, sex) values (?, ?, ?, ?)";

ps = connection.prepareStatement(sql);

}

@Override

public void close() throws Exception {

super.close();

if (ps != null) {

ps.close();

}

if (connection != null) {

connection.close();

}

}

@Override

public void invoke(Student value, Context context) throws Exception {

ps.setInt(1, value.getId());

ps.setString(2, value.getName());

ps.setInt(3, value.getAge());

ps.setString(4, value.getSex());

ps.executeUpdate();

}

private Connection getConnection() throws ClassNotFoundException, SQLException {

String url = "jdbc:mysql://localhost:3306/test?useUnicode=true&characterEncoding=UTF-8";

String user = "root";

String pass = "123456";

// Let failures propagate so that open() fails fast instead of continuing with a null connection.

Class.forName("com.mysql.jdbc.Driver");

return DriverManager.getConnection(url, user, pass);

}

}
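MySqlSink.close() releases the PreparedStatement before the Connection that created it. try-with-resources gives the same ordering automatically, because it closes resources in the reverse order of their declaration. The following sketch uses a hypothetical NamedResource class standing in for the JDBC objects:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of resource close order; no JDBC driver involved.
final class CloseOrderSketch {

    // Stand-in for a JDBC Connection or PreparedStatement that records when it is closed.
    static final class NamedResource implements AutoCloseable {
        private final String name;
        private final List<String> log;

        NamedResource(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override
        public void close() {
            log.add("close " + name);
        }
    }

    // Opens the "connection" first and the "statement" second, then records the close order.
    static List<String> run() {
        List<String> log = new ArrayList<>();
        try (NamedResource connection = new NamedResource("connection", log);
             NamedResource statement = new NamedResource("statement", log)) {
            log.add("insert");
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

In a RichSinkFunction the resources live across many invoke() calls, so they are opened in open() and closed by hand in close(); try-with-resources fits short-lived statements such as a one-off query.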