Notes on Keras (return_sequences) vs. PyTorch (output, (h_n, c_n))

I recently had to port some Keras code to PyTorch. The LSTM part was tricky, so I'm writing down what I learned.

Keras code and explanation

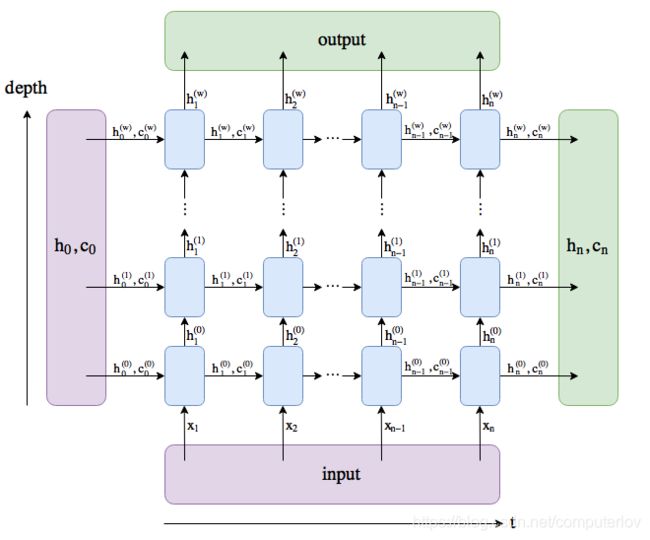

An intuitive diagram of the PyTorch LSTM

The second link may be blocked in mainland China, so I've included the PyTorch diagram here for reference.

----------------------------------------------------------------------------------------------------------------------------------------------------------------------

The Keras code I ran into looked like this (with return_sequences=True):

```python
from keras.models import Model
from keras.layers import Input
from keras.layers import LSTM
from numpy import array

# define model
inputs1 = Input(shape=(3, 1))
lstm1 = LSTM(1, return_sequences=True)(inputs1)
model = Model(inputs=inputs1, outputs=lstm1)

# define input data
data = array([0.1, 0.2, 0.3]).reshape((1, 3, 1))

# make and show prediction
print(model.predict(data))
```

According to an expert on Zhihu, the output should look something like this (the exact values change from run to run because the weights are randomly initialized):

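```
[[[-0.02243521]
  [-0.06210149]
  [-0.11457888]]]
```

That is: 1 sample, 3 timesteps (i.e. the time window), and a feature dimension of 1 (the LSTM has a single unit, so each timestep produces one value).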
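For comparison, here is a minimal PyTorch sketch that feeds the same toy data through `nn.LSTM`. The numbers will not match Keras (both use random initial weights), so only the shapes are meaningful; `output` corresponds to what Keras returns with `return_sequences=True`, while `h_n` and `c_n` are the final hidden and cell states.

```python
import torch
import torch.nn as nn

# Same toy data as the Keras snippet: 1 sample, 3 timesteps, 1 feature
data = torch.tensor([0.1, 0.2, 0.3]).reshape(1, 3, 1)

# One LSTM layer with 1 hidden unit; batch_first=True matches Keras' (batch, timesteps, features)
lstm = nn.LSTM(input_size=1, hidden_size=1, batch_first=True)

with torch.no_grad():
    output, (h_n, c_n) = lstm(data)

print(output.shape)  # torch.Size([1, 3, 1]): one output per timestep
print(h_n.shape)     # torch.Size([1, 1, 1]): (num_layers, batch, hidden_size), not affected by batch_first
print(c_n.shape)     # torch.Size([1, 1, 1])
```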

With return_sequences=True, every timestep produces an output: "many-to-many".

With return_sequences=False, only the output of the last timestep is returned: "many-to-one".
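PyTorch's `nn.LSTM` has no `return_sequences` flag: it always returns the full sequence in `output`, and the many-to-one result is obtained by slicing. A minimal sketch, with a hypothetical random batch and arbitrarily chosen sizes, that also checks that for a unidirectional LSTM the last timestep of `output` equals the top layer's final hidden state `h_n[-1]`:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(4, 15, 1)  # hypothetical batch: 4 samples, 15 timesteps, 1 feature

lstm = nn.LSTM(input_size=1, hidden_size=8, num_layers=2, batch_first=True)
with torch.no_grad():
    output, (h_n, c_n) = lstm(x)

many_to_many = output            # (4, 15, 8), like return_sequences=True
many_to_one = output[:, -1, :]   # (4, 8), like return_sequences=False

# h_n has shape (num_layers, batch, hidden); h_n[-1] is the last layer's final hidden state
print(torch.allclose(many_to_one, h_n[-1]))  # True
```

For a bidirectional LSTM this shortcut does not hold, because the backward direction's final state sits at the first timestep of `output`.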

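For example, in stock trading you look at how a stock behaved over the past 15 days (the timesteps). return_sequences=True means you produce an estimate for each of those 15 days.

return_sequences=False means you look at all 15 days but produce only one estimate, for the last day.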
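The stock example can be sketched in PyTorch as follows. The price series is synthetic and the layer sizes are hypothetical; a `nn.Linear` head applied to every timestep gives the many-to-many result, and applied only to the last timestep it gives the many-to-one result.

```python
import torch
import torch.nn as nn

# Hypothetical daily price series (synthetic, for illustration only)
prices = torch.linspace(10.0, 20.0, steps=100)

window = 15  # look back over 15 days (timesteps)
# Slide a 15-day window over the series: (100,) -> (86, 15) -> (86, 15, 1)
windows = prices.unfold(0, window, 1).unsqueeze(-1).contiguous()

lstm = nn.LSTM(input_size=1, hidden_size=16, batch_first=True)
head = nn.Linear(16, 1)  # maps a hidden state to one predicted value

with torch.no_grad():
    output, _ = lstm(windows)
    per_day = head(output)             # (86, 15, 1): one estimate per day, "many-to-many"
    last_day = head(output[:, -1, :])  # (86, 1): one estimate from the last day, "many-to-one"
```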

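Putting this together, my PyTorch code is below. I wanted a dynamic structure, so lstm_dim is a list giving the number of hidden units in each LSTM layer (at most two layers).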

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LSTMEncoder(nn.Module):
    def __init__(self, input_feature, output_feature, lstm_dim, lstm_dropout):
        super(LSTMEncoder, self).__init__()  # originally LeNetEncoder, the wrong class name
        self.restored = False
        self.num_lstm = len(lstm_dim)  # number of stacked LSTM layers (1 or 2)

        # First LSTM layer; batch_first=True means input is (batch, timesteps, features)
        self.LSTM0 = nn.LSTM(input_size=input_feature,
                             hidden_size=lstm_dim[0],
                             num_layers=1,
                             batch_first=True)
        if self.num_lstm == 2:
            # Second LSTM layer consumes the full output sequence of the first
            self.LSTM1 = nn.LSTM(input_size=lstm_dim[0],
                                 hidden_size=lstm_dim[1],
                                 num_layers=1,
                                 batch_first=True)
        self.Drop = nn.Dropout(p=lstm_dropout)
        # The last LSTM layer's hidden size feeds the dense layer
        self.Dense = nn.Linear(in_features=lstm_dim[-1],
                               out_features=output_feature)

    def forward(self, x):
        # LSTM_out: (batch, timesteps, hidden), i.e. Keras return_sequences=True
        LSTM_out, (h_n0, c_n0) = self.LSTM0(x)
        LSTM_out = self.Drop(LSTM_out)
        if self.num_lstm == 2:
            # Start from a zero state, as a stacked Keras LSTM does; passing
            # (h_n0, c_n0) here fails whenever lstm_dim[0] != lstm_dim[1]
            LSTM_out, (h_n, c_n) = self.LSTM1(LSTM_out)
            LSTM_out = self.Drop(LSTM_out)
        # Keep only the output of the last timestep (Keras return_sequences=False)
        Dense_out = F.relu(self.Dense(LSTM_out[:, -1, :]))
        return Dense_out
```
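Feeding the first LSTM's final state (h_n0, c_n0) into the second LSTM as its initial state only works when both layers share the same hidden size. A small sketch with hypothetical sizes (32 and 16) showing the failure, and showing that omitting the state, so PyTorch starts from zeros as Keras does for stacked layers, works:

```python
import torch
import torch.nn as nn

x = torch.randn(2, 5, 3)  # hypothetical batch: 2 samples, 5 timesteps, 3 features
lstm0 = nn.LSTM(input_size=3, hidden_size=32, batch_first=True)
lstm1 = nn.LSTM(input_size=32, hidden_size=16, batch_first=True)  # different hidden size

out0, (h_n0, c_n0) = lstm0(x)

try:
    # h_n0/c_n0 have shape (1, 2, 32), but lstm1 expects initial states of shape (1, 2, 16)
    lstm1(out0, (h_n0, c_n0))
    state_passing_failed = False
except RuntimeError:
    state_passing_failed = True

# Without an explicit initial state, lstm1 starts from zeros
out1, (h_n1, c_n1) = lstm1(out0)
print(state_passing_failed, out1.shape)  # True torch.Size([2, 5, 16])
```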