[TensorFlow in Practice] Building an AlexNet Model Step by Step to Classify Cats and Dogs

The full project is at: https://github.com/HiAliens/Cat-and-dog

1. Overview

This post walks step by step from data preprocessing and loading through model construction, training, and testing.

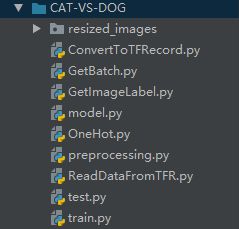

2. Files

The comments in each file describe it in detail; see the GitHub repository linked above.

3. Code

- preprocessing.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import os

import cv2


def resize(src):
    """
    Resize every image under src to 227*227 and save it under ./resized_images2,
    reproducing the last two directory levels of src (for example train/cats).
    :param src: root directory of the images to resize
    :return: None
    """
    success = 0
    fail = 0
    fail_files = []
    new_img_dir = os.path.join(os.getcwd(), 'resized_images2')
    print('Start writing files...')
    for root, dirs, files in os.walk(src):
        for file in files:
            filepath = os.path.join(root, file)
            # Split on the platform separator instead of a hard-coded '\\'.
            filepath_list = os.path.normpath(filepath).split(os.sep)
            try:
                image = cv2.imread(filepath)  # None if the file cannot be decoded
                resized = cv2.resize(image, (227, 227))  # raises when image is None
                class_dir = os.path.join(new_img_dir, filepath_list[-3], filepath_list[-2])
                if not os.path.exists(class_dir):
                    print('Directory {} does not exist, creating it'.format(class_dir))
                    # os.mkdir fails on a nested path; os.makedirs also creates the parents.
                    os.makedirs(class_dir)
                path = os.path.join(class_dir, file)
                if not os.path.exists(path):
                    cv2.imwrite(path, resized)
                    success += 1
            except Exception:
                fail += 1
                fail_files.append(filepath)  # record the source file, which is always defined here
                print(filepath + ' failed')
            if (success + fail) % 500 == 0:
                print('Processed {} files: {} succeeded, {} failed; failed files are listed in fail.txt'.format(success + fail, success, fail))
    # Write the failure list once, one path per line.
    with open('fail.txt', 'w') as f:
        f.write('\n'.join(fail_files))
    print('Wrote {} files in total, {} failed'.format(success, fail))


if __name__ == '__main__':
    path = r'D:\DataSet\kaggle\catdog'
    resize(path)
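The destination folder that `resize` assembles from `filepath_list[-3]` and `filepath_list[-2]` can also be derived with `os.path.relpath`. The sketch below is one possible way to do that, not the project's code: `mirrored_path` is a hypothetical helper, and it assumes that everything below `src` should appear under the output root with the same relative layout.

```python
import os


def mirrored_path(src_root, filepath, dst_root):
    """Return the path under dst_root that mirrors filepath's position under src_root.

    mirrored_path is a hypothetical helper used only for illustration.
    """
    relative = os.path.relpath(filepath, src_root)  # e.g. train/cats/cat.0.jpg
    return os.path.join(dst_root, relative)


# Example: an image two levels below the source root.
example_src = 'catdog'
example_img = os.path.join(example_src, 'train', 'cats', 'cat.0.jpg')
print(mirrored_path(example_src, example_img, 'resized_images2'))
```

Before writing the image, `os.makedirs(os.path.dirname(dst), exist_ok=True)` would create any missing class folder in one call.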

- GetImageLabel.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import os

import numpy as np


def get_file(file_dir):
    '''
    Collect the image paths and labels of a training, validation, or test set.
    file_dir must directly contain the two class folders, cats and dogs.
    :param file_dir: dataset directory
    :return: shuffled list of image paths and the matching list of labels (0 = cat, 1 = dog)
    '''
    images = []
    folders = []
    for root, sub_folders, files in os.walk(file_dir):
        for file in files:
            images.append(os.path.join(root, file))
        for folder in sub_folders:
            folders.append(os.path.join(root, folder))
    labels = []
    for one_folder in folders:
        num_img = len(os.listdir(one_folder))  # number of files in one_folder
        label = os.path.basename(one_folder)  # portable replacement for split('\\')[-1]
        if label == 'cats':
            labels = np.append(labels, num_img * [0])  # np.append returns a flat 1-D array
        else:
            labels = np.append(labels, num_img * [1])
    # shuffle: stack paths and labels into one array so that each path stays paired with its label
    temp = np.array([images, labels])
    temp = temp.transpose()
    '''
    temp before and after transpose() (the labels become strings such as '0.0'):
    [['D:\\DataSet\\kaggle\\small_samples\\test\\cats\\cat.1500.jpg'
    'D:\\DataSet\\kaggle\\small_samples\\test\\cats\\cat.1501.jpg'
    'D:\\DataSet\\kaggle\\small_samples\\test\\cats\\cat.1502.jpg' ...
    'D:\\DataSet\\kaggle\\small_samples\\test\\dogs\\dog.1997.jpg'
    'D:\\DataSet\\kaggle\\small_samples\\test\\dogs\\dog.1998.jpg'
    'D:\\DataSet\\kaggle\\small_samples\\test\\dogs\\dog.1999.jpg']
    ['0.0' '0.0' '0.0' ... '1.0' '1.0' '1.0']]
    [['D:\\DataSet\\kaggle\\small_samples\\test\\cats\\cat.1500.jpg' '0.0']
    ['D:\\DataSet\\kaggle\\small_samples\\test\\cats\\cat.1501.jpg' '0.0']
    ['D:\\DataSet\\kaggle\\small_samples\\test\\cats\\cat.1502.jpg' '0.0']
    ...
    ['D:\\DataSet\\kaggle\\small_samples\\test\\dogs\\dog.1997.jpg' '1.0']
    ['D:\\DataSet\\kaggle\\small_samples\\test\\dogs\\dog.1998.jpg' '1.0']
    ['D:\\DataSet\\kaggle\\small_samples\\test\\dogs\\dog.1999.jpg' '1.0']]
    '''
    np.random.shuffle(temp)  # shuffles the rows in place
    image_list = list(temp[:, 0])
    label_list = list(temp[:, 1])
    label_list = [int(float(i)) for i in label_list]  # '0.0' -> 0, '1.0' -> 1
    return image_list, label_list


if __name__ == '__main__':
    image, label = get_file(r'D:\coding\python\coding-pycharm\opencv+tensorflow\CAT-VS-DOG\resized_images2\test')
    # print(image)
    # print(label)
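`get_file` relies on the folder order returned by `os.walk` to keep paths and labels aligned. A variant that reads the label from each image's own parent folder does not depend on that order. The following is a minimal sketch of that idea using only the standard library; `label_from_path` and `shuffle_together` are hypothetical names, and the `cats` folder name is taken from the code above.

```python
import os
import random


def label_from_path(image_path, cat_dir='cats'):
    """Return 0 if the image sits in the cat_dir folder, otherwise 1."""
    parent = os.path.basename(os.path.dirname(image_path))
    return 0 if parent == cat_dir else 1


def shuffle_together(image_paths, seed=None):
    """Pair every path with its label, shuffle the pairs, and split them again."""
    pairs = [(path, label_from_path(path)) for path in image_paths]
    random.Random(seed).shuffle(pairs)  # a seed makes the order reproducible
    images = [path for path, _ in pairs]
    labels = [label for _, label in pairs]
    return images, labels
```

Because each label travels with its path as a tuple, the two lists cannot drift apart during shuffling.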

- ConvertToTFRecord.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import os

import tensorflow as tf
from skimage import io


def int64_feature(value):
    return tf.train.Feature(int64_list=tf.train.Int64List(value=[value]))


def bytes_feature(value):
    return tf.train.Feature(bytes_list=tf.train.BytesList(value=[value]))


def convert_to_tfrecord(images_list, label_list, save_dir, name):
    filename = os.path.join(save_dir, name + '.tfrecords')  # output path
    num_sample = len(images_list)
    # Which index holds the project name depends on your own path.
    project_name = save_dir.split(os.sep)[5]
    writer = tf.python_io.TFRecordWriter(filename)
    print(f'Converting the {name} set of {project_name}...')
    for i in range(num_sample):
        try:
            image = io.imread(images_list[i])  # must be an array
            image_raw = image.tobytes()  # tostring() is a deprecated alias of tobytes()
            label = int(label_list[i])
            example = tf.train.Example(features=tf.train.Features(feature={
                'label': int64_feature(label),
                'image_raw': bytes_feature(image_raw)
            }))
            writer.write(example.SerializeToString())
        except IOError:
            print(f'Failed to read {images_list[i]}')
    writer.close()
    print(f'Converted the {name} set of {project_name} to {name}.tfrecords')


if __name__ == '__main__':
    import GetImageLabel as getdata

    def run():
        cwd = os.getcwd()
        src_root = os.path.join(cwd, 'resized_images')
        tfr_root = os.path.join(cwd, 'TFRecord')
        print(src_root)
        for split in os.listdir(src_root):  # one sub-folder per dataset split
            save_dir = os.path.join(tfr_root, split)
            if not os.path.exists(save_dir):
                os.makedirs(save_dir)  # also creates ./TFRecord when it is missing
                print(f'Created the {split} folder')
            # Read each split from its own folder; appending every split name
            # to a single src path would point at a directory that does not exist.
            image_list, label_list = getdata.get_file(os.path.join(src_root, split))
            convert_to_tfrecord(image_list, label_list, save_dir, split)

    run()

- ReadDataFromTFR.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import tensorflow as tf


def read_and_decode(tfrecords_file, batch_size, image_size=227):  # defaults to the AlexNet input size
    filename_queue = tf.train.string_input_producer([tfrecords_file])
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)
    img_features = tf.parse_single_example(serialized_example,
                                           features={
                                               'label': tf.FixedLenFeature([], tf.int64),
                                               'image_raw': tf.FixedLenFeature([], tf.string)
                                           })  # parses one image at a time
    image = tf.decode_raw(img_features['image_raw'], tf.uint8)
    image = tf.reshape(image, [image_size, image_size, 3])
    label = tf.cast(img_features['label'], tf.int32)
    image_batch, label_batch = tf.train.shuffle_batch([image, label],
                                                      batch_size=batch_size,
                                                      min_after_dequeue=100,
                                                      num_threads=64,
                                                      capacity=200)
    label_batch = tf.reshape(label_batch, [batch_size])
    return image_batch, label_batch


if __name__ == '__main__':
    import os

    tfr_files = []
    for root, dirs, files in os.walk('./TFRecord'):  # pass an absolute path here if you prefer
        for file in files:
            tfr_files.append(os.path.join(root, file))
    image_batch, label_batch = read_and_decode(tfr_files[0], 32)
    print(image_batch.shape)  # (32, 227, 227, 3)
    print(label_batch.shape)  # (32,)
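The `image_raw` feature holds nothing but raw bytes, so neither the data type nor the shape is stored in the record. That is why `read_and_decode` has to state `tf.uint8` and `[image_size, image_size, 3]` itself. The NumPy-only sketch below imitates the same round trip without TensorFlow; `encode_image` and `decode_image` are hypothetical helper names, and the synthetic array merely stands in for a real 227*227 RGB image.

```python
import numpy as np


def encode_image(image):
    """Flatten an image array to raw bytes, as convert_to_tfrecord does before writing."""
    return image.tobytes()


def decode_image(raw, image_size=227):
    """Rebuild the array; dtype and shape must be supplied because the bytes do not carry them."""
    return np.frombuffer(raw, dtype=np.uint8).reshape(image_size, image_size, 3)


# Synthetic stand-in for an image read from disk.
n_values = 227 * 227 * 3
fake_image = (np.arange(n_values) % 256).astype(np.uint8).reshape(227, 227, 3)
raw_bytes = encode_image(fake_image)
restored = decode_image(raw_bytes)
print(len(raw_bytes), restored.shape)
```

An image that is not exactly 227*227 with 3 channels of `uint8` (a grayscale file, for instance) would make the reshape fail, which is one reason to run the resizing step before the conversion.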

- GetBatch.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
'''
ReadDataFromTFR reads image data that was first converted to TFRecord files. With a large
dataset that conversion takes too long, so this module queues the image paths instead and
reads each image from its path during training.
'''
import tensorflow as tf


def get_batch(image_list, label_list, img_size, batch_size, capacity):
    image = tf.cast(image_list, tf.string)
    label = tf.cast(label_list, tf.int32)
    input_queue = tf.train.slice_input_producer([image, label])
    label = input_queue[1]
    image_contents = tf.read_file(input_queue[0])
    image = tf.image.decode_jpeg(image_contents, channels=3)
    image = tf.image.resize_image_with_crop_or_pad(image, img_size, img_size)
    image = tf.image.per_image_standardization(image)  # standardize each image
    image_batch, label_batch = tf.train.batch([image, label], batch_size=batch_size, num_threads=64, capacity=capacity)
    label_batch = tf.reshape(label_batch, [batch_size])
    return image_batch, label_batch


if __name__ == '__main__':
    import os

    import GetImageLabel

    cwd = os.getcwd()
    # get_file expects the directory that directly holds cats/ and dogs/.
    path = os.path.join(cwd, 'resized_images', 'train')
    image_list, label_list = GetImageLabel.get_file(path)
    image, label = get_batch(image_list, label_list, 227, 32, 200)
    with tf.Session() as sess:
        # The input queues are only filled once the queue runners are started;
        # without them sess.run blocks forever.
        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(sess=sess, coord=coord)
        image2, label2 = sess.run([image, label])
        print(label2)
        coord.request_stop()
        coord.join(threads)
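`tf.image.per_image_standardization` rescales each image to zero mean and unit variance, computing `(x - mean) / adjusted_stddev` with `adjusted_stddev = max(stddev, 1/sqrt(N))`, where `N` is the number of values in the image. The NumPy sketch below reproduces that formula for illustration; `standardize` is a hypothetical name, and it works in float64 rather than TensorFlow's float32, so results agree only up to rounding.

```python
import numpy as np


def standardize(image):
    """NumPy version of the per-image standardization formula."""
    image = np.asarray(image, dtype=np.float64)
    mean = image.mean()
    # The lower bound on the divisor protects against division by zero for uniform images.
    adjusted_stddev = max(image.std(), 1.0 / np.sqrt(image.size))
    return (image - mean) / adjusted_stddev


rng = np.random.RandomState(0)
random_image = rng.randint(0, 256, size=(227, 227, 3)).astype(np.uint8)
standardized = standardize(random_image)
print(standardized.mean(), standardized.std())
```

The same transformation has to be applied at test time, since the network only ever sees standardized inputs during training.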

- OneHot.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import numpy as np


def onehot(labels, num_class=2):
    # Fix the number of classes instead of deriving it from max(labels) + 1:
    # a batch that happens to contain only cats would otherwise yield a single column.
    num_sample = len(labels)
    onehot_labels = np.zeros((num_sample, num_class))
    onehot_labels[np.arange(num_sample), labels] = 1
    return onehot_labels

- model.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import tensorflow as tf

image_size = 227
lr = 1e-4
epoch = 200
batch_size = 50
display_step = 5
num_class = 2
num_fc1 = 4096
num_fc2 = 2048

W_conv = {
    'conv1': tf.Variable(tf.truncated_normal([11, 11, 3, 96], stddev=0.0001)),
    'conv2': tf.Variable(tf.truncated_normal([5, 5, 96, 256], stddev=0.01)),
    'conv3': tf.Variable(tf.truncated_normal([3, 3, 256, 384], stddev=0.01)),
    'conv4': tf.Variable(tf.truncated_normal([3, 3, 384, 384], stddev=0.01)),
    'conv5': tf.Variable(tf.truncated_normal([3, 3, 384, 256], stddev=0.01)),
    'fc1': tf.Variable(tf.truncated_normal([5 * 5 * 256, num_fc1], stddev=0.1)),
    'fc2': tf.Variable(tf.truncated_normal([num_fc1, num_fc2], stddev=0.1)),
    'fc3': tf.Variable(tf.truncated_normal([num_fc2, num_class], stddev=0.1)),
}

b_conv = {
    'conv1': tf.Variable(tf.constant(0.0, dtype=tf.float32, shape=[96])),
    'conv2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[256])),
    'conv3': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[384])),
    'conv4': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[384])),
    'conv5': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[256])),
    'fc1': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[num_fc1])),
    'fc2': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[num_fc2])),
    'fc3': tf.Variable(tf.constant(0.1, dtype=tf.float32, shape=[num_class])),
}


def model(x_image, keep_prob=0.5):
    # keep_prob is the dropout keep probability; pass 1.0 to switch dropout off at inference time.
    conv1 = tf.nn.conv2d(x_image, W_conv['conv1'], strides=[1, 4, 4, 1], padding='VALID')
    conv1 = tf.nn.bias_add(conv1, b_conv['conv1'])
    conv1 = tf.nn.relu(conv1)
    pool1 = tf.nn.avg_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID')
    # pool1.shape: (?, 27, 27, 96)

    conv2 = tf.nn.conv2d(pool1, W_conv['conv2'], strides=[1, 1, 1, 1], padding='SAME')
    conv2 = tf.nn.bias_add(conv2, b_conv['conv2'])
    conv2 = tf.nn.relu(conv2)
    pool2 = tf.nn.avg_pool(conv2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID')
    # pool2.shape: (?, 13, 13, 256)

    conv3 = tf.nn.conv2d(pool2, W_conv['conv3'], strides=[1, 1, 1, 1], padding='SAME')
    conv3 = tf.nn.bias_add(conv3, b_conv['conv3'])
    conv3 = tf.nn.relu(conv3)
    pool3 = tf.nn.avg_pool(conv3, ksize=[1, 3, 3, 1], strides=[1, 1, 1, 1], padding='VALID')
    # pool3.shape: (?, 11, 11, 384)

    conv4 = tf.nn.conv2d(pool3, W_conv['conv4'], strides=[1, 1, 1, 1], padding='SAME')
    conv4 = tf.nn.bias_add(conv4, b_conv['conv4'])
    conv4 = tf.nn.relu(conv4)
    # conv4.shape: (?, 11, 11, 384)

    conv5 = tf.nn.conv2d(conv4, W_conv['conv5'], strides=[1, 1, 1, 1], padding='SAME')
    conv5 = tf.nn.bias_add(conv5, b_conv['conv5'])
    conv5 = tf.nn.relu(conv5)
    pool5 = tf.nn.avg_pool(conv5, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID')
    # pool5.shape: (?, 5, 5, 256)

    reshaped = tf.reshape(pool5, (-1, 5 * 5 * 256))
    fc1 = tf.add(tf.matmul(reshaped, W_conv['fc1']), b_conv['fc1'])
    fc1 = tf.nn.relu(fc1)
    fc1 = tf.nn.dropout(fc1, keep_prob)
    fc2 = tf.add(tf.matmul(fc1, W_conv['fc2']), b_conv['fc2'])
    fc2 = tf.nn.relu(fc2)
    fc2 = tf.nn.dropout(fc2, keep_prob)
    fc3 = tf.add(tf.matmul(fc2, W_conv['fc3']), b_conv['fc3'])  # raw logits, no softmax
    return fc3
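The shapes noted in the comments of `model` follow from TensorFlow's padding rules: with `'VALID'` padding the output size is `floor((n - k) / s) + 1`, and with `'SAME'` padding it is `ceil(n / s)`, where `n` is the input size, `k` the kernel size, and `s` the stride. The short sketch below traces the spatial size through the layers listed above; `out_size`, `trace_sizes`, and the `LAYERS` table are illustrative names, with kernel sizes and strides copied from the model code.

```python
import math


def out_size(n, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    if padding == 'VALID':
        return (n - kernel) // stride + 1
    if padding == 'SAME':
        return math.ceil(n / stride)
    raise ValueError('unknown padding: {}'.format(padding))


# (name, kernel size, stride, padding) for every layer that changes or keeps the spatial size.
LAYERS = [
    ('conv1', 11, 4, 'VALID'),
    ('pool1', 3, 2, 'VALID'),
    ('conv2', 5, 1, 'SAME'),
    ('pool2', 3, 2, 'VALID'),
    ('conv3', 3, 1, 'SAME'),
    ('pool3', 3, 1, 'VALID'),
    ('conv4', 3, 1, 'SAME'),
    ('conv5', 3, 1, 'SAME'),
    ('pool5', 3, 2, 'VALID'),
]


def trace_sizes(input_size, layers):
    """Return a dict mapping each layer name to its output size."""
    sizes = {}
    n = input_size
    for name, kernel, stride, padding in layers:
        n = out_size(n, kernel, stride, padding)
        sizes[name] = n
    return sizes


for layer_name, size in trace_sizes(227, LAYERS).items():
    print(layer_name, size)
```

The final size of 5 is what fixes the first fully connected weight matrix at `5 * 5 * 256` rows; a different input size would require changing that number as well.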

- train.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import tensorflow as tf
import matplotlib.pyplot as plt
import time
import os

# the files written earlier
import GetImageLabel
import GetBatch
import ReadDataFromTFR
import model
import OneHot

image_size = 227
num_class = 2
lr = 0.001
epoch = 2000

x = tf.placeholder(tf.float32, [None, image_size, image_size, 3])
# One-hot labels are fed as floats, the type the cross-entropy op works with.
y = tf.placeholder(tf.float32, [None, num_class])


def train(image_batch, label_batch, val_Xbatch, val_ybatch):
    fc3 = model.model(x)
    loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=fc3, labels=y))
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=lr).minimize(loss)
    correct_pred = tf.equal(tf.argmax(y, 1), tf.argmax(fc3, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))
    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init)
        save_model = './model'
        save_log = './log'
        save_plt = './plt'
        max_acc = 0
        for directory, description in ((save_model, 'model'), (save_log, 'log'), (save_plt, 'loss plot')):
            if not os.path.exists(directory):
                print('The {} directory {} does not exist, creating it...'.format(description, directory))
                os.mkdir(directory)
                print('Created')
        save_model += (os.sep + 'AlexNet.ckpt')
        save_plt += (os.sep + 'Alexnet.png')
        train_writer = tf.summary.FileWriter(save_log, sess.graph)
        saver = tf.train.Saver()
        losses = []
        acc = []
        start_time = time.time()
        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(sess=sess, coord=coord)

        # Each iteration trains on one batch, so `epoch` counts training steps rather than full passes over the data.
        for i in range(epoch):
            image, label = sess.run([image_batch, label_batch])  # note the list: fetch both tensors in one call
            labels = OneHot.onehot(label)
            train_dict = {x: image, y: labels}
            val_image, val_label = sess.run([val_Xbatch, val_ybatch])  # note the list: fetch both tensors in one call
            val_labels = OneHot.onehot(val_label)
            val_dict = {x: val_image, y: val_labels}

            # Run the update and read the loss in a single pass.
            _, loss_record = sess.run([optimizer, loss], feed_dict=train_dict)
            acc_record = sess.run(accuracy, feed_dict=val_dict)
            losses.append(loss_record)
            acc.append(acc_record)
            if acc_record > max_acc:
                max_acc = acc_record

            if i % 100 == 0:
                print('Training, please wait...')
                print('now the loss is {}'.format(loss_record))
                print('now the acc is {}'.format(acc_record))
                end_time = time.time()
                print('running time is {}:'.format(end_time - start_time))
                start_time = end_time
                print('----------step {} is finished----------'.format(i))

        print('The best accuracy is {}'.format(max_acc))
        print('Training finished, saving the model...')
        saver.save(sess, save_model)
        print('Model saved')
        train_writer.close()
        coord.request_stop()
        coord.join(threads)  # wait for the queue-runner threads to exit

    plt.figure(figsize=(10, 4))
    plt.subplot(1, 2, 1)
    plt.plot(losses)
    plt.xlabel('step')
    plt.ylabel('loss')
    plt.subplot(1, 2, 2)
    plt.plot(acc)
    plt.xlabel('step')
    plt.ylabel('acc')
    plt.tight_layout()
    plt.savefig(save_plt, dpi=200)
    plt.show()


if __name__ == '__main__':
    tfrecords = './TFRecord/train/train.tfrecords'
    file_train = './resized_images/train'
    file_val = './resized_images/validation'
    # image_batch, label_batch = ReadDataFromTFR.read_and_decode(tfrecords, 32)
    X_train, y_train = GetImageLabel.get_file(file_train)
    train_Xbatch, train_ybatch = GetBatch.get_batch(X_train, y_train, 227, 64, 200)
    X_val, y_val = GetImageLabel.get_file(file_val)
    val_Xbatch, val_ybatch = GetBatch.get_batch(X_val, y_val, 227, 64, 200)
    train(train_Xbatch, train_ybatch, val_Xbatch, val_ybatch)
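The two quantities tracked during training are the mean softmax cross-entropy over a batch and the fraction of samples whose arg-max prediction matches the label. To make explicit what those graph nodes compute, here is a small NumPy sketch of both; `softmax_cross_entropy` and `batch_accuracy` are hypothetical stand-ins written for illustration, not TensorFlow functions.

```python
import numpy as np


def softmax_cross_entropy(logits, onehot_labels):
    """Mean cross-entropy between softmax(logits) and one-hot labels."""
    logits = np.asarray(logits, dtype=np.float64)
    onehot_labels = np.asarray(onehot_labels, dtype=np.float64)
    # Subtract the row maximum before exponentiating for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    per_sample = -(onehot_labels * log_probs).sum(axis=1)
    return float(per_sample.mean())


def batch_accuracy(logits, onehot_labels):
    """Fraction of rows where the largest logit is at the label's position."""
    predicted = np.argmax(np.asarray(logits), axis=1)
    actual = np.argmax(np.asarray(onehot_labels), axis=1)
    return float((predicted == actual).mean())


# An untrained network that outputs equal scores for both classes.
example_logits = [[0.0, 0.0], [0.0, 0.0]]
example_labels = [[1, 0], [0, 1]]
print(softmax_cross_entropy(example_logits, example_labels))
print(batch_accuracy(example_logits, example_labels))
```

With two classes and equal scores the loss is ln 2 (about 0.693), so a training loss that stays near that value suggests the network has not yet learned anything beyond guessing.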

- test.py

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:Dr.Shang
import tensorflow as tf
from PIL import Image
import numpy as np

import model


def per_class(imagefile):
    image = Image.open(imagefile).convert('RGB')  # force 3 channels so the reshape below cannot fail
    image = image.resize((227, 227))
    image_array = np.array(image)
    image = tf.cast(image_array, tf.float32)
    image = tf.image.per_image_standardization(image)
    image = tf.reshape(image, [1, 227, 227, 3])

    image_size = 227
    x = tf.placeholder(tf.float32, [None, image_size, image_size, 3])
    # model.model(x) applies dropout with keep probability 0.5 unless told otherwise,
    # so the prediction for one image can vary between runs.
    fc3 = model.model(x)

    saver = tf.train.Saver()
    with tf.Session() as sess:
        save_model = tf.train.latest_checkpoint('./model')
        saver.restore(sess, save_model)
        image = sess.run(image)
        prediction = sess.run(fc3, feed_dict={x: image})

    max_index = np.argmax(prediction)
    if max_index == 0:
        return 'cat'
    else:
        return 'dog'


if __name__ == '__main__':
    print(per_class('./resized_images/test/cats/cat.1512.jpg'))
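The test script returns only the name of the highest-scoring class. Because the network outputs raw logits, a softmax turns them into probabilities, which lets the caller see how confident the prediction is. The following pure-Python sketch shows one way to do that; `softmax`, `describe`, and `CLASS_NAMES` are illustrative names, and the index-to-name mapping follows the labels used above (0 = cat, 1 = dog).

```python
import math

CLASS_NAMES = ('cat', 'dog')  # index 0 = cat, index 1 = dog


def softmax(logits):
    """Convert raw scores to probabilities; subtracting the maximum avoids overflow."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def describe(logits):
    """Return (class name, probability) for the highest-scoring class."""
    probs = softmax(logits)
    index = probs.index(max(probs))
    return CLASS_NAMES[index], probs[index]


name, confidence = describe([2.0, 0.0])
print(name, round(confidence, 3))
```

The array returned by `sess.run(fc3, ...)` for a single image has shape `(1, 2)`, so its first row (for example `prediction[0].tolist()`) is what would be passed to `describe`.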