spark基础练习2

文章目录

- 1.谁是最大买“货”?(谁购买的最多,以购买总价为准)

- 2.哪个产品是最大卖货?(哪个产品销售的最多,以产品交易总价为准)

- 3.找出购买的周分布(根据一周分组,查看每天的交易额,分析每天交易量)

- 4.找出购买力最强地域 (根据洲来划分)

- 文件获取链接: https://pan.baidu.com/s/1VjAzYjzN0X8QKdu4pKQBbg 提取码: rknf

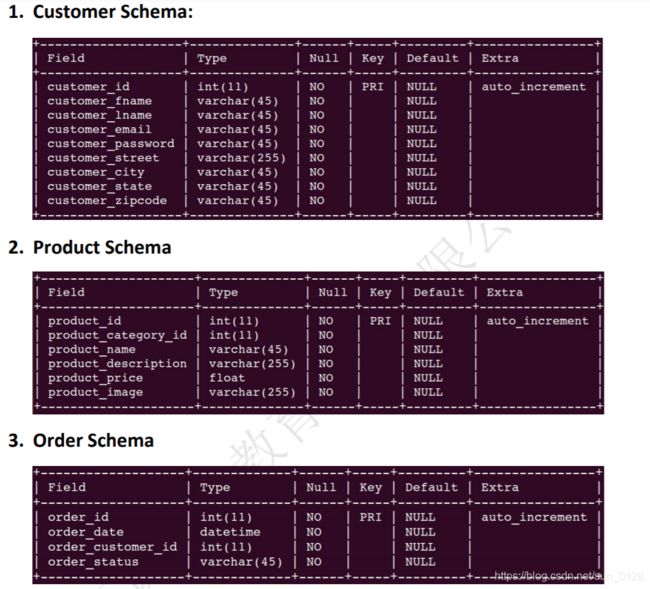

有如下四个csv文件,列属性如下:

#上传到本地

#加载数据成临时表

#1.顾客表

val df1= spark.read.format("csv").option("header","false").option("delimiter",",").load("file:///data/customers.csv").toDF("id","fname","lname","email","password","street","city","state","zipcode")

df1.registerTempTable("customers")

#产品表

val df2= spark.read.format("csv").option("header","false").option("delimiter",",").load("file:///data/products.csv").toDF("pid","pcid","pname","pdesc","price","image")

df2.registerTempTable("product")

#订单表

val df3 = spark.read.format("csv").option("header","false").option("delimiter",",").load("file:///data/orders.csv").toDF("oid","date","cid","status")

df3.registerTempTable("orders")

#订单详细表

val df4 = spark.read.format("csv").option("header","false").option("delimiter",",").load("file:///data/order_items.csv").toDF("iid","oid","pid","num","subtotal","price")

df4.registerTempTable("order_items")

1.谁是最大买“货”?(谁购买的最多,以购买总价为准)

#订单状态需要完成 连 顾客表 订单表 订单详细表 根据 顾客id分组得到 购买总价和最大的人

spark.sqlContext.sql("select c.id ,round(sum(i.subtotal)) s from customers c join orders o join order_items i on c.id = o.cid and o.oid = i.oid where o.status = \"COMPLETE\" group by c.id order by s desc limit 1").show

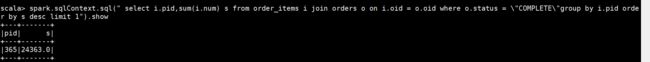

2.哪个产品是最大卖货?(哪个产品销售的最多,以产品交易总价为准)

#订单状态需要完成,订单表和订单详细表 连表 根据 产品id分组 求总价和最大的

spark.sqlContext.sql(" select i.pid,sum(i.num) s from order_items i join orders o on i.oid = o.oid where o.status = \"COMPLETE\"group by i.pid order by s desc limit 1").show

3.找出购买的周分布(根据一周分组,查看每天的交易额,分析每天交易量)

spark.sqlContext.sql("select dayofweek(o.date) d ,sum(i.subtotal) from orders o join order_items i on i.oid=o.oid where o.status = \"COMPLETE\" group by d")

#如果spark版本不支持dayofweek函数

spark.sqlContext.sql("select if(pmod(datediff(o.date, '1920-01-01') - 3, 7)==0,'星期日',concat('星期',pmod(datediff(o.date, '1920-01-01') - 3, 7))) d ,round(sum(i.subtotal)) from orders o join order_items i on i.oid=o.oid where o.status = \"COMPLETE\" group by d").show

4.找出购买力最强地域 (根据洲来划分)

spark.sqlContext.sql("select c.state ,round(sum(i.subtotal)) s from customers c join orders o join order_items i on c.id = o.cid and o.oid = i.oid where o.status = \"COMPLETE\" group by c.state order by s desc limit 1").show