吴恩达机器学习(三)—— ex1:Linear Regression(MATLAB+Python)

- 一、单变量线性回归

-

- 1.1 绘制数据

- 1.2 梯度下降

-

- 1.2.1 更新公式

- 1.2.2 实现

- 1.2.3 计算代价 J ( θ ) J(θ) J(θ)

- 1.2.4 梯度下降

- 1.3 可视化 J ( θ ) J(θ) J(θ)

- 二、多变量线性回归

-

- 2.1 特征归一化

- 2.2 梯度下降

- 2.3 正规方程

- 三、MATLAB实现

-

- 3.1 ex1.m

- 3.2 ex1_multi.m

- 四、Python实现

-

- 4.1 ex1.py

- 4.2 ex1_multi.py

本次练习对应的基础知识总结 → \rightarrow →线性回归。

本次练习对应的文档说明和提供的MATLAB代码 → \rightarrow → 提取码:wg3s 。

本次练习对应的完整代码实现(MATLAB + Python版本) → \rightarrow →Github链接。

一、单变量线性回归

在本次练习的单变量线性回归这一部分中,我们将使用一个变量实现线性回归,以预测食品卡车的利润。假设你是一家餐饮连锁店的首席执行官,并且正在考虑在不同的城市开设新的门店。该连锁店已经在各个城市开了新的分店,并且你有城市的利润和人口数据。你想使用此数据来帮助你选择要扩展到的下一个城市。

文件ex1data1.txt包含我们线性回归问题的数据集。第一列是城市的人口,第二列是该城市的餐车的利润,利润的负值表示亏损。已经设置了ex1.m脚本来加载此数据。

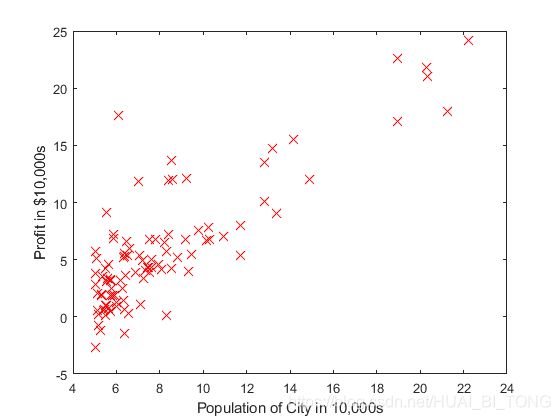

1.1 绘制数据

在开始任何任务之前,通过可视化了解数据通常很有用。对于题目中给的数据集,可以使用散点图来可视化数据,因为它只有两个属性可以绘制(利润和人口)。

在ex1.m中,数据集从数据文件加载到变量X和y中:

data = load('ex1data1.txt'); % read comma separated data

X = data(:, 1); y = data(:, 2);

m = length(y); % number of training examples

接下来,脚本调用plotData函数创建数据的散点图。我们需要完成plotData.m绘制图,修改文件并填写以下代码:

plot(x, y, 'rx', 'MarkerSize', 10); % Plot the data %MarkerSize 表示点的大小,rx表示红色的叉

ylabel('Profit in $10,000s'); % Set the y−axis label

xlabel('Population of City in 10,000s'); % Set the x−axis label

当继续运行ex1.m时,最终结果应如图1所示,带有相同的红色“ x”标记和轴标签。

1.2 梯度下降

在这一部分中,我们将使用梯度下降将线性回归参数 θ 拟合到我们的数据集中。

1.2.1 更新公式

线性回归的目标是最小化代价函数

J ( θ ) = 1 2 m ∑ i = 1 m ( h θ ( x ( i ) ) − y ( i ) ) 2 J(\theta)=\frac{1}{2m}\sum_{i=1}^{m} (h _{\theta}(x^{(i)})-y^{(i)})^{2} J(θ)=2m1i=1∑m(hθ(x(i))−y(i))2

假设函数 h θ ( x ) hθ(x) hθ(x)由线性模型 h θ ( x ) = θ T x = θ 0 + θ 1 x h_{\theta }(x)=\theta ^{T}x=\theta _{0}+\theta _{1}x hθ(x)=θTx=θ0+θ1x 给出。

模型的参数 θ j \theta _{j} θj是需要被调整,从而使代价 J ( θ ) J(θ) J(θ)最小化的值。一种方法是使用批处理梯度下降算法(batch gradient descent algorithm)。在批次梯度下降中,每次迭代都会执行更新

θ j : = θ j − α 1 m ∑ i = 1 m ( h θ ( x ( i ) ) − y ( i ) ) x j ( i ) ( s i m u l t a n e o u s l y u p d a t e θ j f o r a l l j ) \theta _{j}:=\theta _{j}-\alpha \frac{1}{m}\sum_{i=1}^{m} (h _{\theta}(x^{(i)})-y^{(i)})x_{j}^{(i)} \ _{}\ _{} (simultaneously\ _{}\ _{}update\ _{}\ _{}θ_{j}\ _{}\ _{}for\ _{}\ _{}all\ _{}\ _{}j) θj:=θj−αm1i=1∑m(hθ(x(i))−y(i))xj(i) (simultaneously update θj for all j)

随着梯度下降的每一步,参数 θ j θ_{j} θj将接近最佳值,从而实现代价 J ( θ ) J(θ) J(θ)的最低。

1.2.2 实现

在ex1.m中,我们已经设置了用于线性回归的数据。在下面的几行中,考虑到截距项 θ 0 θ_{0} θ0,我们在数据中添加另一个维度。我们还将初始参数初始化为0,将学习率 α α α初始化为0.01。

X = [ones(m, 1), data(:,1)]; % Add a column of ones to x

theta = zeros(2, 1); % initialize fitting parameters

iterations = 1500;

alpha = 0.01;

1.2.3 计算代价 J ( θ ) J(θ) J(θ)

当我们执行梯度下降来最小化代价函数 J ( θ ) J(θ) J(θ)时,通过计算代价来监控收敛是有帮助的。在本节中,我们将实现一个计算 J ( θ ) J(θ) J(θ)的函数,以便可以检查梯度下降实现的收敛性。

我们的下一个任务是完成文件computeCost.m中的代码,该文件是一个计算 J ( θ ) J(θ) J(θ)的函数。

完成computeCost.m时需要填写以下代码:

h = X*theta - y;

J = 1/(2*m) * sum(h.^2);

一旦完成该函数,ex1.m中的下一步将使用初始化为零的 θ θ θ运行一次calculateCost,然后我们将看到打印在屏幕上的成本,应该为32.07。

运行ex1.m得到的结果如下:

With theta = [0 ; 0]

Cost computed = 32.072734

Expected cost value (approx) 32.07

With theta = [-1 ; 2]

Cost computed = 54.242455

Expected cost value (approx) 54.24

1.2.4 梯度下降

接下来,我们将在gradientDescent.m文件中实现梯度下降。题目给出的代码中已经编写了循环结构,我们只需要在每次迭代中为 θ θ θ提供更新。

在编程时,要明确代价 J ( θ ) J(θ) J(θ)是由向量 θ θ θ而不是 X X X和 y y y来参数化。

验证梯度下降是否正常工作的一种好方法是查看 J ( θ ) J(θ) J(θ)的值,并检查其每一步是否在减小。 gradientDescent.m的起始代码在每次迭代时都调用computeCost并打印成本。如果我们正确实现了梯度下降和computeCost,则 J ( θ ) J(θ) J(θ)的值将永远不会增加,并且应在算法结束时收敛为稳定值。

完成gradientDescent.m时需要填写以下代码:

theta = theta - alpha/m*X'*(X*theta - y);

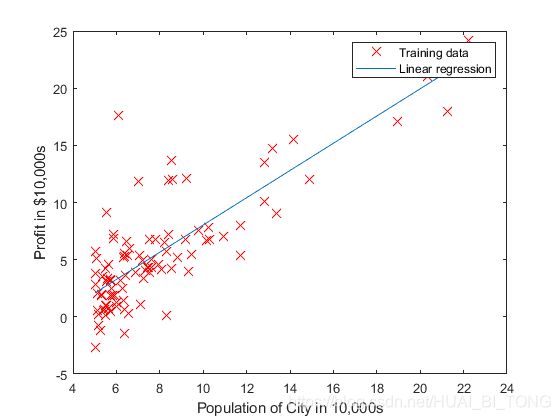

完成后,ex1.m将使用最终参数来绘制线性拟合。结果如图2所示。 θ θ θ的最终值还将用于预测35,000和70,000人区域的利润。

利用使代价 J ( θ ) J(θ) J(θ)最小时的 θ θ θ来预测35,000和70,000人区域的利润,结果如下所示:

For population = 35,000, we predict a profit of 2913.325861

For population = 70,000, we predict a profit of 44607.163473

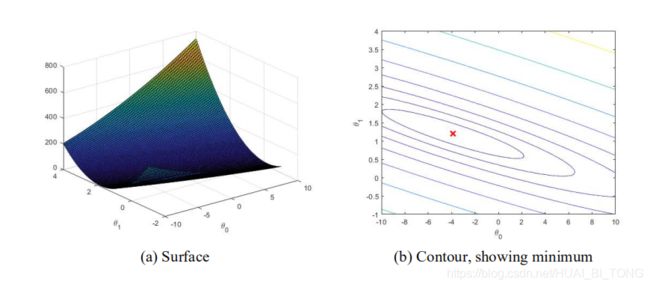

1.3 可视化 J ( θ ) J(θ) J(θ)

为了更好地理解代价函数 J ( θ ) J(θ) J(θ),我们将在 θ 0 θ_{0} θ0和 θ 1 θ_{1} θ1的二维网格值上绘制代价函数曲线。在ex1.m的下一步中,设置了使用我们之前编写的computeCost函数在网格值上计算 J ( θ ) J(θ) J(θ)的代码。

% initialize J vals to a matrix of 0's

J vals = zeros(length(theta0 vals), length(theta1 vals));

% Fill out J vals

for i = 1:length(theta0_vals)

for j = 1:length(theta1_vals)

t = [theta0_vals(i); theta1_vals(j)];

J_vals(i,j) = computeCost(X, y, t);

end

end

执行完这些行后,我们将获得 J ( θ ) J(θ) J(θ)值的二维数组。然后,脚本ex1.m将使用这些值通过surface 和 contour

命令生成 J ( θ ) J(θ) J(θ)的曲面和等高线图,如图3所示。

这些图的目的是向我们展示 J ( θ ) J(θ) J(θ)如何随 θ 0 θ_{0} θ0和 θ 1 θ_{1} θ1的变化而变化。代价函数 J ( θ ) J(θ) J(θ)是碗形的,并且具有全局最小值。该最小值是 θ 0 θ_{0} θ0和 θ 1 θ_{1} θ1的最佳点,并且梯度下降的每一步都在靠近该点。

二、多变量线性回归

在这一部分中,我们将使用多个变量实现线性回归以预测房屋价格。假设你正在出售房屋,并且想知道一个好的市场价格。一种方法是首先收集最近有关出售房屋的信息,并建立房屋价格模型。

文件ex1data2.txt包含某地区房屋价格的训练集。第一列是房屋的大小(以平方英尺为单位),第二列是卧室的数量,第三列是房屋的价格。

已经设置了ex1_multi.m脚本来帮助我们逐步完成此练习。

2.1 特征归一化

ex1_multi.m脚本将首先从此数据集中加载并显示一些值。通过查看这些值,可以发现房屋大小约为卧室数量的1000倍。当特征相差一个数量级时,首先执行特征缩放可以使梯度下降收敛更快。

我们的任务是完成featureNormalize.m中的代码,首先从数据集中减去每个要素的平均值,减去平均值后,然后再将特征值除以它们各自的“标准偏差”。

完成featureNormalize.m时需要填写以下代码:

for i=1:size(X,2)

mu(i)=mean(X(:,i));%求X每一列的均值

sigma(i)=std(X(:,i));%求X每一列的标准差

X_norm(:,i)=(X_norm(:,i)-mu(i))/sigma(i); %归一化处理X=(X-mean)/standard deviation

end

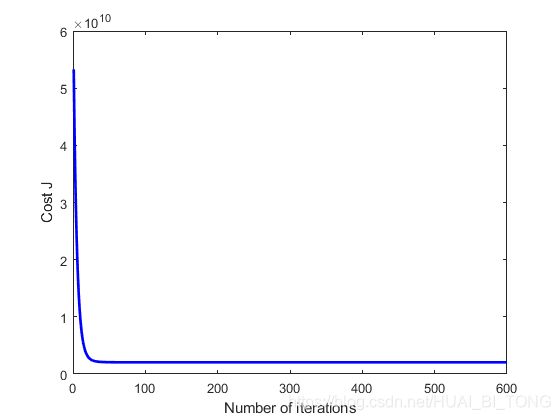

2.2 梯度下降

之前我们是在单变量回归问题上实现梯度下降的,现在唯一的区别是矩阵 X X X中有多于一个的特征,假设函数和批量梯度下降更新规则保持不变。我们应该在computeCostMulti.m和gradientDescentMulti.m中完成代码,以实现具有多个变量的线性回归的成本函数和梯度下降。

完成computeCostMulti.m时需要填写以下代码:

J = 1/(2*m) * (X*theta - y)'* (X*theta - y);

完成gradientDescentMulti.m时需要填写以下代码:

theta = theta - alpha/m*X'*(X*theta - y);

运行ex1_multi.m可以得到 α = 0.1 α=0.1 α=0.1时的梯度下降收敛如图4所示。

学习率为 α = 0.1 α=0.1 α=0.1时得到的最优参数 θ θ θ和预测1650平方英尺、3间卧室的房屋售价结果如下:

Running gradient descent ...

Theta computed from gradient descent:

340412.659574

110631.050279

-6649.474271

Predicted price of a 1650 sq-ft, 3 br house (using gradient descent):

$293081.464335

我们还可以通过修改ex1_multi.m并更改设置学习率的代码尝试不同的学习率,并找到快速收敛的学习率。建议以对数刻度尝试学习率 α α α的值,常取的值有0.3、0.1、0.03、0.01等。

2.3 正规方程

通过求解,我们可以得到正规方程的解为 θ = ( X T X ) − 1 X T y \theta=(X^{T}X)^{-1}X^{T}y θ=(XTX)−1XTy 。

使用此公式不需要任何特征缩放,并且我们将在一次计算中获得确切的解决方案:在梯度下降中没有“直到收敛”的循环。完成normalEqn.m中的代码以使用以上公式计算 θ θ θ。需要注意的是虽然无需缩放特征,但我们仍然需要在 X X X矩阵中添加一列1元素使得具有截距项( θ 0 θ_{0} θ0)。

完成normalEqn.m时需要填写以下代码:

theta = inv(X'*X)*X'*y;

使用正规方程在学习率为 α = 0.1 α=0.1 α=0.1时得到的最优参数 θ θ θ和预测1650平方英尺、3间卧室的房屋售价结果如下:

Solving with normal equations...

Theta computed from the normal equations:

89597.909543

139.210674

-8738.019112

Predicted price of a 1650 sq-ft, 3 br house (using normal equations):

$293081.464335

可以发现使用梯度下降和正规方程得到的最优参数 θ θ θ虽然有所不同,但是和预测1650平方英尺、3间卧室的房屋售价结果是相同的。

三、MATLAB实现

3.1 ex1.m

%% Machine Learning Online Class - Exercise 1: Linear Regression

% Instructions

% ------------

%

% This file contains code that helps you get started on the

% linear exercise. You will need to complete the following functions

% in this exericse:

%

% warmUpExercise.m

% plotData.m

% gradientDescent.m

% computeCost.m

% gradientDescentMulti.m

% computeCostMulti.m

% featureNormalize.m

% normalEqn.m

%

% For this exercise, you will not need to change any code in this file,

% or any other files other than those mentioned above.

%

% x refers to the population size in 10,000s

% y refers to the profit in $10,000s

%

%% Initialization

clear ; close all; clc

%% ==================== Part 1: Basic Function ====================

% Complete warmUpExercise.m

fprintf('Running warmUpExercise ... \n');

fprintf('5x5 Identity Matrix: \n');

warmUpExercise()

fprintf('Program paused. Press enter to continue.\n');

pause;

%% ======================= Part 2: Plotting =======================

fprintf('Plotting Data ...\n')

data = load('ex1data1.txt');% read comma separated data

X = data(:, 1); y = data(:, 2);

m = length(y); % number of training examples

% Plot Data

% Note: You have to complete the code in plotData.m

plotData(X, y);

fprintf('Program paused. Press enter to continue.\n');

pause;

%% =================== Part 3: Cost and Gradient descent ===================

X = [ones(m, 1), data(:,1)]; % Add a column of ones to X %X:mx2

theta = zeros(2, 1); % initialize fitting parameters %theta:2x1

% Some gradient descent settings

iterations = 3000;

alpha = 0.01;

fprintf('\nTesting the cost function ...\n')

% compute and display initial cost

J = computeCost(X, y, theta);

fprintf('With theta = [0 ; 0]\nCost computed = %f\n', J);

fprintf('Expected cost value (approx) 32.07\n');

% further testing of the cost function

J = computeCost(X, y, [-1 ; 2]);

fprintf('\nWith theta = [-1 ; 2]\nCost computed = %f\n', J);

fprintf('Expected cost value (approx) 54.24\n');

fprintf('Program paused. Press enter to continue.\n');

pause;

fprintf('\nRunning Gradient Descent ...\n')

% run gradient descent

[theta, J_history] = gradientDescent(X, y, theta, alpha, iterations);

%验证代价J是否一直下降

% m = length(y); % number of training examples

% J_history = zeros(iterations, 1);

% for iter = 1:iterations

% theta = theta - alpha/m*X'*(X*theta - y);

% J_history(iter) = computeCost(X, y, theta);

% end

% print theta to screen

fprintf('Theta found by gradient descent:\n');

fprintf('%f\n', theta);

fprintf('Expected theta values (approx)\n');

fprintf(' -3.6303\n 1.1664\n\n');

% Plot the linear fit

hold on; % keep previous plot visible

plot(X(:,2), X*theta, '-')

legend('Training data', 'Linear regression')

hold off % don't overlay any more plots on this figure

% Predict values for population sizes of 35,000 and 70,000

predict1 = [1, 3.5] *theta;

fprintf('For population = 35,000, we predict a profit of %f\n',...

predict1*10000);

predict2 = [1, 7] * theta;

fprintf('For population = 70,000, we predict a profit of %f\n',...

predict2*10000);

fprintf('Program paused. Press enter to continue.\n');

pause;

%% ============= Part 4: Visualizing J(theta_0, theta_1) =============

fprintf('Visualizing J(theta_0, theta_1) ...\n')

% Grid over which we will calculate J

theta0_vals = linspace(-10, 10, 100);

theta1_vals = linspace(-1, 4, 100);

% initialize J_vals to a matrix of 0's

J_vals = zeros(length(theta0_vals), length(theta1_vals));

% Fill out J_vals

for i = 1:length(theta0_vals)

for j = 1:length(theta1_vals)

t = [theta0_vals(i); theta1_vals(j)];

J_vals(i,j) = computeCost(X, y, t);

end

end

% Because of the way meshgrids work in the surf command, we need to

% transpose J_vals before calling surf, or else the axes will be flipped

J_vals = J_vals';

% Surface plot

figure;

surf(theta0_vals, theta1_vals, J_vals)

xlabel('\theta_0'); ylabel('\theta_1');

% Contour plot

figure;

% Plot J_vals as 15 contours spaced logarithmically between 0.01 and 100

contour(theta0_vals, theta1_vals, J_vals, logspace(-2, 3, 20))% logspace(a,b,N)把10的a次方到10的b次方区间分成N份 %contour(u,v,z,20)那么它会根据数据的范围画出20条等值线

xlabel('\theta_0'); ylabel('\theta_1');

hold on;

plot(theta(1), theta(2), 'rx', 'MarkerSize', 10, 'LineWidth', 2);

3.2 ex1_multi.m

%% Machine Learning Online Class

% Exercise 1: Linear regression with multiple variables

%

% Instructions

% ------------

%

% This file contains code that helps you get started on the

% linear regression exercise.

%

% You will need to complete the following functions in this

% exericse:

%

% warmUpExercise.m

% plotData.m

% gradientDescent.m

% computeCost.m

% gradientDescentMulti.m

% computeCostMulti.m

% featureNormalize.m

% normalEqn.m

%

% For this part of the exercise, you will need to change some

% parts of the code below for various experiments (e.g., changing

% learning rates).

%

%% Initialization

%% ================ Part 1: Feature Normalization ================

%% Clear and Close Figures

clear ; close all; clc

fprintf('Loading data ...\n');

%% Load Data

data = load('ex1data2.txt');

X = data(:, 1:2);

y = data(:, 3);

m = length(y);

% Print out some data points

fprintf('First 10 examples from the dataset: \n');

fprintf(' x = [%.0f %.0f], y = %.0f \n', [X(1:10,:) y(1:10,:)]');

fprintf('Program paused. Press enter to continue.\n');

pause;

% Scale features and set them to zero mean

fprintf('Normalizing Features ...\n');

[X mu sigma] = featureNormalize(X);

% Add intercept term to X

X = [ones(m, 1) X];

%% ================ Part 2: Gradient Descent ================

% ====================== YOUR CODE HERE ======================

% Instructions: We have provided you with the following starter

% code that runs gradient descent with a particular

% learning rate (alpha).

%

% Your task is to first make sure that your functions -

% computeCost and gradientDescent already work with

% this starter code and support multiple variables.

%

% After that, try running gradient descent with

% different values of alpha and see which one gives

% you the best result.

%

% Finally, you should complete the code at the end

% to predict the price of a 1650 sq-ft, 3 br house.

%

% Hint: By using the 'hold on' command, you can plot multiple

% graphs on the same figure.

%

% Hint: At prediction, make sure you do the same feature normalization.

%

fprintf('Running gradient descent ...\n');

% Choose some alpha value

alpha = 0.1;

num_iters = 600;

% Init Theta and Run Gradient Descent

theta = zeros(3, 1);

[theta, J_history] = gradientDescentMulti(X, y, theta, alpha, num_iters);

% Plot the convergence graph

figure;

plot(1:numel(J_history), J_history, '-b', 'LineWidth', 2);

xlabel('Number of iterations');

ylabel('Cost J');

% Display gradient descent's result

fprintf('Theta computed from gradient descent: \n');

fprintf(' %f \n', theta);

fprintf('\n');

% Estimate the price of a 1650 sq-ft, 3 br house

% ====================== YOUR CODE HERE ======================

% Recall that the first column of X is all-ones. Thus, it does

% not need to be normalized.

price = 0; % You should change this

price = [1,([1650,3]-mu)./sigma]*theta;

% ============================================================

fprintf(['Predicted price of a 1650 sq-ft, 3 br house ' ...

'(using gradient descent):\n $%f\n'], price);

fprintf('Program paused. Press enter to continue.\n');

pause;

%% ================ Part 3: Normal Equations ================

fprintf('Solving with normal equations...\n');

% ====================== YOUR CODE HERE ======================

% Instructions: The following code computes the closed form

% solution for linear regression using the normal

% equations. You should complete the code in

% normalEqn.m

%

% After doing so, you should complete this code

% to predict the price of a 1650 sq-ft, 3 br house.

%

%% Load Data

data = csvread('ex1data2.txt');

X = data(:, 1:2);

y = data(:, 3);

m = length(y);

% Add intercept term to X

X = [ones(m, 1) X];

% Calculate the parameters from the normal equation

theta = normalEqn(X, y);

% Display normal equation's result

fprintf('Theta computed from the normal equations: \n');

fprintf(' %f \n', theta);

fprintf('\n');

% Estimate the price of a 1650 sq-ft, 3 br house

% ====================== YOUR CODE HERE ======================

price = 0; % You should change this

price = [1,1650,3]*theta;

% ============================================================

fprintf(['Predicted price of a 1650 sq-ft, 3 br house ' ...

'(using normal equations):\n $%f\n'], price);

四、Python实现

4.1 ex1.py

import numpy as np #numpy模块:支持大量的维度数组与矩阵运算

import matplotlib.pylab as plt #matplotlib.pylab模块:绘图

from mpl_toolkits.mplot3d import Axes3D #mpl_toolkits.mplot3d绘制 3D 图像

# ==================== Part 1: Basic Function ====================

print('Running warmUpExercise...')

print('5x5 Identity Matrix: ')

A = np.eye((5))

print(A)

_ = input('Press [Enter] to continue.')

# ======================= Part 2: Plotting =======================

# 绘制散点图

def plotData(x, y):

plt.plot(x, y, 'rx', ms=10)

plt.xlabel('Population of City in 10,000')

plt.ylabel('Profit in $10,000')

plt.show()

print('Plotting Data...')

data = np.loadtxt('ex1data1.txt', delimiter=',')

X = data[:, 0]; Y = data[:, 1]

m = np.size(Y, 0)

plotData(X, Y)

_ = input('Press [Enter] to continue.')

# =================== Part 3: Gradient descent ===================

# 计算损失函数值

def computeCost(x, y, theta):

ly = np.size(y, 0)

cost = (x.dot(theta)-y).dot(x.dot(theta)-y)/(2*ly) #dot()函数:向量点积和矩阵乘法 x.dot(y) 等价于 np.dot(x,y)-x是m*n 矩阵y是n*m矩阵,则x.dot(y) 得到m*m矩阵

return cost

# 迭代计算theta

def gradientDescent(x, y, theta, alpha, num_iters):

m = np.size(y, 0)

j_history = np.zeros((num_iters,))

for i in range(num_iters):

deltaJ = x.T.dot(x.dot(theta)-y)/m

theta = theta-alpha*deltaJ

j_history[i] = computeCost(x, y, theta)

return theta, j_history

print('Running Gradient Descent ...')

X = np.vstack((np.ones((m,)), X)).T #vstack()在列上合并

theta = np.zeros((2,)) # 初始化参数 #若A = np.zeros(5)结果 [0. 0. 0. 0. 0.];B = np.zeros((5,), dtype=np.int)结果 [0 0 0 0 0]

iterations = 3000

alpha = 0.01

J = computeCost(X, Y, theta)

print(J)

theta, j_history = gradientDescent(X, Y, theta, alpha, iterations)

print('Theta found by gradient descent: ', theta)

plt.plot(X[:, 1], Y, 'rx', ms=10, label='Training data')

plt.plot(X[:, 1], X.dot(theta), '-', label='Linear regression')

plt.xlabel('Population of City in 10,000')

plt.ylabel('Profit in $10,000')

plt.legend(loc='upper right')

plt.show()

# Predict values for population sizes of 35,000 and 70,000

predict1 = np.array([1, 3.5]).dot(theta)

print('For population = 35,000, we predict a profit of ', predict1*10000)

predict2 = np.array([1, 7.0]).dot(theta)

print('For population = 70,000, we predict a profit of ', predict2*10000)

_ = input('Press [Enter] to continue.')

# ============= Part 4: Visualizing J(theta_0, theta_1) =============

print('Visualizing J(theta_0, theta_1) ...')

theta0_vals = np.linspace(-10, 10, 100)

theta1_vals = np.linspace(-1, 4, 100)

J_vals = np.zeros((np.size(theta0_vals, 0), np.size(theta1_vals, 0)))

for i in range(np.size(theta0_vals, 0)):

for j in range(np.size(theta1_vals, 0)):

t = np.array([theta0_vals[i], theta1_vals[j]])

J_vals[i, j] = computeCost(X, Y, t)

# 绘制三维图像

theta0_vals, theta1_vals = np.meshgrid(theta0_vals, theta1_vals)

fig = plt.figure()

#ax = Axes3D(fig)

ax = fig.gca(projection='3d')

ax.plot_surface(theta0_vals, theta1_vals, J_vals.T)

ax.set_xlabel(r'$\theta$0')

ax.set_ylabel(r'$\theta$1')

# 绘制等高线图

fig2 = plt.figure()

ax2 = fig2.add_subplot(111)

ax2.contour(theta0_vals, theta1_vals, J_vals.T, np.logspace(-2, 3, 20))

ax2.plot(theta[0], theta[1], 'rx', ms=10, lw=2)

ax2.set_xlabel(r'$\theta$0')

ax2.set_ylabel(r'$\theta$1')

plt.show()

4.2 ex1_multi.py

import numpy as np

import matplotlib.pylab as plt

from scipy import linalg #Scipy是一个用于数学、科学、工程领域的常用软件包,可以处理插值、积分、优化、图像处理、常微分方程数值解的求解、信号处理等问题。

# ================ Part 1: Feature Normalization ================

print('Loading data ...')

data = np.loadtxt('ex1data2.txt', delimiter=',')

X = data[:, 0:2]

Y = data[:, 2]

m = np.size(Y, 0)

print('First 10 examples from the dataset:')

for i in range(10):

print('x=[%.0f %.0f], y=%.0f' %(X[i, 0], X[i, 1], Y[i]))

_ = input('Press [Enter] to continue.')

print('Normalizing Features...')

# 归一化函数

def featureNormalize(x):

mu = np.mean(x, axis=0)

sigma = np.std(x, axis=0)

x_norm = np.divide(x-mu, sigma)

return x_norm, mu, sigma

X, mu, sigma = featureNormalize(X)

X = np.concatenate((np.ones((m, 1)), X), axis=1)

# ================ Part 2: Gradient Descent ================

print('Running gradient descent ...')

# 计算损失函数值

def computeCostMulti(x, y, theta):

m = np.size(y, 0)

j = (x.dot(theta)-y).dot(x.dot(theta)-y)/(2*m)

return j

def gradientDescentMulti(x, y, theta, alpha, num_iters):

m = np.size(y, 0)

j_history = np.zeros((num_iters,))

for i in range(num_iters):

theta = theta-alpha*(X.T.dot(X.dot(theta)-y)/m)

j_history[i] = computeCostMulti(x, y, theta)

return theta, j_history

alpha = 0.1

num_iters = 600

theta = np.zeros((3,))

theta, j_history = gradientDescentMulti(X, Y, theta, alpha, num_iters)

plt.plot(np.arange(np.size(j_history, 0)), j_history, '-b', lw=2) #arange函数用于创建等差数组

plt.xlabel('Number of iterations')

plt.ylabel('Cost J')

plt.show()

print('Theta computed from gradient descent: ', theta)

# Estimate the price of a 1650 sq-ft, 3 br house

X_test = np.array([1650, 3])

X_test = np.divide(X_test-mu, sigma)

X_test = np.hstack((1, X_test)) #vstack()在行上合并

price = X_test.dot(theta)

print('Predicted price of a 1650 sq-ft, 3 br house (using gradient descent): ', price)

_ = input('Press [Enter] to continue.')

# ================ Part 3: Normal Equations ================

data = np.loadtxt('ex1data2.txt', delimiter=',')

X = data[:, 0:2]

Y = data[:, 2]

m = np.size(Y, 0)

X = np.concatenate((np.ones((m, 1)), X), axis=1)#concatenate能够一次完成多个数组的拼接

# 利用标准公式求解theta

def normalEqn(x, y):

theta = linalg.pinv(X.T.dot(X)).dot(X.T).dot(y)

return theta

theta = normalEqn(X, Y)

print('Theta computed from the normal equations: ', theta)

# Estimate the price of a 1650 sq-ft, 3 br house

X_test = np.array([1, 1650, 3])

price = X_test.dot(theta)

print('Predicted price of a 1650 sq-ft, 3 br house (using normal equations): ', price)