PyTorch CNN: text sentiment classification

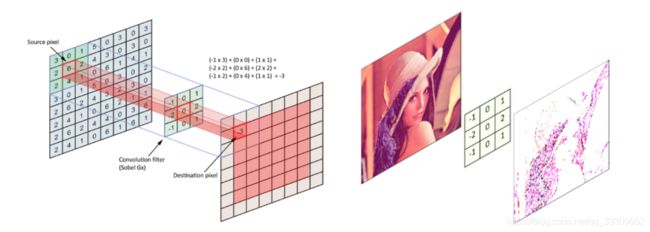

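The previous chapter covered how a CNN does image recognition. A CNN can do text classification in the same way. The core idea does not change, and the convolution and pooling layers stay the same. Only the input data has to be adapted.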

If you are not familiar with CNNs, see the previous article: https://blog.csdn.net/qq_39309652/article/details/115978825?spm=1001.2014.3001.5501

I won't describe the CNN architecture again here. Instead we focus on how text is converted into the data format the model needs.

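All we need is to shape the data as (N, C, H, W), where N is the batch size, C is the number of channels, H is the height and W is the width. Take the following sentence as an example: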

“我想用CNN做个情感分析,这个语句是我喜欢的” ("I want to do sentiment analysis with a CNN; this is a sentence I like")

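So what are N, C, H and W for this sentence?

N is 1, because there is only one sample: we feed in one sentence at a time.

C is 1, because there is only one channel. A color image, by contrast, has three.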

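We cannot determine H and W yet. They have to be constructed by vectorizing the sentence.

The most basic approach is one-hot encoding: first segment the sentence into words, then one-hot encode each word. However, one-hot encoding only turns words into vectors and cannot express relationships between words. For example, "king" and "queen" are close in meaning, just as the author himself is close to the word "handsome".

In PyTorch you can simply use nn.Embedding(vocab_size, embedding_size). Here vocab_size is the total number of distinct words and embedding_size is the dimension of each word vector. The embedding vectors start out random and are learned during training, which is what allows related words to end up close together.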
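To make the one-hot limitation concrete, here is a small NumPy sketch comparing cosine similarities. The three-word vocabulary and the "dense" vectors are made-up values for illustration only. In a real model, nn.Embedding would learn such vectors during training rather than having them typed in.

```python
import numpy as np

# Hypothetical three-word vocabulary (illustration only): king, queen, apple
vocab = ["国王", "王后", "苹果"]
one_hot = np.eye(len(vocab))  # row i is the one-hot vector of vocab[i]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


# Distinct one-hot vectors are always orthogonal, so "king" is exactly as
# similar to "queen" as it is to "apple": both similarities are 0.
sim_king_queen = cosine(one_hot[0], one_hot[1])
sim_king_apple = cosine(one_hot[0], one_hot[2])

# Made-up 2-dimensional dense vectors standing in for what a trained
# embedding table might contain; these values are NOT learned here.
dense = np.array([[0.9, 0.8],     # 国王 (king)
                  [0.85, 0.9],    # 王后 (queen)
                  [-0.7, 0.1]])   # 苹果 (apple)
dense_king_queen = cosine(dense[0], dense[1])
dense_king_apple = cosine(dense[0], dense[2])

print(sim_king_queen, sim_king_apple)       # 0.0 0.0
print(dense_king_queen > dense_king_apple)  # True
```

Note also that a one-hot vector is as long as the vocabulary, while an embedding has a fixed, usually much smaller, size (embedding_size).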

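Given such a lookup table of word vectors, we can find the vector for every word and stack them. H then becomes the number of words in the sentence (after padding or truncating to a fixed length), and W becomes the embedding dimension. Let's implement this idea in code.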
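Before the full script, here is a minimal NumPy sketch of the lookup-table idea, showing how one sentence becomes an (N, C, H, W) array.

Two things here are assumptions made for illustration. The word segmentation is hypothetical; jieba may split the sentence differently. The table is random, playing the role of nn.Embedding's weight matrix before training.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical segmentation of the example sentence (jieba may differ)
tokens = ["我", "想", "用", "CNN", "做个", "情感", "分析"]
word_to_ix = {w: i for i, w in enumerate(tokens)}  # word -> row index

vocab_size, embedding_size = len(word_to_ix), 2
# The "vector table": one row per word, like nn.Embedding's weight matrix
table = rng.normal(size=(vocab_size, embedding_size))

ids = [word_to_ix[w] for w in tokens]
sentence = table[ids]                 # (H, W) = (number of words, embedding_size)
x = sentence[np.newaxis, np.newaxis]  # (N, C, H, W) = (1, 1, 7, 2)
print(x.shape)                        # (1, 1, 7, 2)
```

In the model below, self.W(X) performs the same lookup for a whole batch of index sequences, and unsqueeze(1) adds the C = 1 dimension.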

```python
import pandas as pd
import jieba
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils import data

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def cal_base_date():
    # Toy dataset of Chinese reviews: 0 = negative, 1 = positive
    test = pd.DataFrame({
        'text': ['我想用CNN做个情感分析,这个语句是我喜欢的',
                 '哈哈哈,万年刮痧王李白终于加强了',
                 '这个游戏好极了,个别英雄强度超标,游戏里面英雄种类丰富,我太菜,求大佬带飞',
                 '我觉得是个好游戏',
                 '这个模型准确度好垃圾,我不喜欢',
                 '王者必糊,小学生没防到,还把一群初中生,什么时候没人脸识别,什么时候回归',
                 '快去吧健康系统去掉,不然举报',
                 '垃圾mht,还要人脸识别微信',
                 '那些没脑子玩家就别下载了',
                 ],
        'label': [1, 1, 1, 1, 0, 0, 0, 0, 0]})
    return test


def cal_clear_word(test):
    # Segment each sentence with jieba and drop stop tokens
    stoplist = [' ', '\n', ',']

    def function(a):
        word_list = [w for w in jieba.cut(a) if w not in stoplist]
        return word_list

    test['text'] = test.apply(lambda x: function(x['text']), axis=1)
    return test


def cal_update_date(test, sequence_length):
    # Force every token list to exactly sequence_length tokens
    def prepare_sequence(seq):
        idxs = [w for w in seq]
        if len(idxs) >= sequence_length:
            idxs = idxs[:sequence_length]  # truncate long sentences
        else:
            pad_num = sequence_length - len(idxs)
            for i in range(pad_num):
                idxs.append('UNK')  # pad short sentences
        return idxs

    test['text'] = test.apply(lambda x: prepare_sequence(x['text']), axis=1)
    return test


def cal_word_to_ix(test):
    word_to_ix = {}  # word -> integer index
    for sent, tags in test.values:
        for word in sent:
            if word not in word_to_ix:
                word_to_ix[word] = len(word_to_ix)

    def prepare_sequence(seq, to_ix):
        idxs = [to_ix[w] for w in seq]
        return idxs

    test['text'] = test.apply(lambda x: prepare_sequence(x['text'], word_to_ix), axis=1)
    return test, len(word_to_ix)


class TestDataset(data.Dataset):  # subclass of Dataset
    def __init__(self, test):
        self.Data = test['text']    # one list of word indices per sentence
        self.Label = test['label']  # the matching labels

    def __getitem__(self, index):
        # Convert to tensors
        txt = torch.from_numpy(np.array(self.Data.iloc[index]))
        label = torch.tensor(np.array(self.Label.iloc[index]))
        return txt, label

    def __len__(self):
        return len(self.Data)


class TextCNN(nn.Module):
    def __init__(self, vocab_size, embedding_size, num_classes):
        super(TextCNN, self).__init__()
        # Lookup table: word index -> embedding_size-dimensional vector
        self.W = nn.Embedding(vocab_size, embedding_size)
        self.conv = nn.Sequential(
            # conv: in_channels=1, out_channels=3, kernel=(2, embedding_size), stride=1
            # each filter covers 2 consecutive words and the full embedding width
            nn.Conv2d(1, 3, (2, embedding_size)),
            nn.ReLU(),
            # pool: kernel (2, 1); stride defaults to the kernel size
            nn.MaxPool2d((2, 1)),
        )
        # fc: 3 channels * 4 * 1 = 12 features when sequence_length = 10
        self.fc = nn.Linear(12, num_classes)

    def forward(self, X):
        batch_size = X.shape[0]
        embedding_X = self.W(X)  # [batch_size, sequence_length, embedding_size]
        # add channel(=1): [batch_size, 1, sequence_length, embedding_size]
        embedding_X = embedding_X.unsqueeze(1)
        conved = self.conv(embedding_X)  # [batch_size, 3, 4, 1] for sequence_length = 10
        flatten = conved.view(batch_size, -1)  # [batch_size, 12]
        output = self.fc(flatten)
        return output


# Prepare the data
base_df = cal_base_date()
# jieba word segmentation and stop-word removal
return_df = cal_clear_word(base_df)
# Fix the number of words per sentence: truncate longer ones, pad shorter ones
sequence_length = 10
return_df = cal_update_date(return_df, sequence_length)
# Map words to integer indices
return_df, vocab_size = cal_word_to_ix(return_df)
# Wrap in a PyTorch Dataset/DataLoader for batching
Test = TestDataset(return_df)
batch_size = 3
test_loader = data.DataLoader(Test, batch_size, shuffle=False)

# Build the model
embedding_size = 2
num_classes = 2
model = TextCNN(vocab_size, embedding_size, num_classes).to(device)
criterion = nn.CrossEntropyLoss().to(device)
optimizer = optim.Adam(model.parameters(), lr=1e-3)

for epoch in range(50):
    for batch_x, batch_y in test_loader:
        batch_x, batch_y = batch_x.to(device).long(), batch_y.to(device).long()
        pred = model(batch_x)
        loss = criterion(pred, batch_y)
        if (epoch + 1) % 10 == 0:
            print('Epoch:', '%04d' % (epoch + 1), 'loss =', '{:.6f}'.format(loss.item()))
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

# Test: left commented out. This snippet is written for English input
# ('i hate me', split on spaces) and uses a word2idx dict this script never defines.
# test_text = 'i hate me'
# tests = [[word2idx[n] for n in test_text.split()]]
# test_batch = torch.LongTensor(tests).to(device)
# # Predict
# model = model.eval()
# predict = model(test_batch).data.max(1, keepdim=True)[1]
# if predict[0][0] == 0:
#     print(test_text, "is Bad Mean...")
# else:
#     print(test_text, "is Good Mean!!")
```

Output (every 10 epochs, the loss is printed once for each of the 3 batches):

```
Epoch: 0010 loss = 0.750256
Epoch: 0010 loss = 0.637410
Epoch: 0010 loss = 0.560465
Epoch: 0020 loss = 0.727384
Epoch: 0020 loss = 0.623586
Epoch: 0020 loss = 0.532217
Epoch: 0030 loss = 0.705179
Epoch: 0030 loss = 0.608043
Epoch: 0030 loss = 0.500805
Epoch: 0040 loss = 0.680783
Epoch: 0040 loss = 0.590005
Epoch: 0040 loss = 0.467500
Epoch: 0050 loss = 0.651597
Epoch: 0050 loss = 0.567914
Epoch: 0050 loss = 0.433917
```
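Where does the 12 in nn.Linear(12, num_classes) come from? Below is a plain-Python sketch of the shape arithmetic. It uses the standard output-size formula for Conv2d and MaxPool2d with dilation 1 and the default floor rounding (ceil_mode=False). The helper name out_size is hypothetical and exists only for this illustration.

```python
def out_size(size, kernel, stride=1, padding=0):
    # Output length along one dimension for Conv2d / MaxPool2d (dilation 1, floor rounding)
    return (size + 2 * padding - kernel) // stride + 1


sequence_length, embedding_size, out_channels = 10, 2, 3

# Conv2d(1, 3, (2, embedding_size)) with stride 1
h = out_size(sequence_length, 2)              # 10 -> 9
w = out_size(embedding_size, embedding_size)  # 2 -> 1
# MaxPool2d((2, 1)): stride defaults to the kernel size
h = out_size(h, 2, stride=2)                  # 9 -> 4
w = out_size(w, 1, stride=1)                  # 1 -> 1

fc_in = out_channels * h * w
print(fc_in)  # 12
```

Because the filter width equals embedding_size, W always collapses to 1. The fc input size therefore depends only on sequence_length: with sequence_length = 20, for example, it would be 3 * 9 * 1 = 27, and nn.Linear(12, ...) would have to change accordingly.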

The code still lacks validation on real data; I'll add it later. If you have any questions, join the group or leave a comment.
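Until that validation exists, here is a minimal sketch of how a new sentence could be encoded for prediction. It applies the same truncate/pad rule as cal_update_date and then maps words to indices. The helper encode, the toy vocabulary and the test sentence are all hypothetical.

One design choice is an assumption too: words never seen in training are mapped to the index of the 'UNK' padding token. The original script only uses 'UNK' for padding.

```python
def encode(tokens, word_to_ix, sequence_length, pad="UNK"):
    # Truncate or pad to sequence_length, mirroring cal_update_date
    tokens = tokens[:sequence_length] + [pad] * max(0, sequence_length - len(tokens))
    # Assumption: out-of-vocabulary words reuse the pad token's index
    unk = word_to_ix[pad]
    return [word_to_ix.get(w, unk) for w in tokens]


# Hypothetical vocabulary and an already-segmented test sentence
word_to_ix = {"UNK": 0, "我": 1, "喜欢": 2, "游戏": 3}
ids = encode(["我", "不", "喜欢", "游戏"], word_to_ix, sequence_length=10)
print(ids)  # [1, 0, 2, 3, 0, 0, 0, 0, 0, 0]
```

The new sentence should be segmented the same way as the training data (jieba plus the same stop list). To use this with the trained model, cal_word_to_ix would also have to return its word_to_ix dict, which is local in the script above. After that, something like x = torch.tensor([ids], device=device) with model.eval(), followed by model(x).argmax(dim=1), would give 0 (negative) or 1 (positive).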