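ResNet: Paper and Implementation

Preface

I feel my engineering skills still have room to improve. I just finished rewatching Mu Li's video today, and I also happened to read through a few ResNet reimplementations. ResNet has many later variants (ResNeXt, for example), and it is a common backbone choice in object detection (though there are others; YOLO, for instance, mostly ships with its own Darknet-family backbones), so I decided to write up some notes.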

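ResNet论文逐段精读【论文精读】 (a paragraph-by-paragraph reading of the ResNet paper, from the paper close-reading video series)

Deep Residual Learning for Image Recognition (arXiv)

I really like Mu Li's paper close-reading series; some viewers joke that he is supervising graduate students on Bilibili, haha.

I am also a big fan of Kaiming He's work: the architectures are simple and effective. ResNet won the CVPR 2016 best paper award. It is also worth mentioning that many people think his newer paper, Masked Autoencoders Are Scalable Vision Learners, might win best paper at CVPR 2022; we will wait and see.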

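Reimplementation code

I looked at several reimplementations and chose this one because it is simple and clear; I admire the repository author's coding skills. (In the code below I may change the style slightly to match my own preferences, but the changes are small.)

Source: https://github.com/xmu-xiaoma666/External-Attention-pytorch/blob/1ceda306c41063af11c956334747763444a4d83f/model/backbone/resnet.py#L43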

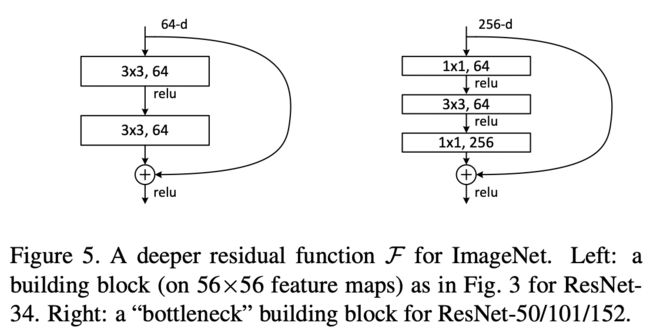

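The repository author only reimplemented ResNet-50, ResNet-101 and ResNet-152, which use the bottleneck structure on the right of the figure. That is fine: once you understand the code for the right-hand structure, you can modify the source to implement the left-hand structure as well.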

bottleneck

The author first defines a class for the bottleneck structure.

class BottleNeck(nn.Module):
    # The block outputs channel * expansion channels
    expansion = 4

    def __init__(self, in_channel, channel, stride=1, downsample=None):
        """
        param in_channel: number of channels entering the block
        param channel   : number of channels used inside the block (1/4 of the output channels)
        param stride    : stride of the first 1x1 convolution
        param downsample: optional module that reshapes the shortcut to match the output
        The block outputs channel * self.expansion channels.
        """
        super().__init__()
        # 1x1 convolution, the top one of the three conv layers in the right-hand figure; it reduces the channel dimension
        self.conv1 = nn.Conv2d(in_channel, channel,
                               kernel_size=1, stride=stride, bias=False)
        self.bn1 = nn.BatchNorm2d(channel)
        # 3x3 convolution
        self.conv2 = nn.Conv2d(
            channel, channel, kernel_size=3, padding=1, bias=False, stride=1)
        self.bn2 = nn.BatchNorm2d(channel)
        # 1x1 convolution, restores (expands) the channel dimension
        self.conv3 = nn.Conv2d(
            channel, channel * self.expansion, kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(channel * self.expansion)
        self.relu = nn.ReLU(inplace=False)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x
        out = self.relu(self.bn1(self.conv1(x)))    # b,c,h',w' (h, w divided by stride)
        out = self.relu(self.bn2(self.conv2(out)))  # b,c,h',w'
        # No ReLU here: in the paper the activation is applied after the addition
        out = self.bn3(self.conv3(out))             # b,4c,h',w'
        if self.downsample is not None:
            residual = self.downsample(residual)
        # shortcut
        out += residual
        return self.relu(out)
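
The left-hand structure from the figure is not part of the repository code. As a rough sketch only, assuming a hypothetical `BasicBlock` class (the name is my own) that keeps the same constructor arguments as `BottleNeck`, it could be written with two 3x3 convolutions and `expansion = 1`:

```python
import torch
from torch import nn


class BasicBlock(nn.Module):
    # Hypothetical name, not from the repository.
    # Two 3x3 convolutions, so the output has exactly `channel` channels.
    expansion = 1

    def __init__(self, in_channel, channel, stride=1, downsample=None):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channel, channel, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(channel)
        self.conv2 = nn.Conv2d(channel, channel, kernel_size=3,
                               stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channel)
        self.relu = nn.ReLU()
        self.downsample = downsample

    def forward(self, x):
        residual = x
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))  # the activation comes after the addition
        if self.downsample is not None:
            residual = self.downsample(residual)
        return self.relu(out + residual)


block = BasicBlock(64, 64)
y = block(torch.randn(2, 64, 56, 56))
print(y.shape)  # torch.Size([2, 64, 56, 56])
```

Because it exposes the same `expansion` attribute and constructor, it should be usable as the `block` argument of the ResNet class in the next section; the paper's ResNet-18 and ResNet-34 use [2, 2, 2, 2] and [3, 4, 6, 3] blocks per stage.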

resnet

Define the ResNet class and initialize its layers

class ResNet(nn.Module):
    def __init__(self, block, layers_num_lt, num_classes=1000):
        """
        param block        : the block class, here the BottleNeck block
        param layers_num_lt: list; each element is the number of blocks in one stage
        param num_classes  : number of classes output by the final classifier
        """
        super().__init__()
        # Number of channels entering the next stage
        self.in_channel = 64

        # stem layer
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7,
                               stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=False)
        self.maxpool = nn.MaxPool2d(
            kernel_size=3, stride=2, padding=0, ceil_mode=True)

        # main layers: the stages are all built the same way, so that work is wrapped in
        # _make_layer (the leading underscore marks it as protected by convention)
        self.layer1 = self._make_layer(block, 64, layers_num_lt[0])
        self.layer2 = self._make_layer(block, 128, layers_num_lt[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers_num_lt[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers_num_lt[3], stride=2)

        # classifier
        self.avgpool = nn.AdaptiveAvgPool2d(1)
        self.classifier = nn.Linear(512 * block.expansion, num_classes)
        self.softmax = nn.Softmax(-1)

    # ... other methods

The forward function is also fairly simple; it follows the architecture figure step by step.

    def forward(self, x):
        # Shape comments are (batch, channels, height, width) for a 224x224 input
        # stem layer
        out = self.relu(self.bn1(self.conv1(x)))  # b,64,112,112
        out = self.maxpool(out)                   # b,64,56,56

        # layers
        out = self.layer1(out)  # b,64*4,56,56
        out = self.layer2(out)  # b,128*4,28,28
        out = self.layer3(out)  # b,256*4,14,14
        out = self.layer4(out)  # b,512*4,7,7

        # classifier
        out = self.avgpool(out)              # b,512*4,1,1
        out = out.reshape(out.shape[0], -1)  # b,512*4
        out = self.classifier(out)           # b,num_classes
        # Class probabilities; return the logits instead when training with nn.CrossEntropyLoss
        out = self.softmax(out)
        return out
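
The spatial sizes in the comments can be checked by hand with the usual output-size formula for convolution and pooling layers. The helper below is only an illustrative sketch (`out_size` is a name I made up); it covers the simple case and leaves out PyTorch's extra `ceil_mode` rule for windows that would start in the padding, which does not come into play for these sizes.

```python
import math


def out_size(size, kernel, stride, padding=0, ceil_mode=False):
    """Output length along one spatial axis of a conv or pooling layer."""
    span = (size + 2 * padding - kernel) / stride
    return (math.ceil(span) if ceil_mode else math.floor(span)) + 1


sizes = [224]
sizes.append(out_size(sizes[-1], kernel=7, stride=2, padding=3))       # stem conv1
sizes.append(out_size(sizes[-1], kernel=3, stride=2, ceil_mode=True))  # maxpool
for stride in (1, 2, 2, 2):  # layer1..layer4: the stride sits in the first 1x1 conv
    sizes.append(out_size(sizes[-1], kernel=1, stride=stride))

print(sizes)  # [224, 112, 56, 56, 28, 14, 7]
```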

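Now back to _make_layer, which builds the four middle stages. It creates the blocks of a stage in a loop, and for the first block it checks whether the shortcut needs a projection, because in H(x) = F(x) + x the shapes of F(x) and x may not match.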

    def _make_layer(self, block, channel, blocks, stride=1):
        # downsample handles the case where F(x) and x in H(x) = F(x) + x have different shapes.
        # It projects the block input so that the shortcut matches the block output in width,
        # height and channel count before the two are added.
        # This is needed when stride != 1 or in_channel != channel * block.expansion.
        downsample = None
        if stride != 1 or self.in_channel != channel * block.expansion:
            # Kept in a local variable, so a stage that needs no projection really gets None
            # and the projection is not also registered on the ResNet module itself
            downsample = nn.Conv2d(
                self.in_channel, channel * block.expansion, stride=stride, kernel_size=1, bias=False)
        # The first block of the stage may need the downsample projection
        layers = []
        layers.append(block(self.in_channel, channel,
                            downsample=downsample, stride=stride))
        self.in_channel = channel * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.in_channel, channel))
        return nn.Sequential(*layers)
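
To make the shape mismatch concrete, here is a small sketch for the first block of layer2, assuming the shapes of a 224x224 input. The tensor `fx` is just a random stand-in for F(x), not the output of a real block.

```python
import torch
from torch import nn

x = torch.randn(2, 256, 56, 56)   # input to the first block of layer2
fx = torch.randn(2, 512, 28, 28)  # stand-in for F(x): 128 * 4 channels, stride 2

# The identity shortcut cannot be added: channels and spatial size both differ
try:
    fx + x
    identity_works = True
except RuntimeError:
    identity_works = False
print(identity_works)  # False

# A 1x1 convolution with the same stride projects x to the shape of F(x)
projection = nn.Conv2d(256, 512, kernel_size=1, stride=2, bias=False)
out = fx + projection(x)
print(out.shape)  # torch.Size([2, 512, 28, 28])
```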

Finally, the different ResNets (50, 101, 152) are obtained by changing how many blocks each stage contains. For the exact numbers, see the original paper, Deep Residual Learning for Image Recognition (arXiv).

# layers_num_lt follows the settings in the paper https://arxiv.org/pdf/1512.03385.pdf
def ResNet50(num_classes=1000):
    return ResNet(block=BottleNeck, layers_num_lt=[3, 4, 6, 3], num_classes=num_classes)


def ResNet101(num_classes=1000):
    return ResNet(block=BottleNeck, layers_num_lt=[3, 4, 23, 3], num_classes=num_classes)


def ResNet152(num_classes=1000):
    return ResNet(block=BottleNeck, layers_num_lt=[3, 8, 36, 3], num_classes=num_classes)
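
The numbers in the model names can be recovered from these lists. As a quick sanity check, assuming the usual counting convention (the stem convolution, three convolutions per bottleneck block and the final fully connected layer, with the shortcut projections left out), a hypothetical `depth` helper gives:

```python
def depth(blocks_per_stage, convs_per_block=3):
    # stem convolution + convolutions inside the blocks + final fully connected layer
    return 1 + convs_per_block * sum(blocks_per_stage) + 1


configs = {
    "ResNet50": [3, 4, 6, 3],
    "ResNet101": [3, 4, 23, 3],
    "ResNet152": [3, 8, 36, 3],
}
depths = {name: depth(cfg) for name, cfg in configs.items()}
print(depths)  # {'ResNet50': 50, 'ResNet101': 101, 'ResNet152': 152}
```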

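Test

We generate a random tensor (simulating 50 color images with 3 channels, height and width both 224), feed it in, and look at the output shape. Since the model is untrained, the output values carry no meaning; we only check that the code runs.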

if __name__ == '__main__':
    # named x rather than input, which would shadow the Python builtin
    x = torch.randn(50, 3, 224, 224)
    resnet50 = ResNet50(1000)
    # resnet101 = ResNet101(1000)
    # resnet152 = ResNet152(1000)
    out = resnet50(x)
    print(out.shape)  # torch.Size([50, 1000])

Full code

# coding:utf-8
import torch
from torch import nn


class BottleNeck(nn.Module):
    # The block outputs channel * expansion channels
    expansion = 4

    def __init__(self, in_channel, channel, stride=1, downsample=None):
        """
        param in_channel: number of channels entering the block
        param channel   : number of channels used inside the block (1/4 of the output channels)
        param stride    : stride of the first 1x1 convolution
        param downsample: optional module that reshapes the shortcut to match the output
        The block outputs channel * self.expansion channels.
        """
        super().__init__()
        # 1x1 convolution, the top one of the three conv layers in the right-hand figure; it reduces the channel dimension
        self.conv1 = nn.Conv2d(in_channel, channel,
                               kernel_size=1, stride=stride, bias=False)
        self.bn1 = nn.BatchNorm2d(channel)
        # 3x3 convolution
        self.conv2 = nn.Conv2d(
            channel, channel, kernel_size=3, padding=1, bias=False, stride=1)
        self.bn2 = nn.BatchNorm2d(channel)
        # 1x1 convolution, restores (expands) the channel dimension
        self.conv3 = nn.Conv2d(
            channel, channel * self.expansion, kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(channel * self.expansion)
        self.relu = nn.ReLU(inplace=False)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x
        out = self.relu(self.bn1(self.conv1(x)))    # b,c,h',w' (h, w divided by stride)
        out = self.relu(self.bn2(self.conv2(out)))  # b,c,h',w'
        # No ReLU here: in the paper the activation is applied after the addition
        out = self.bn3(self.conv3(out))             # b,4c,h',w'
        if self.downsample is not None:
            residual = self.downsample(residual)
        # shortcut
        out += residual
        return self.relu(out)


class ResNet(nn.Module):
    def __init__(self, block, layers_num_lt, num_classes=1000):
        """
        param block        : the block class, here the BottleNeck block
        param layers_num_lt: list; each element is the number of blocks in one stage
        param num_classes  : number of classes output by the final classifier
        """
        super().__init__()
        # Number of channels entering the next stage
        self.in_channel = 64

        # stem layer
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7,
                               stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=False)
        self.maxpool = nn.MaxPool2d(
            kernel_size=3, stride=2, padding=0, ceil_mode=True)

        # main layers: the stages are all built the same way, so that work is wrapped in
        # _make_layer (the leading underscore marks it as protected by convention)
        self.layer1 = self._make_layer(block, 64, layers_num_lt[0])
        self.layer2 = self._make_layer(block, 128, layers_num_lt[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers_num_lt[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers_num_lt[3], stride=2)

        # classifier
        self.avgpool = nn.AdaptiveAvgPool2d(1)
        self.classifier = nn.Linear(512 * block.expansion, num_classes)
        self.softmax = nn.Softmax(-1)

    def forward(self, x):
        # Shape comments are (batch, channels, height, width) for a 224x224 input
        # stem layer
        out = self.relu(self.bn1(self.conv1(x)))  # b,64,112,112
        out = self.maxpool(out)                   # b,64,56,56

        # layers
        out = self.layer1(out)  # b,64*4,56,56
        out = self.layer2(out)  # b,128*4,28,28
        out = self.layer3(out)  # b,256*4,14,14
        out = self.layer4(out)  # b,512*4,7,7

        # classifier
        out = self.avgpool(out)              # b,512*4,1,1
        out = out.reshape(out.shape[0], -1)  # b,512*4
        out = self.classifier(out)           # b,num_classes
        # Class probabilities; return the logits instead when training with nn.CrossEntropyLoss
        out = self.softmax(out)
        return out

    def _make_layer(self, block, channel, blocks, stride=1):
        # downsample handles the case where F(x) and x in H(x) = F(x) + x have different shapes.
        # It projects the block input so that the shortcut matches the block output in width,
        # height and channel count before the two are added.
        # This is needed when stride != 1 or in_channel != channel * block.expansion.
        downsample = None
        if stride != 1 or self.in_channel != channel * block.expansion:
            # Kept in a local variable, so a stage that needs no projection really gets None
            # and the projection is not also registered on the ResNet module itself
            downsample = nn.Conv2d(
                self.in_channel, channel * block.expansion, stride=stride, kernel_size=1, bias=False)
        # The first block of the stage may need the downsample projection
        layers = []
        layers.append(block(self.in_channel, channel,
                            downsample=downsample, stride=stride))
        self.in_channel = channel * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.in_channel, channel))
        return nn.Sequential(*layers)


# layers_num_lt follows the settings in the paper https://arxiv.org/pdf/1512.03385.pdf
def ResNet50(num_classes=1000):
    return ResNet(block=BottleNeck, layers_num_lt=[3, 4, 6, 3], num_classes=num_classes)


def ResNet101(num_classes=1000):
    return ResNet(block=BottleNeck, layers_num_lt=[3, 4, 23, 3], num_classes=num_classes)


def ResNet152(num_classes=1000):
    return ResNet(block=BottleNeck, layers_num_lt=[3, 8, 36, 3], num_classes=num_classes)


if __name__ == '__main__':
    # named x rather than input, which would shadow the Python builtin
    x = torch.randn(50, 3, 224, 224)
    resnet50 = ResNet50(1000)
    # resnet101 = ResNet101(1000)
    # resnet152 = ResNet152(1000)
    out = resnet50(x)
    print(out.shape)  # torch.Size([50, 1000])

Loading weights

Here I looked at the code in another repository: https://github.com/Lornatang/ResNet-PyTorch/blob/9e529757ce0607aafeae2ddd97142201b3d4cadd/resnet_pytorch/utils.py#L86
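
I have not reproduced that repository's loader here. As a general illustration of the idea only, the sketch below saves a `state_dict`, then loads it into a model with a different number of classes while skipping the tensors whose shapes no longer match. `make_model` is a hypothetical tiny stand-in for a ResNet, and the in-memory buffer stands in for a checkpoint file.

```python
import io

import torch
from torch import nn


def make_model(num_classes):
    # Hypothetical stand-in for a ResNet: a small "backbone" plus a classifier head
    return nn.Sequential(
        nn.Conv2d(3, 8, kernel_size=3, padding=1, bias=False),
        nn.BatchNorm2d(8),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
        nn.Linear(8, num_classes),
    )


# Pretend these are pretrained weights stored in a checkpoint file
pretrained = make_model(num_classes=1000)
buffer = io.BytesIO()
torch.save(pretrained.state_dict(), buffer)
buffer.seek(0)

# Load them into a model with a 10-class head
state_dict = torch.load(buffer, map_location="cpu")
model = make_model(num_classes=10)
own_state = model.state_dict()
# Keep only the entries whose name and shape match the new model
compatible = {k: v for k, v in state_dict.items()
              if k in own_state and v.shape == own_state[k].shape}
result = model.load_state_dict(compatible, strict=False)

print(sorted(result.missing_keys))  # ['5.bias', '5.weight']
```

The backbone weights are copied over, while the classifier head (index 5 in this toy model) keeps its fresh initialization and would need to be trained.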