The system AVFoundation framework lets an app capture video and audio data from the device's cameras and microphone.

The structure of AVFoundation on iOS is shown in Figure 1.

Capture is implemented with the following classes:

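Capture session: AVCaptureSession

Capture device: AVCaptureDevice

Capture device input: AVCaptureDeviceInput

Capture output: AVCaptureOutput (an abstract class). Its concrete subclasses are:

* AVCaptureStillImageOutput (still images; deprecated since iOS 10 in favor of AVCapturePhotoOutput)

* AVCaptureMovieFileOutput (movie files)

* AVCaptureAudioDataOutput (audio data stream)

* AVCaptureVideoDataOutput (video data stream)

Capture connection: AVCaptureConnection

Capture preview layer: AVCaptureVideoPreviewLayer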

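The workflow is as follows (see Figure 2):

1. Use AVCaptureDevice to obtain the front and back cameras.
2. Wrap each device in an AVCaptureDeviceInput and add it to the AVCaptureSession.
3. Create an output for each kind of data you need and add it to the session.
4. Start the session. The captured data is then delivered through the delegate callbacks set on each AVCaptureOutput.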

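Key classes in detail

AVCaptureSession inherits from NSObject and is the core class of AVFoundation capture. It manages the video and audio inputs (AVCaptureInput objects) and coordinates the flow of data to the outputs (AVCaptureOutput objects) (see Figure 3).

First, create an AVCaptureSession:

```objc
// In the class extension:
@property (strong, nonatomic) AVCaptureSession *captureSession; // capture session

// During setup:
self.captureSession = [[AVCaptureSession alloc] init];
self.captureSession.sessionPreset = AVCaptureSessionPresetHigh;
```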

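Configuring the session:

1) Setting the resolution

Use the session's sessionPreset property to specify the image quality and resolution. A preset is a constant that identifies one of a set of possible configurations. In some cases the actual configuration is device-specific: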

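| Identifier | Resolution | Notes |
|---|---|---|
| AVCaptureSessionPresetHigh | High | Highest recording quality; varies by device |
| AVCaptureSessionPresetMedium | Medium | Suitable for sharing over Wi-Fi; actual value may change |
| AVCaptureSessionPresetLow | Low | Suitable for sharing over 3G; actual value may change |
| AVCaptureSessionPreset640x480 | 640x480 | VGA |
| AVCaptureSessionPreset1280x720 | 1280x720 | 720p HD |
| AVCaptureSessionPresetPhoto | Photo | Full photo resolution; not supported for video output |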
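Not every preset is available on every device, so check canSetSessionPreset: before assigning one. Below is a minimal sketch that assumes you want the first acceptable preset from a preference list. SQFirstSupportedPreset is a hypothetical helper name, not an AVFoundation API:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: returns the first preset in `candidates` (ordered from
// most to least preferred) that the session accepts, or nil if none fits.
static AVCaptureSessionPreset _Nullable SQFirstSupportedPreset(AVCaptureSession *session,
                                                               NSArray<AVCaptureSessionPreset> *candidates) {
    for (AVCaptureSessionPreset preset in candidates) {
        // The answer depends on the inputs/outputs currently attached to the session
        if ([session canSetSessionPreset:preset]) {
            return preset;
        }
    }
    return nil;
}
```

For example, you could pass @[AVCaptureSessionPreset1920x1080, AVCaptureSessionPreset1280x720, AVCaptureSessionPreset640x480] and assign the result to sessionPreset if it is non-nil. This follows the same fallback order that setVideoPreset uses in the full source. Call the helper after the inputs have been added, because the accepted presets depend on them.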

2) Input devices

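An AVCaptureDevice object abstracts a physical capture device and supplies input data, such as audio or video, to an AVCaptureSession. There is one object per input device. A typical phone has two video inputs (front camera and back camera) and one audio input (the microphone). Since iOS 10, device information is obtained through AVCaptureDeviceDiscoverySession. You create an instance with its class factory method, discoverySessionWithDeviceTypes:mediaType:position:.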
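Instead of relying on the order of discoverySession.devices, you can ask the discovery session for a specific position directly. Below is a minimal sketch. SQCameraAtPosition is a hypothetical helper name, and it assumes the built-in wide-angle camera is the one you want:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: returns the built-in wide-angle camera at the given
// position, or nil if there is none (e.g. in the simulator).
static AVCaptureDevice * _Nullable SQCameraAtPosition(AVCaptureDevicePosition position) {
    AVCaptureDeviceDiscoverySession *discovery =
        [AVCaptureDeviceDiscoverySession discoverySessionWithDeviceTypes:@[AVCaptureDeviceTypeBuiltInWideAngleCamera]
                                                                mediaType:AVMediaTypeVideo
                                                                 position:position];
    // With a concrete position, the returned devices are already filtered by it
    return discovery.devices.firstObject;
}
```

The result can then be wrapped with [AVCaptureDeviceInput deviceInputWithDevice:device error:&error]. Check the error instead of passing nil, because creating the input fails when access has been denied.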

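Capture devices are shared by all apps on the system, so an input cannot always be added. Ask canAddInput: before calling addInput:. Wrap the changes between beginConfiguration and commitConfiguration so that the session applies them together as one atomic update. Audio data is obtained the same way: add an audio input and an AVCaptureAudioDataOutput, and set the output's sample buffer delegate.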
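The begin/check/add/commit pattern described above can be wrapped in one function. Below is a minimal sketch. SQAddToSession is a hypothetical helper, and it assumes that either argument may be nil when there is nothing of that kind to add:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: adds an input and/or an output to the session as a
// single atomic configuration change. Returns NO if something could not be added.
static BOOL SQAddToSession(AVCaptureSession *session,
                           AVCaptureInput * _Nullable input,
                           AVCaptureOutput * _Nullable output) {
    BOOL ok = YES;
    [session beginConfiguration];   // start batching changes
    if (input) {
        if ([session canAddInput:input]) {
            [session addInput:input];
        } else {
            ok = NO;                // e.g. device unavailable or incompatible
        }
    }
    if (output) {
        if ([session canAddOutput:output]) {
            [session addOutput:output];
        } else {
            ok = NO;
        }
    }
    [session commitConfiguration];  // apply all changes together
    return ok;
}
```

For audio, you would create the input from [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio]. Then call setSampleBufferDelegate:queue: on a new AVCaptureAudioDataOutput and pass both objects to the helper.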

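3) Outputs

Use AVCaptureVideoDataOutput to capture the video data stream. It delivers frames through the AVCaptureVideoDataOutputSampleBufferDelegate protocol. Implement (- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection;) to receive each captured frame and process it.

Register the delegate with setSampleBufferDelegate:queue:, using a serial queue so that frames arrive in order. AVCaptureFileOutputRecordingDelegate is not used here: it belongs to AVCaptureMovieFileOutput, which records to a file instead of delivering individual frames.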
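Here is what a receiver for that callback might look like. This is a sketch only: SQFrameReceiver and SQPixelSizeOfSampleBuffer are hypothetical names, and it simply logs each frame's pixel size and presentation timestamp:

```objc
#import <AVFoundation/AVFoundation.h>
#import <CoreGraphics/CoreGraphics.h>

// Pixel dimensions of a video sample buffer. Audio sample buffers carry no
// image buffer, so they yield CGSizeZero.
static CGSize SQPixelSizeOfSampleBuffer(CMSampleBufferRef sampleBuffer) {
    CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    if (imageBuffer == NULL) {
        return CGSizeZero;
    }
    return CGSizeMake(CVPixelBufferGetWidth(imageBuffer), CVPixelBufferGetHeight(imageBuffer));
}

// Hypothetical receiver class; register it with
// [videoDataOutput setSampleBufferDelegate:receiver queue:serialQueue]
@interface SQFrameReceiver : NSObject <AVCaptureVideoDataOutputSampleBufferDelegate>
@end

@implementation SQFrameReceiver

// Called on the delegate queue once per captured frame
- (void)captureOutput:(AVCaptureOutput *)output
    didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer
           fromConnection:(AVCaptureConnection *)connection {
    CGSize size = SQPixelSizeOfSampleBuffer(sampleBuffer);
    CMTime pts = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);
    NSLog(@"frame %.0fx%.0f at %.3f s", size.width, size.height, CMTimeGetSeconds(pts));
    // The buffer is only guaranteed valid during this call;
    // CFRetain it (and CFRelease it later) if it must outlive the callback.
}

// Called when a frame was dropped, e.g. because the delegate was too slow
- (void)captureOutput:(AVCaptureOutput *)output
      didDropSampleBuffer:(CMSampleBufferRef)sampleBuffer
           fromConnection:(AVCaptureConnection *)connection {
    NSLog(@"frame dropped");
}

@end
```

Keep the work in the callback short. With alwaysDiscardsLateVideoFrames set to YES, frames that arrive while the delegate is busy are dropped rather than queued.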

```objc
// Discover the built-in wide-angle cameras (AVCaptureDeviceDiscoverySession, iOS 10+)
AVCaptureDeviceDiscoverySession *discoverySession = [AVCaptureDeviceDiscoverySession discoverySessionWithDeviceTypes:@[AVCaptureDeviceTypeBuiltInWideAngleCamera] mediaType:AVMediaTypeVideo position:AVCaptureDevicePositionUnspecified];

// Pick the front/back cameras by position instead of relying on array order
for (AVCaptureDevice *device in discoverySession.devices) {
    if (device.position == AVCaptureDevicePositionFront) {
        self.frontCamera = [AVCaptureDeviceInput deviceInputWithDevice:device error:nil];
    } else if (device.position == AVCaptureDevicePositionBack) {
        self.backCamera = [AVCaptureDeviceInput deviceInputWithDevice:device error:nil];
    }
}
self.videoInputDevice = self.backCamera;

// Video data output: frames are delivered to the delegate on the serial captureQueue
self.videoDataOutput = [[AVCaptureVideoDataOutput alloc] init];
[self.videoDataOutput setSampleBufferDelegate:self queue:self.captureQueue];
// Drop frames that arrive while the delegate is still busy
[self.videoDataOutput setAlwaysDiscardsLateVideoFrames:YES];

[self.captureSession beginConfiguration];
if (self.videoInputDevice && [self.captureSession canAddInput:self.videoInputDevice]) {
    [self.captureSession addInput:self.videoInputDevice];
}
if ([self.captureSession canAddOutput:self.videoDataOutput]) {
    [self.captureSession addOutput:self.videoDataOutput];
}
[self.captureSession commitConfiguration];
```

4) Capture connections: AVCaptureConnection

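When you call -addInput: or -addOutput: on an AVCaptureSession instance, the session automatically forms connections between all compatible inputs and outputs. You only need to add connections manually for an input or output that was added without connections (via -addInputWithNoConnections: or -addOutputWithNoConnections:). A connection can also enable or disable the flow of data from a given input or to a given output. Obtain a connection with:

```objc
self.videoConnection = [self.videoDataOutput connectionWithMediaType:AVMediaTypeVideo];
```

For more on what connections can do, see https://www.jianshu.com/p/29e99c696768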
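A common use of the video connection is to fix the orientation of the delivered frames and to mirror the front camera. Below is a sketch under those assumptions. SQConfigureVideoConnection is a hypothetical helper, and a portrait app is assumed:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: requests portrait frames and, where supported, mirrors
// them (typical for the front camera). Returns YES if mirroring was applied.
static BOOL SQConfigureVideoConnection(AVCaptureConnection * _Nullable connection, BOOL mirrored) {
    if (connection.isVideoOrientationSupported) {
        // Note: videoOrientation is deprecated since iOS 17 in favor of videoRotationAngle
        connection.videoOrientation = AVCaptureVideoOrientationPortrait;
    }
    if (!connection.isVideoMirroringSupported) {
        return NO;
    }
    // videoMirrored can only be set once automatic adjustment is switched off
    connection.automaticallyAdjustsVideoMirroring = NO;
    connection.videoMirrored = mirrored;
    return YES;
}
```

To pause the flow of frames to one output without tearing down the session, you can instead set the connection's enabled property to NO.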

5) Preview layer: AVCaptureVideoPreviewLayer

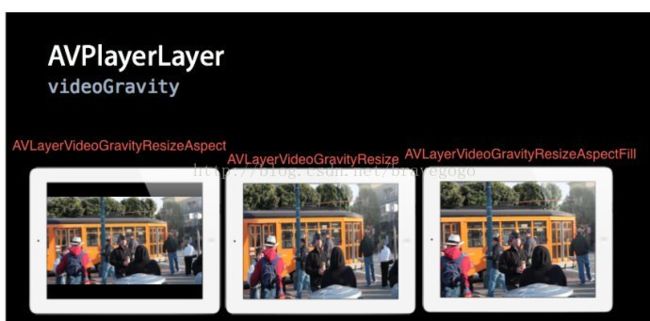

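The camera feed can be previewed with AVCaptureVideoPreviewLayer. Usually you only need to set its frame and its videoGravity.

videoGravity: how the video is scaled to fit the layer's bounds.

Values:

- AVLayerVideoGravityResize: non-uniform scaling; both dimensions are stretched to fill the whole layer.

- AVLayerVideoGravityResizeAspect: uniform scaling until one dimension reaches the layer's edge; the whole frame stays visible and the remaining area is left empty.

- AVLayerVideoGravityResizeAspectFill: uniform scaling until the whole layer is filled; part of one dimension is cropped.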
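The difference between the two aspect-preserving modes comes down to the scale factor. ResizeAspect uses the smaller of the width and height ratios, and ResizeAspectFill uses the larger. Below is a small worked sketch; SQDisplayedVideoSize is a hypothetical helper that only models the geometry and does not call the layer.

Take a 720x1280 portrait frame in a 360x720 layer. The ratios are 360/720 = 0.5 and 720/1280 = 0.5625:

* ResizeAspectFill scales by 0.5625 to 405x720, so 45 points of width are cropped.
* ResizeAspect scales by 0.5 to 360x640, leaving 80 points empty.

```objc
#import <CoreGraphics/CoreGraphics.h>
#include <math.h>

// Size of the displayed video after scaling a frame of size `video` into a
// layer of size `layer`. aspectFill = YES models ResizeAspectFill (fill the
// layer, crop the overflow); NO models ResizeAspect (fit inside, leave bars).
static CGSize SQDisplayedVideoSize(CGSize video, CGSize layer, BOOL aspectFill) {
    CGFloat scaleX = layer.width / video.width;
    CGFloat scaleY = layer.height / video.height;
    CGFloat scale = aspectFill ? fmax(scaleX, scaleY) : fmin(scaleX, scaleY);
    return CGSizeMake(video.width * scale, video.height * scale);
}
```

AVLayerVideoGravityResize needs no formula: the frame is simply stretched to the layer's size.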

```objc
/** Set up the preview layer **/
- (void)setupPreviewLayer {
    self.preLayer = [AVCaptureVideoPreviewLayer layerWithSession:self.captureSession];
    self.preLayer.frame = CGRectMake(0, 0, self.prelayerSize.width, self.prelayerSize.height);
    // Fill the whole layer, cropping if necessary
    self.preLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;
    [self.preview.layer addSublayer:self.preLayer];
}
```

6) Adjusting the capture frame rate

```objc
- (void)updateFps:(NSInteger)fps {
    // Get the capture devices
    AVCaptureDeviceDiscoverySession *discoverySession = [AVCaptureDeviceDiscoverySession discoverySessionWithDeviceTypes:@[AVCaptureDeviceTypeBuiltInWideAngleCamera] mediaType:AVMediaTypeVideo position:AVCaptureDevicePositionUnspecified];
    // Iterate over all devices (front and back cameras)
    for (AVCaptureDevice *vDevice in discoverySession.devices) {
        // Maximum fps of the first frame-rate range of the active format (0 if there is none)
        Float64 maxRate = vDevice.activeFormat.videoSupportedFrameRateRanges.firstObject.maxFrameRate;
        // Only change the rate if the requested fps does not exceed the maximum
        if (maxRate >= fps) {
            if ([vDevice lockForConfiguration:NULL]) {
                // 10 / (fps * 10) s = 1/fps s per frame; equal min and max durations pin the rate
                vDevice.activeVideoMinFrameDuration = CMTimeMake(10, (int32_t)(fps * 10));
                vDevice.activeVideoMaxFrameDuration = vDevice.activeVideoMinFrameDuration;
                [vDevice unlockForConfiguration];
            }
        }
    }
}
```
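The method above only looks at the first entry of videoSupportedFrameRateRanges, and it changes every camera the discovery session returns. Below is a somewhat more defensive sketch. It assumes you want to change a single device and accept any range of its active format that covers the requested rate. SQFrameDuration and SQSetFrameRate are hypothetical names:

```objc
#import <AVFoundation/AVFoundation.h>

// 1/fps seconds per frame, e.g. 1/25 s for 25 fps
static CMTime SQFrameDuration(int32_t fps) {
    return CMTimeMake(1, fps);
}

// Hypothetical helper: pins `device` to `fps` if any frame-rate range of its
// active format supports it. Returns NO if unsupported or if the lock failed.
static BOOL SQSetFrameRate(AVCaptureDevice * _Nullable device, int32_t fps, NSError **error) {
    BOOL supported = NO;
    for (AVFrameRateRange *range in device.activeFormat.videoSupportedFrameRateRanges) {
        if (fps >= range.minFrameRate && fps <= range.maxFrameRate) {
            supported = YES;
            break;
        }
    }
    if (!supported || ![device lockForConfiguration:error]) {
        return NO;
    }
    // Equal min and max frame durations fix the frame rate
    device.activeVideoMinFrameDuration = SQFrameDuration(fps);
    device.activeVideoMaxFrameDuration = SQFrameDuration(fps);
    [device unlockForConfiguration];
    return YES;
}
```

Changing the session preset or the device's activeFormat can reset these durations. Apply the frame rate after the preset, as setupVideo does in the full source.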

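7) Switching between the front and back cameras

```objc
- (void)switchCamera {
    AVCaptureDeviceInput *currentInput = self.videoInputDevice;
    AVCaptureDeviceInput *newInput = [currentInput isEqual:self.frontCamera] ? self.backCamera : self.frontCamera;
    if (!newInput) {
        return; // only one camera is available
    }
    [self.captureSession beginConfiguration];
    if (currentInput) {
        [self.captureSession removeInput:currentInput];
    }
    if ([self.captureSession canAddInput:newInput]) {
        [self.captureSession addInput:newInput];
        self.videoInputDevice = newInput;
    } else if (currentInput) {
        // Fall back to the previous camera if the new one cannot be added
        [self.captureSession addInput:currentInput];
    }
    [self.captureSession commitConfiguration];
    // The new input gets a new connection, so refresh the cached one
    self.videoConnection = [self.videoDataOutput connectionWithMediaType:AVMediaTypeVideo];
}
```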

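8) Once everything is configured, start capturing by calling the session's startRunning method, and stop it with stopRunning.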
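startRunning and stopRunning are blocking calls that can take some time. Apple recommends calling them on a serial background queue instead of the main thread, and the start method in the source below calls startRunning directly. Below is a minimal sketch of the queued variant. SQStartSession and SQStopSession are hypothetical names, and the caller is assumed to own a dedicated serial queue:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helpers: run the blocking start/stop calls on a serial queue so
// the main thread (and the UI) is never blocked while the camera starts.
static void SQStartSession(AVCaptureSession *session, dispatch_queue_t sessionQueue) {
    dispatch_async(sessionQueue, ^{
        if (!session.isRunning) {
            [session startRunning];
        }
    });
}

static void SQStopSession(AVCaptureSession *session, dispatch_queue_t sessionQueue) {
    dispatch_async(sessionQueue, ^{
        if (session.isRunning) {
            [session stopRunning];
        }
    });
}
```

Because the queue is serial, a stop enqueued right after a start always runs after the start has finished. Configuration changes such as switching cameras can be enqueued on the same queue to keep them ordered.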

9) Source: SQSystemCapture.h

```objc
//
//  SQSystemCapture.h
//  CPDemo
//
//  Created by Sem on 2020/8/10.
//  Copyright © 2020 SEM. All rights reserved.
//

#import <Foundation/Foundation.h>
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>
#import <CoreMedia/CoreMedia.h>

NS_ASSUME_NONNULL_BEGIN

typedef NS_ENUM(int, SQSystemCaptrueType) {
    SQSystemCaptrueTypeVideo = 0,
    SQSystemCaptrueTypeAudio,
    SQSystemCaptrueTypeAll
};

@protocol SQSystemCaptureDelegate <NSObject>
@optional
- (void)captureSampleBuffer:(CMSampleBufferRef)sampleBuffer withType:(SQSystemCaptrueType)type;
@end

@interface SQSystemCapture : NSObject

@property (nonatomic, strong) UIView *preview;
@property (nonatomic, weak) id<SQSystemCaptureDelegate> delegate;

/** Width of the captured video */
@property (nonatomic, assign, readonly) NSUInteger witdh;
/** Height of the captured video */
@property (nonatomic, assign, readonly) NSUInteger height;

- (instancetype)initWithType:(SQSystemCaptrueType)type;
- (instancetype)init UNAVAILABLE_ATTRIBUTE;

/** Preparation (call when capturing audio only) */
- (void)prepare;
// Call when capture includes video (preview layer size; the layer is added to `preview` for display)
- (void)prepareWithPreviewSize:(CGSize)size;
/** Start */
- (void)start;
/** Stop */
- (void)stop;
/** Switch camera */
- (void)changeCamera;

// Authorization checks: 1 = authorized, 0 = not determined yet, -1 = denied
+ (int)checkMicrophoneAuthor;
+ (int)checkCameraAuthor;

@end

NS_ASSUME_NONNULL_END
```

10) Source: SQSystemCapture.m

```objc
//
//  SQSystemCapture.m
//  CPDemo
//
//  Created by Sem on 2020/8/10.
//  Copyright © 2020 SEM. All rights reserved.
//

#import "SQSystemCapture.h"

@interface SQSystemCapture () <AVCaptureVideoDataOutputSampleBufferDelegate, AVCaptureAudioDataOutputSampleBufferDelegate>

/******************** Control ********************/
// Whether capture is running
@property (nonatomic, assign) BOOL isRunning;

/******************** Shared ********************/
// Capture session
@property (nonatomic, strong) AVCaptureSession *captureSession;
// Serial queue on which the sample buffer delegates are called
@property (nonatomic, strong) dispatch_queue_t captureQueue;

/******************** Audio ********************/
// Audio (microphone) input
@property (nonatomic, strong) AVCaptureDeviceInput *audioInputDevice;
// Output that receives audio data
@property (nonatomic, strong) AVCaptureAudioDataOutput *audioDataOutput;
@property (nonatomic, strong) AVCaptureConnection *audioConnection;

/******************** Video ********************/
// Video input currently in use (weak: frontCamera/backCamera own the inputs)
@property (nonatomic, weak) AVCaptureDeviceInput *videoInputDevice;
// Front and back cameras
@property (nonatomic, strong) AVCaptureDeviceInput *frontCamera;
@property (nonatomic, strong) AVCaptureDeviceInput *backCamera;
// Output that receives video data
@property (nonatomic, strong) AVCaptureVideoDataOutput *videoDataOutput;
@property (nonatomic, strong) AVCaptureConnection *videoConnection;
// Preview layer and its size
@property (nonatomic, strong) AVCaptureVideoPreviewLayer *preLayer;
@property (nonatomic, assign) CGSize prelayerSize;

@end

@implementation SQSystemCapture {
    // Capture type
    SQSystemCaptrueType capture;
}

- (dispatch_queue_t)captureQueue {
    if (!_captureQueue) {
        _captureQueue = dispatch_queue_create("TMCapture Queue", DISPATCH_QUEUE_SERIAL);
    }
    return _captureQueue;
}

#pragma mark - Lazy loading

- (AVCaptureSession *)captureSession {
    if (!_captureSession) {
        _captureSession = [[AVCaptureSession alloc] init];
    }
    return _captureSession;
}

- (UIView *)preview {
    if (!_preview) {
        _preview = [[UIView alloc] init];
    }
    return _preview;
}

- (instancetype)initWithType:(SQSystemCaptrueType)type {
    self = [super init];
    if (self) {
        capture = type;
    }
    return self;
}

#pragma mark - Sample buffer delegate

// Shared by the audio and video data outputs; called on captureQueue
- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection {
    id delegate = self.delegate;
    // captureSampleBuffer:withType: is @optional, so check before calling it
    if (![delegate respondsToSelector:@selector(captureSampleBuffer:withType:)]) {
        return;
    }
    if (connection == self.audioConnection) {
        [delegate captureSampleBuffer:sampleBuffer withType:SQSystemCaptrueTypeAudio];
    } else {
        [delegate captureSampleBuffer:sampleBuffer withType:SQSystemCaptrueTypeVideo];
    }
}

#pragma mark - Setup

- (void)prepare {
    [self prepareWithPreviewSize:CGSizeZero];
}

// Call when capture includes video (preview layer size; the layer is added to `preview` for display)
- (void)prepareWithPreviewSize:(CGSize)size {
    _prelayerSize = size;
    if (capture == SQSystemCaptrueTypeAudio) {
        [self setupAudio];
    } else if (capture == SQSystemCaptrueTypeVideo) {
        [self setupVideo];
    } else if (capture == SQSystemCaptrueTypeAll) {
        [self setupAudio];
        [self setupVideo];
    }
}

- (void)setupAudio {
    AVCaptureDevice *audioDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];
    self.audioInputDevice = [AVCaptureDeviceInput deviceInputWithDevice:audioDevice error:NULL];
    self.audioDataOutput = [[AVCaptureAudioDataOutput alloc] init];
    [self.audioDataOutput setSampleBufferDelegate:self queue:self.captureQueue];

    [self.captureSession beginConfiguration];
    if (self.audioInputDevice && [self.captureSession canAddInput:self.audioInputDevice]) {
        [self.captureSession addInput:self.audioInputDevice];
    }
    if ([self.captureSession canAddOutput:self.audioDataOutput]) {
        [self.captureSession addOutput:self.audioDataOutput];
    }
    [self.captureSession commitConfiguration];

    self.audioConnection = [self.audioDataOutput connectionWithMediaType:AVMediaTypeAudio];
}

- (void)setupVideo {
    AVCaptureDeviceDiscoverySession *discoverySession = [AVCaptureDeviceDiscoverySession discoverySessionWithDeviceTypes:@[AVCaptureDeviceTypeBuiltInWideAngleCamera] mediaType:AVMediaTypeVideo position:AVCaptureDevicePositionUnspecified];
    // Pick the front/back cameras by position rather than by array order
    for (AVCaptureDevice *device in discoverySession.devices) {
        if (device.position == AVCaptureDevicePositionFront) {
            self.frontCamera = [AVCaptureDeviceInput deviceInputWithDevice:device error:nil];
        } else if (device.position == AVCaptureDevicePositionBack) {
            self.backCamera = [AVCaptureDeviceInput deviceInputWithDevice:device error:nil];
        }
    }
    self.videoInputDevice = self.backCamera ?: self.frontCamera;

    self.videoDataOutput = [[AVCaptureVideoDataOutput alloc] init];
    [self.videoDataOutput setSampleBufferDelegate:self queue:self.captureQueue];
    // Drop frames that arrive while the delegate is still busy
    [self.videoDataOutput setAlwaysDiscardsLateVideoFrames:YES];

    [self.captureSession beginConfiguration];
    if (self.videoInputDevice && [self.captureSession canAddInput:self.videoInputDevice]) {
        [self.captureSession addInput:self.videoInputDevice];
    }
    if ([self.captureSession canAddOutput:self.videoDataOutput]) {
        [self.captureSession addOutput:self.videoDataOutput];
    }
    [self setVideoPreset];
    [self.captureSession commitConfiguration];

    self.videoConnection = [self.videoDataOutput connectionWithMediaType:AVMediaTypeVideo];
    // Set the frame rate after the preset, which may reset it
    [self updateFps:25];
    // Set up the preview
    [self setupPreviewLayer];
}

/** Set up the preview layer **/
- (void)setupPreviewLayer {
    self.preLayer = [AVCaptureVideoPreviewLayer layerWithSession:self.captureSession];
    self.preLayer.frame = CGRectMake(0, 0, self.prelayerSize.width, self.prelayerSize.height);
    // Fill the whole layer, cropping if necessary
    self.preLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;
    [self.preview.layer addSublayer:self.preLayer];
}

- (void)updateFps:(NSInteger)fps {
    // Get the capture devices
    AVCaptureDeviceDiscoverySession *discoverySession = [AVCaptureDeviceDiscoverySession discoverySessionWithDeviceTypes:@[AVCaptureDeviceTypeBuiltInWideAngleCamera] mediaType:AVMediaTypeVideo position:AVCaptureDevicePositionUnspecified];
    // Iterate over all devices (front and back cameras)
    for (AVCaptureDevice *vDevice in discoverySession.devices) {
        // Maximum fps of the first frame-rate range of the active format (0 if there is none)
        Float64 maxRate = vDevice.activeFormat.videoSupportedFrameRateRanges.firstObject.maxFrameRate;
        // Only change the rate if the requested fps does not exceed the maximum
        if (maxRate >= fps) {
            if ([vDevice lockForConfiguration:NULL]) {
                // 10 / (fps * 10) s = 1/fps s per frame; equal min and max durations pin the rate
                vDevice.activeVideoMinFrameDuration = CMTimeMake(10, (int32_t)(fps * 10));
                vDevice.activeVideoMaxFrameDuration = vDevice.activeVideoMinFrameDuration;
                [vDevice unlockForConfiguration];
            }
        }
    }
}

/** Set the resolution (width/height are stored for portrait orientation) **/
- (void)setVideoPreset {
    if ([self.captureSession canSetSessionPreset:AVCaptureSessionPreset1920x1080]) {
        self.captureSession.sessionPreset = AVCaptureSessionPreset1920x1080;
        _witdh = 1080; _height = 1920;
    } else if ([self.captureSession canSetSessionPreset:AVCaptureSessionPreset1280x720]) {
        self.captureSession.sessionPreset = AVCaptureSessionPreset1280x720;
        _witdh = 720; _height = 1280;
    } else {
        self.captureSession.sessionPreset = AVCaptureSessionPreset640x480;
        _witdh = 480; _height = 640;
    }
}

#pragma mark - Control

/** Start */
- (void)start {
    if (![self.captureSession isRunning]) {
        [self.captureSession startRunning];
    }
}

/** Stop */
- (void)stop {
    if ([self.captureSession isRunning]) {
        [self.captureSession stopRunning];
    }
}

/** Switch camera */
- (void)changeCamera {
    [self switchCamera];
}

- (void)switchCamera {
    AVCaptureDeviceInput *currentInput = self.videoInputDevice;
    AVCaptureDeviceInput *newInput = [currentInput isEqual:self.frontCamera] ? self.backCamera : self.frontCamera;
    if (!newInput) {
        return; // only one camera is available
    }
    [self.captureSession beginConfiguration];
    if (currentInput) {
        [self.captureSession removeInput:currentInput];
    }
    if ([self.captureSession canAddInput:newInput]) {
        [self.captureSession addInput:newInput];
        self.videoInputDevice = newInput;
    } else if (currentInput) {
        // Fall back to the previous camera if the new one cannot be added
        [self.captureSession addInput:currentInput];
    }
    [self.captureSession commitConfiguration];
    // The new input gets a new connection, so refresh the cached one
    self.videoConnection = [self.videoDataOutput connectionWithMediaType:AVMediaTypeVideo];
}

#pragma mark - Teardown

- (void)dealloc {
    NSLog(@"capture destroyed...");
    [self destroyCaptureSession];
}

- (void)destroyCaptureSession {
    // Use the ivar: the lazy getter would create a new session here
    if (!_captureSession) {
        return;
    }
    [_captureSession stopRunning];
    [_captureSession beginConfiguration];
    // Remove every input and output that setup added (audio, video or both)
    for (AVCaptureInput *input in [_captureSession.inputs copy]) {
        [_captureSession removeInput:input];
    }
    for (AVCaptureOutput *output in [_captureSession.outputs copy]) {
        [_captureSession removeOutput:output];
    }
    [_captureSession commitConfiguration];
    _captureSession = nil;
}

#pragma mark - Authorization

/**
 * Microphone authorization
 * 0: not determined (a request is issued)  1: granted  -1: denied
 */
+ (int)checkMicrophoneAuthor {
    int result = 0;
    AVAudioSessionRecordPermission permissionStatus = [[AVAudioSession sharedInstance] recordPermission];
    switch (permissionStatus) {
        case AVAudioSessionRecordPermissionUndetermined:
            // Request authorization
            [[AVAudioSession sharedInstance] requestRecordPermission:^(BOOL granted) {
            }];
            result = 0;
            break;
        case AVAudioSessionRecordPermissionDenied: // denied
            result = -1;
            break;
        case AVAudioSessionRecordPermissionGranted: // granted
            result = 1;
            break;
        default:
            break;
    }
    return result;
}

/**
 * Camera authorization
 * 0: not determined (a request is issued)  1: authorized  -1: denied or restricted
 */
+ (int)checkCameraAuthor {
    int result = 0;
    AVAuthorizationStatus videoStatus = [AVCaptureDevice authorizationStatusForMediaType:AVMediaTypeVideo];
    switch (videoStatus) {
        case AVAuthorizationStatusNotDetermined: // first request
            // Request authorization
            [AVCaptureDevice requestAccessForMediaType:AVMediaTypeVideo completionHandler:^(BOOL granted) {
            }];
            break;
        case AVAuthorizationStatusAuthorized: // authorized
            result = 1;
            break;
        default:
            result = -1;
            break;
    }
    return result;
}

// Unused helper: microphone authorization status via AVCaptureDevice
- (int)test {
    int result = 0;
    AVAuthorizationStatus audioStatus = [AVCaptureDevice authorizationStatusForMediaType:AVMediaTypeAudio];
    switch (audioStatus) {
        case AVAuthorizationStatusNotDetermined: // first request
            break;
        case AVAuthorizationStatusAuthorized: // authorized
            result = 1;
            break;
        default:
            result = -1;
            break;
    }
    return result;
}

@end
```
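checkCameraAuthor fires the permission request but discards the answer, so the first call always returns 0 even if the user then grants access. Below is a sketch of an alternative that reports the outcome on the main queue, where the UI can safely react. SQAuthorizationResult and SQRequestAccess are hypothetical names. Capture also requires NSCameraUsageDescription and NSMicrophoneUsageDescription entries in Info.plist.

```objc
#import <AVFoundation/AVFoundation.h>

// Maps an authorization status to the convention used by checkCameraAuthor:
// 1 = authorized, 0 = not determined yet, -1 = denied or restricted.
static int SQAuthorizationResult(AVAuthorizationStatus status) {
    switch (status) {
        case AVAuthorizationStatusAuthorized:    return 1;
        case AVAuthorizationStatusNotDetermined: return 0;
        default:                                 return -1;
    }
}

// Hypothetical helper: asks for access only when the status is undetermined
// and always reports the outcome on the main queue.
static void SQRequestAccess(AVMediaType mediaType, void (^completion)(BOOL granted)) {
    AVAuthorizationStatus status = [AVCaptureDevice authorizationStatusForMediaType:mediaType];
    if (status != AVAuthorizationStatusNotDetermined) {
        BOOL granted = (status == AVAuthorizationStatusAuthorized);
        dispatch_async(dispatch_get_main_queue(), ^{ completion(granted); });
        return;
    }
    // The system shows the prompt; the handler may run on an arbitrary queue
    [AVCaptureDevice requestAccessForMediaType:mediaType completionHandler:^(BOOL granted) {
        dispatch_async(dispatch_get_main_queue(), ^{ completion(granted); });
    }];
}
```

For example, SQRequestAccess(AVMediaTypeVideo, ^(BOOL granted) { ... }) could call prepareWithPreviewSize: and start only when granted is YES.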