
Recognizing MNIST handwritten digits with a convolutional neural network. The Python program is taken from:

TensorFlow Convolutional Neural Network (CNN) Example - High-Level API

Convolutional Neural Network Example - tf.layers API

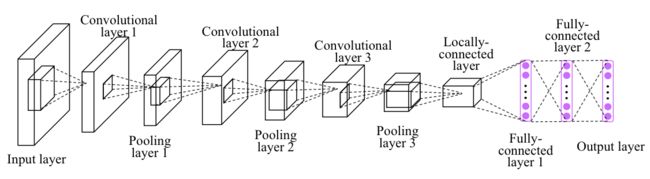

CNN architecture diagram

MNIST database

More info: http://yann.lecun.com/exdb/mnist/

In [38]:

from __future__ import division, print_function, absolute_import

# Import the MNIST dataset
from tensorflow.examples.tutorials.mnist import input_data
mnist = input_data.read_data_sets("file://G:/pandas/data", one_hot=False)

import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np

Successfully downloaded train-images-idx3-ubyte.gz 9912422 bytes.
WARNING:tensorflow:From d:\ide\python\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.
Instructions for updating:
Please use tf.data to implement this functionality.
Extracting file://G:/pandas/data\train-images-idx3-ubyte.gz
Successfully downloaded train-labels-idx1-ubyte.gz 28881 bytes.
WARNING:tensorflow:From d:\ide\python\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.
Instructions for updating:
Please use tf.data to implement this functionality.
Extracting file://G:/pandas/data\train-labels-idx1-ubyte.gz
Successfully downloaded t10k-images-idx3-ubyte.gz 1648877 bytes.
Extracting file://G:/pandas/data\t10k-images-idx3-ubyte.gz
Successfully downloaded t10k-labels-idx1-ubyte.gz 4542 bytes.
Extracting file://G:/pandas/data\t10k-labels-idx1-ubyte.gz
WARNING:tensorflow:From d:\ide\python\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.
Instructions for updating:
Please use alternatives such as official/mnist/dataset.py from tensorflow/models.
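The four files downloaded above use the IDX format described on the MNIST page linked earlier. Each file starts with a big-endian header: magic number 2051 for images or 2049 for labels, then the item count, and for images also the row and column counts. Raw unsigned bytes follow the header.

The sketch below parses that layout using only the standard library. The helper names `read_idx_images` and `read_idx_labels` are hypothetical and are not part of the `input_data` module used above. That module does its own parsing and, as far as we understand it, also flattens each image to 784 values and scales pixels to [0, 1].

```python
import gzip
import io
import struct


def read_idx_images(fileobj):
    """Parse a decompressed IDX3 image stream into (images, rows, cols).

    Each image is returned as a flat list of rows * cols pixel values (0-255).
    """
    magic, count, rows, cols = struct.unpack(">IIII", fileobj.read(16))
    if magic != 2051:
        raise ValueError(f"not an IDX3 image file (magic number {magic})")
    size = rows * cols
    images = [list(fileobj.read(size)) for _ in range(count)]
    return images, rows, cols


def read_idx_labels(fileobj):
    """Parse a decompressed IDX1 label stream into a list of integer labels."""
    magic, count = struct.unpack(">II", fileobj.read(8))
    if magic != 2049:
        raise ValueError(f"not an IDX1 label file (magic number {magic})")
    return list(fileobj.read(count))


if __name__ == "__main__":
    # Build a tiny synthetic gzip-compressed IDX3 file in memory: 2 images of 2x2 pixels.
    raw = struct.pack(">IIII", 2051, 2, 2, 2) + bytes([0, 255, 128, 64, 1, 2, 3, 4])
    with gzip.open(io.BytesIO(gzip.compress(raw)), "rb") as f:
        images, rows, cols = read_idx_images(f)
    print(rows, cols, images)  # 2 2 [[0, 255, 128, 64], [1, 2, 3, 4]]
```

To read one of the real files, open the corresponding `.gz` file with `gzip.open(path, "rb")` instead of the in-memory buffer.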

In [39]:

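# Training parameters (hyperparameters)
learning_rate = 0.001  # learning rate
num_steps = 2000       # number of training steps
batch_size = 128       # number of examples per training batch

# Network parameters
num_input = 784     # MNIST input size (image shape: 28*28)
num_classes = 10    # total number of MNIST classes (digits 0-9)
dropout = 0.75      # passed to tf.layers.dropout as `rate`, i.e. the probability to DROP a unit
                    # (not to keep it); use 0.25 instead to keep 75% of the units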
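Inside `conv_net`, `dropout` is passed straight into `tf.layers.dropout(..., rate=dropout, training=is_training)`, so it helps to see what `rate` does.

The pure-Python sketch below mimics the inverted-dropout behavior documented for `tf.layers.dropout`:

- During training, each unit is zeroed with probability `rate`.
- The surviving units are scaled by 1 / (1 - rate), so the expected activation is unchanged.
- With `training=False`, the input passes through untouched.

It is an illustration only, not TensorFlow code, and `inverted_dropout` is a hypothetical name.

```python
import random


def inverted_dropout(values, rate, training, rng=random):
    """Zero each value with probability `rate` and scale survivors by 1 / (1 - rate).

    Intended to mirror the documented semantics of tf.layers.dropout, where `rate`
    is the fraction of units DROPPED and nothing changes when training is False.
    """
    if not training:
        return list(values)
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    scale = 1.0 / (1.0 - rate)
    # rng.random() >= rate happens with probability 1 - rate, i.e. the unit is kept
    return [v * scale if rng.random() >= rate else 0.0 for v in values]


if __name__ == "__main__":
    rng = random.Random(0)
    out = inverted_dropout([1.0] * 100_000, rate=0.75, training=True, rng=rng)
    kept = sum(1 for v in out if v != 0.0) / len(out)
    print(f"kept fraction ~ {kept:.3f}, mean activation ~ {sum(out) / len(out):.3f}")
```

With `rate=0.75`, only about a quarter of the 1024 `fc1` units survive each training step. That is much heavier regularization than keeping 75% of them, which would correspond to `rate=0.25`.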

In [40]:

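# Create the neural network
def conv_net(x_dict, n_classes, dropout, reuse, is_training):
    # Define a variable scope so that the weights can be reused
    with tf.variable_scope('ConvNet', reuse=reuse):
        # With TF Estimator the input is a dict; the pixels are stored under 'images'
        x = x_dict['images']

        # MNIST input is a 1-D vector of 784 features (28*28 pixels).
        # Reshape it to match the image format [Height x Width x Channels].
        # The input tensor becomes 4-D: [Batch Size, Height, Width, Channels]
        x = tf.reshape(x, shape=[-1, 28, 28, 1])

        # Convolution layer with 32 filters and a kernel size of 5x5
        conv1 = tf.layers.conv2d(x, 32, 5, activation=tf.nn.relu)
        # Max pooling with a 2x2 window and stride 2 (no learnable parameters)
        conv1 = tf.layers.max_pooling2d(conv1, 2, 2)

        # Convolution layer with 64 filters and a kernel size of 3x3
        conv2 = tf.layers.conv2d(conv1, 64, 3, activation=tf.nn.relu)
        # Max pooling with a 2x2 window and stride 2 (no learnable parameters)
        conv2 = tf.layers.max_pooling2d(conv2, 2, 2)

        # Flatten the feature maps into a 1-D vector for the fully connected layer
        fc1 = tf.contrib.layers.flatten(conv2)

        # Fully connected layer with 1024 units
        fc1 = tf.layers.dense(fc1, 1024)
        # Apply dropout (active when is_training is True, disabled at test time)
        fc1 = tf.layers.dropout(fc1, rate=dropout, training=is_training)

        # Output layer: one logit per class
        out = tf.layers.dense(fc1, n_classes)

    return out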
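The layer sizes implied by `conv_net` can be traced by hand. The pure-Python sketch below does this under two assumptions: the convolutions use stride 1, and both `tf.layers.conv2d` and `tf.layers.max_pooling2d` use their default `'valid'` (unpadded) padding. It also counts weights plus biases for each layer. The helper names are hypothetical.

```python
def valid_out(size, window, stride=1):
    """Output length along one axis for a 'valid' (unpadded) convolution or pooling window."""
    return (size - window) // stride + 1


def conv_net_summary(n_classes=10):
    """Trace (layer name, output shape, trainable parameter count) through conv_net."""
    h, w, c = 28, 28, 1  # input after tf.reshape(x, [-1, 28, 28, 1]), batch axis omitted
    layers = []

    # conv1: 32 filters of 5x5; each filter has 5*5*c weights plus one bias
    params = 5 * 5 * c * 32 + 32
    h, w, c = valid_out(h, 5), valid_out(w, 5), 32
    layers.append(("conv1", (h, w, c), params))

    # pool1: 2x2 window, stride 2, no parameters
    h, w = valid_out(h, 2, 2), valid_out(w, 2, 2)
    layers.append(("pool1", (h, w, c), 0))

    # conv2: 64 filters of 3x3
    params = 3 * 3 * c * 64 + 64
    h, w, c = valid_out(h, 3), valid_out(w, 3), 64
    layers.append(("conv2", (h, w, c), params))

    # pool2: 2x2 window, stride 2
    h, w = valid_out(h, 2, 2), valid_out(w, 2, 2)
    layers.append(("pool2", (h, w, c), 0))

    # flatten, then fc1 (1024 units) and the output layer; dropout adds no parameters
    flat = h * w * c
    layers.append(("flatten", (flat,), 0))
    layers.append(("fc1", (1024,), flat * 1024 + 1024))
    layers.append(("out", (n_classes,), 1024 * n_classes + n_classes))
    return layers


if __name__ == "__main__":
    layers = conv_net_summary()
    for name, shape, params in layers:
        print(f"{name:8s} {str(shape):14s} {params:>9,d}")
    print("total trainable parameters:", sum(p for _, _, p in layers))
```

Under these assumptions the flattened vector has 5 * 5 * 64 = 1600 values. The model has 1,669,002 trainable parameters by this count, and about 98% of them are in `fc1`.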

In [41]:

# Define the model function (following the TF Estimator template)
def model_fn(features, labels, mode):
    # Build the neural network.
    # Dropout behaves differently during training and prediction, so we create
    # two distinct computation graphs that share the same weights.
    logits_train = conv_net(features, num_classes, dropout, reuse=False, is_training=True)
    logits_test = conv_net(features, num_classes, dropout, reuse=True, is_training=False)

    # Predictions
    pred_classes = tf.argmax(logits_test, axis=1)
    # Class probabilities (computed here but not used further below)
    pred_probas = tf.nn.softmax(logits_test)

    # In PREDICT mode, return early with the predicted classes only
    if mode == tf.estimator.ModeKeys.PREDICT:
        return tf.estimator.EstimatorSpec(mode, predictions=pred_classes)

    # Define the loss function and the optimizer
    loss_op = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=logits_train, labels=tf.cast(labels, dtype=tf.int32)))
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
    train_op = optimizer.minimize(loss_op, global_step=tf.train.get_global_step())

    # Evaluate the accuracy of the model
    acc_op = tf.metrics.accuracy(labels=labels, predictions=pred_classes)

    # TF Estimators require an EstimatorSpec to be returned
    estim_specs = tf.estimator.EstimatorSpec(
        mode=mode,
        predictions=pred_classes,
        loss=loss_op,
        train_op=train_op,
        eval_metric_ops={'accuracy': acc_op})

    return estim_specs
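In `model_fn`, `loss_op` averages the per-example sparse softmax cross-entropy of `logits_train`, and `pred_classes` takes the arg-max of `logits_test`. The pure-Python sketch below reproduces those two computations on plain lists so the arithmetic is visible. It is an illustration, not TensorFlow code, and all function names are hypothetical.

One consequence is worth checking. A 10-class model whose logits are still roughly equal should have a loss close to ln 10 ≈ 2.3026. That is consistent with the first training loss reported below (2.333311 at step 1).

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_softmax_cross_entropy(logits, label):
    """-log(softmax(logits)[label]) for an integer class label, via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def argmax(values):
    """Index of the largest value (the first one, if there are ties)."""
    return max(range(len(values)), key=values.__getitem__)


def loss_and_predictions(batch_logits, labels):
    """Mean cross-entropy over a batch (like loss_op) and argmax classes (like pred_classes)."""
    losses = [sparse_softmax_cross_entropy(z, y) for z, y in zip(batch_logits, labels)]
    preds = [argmax(z) for z in batch_logits]
    return sum(losses) / len(losses), preds


if __name__ == "__main__":
    # Two examples with perfectly uniform logits over 10 classes (an "untrained" model)
    uniform = [[0.0] * 10, [0.0] * 10]
    loss, _ = loss_and_predictions(uniform, [3, 7])
    print(round(loss, 4), round(math.log(10), 4))  # 2.3026 2.3026
```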

In [43]:

# Build the Estimator
model = tf.estimator.Estimator(model_fn)

INFO:tensorflow:Using default config.

WARNING:tensorflow:Using temporary folder as model directory: C:\Users\XUESON~1\AppData\Local\Temp\tmpdaj442mr

INFO:tensorflow:Using config: {'_model_dir': 'C:\\Users\\XUESON~1\\AppData\\Local\\Temp\\tmpdaj442mr', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': None, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_service': None, '_cluster_spec': , '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

In [44]:

# Define the input function for training
input_fn = tf.estimator.inputs.numpy_input_fn(
    x={'images': mnist.train.images}, y=mnist.train.labels,
    batch_size=batch_size, num_epochs=None, shuffle=True)
# Train the model
model.train(input_fn, steps=num_steps)

INFO:tensorflow:Calling model_fn.
INFO:tensorflow:Done calling model_fn.
INFO:tensorflow:Create CheckpointSaverHook.
INFO:tensorflow:Graph was finalized.
INFO:tensorflow:Running local_init_op.
INFO:tensorflow:Done running local_init_op.
INFO:tensorflow:Saving checkpoints for 1 into C:\Users\XUESON~1\AppData\Local\Temp\tmpdaj442mr\model.ckpt.
INFO:tensorflow:loss = 2.333311, step = 1
INFO:tensorflow:global_step/sec: 4.39831
INFO:tensorflow:loss = 0.17404273, step = 101 (22.736 sec)
INFO:tensorflow:global_step/sec: 3.77397
INFO:tensorflow:loss = 0.11679428, step = 201 (26.497 sec)
INFO:tensorflow:global_step/sec: 4.31603
INFO:tensorflow:loss = 0.13766995, step = 301 (23.169 sec)
INFO:tensorflow:global_step/sec: 4.15033
INFO:tensorflow:loss = 0.10576604, step = 401 (24.094 sec)
INFO:tensorflow:global_step/sec: 3.94188
INFO:tensorflow:loss = 0.021022122, step = 501 (25.415 sec)
INFO:tensorflow:global_step/sec: 3.85337
INFO:tensorflow:loss = 0.014713779, step = 601 (25.905 sec)
INFO:tensorflow:global_step/sec: 4.46202
INFO:tensorflow:loss = 0.10044412, step = 701 (22.410 sec)
INFO:tensorflow:global_step/sec: 4.40926
INFO:tensorflow:loss = 0.022443363, step = 801 (22.680 sec)
INFO:tensorflow:global_step/sec: 4.38373
INFO:tensorflow:loss = 0.011833555, step = 901 (22.812 sec)
INFO:tensorflow:global_step/sec: 4.67343
INFO:tensorflow:loss = 0.015882235, step = 1001 (21.413 sec)
INFO:tensorflow:global_step/sec: 3.90732
INFO:tensorflow:loss = 0.07513487, step = 1101 (25.593 sec)
INFO:tensorflow:global_step/sec: 3.46564
INFO:tensorflow:loss = 0.03496183, step = 1201 (28.842 sec)
INFO:tensorflow:global_step/sec: 3.71127
INFO:tensorflow:loss = 0.0319542, step = 1301 (26.958 sec)
INFO:tensorflow:global_step/sec: 3.80743
INFO:tensorflow:loss = 0.04076298, step = 1401 (26.252 sec)
INFO:tensorflow:global_step/sec: 3.63793
INFO:tensorflow:loss = 0.072247826, step = 1501 (27.501 sec)
INFO:tensorflow:global_step/sec: 4.26565
INFO:tensorflow:loss = 0.016876796, step = 1601 (23.443 sec)
INFO:tensorflow:global_step/sec: 4.18978
INFO:tensorflow:loss = 0.10762453, step = 1701 (23.855 sec)
INFO:tensorflow:global_step/sec: 3.6478
INFO:tensorflow:loss = 0.05514864, step = 1801 (27.411 sec)
INFO:tensorflow:global_step/sec: 4.18436
INFO:tensorflow:loss = 0.027144967, step = 1901 (23.899 sec)
INFO:tensorflow:Saving checkpoints for 2000 into C:\Users\XUESON~1\AppData\Local\Temp\tmpdaj442mr\model.ckpt.
INFO:tensorflow:Loss for final step: 0.010040723.
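With `num_epochs=None` and `shuffle=True`, the training `input_fn` keeps producing shuffled batches for as long as `model.train` asks for them. Training length is therefore governed only by `steps=num_steps`.

The sketch below is a simplified pure-Python analog that reshuffles the indices once per epoch. TensorFlow's `numpy_input_fn` implements shuffling differently internally, and the function names here are hypothetical.

The sketch also computes how many passes over the data 2000 steps of 128 examples amount to. This assumes the default 55,000-image training split returned by `read_data_sets`.

```python
import random
from itertools import islice


def infinite_shuffled_batches(n_examples, batch_size, seed=None):
    """Yield batches of example indices forever, drawing from a fresh permutation each epoch."""
    if n_examples <= 0 or batch_size <= 0:
        raise ValueError("n_examples and batch_size must be positive")
    rng = random.Random(seed)
    pending = []
    while True:
        # Top up with a new shuffled epoch whenever fewer than batch_size indices remain
        while len(pending) < batch_size:
            epoch = list(range(n_examples))
            rng.shuffle(epoch)
            pending.extend(epoch)
        batch, pending = pending[:batch_size], pending[batch_size:]
        yield batch


def epochs_covered(num_steps, batch_size, n_train):
    """Number of passes over the training set consumed by num_steps batches."""
    return num_steps * batch_size / n_train


if __name__ == "__main__":
    for batch in islice(infinite_shuffled_batches(10, 4, seed=0), 3):
        print(batch)
    print(round(epochs_covered(2000, 128, 55000), 2))  # 4.65
```

So the 2000 training steps above see each training image a little under five times on average.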


In [45]:

# Evaluate the model
# Define the input function for evaluation
input_fn = tf.estimator.inputs.numpy_input_fn(
    x={'images': mnist.test.images}, y=mnist.test.labels,
    batch_size=batch_size, shuffle=False)
model.evaluate(input_fn)

INFO:tensorflow:Calling model_fn.
INFO:tensorflow:Done calling model_fn.
INFO:tensorflow:Starting evaluation at 2018-05-17-01:36:50
INFO:tensorflow:Graph was finalized.
INFO:tensorflow:Restoring parameters from C:\Users\XUESON~1\AppData\Local\Temp\tmpdaj442mr\model.ckpt-2000
INFO:tensorflow:Running local_init_op.
INFO:tensorflow:Done running local_init_op.
INFO:tensorflow:Finished evaluation at 2018-05-17-01:36:57
INFO:tensorflow:Saving dict for global step 2000: accuracy = 0.992, global_step = 2000, loss = 0.03843919

Out[45]:

{'accuracy': 0.992, 'global_step': 2000, 'loss': 0.03843919}
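`numpy_input_fn` defaults to `num_epochs=1`, so `model.evaluate` makes a single pass over the 10,000 test images in batches of 128. That gives 78 full batches plus a final batch of 16.

`tf.metrics.accuracy` accumulates running totals across those batches rather than averaging per-batch accuracies. The reported 0.992 therefore corresponds to 9,920 correct predictions (80 errors) over the whole test set.

The pure-Python sketch below illustrates that streaming computation and shows why it differs from a naive mean of batch accuracies. The helper names are hypothetical.

```python
def batch_sizes(n_examples, batch_size):
    """Sizes of the batches produced by one pass over n_examples (last batch may be partial)."""
    full, rest = divmod(n_examples, batch_size)
    return [batch_size] * full + ([rest] if rest else [])


def streaming_accuracy(batches):
    """Accumulate correct and total counts over (labels, predictions) batches."""
    correct = total = 0
    for labels, preds in batches:
        correct += sum(1 for y, p in zip(labels, preds) if y == p)
        total += len(labels)
    return correct / total if total else 0.0


def mean_of_batch_accuracies(batches):
    """Naive alternative: average the per-batch accuracies (over-weights small batches)."""
    accs = [sum(y == p for y, p in zip(l, q)) / len(l) for l, q in batches]
    return sum(accs) / len(accs)


if __name__ == "__main__":
    sizes = batch_sizes(10000, 128)
    print(len(sizes), sizes[-1])  # 79 16
    # A large batch that is fully correct and a one-example batch that is wrong:
    batches = [([1, 2, 3, 4], [1, 2, 3, 4]), ([5], [0])]
    print(streaming_accuracy(batches), mean_of_batch_accuracies(batches))  # 0.8 0.5
```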

In [46]:

# Predict single images
n_images = 4
# Get the first n_images images from the test set
test_images = mnist.test.images[:n_images]
# Prepare the input data
input_fn = tf.estimator.inputs.numpy_input_fn(
    x={'images': test_images}, shuffle=False)
# Use the trained model to predict the image classes
preds = list(model.predict(input_fn))

INFO:tensorflow:Calling model_fn.
INFO:tensorflow:Done calling model_fn.
INFO:tensorflow:Graph was finalized.
INFO:tensorflow:Restoring parameters from C:\Users\XUESON~1\AppData\Local\Temp\tmpdaj442mr\model.ckpt-2000
INFO:tensorflow:Running local_init_op.
INFO:tensorflow:Done running local_init_op.

Input images

In [49]:

for i in range(n_images):
    # Display each test image (reshaped back to 28x28) with its predicted class
    plt.imshow(np.reshape(test_images[i], [28, 28]), cmap='gray')
    plt.show()
    print("Model prediction:", preds[i])

Output:

Model prediction: 7

Model prediction: 2

Model prediction: 1

Model prediction: 0