OpenCL Programming, Part 2

Host-side C code, borrowed from elsewhere:

matrix.c:

```c
#include <stdio.h>
#include <stdlib.h>
#include <CL/cl.h>

#pragma warning( disable : 4996 )  /* MSVC only: silences fopen() deprecation warnings */

int main(void) {
    cl_int error;
    cl_platform_id platforms;
    cl_device_id devices;
    cl_context context;
    FILE *program_handle;
    size_t program_size;
    char *program_buffer;
    cl_program program;
    size_t log_size;
    char *program_log;
    char kernel_name[] = "createBuffer";
    cl_kernel kernel;
    cl_command_queue queue;

    // Get the first platform
    error = clGetPlatformIDs(1, &platforms, NULL);
    if (error != CL_SUCCESS) {
        printf("Get platform failed!\n");
        return -1;
    }

    // Get the first GPU device on that platform
    error = clGetDeviceIDs(platforms, CL_DEVICE_TYPE_GPU, 1, &devices, NULL);
    if (error != CL_SUCCESS) {
        printf("Get device failed!\n");
        return -1;
    }

    // Create the context
    context = clCreateContext(NULL, 1, &devices, NULL, NULL, &error);
    if (error != CL_SUCCESS) {
        printf("Create context failed!\n");
        return -1;
    }

    // Load the kernel source; open with "rb" so the ftell() byte count matches fread()
    program_handle = fopen("kernel.cl", "rb");
    if (program_handle == NULL) {
        printf("The kernel can not be opened!\n");
        return -1;
    }
    fseek(program_handle, 0, SEEK_END);
    program_size = ftell(program_handle);
    rewind(program_handle);
    program_buffer = (char *)malloc(program_size + 1);
    program_buffer[program_size] = '\0';
    // fread() returns a size_t item count, not a cl_int error code
    if (fread(program_buffer, sizeof(char), program_size, program_handle) != program_size) {
        printf("Read kernel failed!\n");
        return -1;
    }
    fclose(program_handle);

    // Create the program object from the source text
    program = clCreateProgramWithSource(context, 1, (const char **)&program_buffer,
                                        &program_size, &error);
    if (error < 0) {
        printf("Couldn't create the program!\n");
        return -1;
    }

    // Build (compile) the program for the device at run time
    error = clBuildProgram(program, 1, &devices, NULL, NULL, NULL);
    if (error < 0) {
        // Query the size of the build log
        clGetProgramBuildInfo(program, devices, CL_PROGRAM_BUILD_LOG, 0, NULL, &log_size);
        program_log = (char *)malloc(log_size + 1);
        program_log[log_size] = '\0';
        // Read and print the build log
        clGetProgramBuildInfo(program, devices, CL_PROGRAM_BUILD_LOG,
                              log_size + 1, program_log, NULL);
        printf("%s\n", program_log);
        free(program_log);
        free(program_buffer);
        return -1;
    }
    free(program_buffer);

    // Create the command queue (profiling is enabled, but the timings are never read here)
    queue = clCreateCommandQueue(context, devices, CL_QUEUE_PROFILING_ENABLE, &error);
    if (error < 0) {
        printf("Couldn't create the command queue\n");
        return -1;
    }

    // Create the kernel object for the __kernel function named "createBuffer"
    kernel = clCreateKernel(program, kernel_name, &error);
    if (kernel == NULL) {
        printf("Couldn't create kernel!\n");
        return -1;
    }

    // Initialize the input data
    float result[100];
    float a_in[100];
    float b_in[100];
    for (int i = 0; i < 100; i++) {
        a_in[i] = i;
        b_in[i] = i * 2.0;
    }

    // Create buffer objects: two inputs copied from host memory, one output
    cl_mem memObject1 = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,
                                       sizeof(float) * 100, a_in, &error);
    if (error < 0) {
        printf("Create memObject1 failed!\n");
        return -1;
    }
    cl_mem memObject2 = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR,
                                       sizeof(float) * 100, b_in, &error);
    if (error < 0) {
        printf("Create memObject2 failed!\n");
        return -1;
    }
    cl_mem memObject3 = clCreateBuffer(context, CL_MEM_WRITE_ONLY,
                                       sizeof(float) * 100, NULL, &error);
    if (error < 0) {
        printf("Create memObject3 failed!\n");
        return -1;
    }

    // Set the kernel arguments (indices match the kernel's parameter order)
    error = clSetKernelArg(kernel, 0, sizeof(cl_mem), &memObject1);
    error |= clSetKernelArg(kernel, 1, sizeof(cl_mem), &memObject2);
    error |= clSetKernelArg(kernel, 2, sizeof(cl_mem), &memObject3);
    if (error != CL_SUCCESS) {
        printf("Error setting kernel arguments!\n");
        return -1;
    }

    // Launch 100 work-items; passing NULL as the local size lets the runtime choose instead
    size_t globalWorkSize[1] = {100};
    size_t localWorkSize[1] = {1};
    error = clEnqueueNDRangeKernel(queue, kernel, 1, NULL, globalWorkSize,
                                   localWorkSize, 0, NULL, NULL);
    if (error != CL_SUCCESS) {
        printf("Error queuing kernel for execution!\n");
        return -1;
    }

    // Read the results back (CL_TRUE = blocking read)
    error = clEnqueueReadBuffer(queue, memObject3, CL_TRUE, 0, 100 * sizeof(float),
                                result, 0, NULL, NULL);
    if (error != CL_SUCCESS) {
        printf("Error reading result buffer!\n");
        return -1;
    }

    // Print the results
    for (int i = 0; i < 100; i++) {
        printf("%f ", result[i]);
    }
    printf("\n");

    // Release resources, roughly in reverse order of creation
    clReleaseMemObject(memObject1);
    clReleaseMemObject(memObject2);
    clReleaseMemObject(memObject3);
    clReleaseKernel(kernel);
    clReleaseProgram(program);
    clReleaseCommandQueue(queue);
    clReleaseContext(context);
    clReleaseDevice(devices);  // OpenCL 1.2+; a no-op for root devices
    return 0;
}
```
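matrix.c launches 100 work-items with a local work size of 1. That is legal, but in OpenCL 1.x `clEnqueueNDRangeKernel` fails with `CL_INVALID_WORK_GROUP_SIZE` when the global size is not evenly divisible by the local size, so a local size such as 64 would not work with a global size of 100. A common pattern is to round the global size up. The helper below is a hypothetical sketch of that (not part of the original code), and it assumes the kernel would also get a bounds check, for example an extra argument `n` and a write only when `gid < n`, which the original createBuffer kernel does not have.

```c
#include <stddef.h>

/* Hypothetical helper: round n up to the next multiple of m (m must be > 0).
   Used to pick a global work size that the local work size divides evenly. */
static size_t round_up_to_multiple(size_t n, size_t m) {
    return ((n + m - 1) / m) * m;
}
```

With a local size of 64, `round_up_to_multiple(100, 64)` gives 128, so 28 extra work-items would run and must be masked off by the kernel's bounds check.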

OpenCL kernel code:

kernel.cl:

```c
__kernel void createBuffer(__global const float *a_in,
                           __global const float *b_in,
                           __global float *result) {
    int gid = get_global_id(0);   // index of this work-item, 0..99
    result[gid] = a_in[gid] + b_in[gid];
}
```
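To make the work-item model concrete, here is a minimal serial sketch in plain C (no OpenCL involved) of what the runtime conceptually does for the 1-D NDRange of 100 work-items launched by matrix.c: each loop iteration plays the role of one work-item, and `gid` stands in for `get_global_id(0)`. The function names are hypothetical, and a real GPU may execute the work-items in parallel and in any order.

```c
#include <stddef.h>

/* Body of the createBuffer kernel for ONE work-item; gid stands in for
   get_global_id(0). */
static void create_buffer_item(size_t gid, const float *a_in,
                               const float *b_in, float *result) {
    result[gid] = a_in[gid] + b_in[gid];
}

/* Serial emulation of a 1-D NDRange of global_size work-items. A GPU may run
   them concurrently and in any order; this loop simply runs them in order. */
static void emulate_create_buffer(size_t global_size, const float *a_in,
                                  const float *b_in, float *result) {
    for (size_t gid = 0; gid < global_size; gid++) {
        create_buffer_item(gid, a_in, b_in, result);
    }
}
```

Calling `emulate_create_buffer(100, a_in, b_in, result)` with the same inputs as matrix.c fills `result` with 0, 3, 6, and so on up to 297.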

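The execution flow: the kernel.cl program, written in OpenCL C, is loaded dynamically by the host-side C program, compiled at run time, and dispatched to the GPU for execution; once the results are computed, they are read back from GPU memory and printed.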
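The "load the .cl file at run time" step in matrix.c can be factored into a reusable helper. The sketch below is a hypothetical `load_kernel_source` function (not part of the original code) that reads a whole file into a NUL-terminated buffer, checks the byte count returned by `fread`, and reports the length, which matches the `strings`/`lengths` form that `clCreateProgramWithSource` takes.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: read an entire text file (e.g. "kernel.cl") into a
   NUL-terminated heap buffer. Returns NULL on any failure; the caller must
   free() the result. *out_size receives the length excluding the NUL. */
static char *load_kernel_source(const char *path, size_t *out_size) {
    FILE *fp = fopen(path, "rb");  /* binary mode: ftell() == bytes fread() returns */
    if (fp == NULL) return NULL;

    if (fseek(fp, 0, SEEK_END) != 0) { fclose(fp); return NULL; }
    long len = ftell(fp);
    if (len < 0) { fclose(fp); return NULL; }
    rewind(fp);

    char *buf = malloc((size_t)len + 1);
    if (buf == NULL) { fclose(fp); return NULL; }

    size_t got = fread(buf, 1, (size_t)len, fp);
    fclose(fp);
    if (got != (size_t)len) { free(buf); return NULL; }  /* short read */

    buf[len] = '\0';
    if (out_size != NULL) *out_size = (size_t)len;
    return buf;
}
```

In matrix.c it could replace the fopen/fseek/ftell/fread sequence, e.g. `program_buffer = load_kernel_source("kernel.cl", &program_size);` followed by a NULL check.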

Verification:

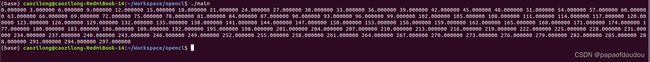

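Given how a_in and b_in are initialized (a_in[i] = i, b_in[i] = 2i) and the logic of kernel.cl, the correct result should be an arithmetic sequence with first term 0 and common difference 3, i.e. result[i] = 3i. Let's compile and run the program to check whether the output matches this expectation.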
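Rather than eyeballing 100 printed values, the expectation result[i] = 3i can be checked in code. This is a minimal sketch; `first_mismatch` is a hypothetical helper that is not part of the original matrix.c, and the tolerance parameter is an assumption. The values here are small integers that a float represents exactly, so even a tolerance of 0 should pass on a correct device.

```c
/* Hypothetical check for the matrix.c output: with a_in[i] = i and
   b_in[i] = 2*i, createBuffer should produce result[i] = 3*i.
   Returns the index of the first element off by more than tol, or -1. */
static int first_mismatch(const float *result, int n, float tol) {
    for (int i = 0; i < n; i++) {
        float expected = 3.0f * (float)i;
        float diff = result[i] - expected;
        if (diff < 0.0f) diff = -diff;   /* absolute difference */
        if (diff > tol) {
            return i;
        }
    }
    return -1;
}
```

In matrix.c it could be called right after `clEnqueueReadBuffer`, e.g. `if (first_mismatch(result, 100, 1e-5f) != -1) printf("Unexpected result!\n");`.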

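Build command:

```shell
gcc -I/usr/local/cuda-11.5/targets/x86_64-linux/include matrix.c -o main -L/usr/local/cuda-11.5/targets/x86_64-linux/lib/ -lOpenCL
./main
```

Run `./main` from the directory that contains kernel.cl, since the program opens it by relative path.

Relationship between OpenCL and CUDA: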

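Architecturally, they sit at the same level: CUDA and OpenCL are both frameworks for parallel-computing development, each with its own C-like language for device code. This is evident from the toolchains. CUDA device code is compiled by NVIDIA's own compiler (NVCC), while in OpenCL the host-side code is compiled with GCC but the device-side code lives in a separate text .cl file that is handed to the OpenCL API and compiled online at run time. So although both borrow C syntax, the compiler for the heterogeneous accelerator cores is not shared with the CPU-side compiler. For a CUDA development example, see the following blog post.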

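CUDA编程初探 (A First Look at CUDA Programming), tugouxp's column, CSDN blog: CUDA stands for Compute Unified Device Architecture, a computing platform from GPU vendor NVIDIA. Developers can write CUDA code in C and compile it with the NVCC compiler to run at high speed on CUDA-capable GPUs. Although AMD also makes graphics cards, CUDA is NVIDIA's own standard and AMD is not part of it, so CUDA-based high-performance computing uses NVIDIA GPUs. For setting up the CUDA environment, the post refers to "FairMOT Cuda环境搭建并进行推理" (tugouxp's column, CSDN blog). https://blog.csdn.net/tugouxp/article/details/121293446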