[101] NLP: 14 Classification Algorithms for Natural Language Processing


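Contents

I. Dataset overview

II. Unzip the files and define the requirements

III. Batch-read and merge the text datasets

IV. Chinese word segmentation

V. Stop words

VI. Encoding the text labels

VII. Conventional models: 1. k-nearest neighbors, 2. decision tree, 3. multilayer perceptron, 4. Bernoulli naive Bayes, 5. Gaussian naive Bayes, 6. multinomial naive Bayes, 7. logistic regression, 8. support vector machine

VIII. Ensemble models: 1. random forest, 2. AdaBoost (adaptive boosting), 3. LightGBM, 4. XGBoost

IX. Deep learning: 1. feedforward neural network, 2. LSTM neural network

X. Performance comparison across algorithms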

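I. Dataset overview

The documents fall into four categories: 女性 (women), 体育 (sports), 文学 (literature), and 校园 (campus).

The training set goes in the train folder and the test set in the test folder.

The stop words go in the stop folder.

Training set: 3305 documents [女性, 体育, 文学, 校园]

Test set: 200 documents [女性, 体育, 文学, 校园]

import os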

import shutil

import zipfile

import jieba

import time

import warnings

import xgboost

import lightgbm

import numpy as np

import pandas as pd

from keras import models

from keras import layers

from keras.utils.np_utils import to_categorical

from keras.preprocessing.text import Tokenizer

from sklearn import svm

from sklearn import metrics

from sklearn.neural_network import MLPClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import BernoulliNB

from sklearn.naive_bayes import GaussianNB

from sklearn.naive_bayes import MultinomialNB

from sklearn.linear_model import LogisticRegression

from sklearn.ensemble import RandomForestClassifier

from sklearn.ensemble import AdaBoostClassifier

from sklearn.preprocessing import LabelEncoder

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

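warnings.filterwarnings('ignore')

II. Unzip the files and define the requirements

Workflow: get the data → analyze and process the data → feature engineering and selection → build models → evaluate performance and tune parameters

Goal 1: batch-read the .txt document files

Goal 2: vectorize the text with bag-of-words or TF-IDF

Goal 3: compare the algorithms by elapsed time and by accuracy

III. Batch-read and merge the text datasets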

path = 'D:\\A\\AI-master\\py-data\\text_classification-master\\text classification'

def read_text(path, text_list):
    '''
    path: required, folder path
    text_list: required, names of all .txt files under the folder path
    return:
        features  text (feature) data, returned as a list;
        labels    class labels, returned as a list
    '''
    features, labels = [], []
    for text in text_list:
        if text.split('.')[-1] == 'txt':
            try:
                with open(path + text, encoding='gbk') as fp:
                    features.append(fp.read())  # feature
                    labels.append(path.split('\\')[-2])  # label: name of the class folder
            except Exception as erro:
                print('\n>>>Error found, printing the error message...\n', erro)
    return features, labels

def merge_text(train_or_test, label_name):
    '''
    train_or_test: required, 'train' for the training set or 'test' for the test set
    label_name: required, list of class label names
    return:
        merge_features  all merged feature data, returned as a list;
        merge_labels    all merged class labels, returned as a list
    '''
    print('\n>>>Reading and merging texts, please wait...')
    merge_features, merge_labels = [], []  # accumulators local to this function
    for name in label_name:
        path = 'D:\\A\\AI-master\\py-data\\text_classification-master\\text classification\\' + train_or_test + '\\' + name + '\\'
        text_list = os.listdir(path)
        features, labels = read_text(path=path, text_list=text_list)  # read one class folder
        merge_features += features  # features
        merge_labels += labels  # labels
    # Add any other information you want to report here
    print('\n>>>Dataset being processed...\n', train_or_test)
    print('\n>>>Details of the [', train_or_test, '] data...')
    print('Samples\t', len(merge_features), '\tClasses\t', set(merge_labels))
    print('\n>>>Finished reading and merging texts...\n')
    return merge_features, merge_labels

# Load the training set

train_or_test = 'train'

label_name = ['女性', '体育', '校园', '文学']

X_train, y_train = merge_text(train_or_test, label_name)

# Load the test set

train_or_test = 'test'

label_name = ['女性', '体育', '校园', '文学']

X_test, y_test = merge_text(train_or_test, label_name)

print('X_train, y_train',len(X_train), len(y_train))

print('X_test, y_test',len(X_test), len(y_test))

X_train, y_train 3305 3305

X_test, y_test 200 200
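
The two helpers above build paths by string concatenation with hard-coded Windows separators. A minimal, more portable sketch of the same idea with pathlib is given below. The function name `load_labeled_texts` and the throwaway demo folder are hypothetical, and the encoding is assumed to be GBK, as in `read_text`.

```python
import tempfile
from pathlib import Path


def load_labeled_texts(root, split, label_names, encoding="gbk"):
    """Read every .txt under root/split/<label>/ and return (texts, labels)."""
    texts, labels = [], []
    for name in label_names:
        for file in sorted((Path(root) / split / name).glob("*.txt")):
            try:
                texts.append(file.read_text(encoding=encoding))
                labels.append(name)
            except (OSError, UnicodeDecodeError) as err:
                # Report unreadable files and move on, as read_text does.
                print("skipped", file, err)
    return texts, labels


# Demo on a temporary folder tree (made-up data, not the real corpus).
with tempfile.TemporaryDirectory() as tmp:
    for label, body in [("体育", "比赛 开始"), ("文学", "小说 评论")]:
        folder = Path(tmp) / "train" / label
        folder.mkdir(parents=True)
        (folder / "a.txt").write_text(body, encoding="gbk")
        (folder / "notes.md").write_text("ignored", encoding="gbk")
    demo_texts, demo_labels = load_labeled_texts(tmp, "train", ["体育", "文学"])

print(demo_texts, demo_labels)
```

Taking the label from the loop variable rather than from `path.split('\\')[-2]` avoids depending on the separator and on the trailing backslash.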

IV. Chinese word segmentation

# Training set

X_train_word = [jieba.cut(words) for words in X_train]

X_train_cut = [' '.join(word) for word in X_train_word]

X_train_cut[:5]

['明天 三里屯 见 瑞丽 服饰 美容 :2011 瑞丽 造型 大赏 派对 瑞丽 专属 模特 黄美熙 康猴 猴 康乐 帕丽扎 提 也 会 参加 哦 瑞丽 专属 模特 转发 ( 53 ) 评论 ( 5 ) 12 月 8 日 17 : 10 来自 新浪 微博',

'转发 微博 水草 温 :北京 早安 中国 宝迪沃 减肥 训练营 2012 年 寒假 班 开始 报名 早安 中国 吧 : 51 ( 分享 自 百度 贴 吧 ) 您 还 在 为 肥胖 为难 吗 冬季 减肥 训练 帮助 您 快速 减掉 多余 脂肪 咨询 顾问 温 老师 1071108677 原文 转发 原文 评论',

'多谢 支持 , 鱼子 精华 中 含有 太平洋 深海 凝胶 能 给 秀发 源源 滋养 , 鱼子 精华 发膜 含有 羟基 积雪草 苷 , 三重 神经酰胺 能 补充 秀发 流失 的 胶质 , 可以 有 焕然 新生 的 感觉 。鲍艺芳 :我 在 倍 丽莎 美发 沙龙 做 的 奢华 鱼子酱 护理 超级 好用 以后 就 用 它 了 我 的 最 爱 巴黎 卡诗 原文 转发 原文 评论',

'注射 除皱 针 时会 疼 吗 ?其他 产品 注射 会 产生 难以忍受 的 疼痛 , 除皱 针中 含有 微量 的 利多卡因 以 减轻 注射 过程 中 的 疼痛 , 注射 过程 只有 非常 轻微 的 疼痛感 。另外 , 除皱 针 的 胶原蛋白 具有 帮助 凝血 作用 , 极少 产生 淤血 现象 。一般 注射 后 就 可以 出门 。其他 产品 容易 产生 淤斑 , 而且 时间 较长 。让 人 难以 接受 。',

'注射 除皱 针 时会 疼 吗 ?其他 产品 注射 会 产生 难以忍受 的 疼痛 , 除皱 针中 含有 微量 的 利多卡因 以 减轻 注射 过程 中 的 疼痛 , 注射 过程 只有 非常 轻微 的 疼痛感 。另外 , 除皱 针 的 胶原蛋白 具有 帮助 凝血 作用 , 极少 产生 淤血 现象 。一般 注射 后 就 可以 出门 。其他 产品 容易 产生 淤斑 , 而且 时间 较长 。让 人 难以 接受 。']

# Test set

X_test_word = [jieba.cut(words) for words in X_test]

X_test_cut = [' '.join(word) for word in X_test_word]

X_test_cut[:5]

['【 L 丰胸 — — 安全 的 丰胸 手术 】 华美 L 美胸 非常 安全 , 5 大 理由 :① 丰胸 手术 在世界上 已有 超过 100 年 的 历史 ; ② 假体 硅胶 有 40 多年 的 安全 使用 史 ; ③ 每年 都 有 超过 600 万 女性 接受 丰胸 手术 ; ④ 不 损伤 乳腺 组织 , 不 影响 哺乳 ; ⑤ 华美 美莱 率先 发布 中国 首部 《 整形 美容 安全 消费 白皮书 》 , 维护 社会 大众 整形 美容 消费 利益 。\t \t \t \t $ LOTOzf $',

'【 穿 高跟鞋 不磨 脚 小 方法 】 1 . 用 热 毛巾 在 磨 脚 的 部位 捂 几分钟 , 再拿块 干 的 软 毛巾 垫 着 , 用 锤子 把 鞋子 磨脚 的 地方 敲 平整 就 可以 啦 ;2 . 把 报纸 捏 成团 沾点 水 , 不要 太湿 , 但 要 整团 都 沾 到 水 , 再 拿 张干 的 报纸 裹住 湿 的 报纸 , 塞 在 挤 脚 部位 , 用 塑料袋 密封 一夜 ;3 . 穿鞋 之前 , 拿 香皂 ( 蜡烛 亦可 ) 在 磨脚 位置 薄薄的 涂上一层 。\t \t \t \t $ LOTOzf $',

'要 在 有限 的 空间 里 实现 最大 的 价值 , 布 上网 线 、 音频线 、 高清 线 、 投影 线 、 电源线 、 闭 路线 等 一切 能够 想到 的 线 ;在 32 ㎡ 里 放 一套 沙发 和 预留 5 - 6 个人 的 办公 环境 ;5 ㎡ 里 做 一个 小 卧室 ;在 6 ㎡ 里装 一个 袖珍 厨房 和 一个 厕所 ;还要 在 2 米 * 5 米 的 阳台 上 做 一个 小 花园 ;除 阳台 外套 内 只有 43 ㎡ 的 房子 要 怎么 做 才能 合理 利用 ?$ LOTOzf $',

'【 女人 命好 的 几点 特征 】 1 . 圆脸 ( 对 人际关系 及 财运 都 有加 分 ) ;2 . 下巴 丰满 ( 可能 会 拥有 两栋 以上 的 房产 ) ;3 . 臀大 ( 代表 有 财运 ) ;4 . 腿 不能 细 ( 腿 长脚 瘦 , 奔走 不停 , 辛苦 之相 也 ) ;5 . 小腹 有 脂肪 ( 状是 一种 福寿之 相 ) 。$ LOTOzf $',

'中央 经济 工作 会议 12 月 12 日至 14 日 在 北京 举行 , 很 引人瞩目 的 一个 新 的 提法 是 , 要 提高 中等 收入者 比重 , 但是 股市 却 把 “ 中等 收入者 ” 一批 又 一批 地 变成 了 “ 低收入 人群 ” !$ LOTOzf $']
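
The tokens are joined with spaces because scikit-learn's `CountVectorizer` splits text with a regular expression rather than with a Chinese segmenter. The sketch below uses only `re` and the vectorizer's documented default `token_pattern`, `(?u)\b\w\w+\b`, to show one consequence: single-character tokens such as 见 are discarded. The sample string is the start of the first training sentence shown above, and `KEEP_SINGLE` is just one possible alternative pattern.

```python
import re

# Default token_pattern of CountVectorizer: tokens of two or more word characters.
DEFAULT_PATTERN = r"(?u)\b\w\w+\b"
# An alternative pattern that also keeps single-character tokens.
KEEP_SINGLE = r"(?u)\b\w+\b"

segmented = "明天 三里屯 见 瑞丽 服饰 美容"

default_tokens = re.findall(DEFAULT_PATTERN, segmented)
all_tokens = re.findall(KEEP_SINGLE, segmented)

print(default_tokens)  # 见 is missing
print(all_tokens)      # 见 is kept
```

If single-character words matter for the task, passing a different `token_pattern` to the vectorizer is one option to consider.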

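V. Stop words

1. Stop words filter out tokens already known to carry no real value for training the model.

2. Removing them cuts noise and shrinks the feature space, which can improve the model's accuracy and stability.

# Load the stop-word list

stoplist = [word.strip() for word in open(
    'D:\\A\\AI-master\\py-data\\text_classification-master\\text classification\\stop\\stopword.txt',
    encoding='utf-8').readlines()]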

stoplist[:10]

['\ufeff,', '?', '、', '。', '“', '”', '《', '》', '!', ',']
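
The first entry printed above, `'\ufeff,'`, has a byte-order mark glued to it, so it can never match a real token. A small sketch of one way to avoid this, assuming the file is UTF-8 with a BOM: read it with the `utf-8-sig` codec. The temporary file and its contents are made up for the demonstration, and `load_stopwords` is a hypothetical helper.

```python
import os
import tempfile

# Write a tiny stop-word file that starts with a UTF-8 byte-order mark.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False,
                                 encoding="utf-8-sig") as fp:
    fp.write(",\n?\n的\n")
    stop_path = fp.name


def load_stopwords(path, encoding):
    """Return the non-empty, stripped lines of a stop-word file."""
    with open(path, encoding=encoding) as fp:  # the file is closed on exit
        return [line.strip() for line in fp if line.strip()]


with_bom = load_stopwords(stop_path, "utf-8")
without_bom = load_stopwords(stop_path, "utf-8-sig")
os.remove(stop_path)

print(with_bom[0] == "\ufeff,")  # BOM stays attached to the first entry
print(without_bom[0] == ",")     # BOM stripped by the codec
```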

VI. Encoding the text labels

le = LabelEncoder()

y_train_le = le.fit_transform(y_train)

y_test_le = le.transform(y_test)  # reuse the mapping learned from the training labels

y_train_le, y_test_le

(array([1, 1, 1, ..., 2, 2, 2], dtype=int64),

array([1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 3,

3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 2], dtype=int64))
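
To read the arrays above, it helps to know which integer stands for which class. `LabelEncoder` assigns indices in sorted order of the class names, so the mapping can be reproduced in plain Python as a sketch (this is an illustration of the mapping, not the library's implementation; the short label lists are made up).

```python
train_labels = ["女性", "女性", "体育", "校园", "文学"]
test_labels = ["女性", "体育", "校园", "文学"]

# "fit": learn the sorted set of classes from the training labels only.
classes = sorted(set(train_labels))
to_index = {name: i for i, name in enumerate(classes)}

# "transform": reuse the same mapping for both splits.
train_encoded = [to_index[name] for name in train_labels]
test_encoded = [to_index[name] for name in test_labels]

print(to_index)
print(test_encoded)
```

Under this ordering 0 is 体育 (sports), 1 is 女性 (women), 2 is 文学 (literature), and 3 is 校园 (campus), which matches the order in which the folders were read (女性, 体育, 校园, 文学 gives 1, 0, 3, 2).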

Convert the text data into a numeric feature matrix

count = CountVectorizer(stop_words=stoplist)

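'''Note:

count.fit() is run here on the training and test texts together, so the vocabulary covers both.

Transforming both sets with the same fitted vectorizer is what keeps the feature counts equal;
fitting on the training set alone also works and keeps test-set vocabulary out of the features.

'''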

count.fit(list(X_train_cut) + list(X_test_cut))

X_train_count = count.transform(X_train_cut)

X_test_count = count.transform(X_test_cut)

X_train_count = X_train_count.toarray()

X_test_count = X_test_count.toarray()

print(X_train_count.shape, X_test_count.shape)

X_train_count, X_test_count

(3305, 23732) (200, 23732)

(array([[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

...,

[1, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0]], dtype=int64), array([[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

...,

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0]], dtype=int64))
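
The note in the code above ties the equal feature counts to fitting on both splits. A plain-Python bag-of-words sketch (not scikit-learn itself; the documents and names are illustrative) shows that a vocabulary built from the training texts alone still yields the same number of columns for both splits, with unseen test tokens simply dropped.

```python
from collections import Counter

train_docs = ["足球 比赛 精彩", "小说 评论 精彩"]
test_docs = ["足球 小说 诗歌"]  # "诗歌" never appears in training

# Fit: build the vocabulary from the training documents only.
vocab = sorted({tok for doc in train_docs for tok in doc.split()})
column = {tok: i for i, tok in enumerate(vocab)}


def to_counts(doc):
    """Map one space-separated document to a count vector over `vocab`."""
    row = [0] * len(vocab)
    for tok, n in Counter(doc.split()).items():
        if tok in column:  # tokens outside the vocabulary are dropped
            row[column[tok]] = n
    return row


train_matrix = [to_counts(d) for d in train_docs]
test_matrix = [to_counts(d) for d in test_docs]
print(len(train_matrix[0]), len(test_matrix[0]))
```

With a real `CountVectorizer`, the equivalent would be calling `fit` on the training texts and `transform` on both splits; the shapes reported above come from the author's joint fit and would differ slightly under that variant.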

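VII. Conventional models

Wrap the workflow in one function so that each algorithm only needs a single call.

# Lists that store every algorithm's name, accuracy, and elapsed time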

estimator_list, score_list, time_list = [], [], []

def get_text_classification(estimator, X, y, X_test, y_test):
    '''
    estimator: classifier, required
    X: training features, required
    y: training labels, required
    X_test: test features, required
    y_test: test labels, required
    return:
        y_pred_model: predictions
        classifier: classifier name
        score: accuracy
        t: elapsed time in seconds
        matrix: confusion matrix
        report: classification report
    '''
    start = time.time()
    print('\n>>>Starting the algorithm, please wait...')
    model = estimator
    print('\n>>>Training, please wait...')
    model.fit(X, y)
    print(model)
    print('\n>>>Predicting, please wait...')
    y_pred_model = model.predict(X_test)
    print(y_pred_model)
    print('\n>>>Evaluating, please wait...')
    score = metrics.accuracy_score(y_test, y_pred_model)
    matrix = metrics.confusion_matrix(y_test, y_pred_model)
    report = metrics.classification_report(y_test, y_pred_model)
    print('>>>Accuracy\n', score)
    print('\n>>>Confusion matrix\n', matrix)
    print('\n>>>Classification report\n', report)  # precision, recall, and F1 per class
    print('>>>Algorithm finished...')
    end = time.time()
    t = end - start
    print('\n>>>Elapsed time:', t, 'seconds\n')
    classifier = str(model).split('(')[0]
    return y_pred_model, classifier, score, round(t, 2), matrix, report

1. k-nearest neighbors

knc = KNeighborsClassifier()

result = get_text_classification(knc, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski', metric_params=None, n_jobs=None, n_neighbors=5, p=2, weights='uniform')
Predicting, please wait...
[1 0 0 0 0 0 0 0 0 1 0 0 0 1 0 0 0 0 0 1 1 1 0 1 0 1 2 0 1 0 0 1 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 2 2 2 0 0 0 0 2 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 2 2 2 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 2 0 0 0 0 0 0 2 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 2 2 0 0 2 0 3 0 2 2 2 2 2 2 0 2 0 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 0 2 2 0 0 0 0 0 2 2 2 2]
Evaluating, please wait...
Accuracy
 0.665
Confusion matrix
 [[99  0 16  0]
 [26 10  2  0]
 [ 8  0 23  0]
 [ 6  0  9  1]]
Classification report
               precision    recall  f1-score   support
           0       0.71      0.86      0.78       115
           1       1.00      0.26      0.42        38
           2       0.46      0.74      0.57        31
           3       1.00      0.06      0.12        16
    accuracy                           0.67       200
   macro avg       0.79      0.48      0.47       200
weighted avg       0.75      0.67      0.62       200
Algorithm finished...
Elapsed time: 28.78205680847168 seconds

(None, None, None)
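
The `(None, None, None)` echoed after each run is not a model result. `list.append` returns `None`, and the three comma-separated calls form a tuple that the notebook displays. A minimal sketch with stand-in values (the `result` tuple is made up to mirror the return order of `get_text_classification`; the score and time are the kNN figures from the log above):

```python
scratch = []
# Three expressions separated by commas build a tuple of their return values.
echoed = scratch.append(1), scratch.append(2), scratch.append(3)
print(echoed)  # (None, None, None)

# Unpacking the result and appending on separate lines avoids the stray output.
names, scores, times = [], [], []
result = ("y_pred", "KNeighborsClassifier", 0.665, 28.78, "matrix", "report")
_, classifier, score, elapsed, _, _ = result
names.append(classifier)
scores.append(score)
times.append(elapsed)
print(names, scores, times)
```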

2. Decision tree

dtc = DecisionTreeClassifier()

result = get_text_classification(dtc, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Training, please wait...
DecisionTreeClassifier(class_weight=None, criterion='gini', max_depth=None,
                       max_features=None, max_leaf_nodes=None,
                       min_impurity_decrease=0.0, min_impurity_split=None,
                       min_samples_leaf=1, min_samples_split=2,
                       min_weight_fraction_leaf=0.0, presort=False,
                       random_state=None, splitter='best')
Predicting, please wait...
[1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3 1 2 1 1 0
 1 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 0 0 0 0 0 0 2 2 0 0 0 1 0 2 0 0 2 0
 1 0 0 0 0 1 0 1 1 1 0 0 0 0 0 0 3 3 0 0 1 1 0 0 0 0 0 0 0 0 1 0 0 0 0 2 0
 1 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 1 0 0 0 0 0 0 0 1 1 1 1 1 0 1 1 1 1
 1 0 0 0 0 1 3 1 3 2 3 3 3 3 3 0 3 2 2 2 3 2 1 2 1 2 2 2 2 2 1 2 2 2 2 2 3
 3 2 2 0 1 2 3 3 0 2 1 0 2 2 2]
Evaluating, please wait...
Accuracy
 0.735
Confusion matrix
 [[84 24  5  2]
 [ 1 35  1  1]
 [ 3  5 19  4]
 [ 1  2  4  9]]
Classification report
               precision    recall  f1-score   support
           0       0.94      0.73      0.82       115
           1       0.53      0.92      0.67        38
           2       0.66      0.61      0.63        31
           3       0.56      0.56      0.56        16
    accuracy                           0.73       200
   macro avg       0.67      0.71      0.67       200
weighted avg       0.79      0.73      0.74       200
Algorithm finished...
Elapsed time: 20.55004119873047 seconds

(None, None, None)
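
The per-class figures in the report can be recomputed from the confusion matrix alone, which is a useful sanity check when reading these logs. The sketch below uses the decision tree matrix printed above; rows are true classes and columns are predicted classes, following scikit-learn's convention.

```python
# Confusion matrix from the decision tree run (rows: true, columns: predicted).
matrix = [
    [84, 24, 5, 2],
    [1, 35, 1, 1],
    [3, 5, 19, 4],
    [1, 2, 4, 9],
]
n_classes = len(matrix)

total = sum(sum(row) for row in matrix)
accuracy = sum(matrix[i][i] for i in range(n_classes)) / total

# Recall: correct predictions divided by the row sum (all true members of the class).
recall = [matrix[i][i] / sum(matrix[i]) for i in range(n_classes)]
# Precision: correct predictions divided by the column sum (everything predicted as the class).
precision = [matrix[i][i] / sum(row[i] for row in matrix) for i in range(n_classes)]

print(round(accuracy, 3))
print([round(r, 2) for r in recall])
print([round(p, 2) for p in precision])
```

The rounded values agree with the accuracy, recall, and precision columns of the report shown above.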

3. Multilayer perceptron

mlpc = MLPClassifier()

result = get_text_classification(mlpc, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
MLPClassifier(activation='relu', alpha=0.0001, batch_size='auto', beta_1=0.9, beta_2=0.999, early_stopping=False, epsilon=1e-08, hidden_layer_sizes=(100,), learning_rate='constant', learning_rate_init=0.001, max_iter=200, momentum=0.9, n_iter_no_change=10, nesterovs_momentum=True, power_t=0.5, random_state=None, shuffle=True, solver='adam', tol=0.0001, validation_fraction=0.1, verbose=False, warm_start=False)
Predicting, please wait...
[1 1 2 1 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3 0 0 0 0 0 0 0 0 0 0 0 0 0 2 2 0 0 0 1 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 1 0 0 0 0 0 1 0 0 0 0 0 0 3 2 3 2 3 1 3 3 3 0 3 2 2 2 3 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 0 2 1 2 2 2 2]
Evaluating, please wait...
Accuracy
 0.89
Confusion matrix
 [[105   3   6   1]
 [  0  36   1   1]
 [  1   1  29   0]
 [  2   1   5   8]]
Classification report
               precision    recall  f1-score   support
           0       0.97      0.91      0.94       115
           1       0.88      0.95      0.91        38
           2       0.71      0.94      0.81        31
           3       0.80      0.50      0.62        16
    accuracy                           0.89       200
   macro avg       0.84      0.82      0.82       200
weighted avg       0.90      0.89      0.89       200
Algorithm finished...
Elapsed time: 234.1950967311859 seconds

(None, None, None)

4. Bernoulli naive Bayes

bnb = BernoulliNB()

result = get_text_classification(bnb, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
BernoulliNB(alpha=1.0, binarize=0.0, class_prior=None, fit_prior=True)
Predicting, please wait...
[1 1 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 1 1 0 1 0 1 0 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 2 0 0 2 0 0 0 2 0 0 0 0 0 0 2 2 0 0 0 2 2 2 2 0 0 2 0 0 2 2 0]
Evaluating, please wait...
Accuracy
 0.785
Confusion matrix
 [[113   0   2   0]
 [  6  32   0   0]
 [ 19   0  12   0]
 [ 15   0   1   0]]
Classification report
               precision    recall  f1-score   support
           0       0.74      0.98      0.84       115
           1       1.00      0.84      0.91        38
           2       0.80      0.39      0.52        31
           3       0.00      0.00      0.00        16
    accuracy                           0.79       200
   macro avg       0.63      0.55      0.57       200
weighted avg       0.74      0.79      0.74       200
Algorithm finished...
Elapsed time: 1.1219978332519531 seconds

(None, None, None)

5. Gaussian naive Bayes

gnb = GaussianNB()

result = get_text_classification(gnb, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
GaussianNB(priors=None, var_smoothing=1e-09)
Predicting, please wait...
[1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 2 1 2 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 3 0 0 0 0 0 0 0 0 0 0 0 0 0 2 2 0 0 0 0 0 0 0 0 1 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 3 1 2 3 3 1 3 3 3 2 3 3 3 3 3 2 3 2 2 2 2 0 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2]
Evaluating, please wait...
Accuracy
 0.89
Confusion matrix
 [[105   1   8   1]
 [  2  34   2   0]
 [  1   1  28   1]
 [  1   2   2  11]]
Classification report
               precision    recall  f1-score   support
           0       0.96      0.91      0.94       115
           1       0.89      0.89      0.89        38
           2       0.70      0.90      0.79        31
           3       0.85      0.69      0.76        16
    accuracy                           0.89       200
   macro avg       0.85      0.85      0.84       200
weighted avg       0.90      0.89      0.89       200
Algorithm finished...
Elapsed time: 2.2403738498687744 seconds

(None, None, None)

6. Multinomial naive Bayes

mnb = MultinomialNB()

result = get_text_classification(mnb, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
MultinomialNB(alpha=1.0, class_prior=None, fit_prior=True)
Predicting, please wait...
[1 1 2 1 3 3 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 2 0 2 3 3 3 0 0 0 0 3 3 2 3 0 3 2 3 3 3 3 3 0 0 0 3 2 2 2 2 2 2 2 2 2 2 0 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2]
Evaluating, please wait...
Accuracy
 0.905
Confusion matrix
 [[108   1   2   4]
 [  0  33   3   2]
 [  1   0  30   0]
 [  4   0   2  10]]
Classification report
               precision    recall  f1-score   support
           0       0.96      0.94      0.95       115
           1       0.97      0.87      0.92        38
           2       0.81      0.97      0.88        31
           3       0.62      0.62      0.62        16
    accuracy                           0.91       200
   macro avg       0.84      0.85      0.84       200
weighted avg       0.91      0.91      0.91       200
Algorithm finished...
Elapsed time: 0.5864312648773193 seconds

(None, None, None)

7. Logistic regression

lgr = LogisticRegression()

result = get_text_classification(lgr, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True, intercept_scaling=1, l1_ratio=None, max_iter=100, multi_class='warn', n_jobs=None, penalty='l2', random_state=None, solver='warn', tol=0.0001, verbose=0, warm_start=False)
Predicting, please wait...
[1 1 2 1 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 2 0 0 0 1 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 1 0 2 0 0 0 1 2 0 0 0 0 0 3 2 3 2 3 3 3 3 3 0 3 0 0 0 3 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 1 2 2 2 2 2 2 1 0 2 2 2]
Evaluating, please wait...
Accuracy
 0.89
Confusion matrix
 [[105   3   7   0]
 [  0  36   1   1]
 [  1   2  28   0]
 [  5   0   2   9]]
Classification report
               precision    recall  f1-score   support
           0       0.95      0.91      0.93       115
           1       0.88      0.95      0.91        38
           2       0.74      0.90      0.81        31
           3       0.90      0.56      0.69        16
    accuracy                           0.89       200
   macro avg       0.87      0.83      0.84       200
weighted avg       0.90      0.89      0.89       200
Algorithm finished...
Elapsed time: 0.7747480869293213 seconds

(None, None, None)

8. Support vector machine

svc = svm.SVC()

result = get_text_classification(svc, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0, decision_function_shape='ovr', degree=3, gamma='auto_deprecated', kernel='rbf', max_iter=-1, probability=False, random_state=None, shrinking=True, tol=0.001, verbose=False)
Predicting, please wait...
[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
Evaluating, please wait...
Accuracy
 0.575
Confusion matrix
 [[115   0   0   0]
 [ 38   0   0   0]
 [ 31   0   0   0]
 [ 16   0   0   0]]
Classification report
               precision    recall  f1-score   support
           0       0.57      1.00      0.73       115
           1       0.00      0.00      0.00        38
           2       0.00      0.00      0.00        31
           3       0.00      0.00      0.00        16
    accuracy                           0.57       200
   macro avg       0.14      0.25      0.18       200
weighted avg       0.33      0.57      0.42       200
Algorithm finished...
Elapsed time: 401.34070205688477 seconds

(None, None, None)
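
In the run above the SVC predicts class 0 for all 200 test documents, so its accuracy of 0.575 is simply the share of the largest class (115 of 200). One plausible cause, offered here as an assumption rather than a diagnosis, is the RBF kernel combined with the old default gamma of 1 / n_features: with 23,732 features the kernel value stays close to 1 for any pair of documents, so the model can hardly tell them apart. The squared distances in the sketch are made-up illustrative numbers.

```python
import math

# Test-set class counts taken from the support column of the report.
support = {0: 115, 1: 38, 2: 31, 3: 16}
majority_baseline = max(support.values()) / sum(support.values())
print(majority_baseline)  # 0.575

n_features = 23732
gamma_auto = 1 / n_features  # assumed meaning of the old 'auto' default


def rbf(squared_distance, gamma):
    """RBF kernel value exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * squared_distance)


# Hypothetical squared distances between two count vectors.
for d2 in (10, 100, 1000):
    print(d2, round(rbf(d2, gamma_auto), 4))
```

Trying a linear kernel or a different gamma would be natural things to check, though the article does not test them.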

VIII. Ensemble models

1. Random forest

rfc = RandomForestClassifier()

result = get_text_classification(rfc, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini', max_depth=None, max_features='auto', max_leaf_nodes=None, min_impurity_decrease=0.0, min_impurity_split=None, min_samples_leaf=1, min_samples_split=2, min_weight_fraction_leaf=0.0, n_estimators=10, n_jobs=None, oob_score=False, random_state=None, verbose=0, warm_start=False)
Predicting, please wait...
[1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 1 0 0 0 0 2 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 2 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 2 2 1 2 1 1 1 2 1 0 0 0 0 0 1 2 2 3 2 3 3 2 3 3 0 3 2 2 2 3 2 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 0 2 2 1 2 2 3 0 0 2 0 2 2 2 2]
Evaluating, please wait...
Accuracy
 0.82
Confusion matrix
 [[97  6 12  0]
 [ 1 36  1  0]
 [ 4  2 24  1]
 [ 1  1  7  7]]
Classification report
               precision    recall  f1-score   support
           0       0.94      0.84      0.89       115
           1       0.80      0.95      0.87        38
           2       0.55      0.77      0.64        31
           3       0.88      0.44      0.58        16
    accuracy                           0.82       200
   macro avg       0.79      0.75      0.75       200
weighted avg       0.85      0.82      0.82       200
Algorithm finished...
Elapsed time: 4.107015609741211 seconds

(None, None, None)

2. AdaBoost (adaptive boosting)

abc = AdaBoostClassifier()

result = get_text_classification(abc, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
AdaBoostClassifier(algorithm='SAMME.R', base_estimator=None, learning_rate=1.0, n_estimators=50, random_state=None)
Predicting, please wait...
[1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 1 0 0 1 0 1 1 0 1 1 0 0 0 1 1 0 0 0 1 1 1 0 1 0 1 0 1 1 1 1 1 0 1 0 0 0 1 1 0 1 1 1 0 1 0 1 1 1 0 1 1 1 0 1 1 1 0 1 0 0 0 0 0 0 0 1 0 0 0 1 1 1 0 0 0 1 1 0 1 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 1 3 1 3 1 3 3 3 3 3 1 3 1 1 1 3 2 1 2 1 2 2 0 2 2 1 1 1 1 1 1 3 3 1 1 1 1 2 3 0 1 2 1 1 1 1 2]
Evaluating, please wait...
Accuracy
 0.57
Confusion matrix
 [[59 56  0  0]
 [ 1 37  0  0]
 [ 2 17  9  3]
 [ 0  7  0  9]]
Classification report
               precision    recall  f1-score   support
           0       0.95      0.51      0.67       115
           1       0.32      0.97      0.48        38
           2       1.00      0.29      0.45        31
           3       0.75      0.56      0.64        16
    accuracy                           0.57       200
   macro avg       0.75      0.58      0.56       200
weighted avg       0.82      0.57      0.60       200
Algorithm finished...
Elapsed time: 94.61701512336731 seconds

(None, None, None)

3. LightGBM

gbm = lightgbm.LGBMClassifier()

result = get_text_classification(gbm, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
LGBMClassifier(boosting_type='gbdt', class_weight=None, colsample_bytree=1.0, importance_type='split', learning_rate=0.1, max_depth=-1, min_child_samples=20, min_child_weight=0.001, min_split_gain=0.0, n_estimators=100, n_jobs=-1, num_leaves=31, objective=None, random_state=None, reg_alpha=0.0, reg_lambda=0.0, silent=True, subsample=1.0, subsample_for_bin=200000, subsample_freq=0)
Predicting, please wait...
[1 2 1 1 3 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 3 1 2 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 2 2 0 0 0 2 0 0 0 0 2 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 0 0 0 0 0 2 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3 2 3 1 2 0 1 2 2 1 2 0 0 0 0 1 2 2 3 2 3 3 2 3 3 0 3 2 2 2 3 2 2 2 2 2 2 0 2 2 2 3 3 2 2 2 2 2 2 2 1 2 2 3 2 0 2 1 0 2 1 2]
Evaluating, please wait...
Accuracy
 0.785
Confusion matrix
 [[94  6 13  2]
 [ 0 34  2  2]
 [ 3  3 22  3]
 [ 1  1  7  7]]
Classification report
               precision    recall  f1-score   support
           0       0.96      0.82      0.88       115
           1       0.77      0.89      0.83        38
           2       0.50      0.71      0.59        31
           3       0.50      0.44      0.47        16
    accuracy                           0.79       200
   macro avg       0.68      0.71      0.69       200
weighted avg       0.82      0.79      0.79       200
Algorithm finished...
Elapsed time: 1.754338264465332 seconds

(None, None, None)

4. XGBoost

xgb = xgboost.XGBClassifier()

result = get_text_classification(xgb, X_train_count, y_train_le, X_test_count, y_test_le)

estimator_list.append(result[1]), score_list.append(result[2]), time_list.append(result[3])

Starting the algorithm, please wait...
Training, please wait...
XGBClassifier(base_score=0.5, booster='gbtree', colsample_bylevel=1, colsample_bynode=1, colsample_bytree=1, gamma=0, learning_rate=0.1, max_delta_step=0, max_depth=3, min_child_weight=1, missing=None, n_estimators=100, n_jobs=1, nthread=None, objective='multi:softprob', random_state=0, reg_alpha=0, reg_lambda=1, scale_pos_weight=1, seed=None, silent=None, subsample=1, verbosity=1)
Predicting, please wait...
[1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 1 0 1 1 0 0 0 1 0 0 0 0 0 1 1 0 1 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 0 1 1 0 0 1 1 0 0 0 1 0 1 0 0 0 0 0 0 0 1 0 0 0 1 0 1 0 1 0 0 1 0 1 0 0 0 0 0 0 0 1 1 0 1 1 1 1 1 1 0 0 0 0 1 3 1 3 0 3 3 3 3 3 0 3 1 1 1 3 2 2 2 1 2 2 2 2 2 1 1 2 2 2 2 3 1 1 2 1 1 2 3 2 0 2 1 1 1 1 2]
Evaluating, please wait...
Accuracy
 0.735
Confusion matrix
 [[83 32  0  0]
 [ 0 38  0  0]
 [ 1 11 17  2]
 [ 2  5  0  9]]
Classification report
               precision    recall  f1-score   support
           0       0.97      0.72      0.83       115
           1       0.44      1.00      0.61        38
           2       1.00      0.55      0.71        31
           3       0.82      0.56      0.67        16
    accuracy                           0.73       200
   macro avg       0.81      0.71      0.70       200
weighted avg       0.86      0.73      0.75       200
Algorithm finished...
Elapsed time: 974.3143627643585 seconds

(None, None, None)

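IX. Deep learning

1. Feedforward neural network

Keras is likewise a high-level wrapper API; a brief introduction follows.

1) Workflow

Create the network → add layers → compile the network → train the network → predict → evaluate performance → save the model

2) Adding layers

A network needs at least two layers: the first must be the input layer and the last must be the output layer.

The input layer mainly sets the input dimension, while the activation function of the last layer indicates whether the task is classification or regression.

3) Compiling the network

For classification, the metric is usually accuracy.

For regression, the metric is usually mae (mean absolute error).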
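
Since the output activation is what marks the task type, it may help to see how the two common choices differ. The plain-Python sketch below (not Keras code; the logits are made-up scores for the four classes) contrasts softmax, the usual pairing with categorical cross-entropy for single-label multi-class problems, with an element-wise sigmoid.

```python
import math


def softmax(logits):
    """Normalize scores into probabilities that sum to 1 across classes."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(logits):
    """Squash each score independently into (0, 1)."""
    return [1 / (1 + math.exp(-z)) for z in logits]


logits = [2.0, 1.0, 0.1, -1.0]
soft = softmax(logits)
sig = sigmoid(logits)

print(sum(soft))  # 1.0 up to rounding: one distribution over the classes
print(sum(sig))   # not constrained to 1: four independent scores
```

Both functions rank the classes the same way here, so predicted labels can coincide; the difference shows up in how the outputs are interpreted and in how the loss is computed.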

start = time.time()

# --------------------------------

# np.random.seed(0) # set the random seed

feature_num = X_train_count.shape[1] # number of input features (vocabulary size)

# ---------------------------------

# One-hot encode the target vector to create the target matrix

y_train_cate = to_categorical(y_train_le)

y_test_cate = to_categorical(y_test_le)

print(y_train_cate)

[[0. 1. 0. 0.]

[0. 1. 0. 0.]

[0. 1. 0. 0.]

...

[0. 0. 1. 0.]

[0. 0. 1. 0.]

[0. 0. 1. 0.]]
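
In the matrix printed above, the leading rows `[0. 1. 0. 0.]` correspond to label 1 and the trailing rows `[0. 0. 1. 0.]` to label 2. A plain-Python sketch of the same one-hot idea follows; `one_hot` is an illustrative helper, not the Keras function.

```python
def one_hot(labels, num_classes=None):
    """Return one row per label with a single 1.0 at the label's index."""
    if num_classes is None:
        num_classes = max(labels) + 1
    return [[1.0 if j == k else 0.0 for j in range(num_classes)] for k in labels]


encoded = one_hot([1, 1, 2, 0, 3])
for row in encoded:
    print(row)
```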

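# 1 Create the network
network = models.Sequential()

# ----------------------------------------------------
# 2 Add the layers
# The first layer is required and must be the [input layer]
network.add(layers.Dense(  # fully connected layer with relu activation
    units=128,
    activation='relu',
    input_shape=(feature_num, )
))

# Layers between the first and the last are [hidden layers], optional
network.add(layers.Dense(  # fully connected layer with relu activation
    units=128,
    activation='relu'
))
network.add(layers.Dropout(0.8))

# The last layer is required and must be the [output layer]
# Note: this run uses sigmoid; softmax is the usual choice for single-label
# multi-class output with categorical_crossentropy
network.add(layers.Dense(  # fully connected output layer, one unit per class
    units=4,
    activation='sigmoid'
))

# -----------------------------------------------------
# 3 Compile the network
network.compile(loss='categorical_crossentropy',  # categorical cross-entropy loss
                optimizer='rmsprop',
                metrics=['accuracy']  # accuracy metric
                )

# -----------------------------------------------------
# 4 Train the network
history = network.fit(X_train_count,  # training features
                      y_train_cate,  # training labels
                      epochs=20,  # number of epochs
                      batch_size=300,  # samples per batch; can be tuned
                      validation_data=(X_test_count, y_test_cate)  # the test set doubles as validation data
                      )

network.summary()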

Train on 3305 samples, validate on 200 samples

Epoch 1/20

3305/3305 [==============================] - 2s 637us/step - loss: 1.2411 - accuracy: 0.4914 - val_loss: 0.9422 - val_accuracy: 0.7000

Epoch 2/20

3305/3305 [==============================] - 2s 481us/step - loss: 0.8256 - accuracy: 0.7238 - val_loss: 0.6232 - val_accuracy: 0.8400

Epoch 3/20

3305/3305 [==============================] - 1s 447us/step - loss: 0.5162 - accuracy: 0.8805 - val_loss: 0.4481 - val_accuracy: 0.8800

Epoch 4/20

3305/3305 [==============================] - 1s 447us/step - loss: 0.3369 - accuracy: 0.9259 - val_loss: 0.3506 - val_accuracy: 0.9000

Epoch 5/20

3305/3305 [==============================] - 2s 479us/step - loss: 0.2347 - accuracy: 0.9537 - val_loss: 0.2831 - val_accuracy: 0.9050

Epoch 6/20

300/3305 [=>............................] - ETA: 2s - loss: 0.1655 - accuracy: 0.9700

600/3305 [====>.........................] - ETA: 2s - loss: 0.1610 - accuracy: 0.9733

900/3305 [=======>......................] - ETA: 1s - loss: 0.1643 - accuracy: 0.9767

1200/3305 [=========>....................] - ETA: 1s - loss: 0.1644 - accuracy: 0.9758

1500/3305 [============>.................] - ETA: 1s - loss: 0.1683 - accuracy: 0.9740

1800/3305 [===============>..............] - ETA: 0s - loss: 0.1602 - accuracy: 0.9767

2100/3305 [==================>...........] - ETA: 0s - loss: 0.1545 - accuracy: 0.9781

2400/3305 [====================>.........] - ETA: 0s - loss: 0.1519 - accuracy: 0.9787

2700/3305 [=======================>......] - ETA: 0s - loss: 0.1523 - accuracy: 0.9774

3000/3305 [==========================>...] - ETA: 0s - loss: 0.1515 - accuracy: 0.9777

3300/3305 [============================>.] - ETA: 0s - loss: 0.1510 - accuracy: 0.9773

3305/3305 [==============================] - 2s 524us/step - loss: 0.1511 - accuracy: 0.9773 - val_loss: 0.2449 - val_accuracy: 0.9050

Epoch 7/20

300/3305 [=>............................] - ETA: 1s - loss: 0.1142 - accuracy: 0.9933

600/3305 [====>.........................] - ETA: 1s - loss: 0.1093 - accuracy: 0.9850

900/3305 [=======>......................] - ETA: 0s - loss: 0.1182 - accuracy: 0.9767

1200/3305 [=========>....................] - ETA: 0s - loss: 0.1182 - accuracy: 0.9775

1500/3305 [============>.................] - ETA: 0s - loss: 0.1195 - accuracy: 0.9800

1800/3305 [===============>..............] - ETA: 0s - loss: 0.1158 - accuracy: 0.9817

2100/3305 [==================>...........] - ETA: 0s - loss: 0.1146 - accuracy: 0.9819

2400/3305 [====================>.........] - ETA: 0s - loss: 0.1124 - accuracy: 0.9833

2700/3305 [=======================>......] - ETA: 0s - loss: 0.1104 - accuracy: 0.9841

3000/3305 [==========================>...] - ETA: 0s - loss: 0.1078 - accuracy: 0.9847

3300/3305 [============================>.] - ETA: 0s - loss: 0.1078 - accuracy: 0.9842

3305/3305 [==============================] - 1s 447us/step - loss: 0.1076 - accuracy: 0.9843 - val_loss: 0.2377 - val_accuracy: 0.9000

Epoch 8/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0846 - accuracy: 0.9867

600/3305 [====>.........................] - ETA: 1s - loss: 0.0838 - accuracy: 0.9867

900/3305 [=======>......................] - ETA: 1s - loss: 0.0844 - accuracy: 0.9867

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0877 - accuracy: 0.9842

1500/3305 [============>.................] - ETA: 0s - loss: 0.0853 - accuracy: 0.9853

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0852 - accuracy: 0.9856

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0825 - accuracy: 0.9862

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0821 - accuracy: 0.9867

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0807 - accuracy: 0.9874

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0826 - accuracy: 0.9863

3300/3305 [============================>.] - ETA: 0s - loss: 0.0817 - accuracy: 0.9861

3305/3305 [==============================] - 1s 449us/step - loss: 0.0816 - accuracy: 0.9861 - val_loss: 0.2296 - val_accuracy: 0.8850

Epoch 9/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0553 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0574 - accuracy: 0.9950

900/3305 [=======>......................] - ETA: 0s - loss: 0.0586 - accuracy: 0.9944

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0690 - accuracy: 0.9908

1500/3305 [============>.................] - ETA: 0s - loss: 0.0693 - accuracy: 0.9920

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0673 - accuracy: 0.9922

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0657 - accuracy: 0.9924

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0686 - accuracy: 0.9896

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0685 - accuracy: 0.9893

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0670 - accuracy: 0.9897

3300/3305 [============================>.] - ETA: 0s - loss: 0.0660 - accuracy: 0.9897

3305/3305 [==============================] - 1s 448us/step - loss: 0.0659 - accuracy: 0.9897 - val_loss: 0.2290 - val_accuracy: 0.8950

Epoch 10/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0477 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0562 - accuracy: 0.9917

900/3305 [=======>......................] - ETA: 1s - loss: 0.0500 - accuracy: 0.9933

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0517 - accuracy: 0.9908

1500/3305 [============>.................] - ETA: 0s - loss: 0.0490 - accuracy: 0.9927

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0499 - accuracy: 0.9928

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0493 - accuracy: 0.9929

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0488 - accuracy: 0.9925

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0474 - accuracy: 0.9933

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0459 - accuracy: 0.9937

3300/3305 [============================>.] - ETA: 0s - loss: 0.0444 - accuracy: 0.9942

3305/3305 [==============================] - 1s 450us/step - loss: 0.0443 - accuracy: 0.9943 - val_loss: 0.2183 - val_accuracy: 0.8900

Epoch 11/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0316 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0464 - accuracy: 0.9917

900/3305 [=======>......................] - ETA: 0s - loss: 0.0444 - accuracy: 0.9933

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0436 - accuracy: 0.9917

1500/3305 [============>.................] - ETA: 0s - loss: 0.0448 - accuracy: 0.9913

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0431 - accuracy: 0.9917

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0410 - accuracy: 0.9929

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0405 - accuracy: 0.9929

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0393 - accuracy: 0.9933

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0399 - accuracy: 0.9933

3300/3305 [============================>.] - ETA: 0s - loss: 0.0394 - accuracy: 0.9936

3305/3305 [==============================] - 1s 451us/step - loss: 0.0394 - accuracy: 0.9936 - val_loss: 0.2331 - val_accuracy: 0.9000

Epoch 12/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0263 - accuracy: 1.0000

600/3305 [====>.........................] - ETA: 1s - loss: 0.0263 - accuracy: 0.9983

900/3305 [=======>......................] - ETA: 0s - loss: 0.0314 - accuracy: 0.9967

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0312 - accuracy: 0.9967

1500/3305 [============>.................] - ETA: 0s - loss: 0.0286 - accuracy: 0.9973

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0270 - accuracy: 0.9978

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0254 - accuracy: 0.9981

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0251 - accuracy: 0.9983

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0263 - accuracy: 0.9981

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0277 - accuracy: 0.9973

3300/3305 [============================>.] - ETA: 0s - loss: 0.0298 - accuracy: 0.9967

3305/3305 [==============================] - 2s 482us/step - loss: 0.0298 - accuracy: 0.9967 - val_loss: 0.2208 - val_accuracy: 0.8850

Epoch 13/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0254 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0234 - accuracy: 0.9983

900/3305 [=======>......................] - ETA: 1s - loss: 0.0220 - accuracy: 0.9978

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0228 - accuracy: 0.9958

1500/3305 [============>.................] - ETA: 0s - loss: 0.0279 - accuracy: 0.9947

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0269 - accuracy: 0.9950

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0275 - accuracy: 0.9952

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0273 - accuracy: 0.9954

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0277 - accuracy: 0.9956

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0273 - accuracy: 0.9957

3300/3305 [============================>.] - ETA: 0s - loss: 0.0274 - accuracy: 0.9955

3305/3305 [==============================] - 2s 459us/step - loss: 0.0273 - accuracy: 0.9955 - val_loss: 0.2477 - val_accuracy: 0.8950

Epoch 14/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0187 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0249 - accuracy: 0.9950

900/3305 [=======>......................] - ETA: 1s - loss: 0.0240 - accuracy: 0.9956

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0222 - accuracy: 0.9967

1500/3305 [============>.................] - ETA: 0s - loss: 0.0210 - accuracy: 0.9967

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0201 - accuracy: 0.9972

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0203 - accuracy: 0.9971

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0201 - accuracy: 0.9975

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0204 - accuracy: 0.9974

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0205 - accuracy: 0.9970

3300/3305 [============================>.] - ETA: 0s - loss: 0.0204 - accuracy: 0.9970

3305/3305 [==============================] - 2s 455us/step - loss: 0.0204 - accuracy: 0.9970 - val_loss: 0.2115 - val_accuracy: 0.8950

Epoch 15/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0182 - accuracy: 1.0000

600/3305 [====>.........................] - ETA: 1s - loss: 0.0163 - accuracy: 1.0000

900/3305 [=======>......................] - ETA: 0s - loss: 0.0156 - accuracy: 0.9989

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0144 - accuracy: 0.9992

1500/3305 [============>.................] - ETA: 0s - loss: 0.0140 - accuracy: 0.9987

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0145 - accuracy: 0.9983

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0144 - accuracy: 0.9986

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0148 - accuracy: 0.9983

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0142 - accuracy: 0.9985

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0149 - accuracy: 0.9980

3300/3305 [============================>.] - ETA: 0s - loss: 0.0154 - accuracy: 0.9973

3305/3305 [==============================] - 1s 450us/step - loss: 0.0154 - accuracy: 0.9973 - val_loss: 0.2775 - val_accuracy: 0.8950

Epoch 16/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0110 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0140 - accuracy: 0.9967

900/3305 [=======>......................] - ETA: 1s - loss: 0.0123 - accuracy: 0.9978

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0127 - accuracy: 0.9983

1500/3305 [============>.................] - ETA: 0s - loss: 0.0137 - accuracy: 0.9973

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0147 - accuracy: 0.9972

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0157 - accuracy: 0.9967

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0157 - accuracy: 0.9967

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0152 - accuracy: 0.9963

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0149 - accuracy: 0.9963

3300/3305 [============================>.] - ETA: 0s - loss: 0.0149 - accuracy: 0.9964

3305/3305 [==============================] - 1s 453us/step - loss: 0.0149 - accuracy: 0.9964 - val_loss: 0.2708 - val_accuracy: 0.9000

Epoch 17/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0140 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0155 - accuracy: 0.9967

900/3305 [=======>......................] - ETA: 1s - loss: 0.0147 - accuracy: 0.9978

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0130 - accuracy: 0.9983

1500/3305 [============>.................] - ETA: 0s - loss: 0.0132 - accuracy: 0.9980

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0129 - accuracy: 0.9978

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0137 - accuracy: 0.9976

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0132 - accuracy: 0.9979

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0147 - accuracy: 0.9970

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0142 - accuracy: 0.9973

3300/3305 [============================>.] - ETA: 0s - loss: 0.0145 - accuracy: 0.9973

3305/3305 [==============================] - 1s 452us/step - loss: 0.0145 - accuracy: 0.9973 - val_loss: 0.2197 - val_accuracy: 0.9100

Epoch 18/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0116 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0132 - accuracy: 0.9950

900/3305 [=======>......................] - ETA: 1s - loss: 0.0123 - accuracy: 0.9967

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0129 - accuracy: 0.9975

1500/3305 [============>.................] - ETA: 0s - loss: 0.0137 - accuracy: 0.9973

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0125 - accuracy: 0.9978

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0123 - accuracy: 0.9976

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0150 - accuracy: 0.9967

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0167 - accuracy: 0.9963

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0162 - accuracy: 0.9967

3300/3305 [============================>.] - ETA: 0s - loss: 0.0156 - accuracy: 0.9970

3305/3305 [==============================] - 2s 469us/step - loss: 0.0156 - accuracy: 0.9970 - val_loss: 0.2645 - val_accuracy: 0.9000

Epoch 19/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0057 - accuracy: 1.0000

600/3305 [====>.........................] - ETA: 1s - loss: 0.0052 - accuracy: 1.0000

900/3305 [=======>......................] - ETA: 1s - loss: 0.0072 - accuracy: 0.9989

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0078 - accuracy: 0.9992

1500/3305 [============>.................] - ETA: 0s - loss: 0.0081 - accuracy: 0.9993

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0083 - accuracy: 0.9994

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0119 - accuracy: 0.9986

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0136 - accuracy: 0.9979

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0127 - accuracy: 0.9981

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0142 - accuracy: 0.9980

3300/3305 [============================>.] - ETA: 0s - loss: 0.0144 - accuracy: 0.9976

3305/3305 [==============================] - 2s 466us/step - loss: 0.0144 - accuracy: 0.9976 - val_loss: 0.2941 - val_accuracy: 0.9000

Epoch 20/20

300/3305 [=>............................] - ETA: 1s - loss: 0.0105 - accuracy: 0.9967

600/3305 [====>.........................] - ETA: 1s - loss: 0.0172 - accuracy: 0.9933

900/3305 [=======>......................] - ETA: 1s - loss: 0.0156 - accuracy: 0.9933

1200/3305 [=========>....................] - ETA: 0s - loss: 0.0138 - accuracy: 0.9950

1500/3305 [============>.................] - ETA: 0s - loss: 0.0128 - accuracy: 0.9960

1800/3305 [===============>..............] - ETA: 0s - loss: 0.0120 - accuracy: 0.9961

2100/3305 [==================>...........] - ETA: 0s - loss: 0.0131 - accuracy: 0.9962

2400/3305 [====================>.........] - ETA: 0s - loss: 0.0126 - accuracy: 0.9967

2700/3305 [=======================>......] - ETA: 0s - loss: 0.0142 - accuracy: 0.9959

3000/3305 [==========================>...] - ETA: 0s - loss: 0.0132 - accuracy: 0.9963

3300/3305 [============================>.] - ETA: 0s - loss: 0.0125 - accuracy: 0.9967

3305/3305 [==============================] - 2s 456us/step - loss: 0.0125 - accuracy: 0.9967 - val_loss: 0.3149 - val_accuracy: 0.8950

Model: "sequential_2"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| dense_1 (Dense) | (None, 128) | 3037824 |
| dense_2 (Dense) | (None, 128) | 16512 |
| dropout_1 (Dropout) | (None, 128) | 0 |
| dense_3 (Dense) | (None, 4) | 516 |

Total params: 3,054,852

Trainable params: 3,054,852

Non-trainable params: 0
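The parameter counts in the summary can be checked by hand: a Dense layer with `n` inputs and `u` units has `(n + 1) * u` parameters (one weight per input per unit, plus one bias per unit). The sketch below reproduces the table under one assumption: the input width of 23,732 features is not printed in this section and is inferred here from the `dense_1` count.

```python
def dense_params(n_inputs: int, units: int) -> int:
    """Weights (n_inputs * units) plus one bias per unit."""
    return (n_inputs + 1) * units

# Assumption: the input width is inferred from dense_1's parameter count
# (3,037,824 parameters across 128 units); it is not stated in the source.
n_features = 3_037_824 // 128 - 1

layer_params = [
    dense_params(n_features, 128),  # dense_1
    dense_params(128, 128),         # dense_2
    0,                              # dropout_1 has no trainable weights
    dense_params(128, 4),           # dense_3
]
print(n_features, layer_params, sum(layer_params))
```

Almost all of the roughly 3 million parameters sit in the first layer, which is a direct consequence of feeding the full bag-of-words vector into the network.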

# 5 Predict with the model

y_pred_keras = network.predict(X_test_count)

# 6 Evaluate performance

print('>>>Performance of the multi-class feedforward neural network...\n')

score = network.evaluate(X_test_count, y_test_cate, batch_size=32)

print('\n>>>Score\n', score)

print()

end = time.time()

estimator_list.append('Feedforward network')

score_list.append(score[1])  # score is [loss, accuracy]

time_list.append(round(end-start, 2))

Performance of the multi-class feedforward neural network...

32/200 [===>..........................] - ETA: 0s

128/200 [==================>...........] - ETA: 0s

200/200 [==============================] - 0s 529us/step

Score

[0.31489723950624465, 0.8949999809265137]
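The bookkeeping used for each model (take a start time, run the model, then append the name, score, and elapsed seconds to three lists) can be wrapped in a small helper. This is a minimal sketch, not the author's code: `record_result` and the dummy callable are hypothetical, and `time.perf_counter` is swapped in for `time.time` because it is better suited to measuring intervals.

```python
import time

estimator_list, score_list, time_list = [], [], []

def record_result(name, evaluate):
    """Run evaluate(), then store the name, its score, and the elapsed seconds."""
    start = time.perf_counter()
    score = evaluate()
    elapsed = round(time.perf_counter() - start, 2)
    estimator_list.append(name)
    score_list.append(score)
    time_list.append(elapsed)
    return score

# Stand-in for a real callable such as:
#   lambda: network.evaluate(X_test_count, y_test_cate, batch_size=32)[1]
record_result('dummy model', lambda: 0.895)
print(estimator_list, score_list, time_list)
```

To stay comparable with the timings reported in the article, the callable would need to cover the same work as the original timer, which appears to start before training rather than before evaluation.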

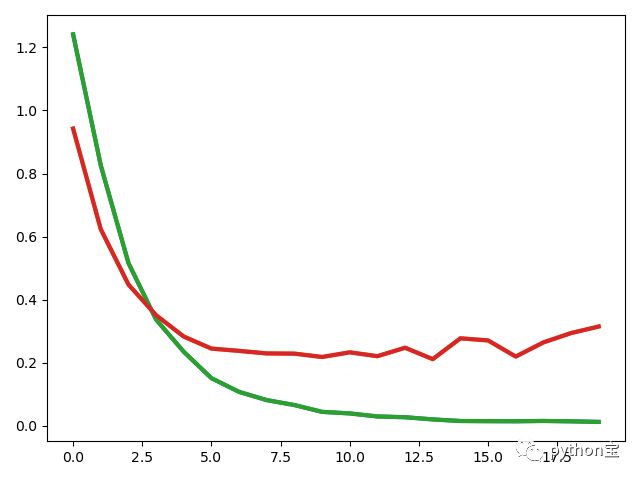

# Loss curves

import matplotlib.pyplot as plt

train_loss = history.history["loss"]

valid_loss = history.history["val_loss"]

epochs = range(len(train_loss))

plt.plot(epochs, train_loss, linewidth=3.0, label='training loss')

plt.plot(epochs, valid_loss, linewidth=3.0, label='validation loss')

plt.legend()

plt.show()

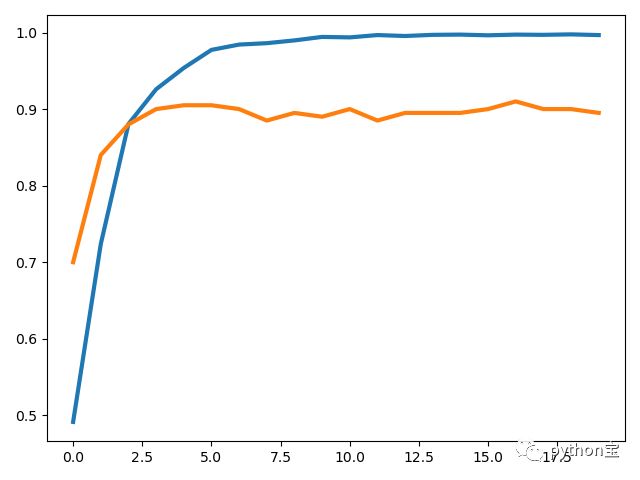

# Accuracy curves

train_acc = history.history['accuracy']

valid_acc = history.history['val_accuracy']

epochs = range(len(train_acc))

plt.plot(epochs, train_acc, linewidth=3.0, label='training accuracy')

plt.plot(epochs, valid_acc, linewidth=3.0, label='validation accuracy')

plt.legend()

plt.show()
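The curves can also be read numerically. The sketch below copies the per-epoch validation metrics for epochs 4 to 20 from the training log and picks out the epochs with the lowest validation loss and the highest validation accuracy. Earlier epochs are left out because they are not part of this section, and the dictionary names are illustrative.

```python
# Validation metrics for epochs 4-20, copied from the training log.
val_loss = {
    4: 0.3506, 5: 0.2831, 6: 0.2449, 7: 0.2377, 8: 0.2296, 9: 0.2290,
    10: 0.2183, 11: 0.2331, 12: 0.2208, 13: 0.2477, 14: 0.2115, 15: 0.2775,
    16: 0.2708, 17: 0.2197, 18: 0.2645, 19: 0.2941, 20: 0.3149,
}
val_acc = {
    4: 0.9000, 5: 0.9050, 6: 0.9050, 7: 0.9000, 8: 0.8850, 9: 0.8950,
    10: 0.8900, 11: 0.9000, 12: 0.8850, 13: 0.8950, 14: 0.8950, 15: 0.8950,
    16: 0.9000, 17: 0.9100, 18: 0.9000, 19: 0.9000, 20: 0.8950,
}

best_loss_epoch = min(val_loss, key=val_loss.get)  # epoch with the lowest val_loss
best_acc_epoch = max(val_acc, key=val_acc.get)     # epoch with the highest val_accuracy

print('lowest val_loss:', best_loss_epoch, val_loss[best_loss_epoch])
print('highest val_accuracy:', best_acc_epoch, val_acc[best_acc_epoch])
```

Training loss keeps falling through epoch 20 (0.0125) while validation loss climbs from 0.2115 at epoch 14 to 0.3149 at epoch 20, which suggests the network is overfitting in the later epochs. One common remedy, not used in the article, is Keras's `EarlyStopping` callback monitoring `val_loss`.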

# 7 Save / load the model

model_path = 'D:\\A\\AI-master\\py-data\\text_classification-master\\my_network_model.h5'

# Save

print('\n>>>Saving the model, please wait...\n')

network.save(model_path)

print('>>>Model saved...\n')

# Load and use

print('>>>Loading the trained model, please wait...\n')

my_load_model = models.load_model(model_path)

print('>>>Predicting with the loaded model, please wait...\n')

print('>>>First 20 predictions:')

my_load_model.predict(X_test_count)[:20]

Saving the model, please wait...

Model saved...

Loading the trained model, please wait...

W0401 16:51:40.653452 2520 nn_ops.py:4372] Large dropout rate: 0.8 (>0.5). In TensorFlow 2.x, dropout() uses dropout rate instead of keep_prob. Please ensure that this is intended.

Predicting with the loaded model, please wait...

First 20 predictions:

array([[6.5150915e-14, 9.9956208e-01, 7.6179704e-15, 5.9075337e-14],

[1.3438159e-07, 7.5693017e-01, 6.7286510e-06, 1.0690396e-06],

[1.1479392e-05, 1.3596797e-03, 5.2056019e-04, 3.6293927e-06],

[1.7625215e-08, 9.9138886e-01, 1.0188694e-08, 2.5233907e-08],

[3.5257504e-04, 8.8972389e-04, 9.2386857e-05, 4.8687063e-02],

[2.7085954e-04, 7.7684097e-02, 8.9289460e-05, 1.2786119e-04],

[5.2730764e-13, 9.9920541e-01, 1.9166842e-11, 8.2062039e-12],

[1.2135930e-13, 9.9911600e-01, 6.6255135e-12, 1.3244053e-12],

[2.1020567e-11, 3.2926968e-01, 2.7211988e-10, 5.5204083e-12],

[2.6995275e-14, 9.9954861e-01, 8.7633069e-13, 2.9740466e-12],

[2.0245436e-13, 9.5068341e-01, 2.3346332e-11, 1.7555527e-12],

[9.6436650e-13, 3.0095114e-05, 6.6907599e-09, 2.0117261e-16],

[1.5413009e-11, 9.9399120e-01, 1.2802791e-11, 1.5615023e-11],

[1.5962692e-25, 9.9999988e-01, 1.4920251e-24, 4.1572804e-24],

[8.9582224e-11, 9.9145550e-01, 2.9633193e-10, 3.9553510e-10],

[8.1094669e-09, 1.9613329e-01, 1.3441264e-06, 1.8306956e-08],

[1.6764140e-11, 9.9539572e-01, 1.2220336e-10, 1.5574391e-11],

[1.9227930e-06, 8.0996698e-01, 4.0275991e-05, 7.4675190e-06],

[1.1718333e-05, 2.7493445e-02, 3.0977117e-05, 1.2554987e-06],

[1.0690468e-10, 9.9689734e-01, 3.5567584e-09, 3.6802743e-09]],

dtype=float32)
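Each row of this output holds one score per class, so the predicted class is the column with the largest value. The sketch below applies that rule to the first five rows. The `argmax` helper and the placeholder class names are hypothetical; in the article's pipeline the fitted `LabelEncoder` would map indices back to label names through `inverse_transform`.

```python
# First five prediction rows, copied from the output.
probs = [
    [6.5150915e-14, 9.9956208e-01, 7.6179704e-15, 5.9075337e-14],
    [1.3438159e-07, 7.5693017e-01, 6.7286510e-06, 1.0690396e-06],
    [1.1479392e-05, 1.3596797e-03, 5.2056019e-04, 3.6293927e-06],
    [1.7625215e-08, 9.9138886e-01, 1.0188694e-08, 2.5233907e-08],
    [3.5257504e-04, 8.8972389e-04, 9.2386857e-05, 4.8687063e-02],
]

def argmax(row):
    """Index of the largest value in a row."""
    return max(range(len(row)), key=row.__getitem__)

# Hypothetical placeholders; the real names come from the fitted LabelEncoder.
class_names = ['class_0', 'class_1', 'class_2', 'class_3']

pred_idx = [argmax(row) for row in probs]
pred_labels = [class_names[i] for i in pred_idx]
print(pred_idx, pred_labels)
```

The rows do not sum to 1 (the third row sums to less than 0.002), which would be consistent with independent per-class activations such as sigmoid rather than a softmax output. The argmax rule still yields a single class in either case.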

2. LSTM neural network

# Set the number of features

feature_num = X_train_count.shape[1]

# One-hot encode the target labels

y_train_cate = to_categorical(y_train_le)

y_test_cate = to_categorical(y_test_le)

print(y_train_cate)

[[0. 1. 0. 0.] [0. 1. 0. 0.] [0. 1. 0. 0.] ... [0. 0. 1. 0.] [0. 0. 1. 0.] [0. 0. 1. 0.]]
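One-hot encoding turns each integer label into a vector with a single 1 at the label's index. As a minimal pure-Python sketch of what `to_categorical` produces (the `one_hot` helper is illustrative and not part of Keras):

```python
def one_hot(labels, num_classes=None):
    """Return one row per label, with 1.0 at the label's index and 0.0 elsewhere."""
    if num_classes is None:
        num_classes = max(labels) + 1  # infer the width from the largest label
    return [[1.0 if i == y else 0.0 for i in range(num_classes)] for y in labels]

encoded = one_hot([1, 1, 2], num_classes=4)
print(encoded)
```

With four classes, label 1 becomes `[0, 1, 0, 0]` and label 2 becomes `[0, 0, 1, 0]`, matching the rows printed for `y_train_cate`.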

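# 1 Create the neural network

lstm_network = models.Sequential()

# ----------------------------------------------

# 2 Add the layers

# Embedding layer. Caveat: Embedding expects sequences of integer token indices, while X_train_count holds per-word counts, so each row is read as a sequence of feature_num "indices" and training is very slow

lstm_network.add(layers.Embedding(input_dim=feature_num, output_dim=4))

lstm_network.add(layers.LSTM(units=128)) # LSTM layer with 128 units

# Fully connected output layer; softmax because each document belongs to exactly one of the 4 classes

lstm_network.add(layers.Dense(units=4, activation='softmax'))

# ----------------------------------------------

# 3 Compile the network

lstm_network.compile(loss='categorical_crossentropy', optimizer='Adam', metrics=['accuracy'])

# ----------------------------------------------

# 4 Train the model

lstm_network.fit(X_train_count, y_train_cate, epochs=5, batch_size=128, validation_data=(X_test_count, y_test_cate))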
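An Embedding layer is designed for input where each sample is a sequence of integer token indices, not a vector of word counts. The sketch below shows one way to build such padded index sequences from tokenized documents in pure Python. All names (`build_vocab`, `to_padded_sequences`, `PAD`, `UNK`) are hypothetical, the tokens are English stand-ins for jieba output, and padding is applied at the end of each sequence.

```python
from collections import Counter

PAD, UNK = 0, 1  # reserved indices: padding and out-of-vocabulary tokens

def build_vocab(tokenized_docs, max_words=None):
    """Map each token to an integer index, most frequent tokens first."""
    counts = Counter(tok for doc in tokenized_docs for tok in doc)
    return {tok: i + 2 for i, (tok, _) in enumerate(counts.most_common(max_words))}

def to_padded_sequences(tokenized_docs, vocab, max_len):
    """Convert tokens to indices, truncate to max_len, and pad with PAD at the end."""
    sequences = []
    for doc in tokenized_docs:
        ids = [vocab.get(tok, UNK) for tok in doc][:max_len]
        sequences.append(ids + [PAD] * (max_len - len(ids)))
    return sequences

train_docs = [['basketball', 'match', 'exciting'], ['campus', 'match']]
vocab = build_vocab(train_docs)

# The last document contains a token that was never seen in training.
X_seq = to_padded_sequences(train_docs + [['novel']], vocab, max_len=4)
print(vocab)
print(X_seq)
```

Keras ships its own utilities for this step (`Tokenizer` and `pad_sequences`). With index sequences of a few hundred tokens, `input_dim` would be the vocabulary size plus the reserved indices, and the LSTM would process far shorter sequences than the full vocabulary width.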

10. Performance comparison of the algorithms

df = pd.DataFrame()

df['Classifier'] = estimator_list

df['Accuracy'] = score_list

df['Time (s)'] = time_list

df

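| | Classifier | Accuracy | Time (s) |
| --- | --- | --- | --- |
| 0 | KNeighborsClassifier | 0.665 | 28.50 |
| 1 | DecisionTreeClassifier | 0.735 | 21.13 |
| 2 | MLPClassifier | 0.860 | 327.06 |
| 3 | BernoulliNB | 0.785 | 1.15 |
| 4 | GaussianNB | 0.890 | 1.97 |
| 5 | MultinomialNB | 0.905 | 0.54 |
| 6 | LogisticRegression | 0.890 | 0.74 |
| 7 | SVC | 0.575 | 389.03 |
| 8 | RandomForestClassifier | 0.820 | 3.95 |
| 9 | AdaBoostClassifier | 0.570 | 96.50 |
| 10 | LGBMClassifier | 0.785 | 2.01 |
| 11 | XGBClassifier | 0.735 | 984.70 |
| 12 | Feedforward network | 0.905 | 31.13 |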

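Looking at both accuracy and elapsed time in the DataFrame above, with the same training and test sets and every classifier left at its default parameters (no tuning), the results are as follows:

Best overall: MultinomialNB (multinomial naive Bayes), with 90.5% accuracy in only 0.54 s. The feedforward network matches that accuracy (0.905) but needs 31.13 s.

Worst overall: SVC (support vector machine), with an accuracy of only 0.575 and a run time of 389.03 s.

Lowest accuracy: AdaBoostClassifier (adaptive boosting ensemble), at 0.570.

Longest run time: XGBClassifier (gradient boosting ensemble), at 984.70 s.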
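These conclusions can be reproduced directly from the table. The sketch below copies the thirteen rows and ranks them by accuracy (descending), breaking ties by elapsed time (ascending). The tuple layout and variable names are illustrative, and the tie-break rule is one reasonable reading of "best overall", not a definition given in the article.

```python
# (classifier, accuracy, seconds), copied from the comparison table.
results = [
    ('KNeighborsClassifier', 0.665, 28.50),
    ('DecisionTreeClassifier', 0.735, 21.13),
    ('MLPClassifier', 0.860, 327.06),
    ('BernoulliNB', 0.785, 1.15),
    ('GaussianNB', 0.890, 1.97),
    ('MultinomialNB', 0.905, 0.54),
    ('LogisticRegression', 0.890, 0.74),
    ('SVC', 0.575, 389.03),
    ('RandomForestClassifier', 0.820, 3.95),
    ('AdaBoostClassifier', 0.570, 96.50),
    ('LGBMClassifier', 0.785, 2.01),
    ('XGBClassifier', 0.735, 984.70),
    ('Feedforward network', 0.905, 31.13),
]

# Highest accuracy first; among equal accuracies, the faster model wins.
ranked = sorted(results, key=lambda r: (-r[1], r[2]))

lowest_accuracy = min(results, key=lambda r: r[1])
slowest = max(results, key=lambda r: r[2])

for name, acc, secs in ranked[:3]:
    print(f'{name}: accuracy={acc:.3f}, time={secs:.2f}s')
print('lowest accuracy:', lowest_accuracy[0])
print('slowest:', slowest[0])
```

With pandas, the same ranking could be obtained by calling `sort_values` on the DataFrame with both columns and matching sort directions.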


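About Me: 小婷儿

● Author: 小婷儿, focusing on Python, data analysis, data mining, and machine learning, with an emphasis on applying these techniques in practice

● Author's blog: https://blog.csdn.net/u010986753

● The material in this series comes from the author's study notes, partly compiled from online sources; please excuse any infringement or inaccuracy

● All rights reserved. Sharing is welcome; please credit the source when reposting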
