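Statistical Learning Methods (统计学习方法): Solutions to the Chapter 4 Exercises

The Chapter 4 exercises resemble Exercise 1.1, so the two chapters are best read together. I explained maximum likelihood estimation and Bayesian estimation in my solutions to the Chapter 1 exercises, which are worth reading first.

In the Chapter 1 exercise, the Bayesian estimate for the Bernoulli trial used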

Contents

- Code implementation
- Exercise 4.1
- Exercise 4.2
- References

Code implementation

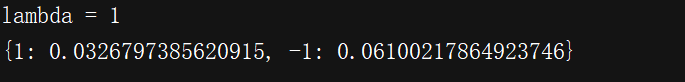

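Before solving the exercises, let us implement Algorithm 4.1 and Algorithm 4.2 from this chapter. Algorithm 4.2 adds smoothing to Algorithm 4.1 (Laplace smoothing when $\lambda=1$); in other words, Algorithm 4.1 is the special case $\lambda=0$ of Algorithm 4.2. The code here implements Algorithm 4.2, using the training data from Example 4.1.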

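    import numpy as np

    def loadData():
        # Training data from Example 4.1, one row per feature.
        # Mixing ints and strings makes NumPy store every entry as a string,
        # so the first feature holds '1', '2', '3'.
        X = np.array([[1,1,1,1,1,2,2,2,2,2,3,3,3,3,3],
                      ['S','M','M','S','S','S','M','M','L','L','L','M','M','L','L']])
        Y = np.array([-1,-1,1,1,-1,-1,-1,1,1,1,1,1,1,1,-1])
        return X, Y

    def Bayes(X, Y, x, Lambda = 0):
        # X: features (one row each), Y: labels, x: the sample to classify
        print("lambda = %d"%Lambda)
        setY = list(set(Y))
        # Prior P(Y = c_k) with smoothing, formula (4.11)
        PY = [(sum(Y == y) + Lambda) / (len(Y) + len(setY) * Lambda) for y in setY]
        resP = {}
        for i in range(len(setY)):
            tempresP = PY[i]
            for j in range(len(x)):
                # Count samples with X^(j) = x[j] and Y = c_k, plus lambda
                count = 0 + Lambda
                for k in range(len(X[j])):
                    if X[j][k] == x[j] and Y[k] == setY[i]:
                        count += 1
                # Conditional probability with smoothing, formula (4.10)
                P = count / (sum(Y == setY[i]) + Lambda * len(set(X[j])))
                tempresP *= P
            resP[setY[i]] = tempresP
        print(resP)
        return resP

    if __name__ == "__main__":
        X, Y = loadData()
        x = ['2', 'S']  # strings, to match the string-typed X
        Bayes(X, Y, x, 0)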
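The same computation can also be written without NumPy and with exact fractions, which makes it easy to compare against a hand calculation for $x=(2,S)$. This is only a minimal sketch, not the implementation above: the function name `naive_bayes_scores` and the parameter `lam` are hypothetical, and `lam` is assumed to be an integer so that the arithmetic stays exact.

```python
from fractions import Fraction

# Training data of Example 4.1: two features per sample, labels in {1, -1}.
X1 = [1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3]
X2 = list("SMMSSSMMLLLMMLL")
Y = [-1, -1, 1, 1, -1, -1, -1, 1, 1, 1, 1, 1, 1, 1, -1]

def naive_bayes_scores(features, labels, x, lam=0):
    """Return {class: P(Y=c) * prod_j P(X_j = x_j | Y=c)} as exact fractions.

    lam is assumed to be an integer (lam=0 gives the maximum likelihood
    estimate, lam=1 gives Laplace smoothing).
    """
    classes = sorted(set(labels))
    n_total = len(labels)
    scores = {}
    for c in classes:
        n_c = sum(1 for y in labels if y == c)
        # Smoothed prior, formula (4.11)
        score = Fraction(n_c + lam, n_total + len(classes) * lam)
        for column, value in zip(features, x):
            s_j = len(set(column))  # number of distinct values of this feature
            m = sum(1 for v, y in zip(column, labels) if v == value and y == c)
            # Smoothed conditional probability, formula (4.10)
            score *= Fraction(m + lam, n_c + s_j * lam)
        scores[c] = score
    return scores

mle = naive_bayes_scores([X1, X2], Y, (2, "S"), lam=0)
smoothed = naive_bayes_scores([X1, X2], Y, (2, "S"), lam=1)
print(mle)       # scores for Y = -1 and Y = 1 with lam = 0
print(smoothed)  # scores for Y = -1 and Y = 1 with lam = 1
```

In both settings the score for $Y=-1$ is the larger one, so the sample is assigned to class $-1$.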

Exercise 4.1

Problem: use maximum likelihood estimation to derive the probability estimation formulas (4.8) and (4.9) of the naive Bayes method.

Formula 4.8

$$P\left(Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)}{N}, \quad k=1,2, \ldots, K$$

where $I$ is the indicator function, equal to 1 when $y_{i}=c_{k}$ and 0 otherwise; it is introduced on page 10 of the book.

Let $P\left(Y=c_{k}\right)=\theta$, and suppose that in $N$ trials $Y=c_{k}$ occurs $n$ times, that is,

$$n=\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)$$

| $P\left(Y=c_{k}\right)$ | $P\left(Y\neq c_{k}\right)$ |
|---|---|
| $\theta$ | $1-\theta$ |

The likelihood function is then $L(\theta)=\theta^{n}\cdot(1-\theta)^{N-n}$

Taking the logarithm gives the log-likelihood $\log L(\theta)=n\cdot\log\theta+(N-n)\cdot\log(1-\theta)$

Differentiating with respect to $\theta$: $\frac{d \log L(\theta)}{d\theta}=\frac{n}{\theta}-\frac{N-n}{1-\theta}$

Setting the derivative to zero gives $n(1-\theta)=(N-n)\theta$, hence $\theta=\frac{n}{N}=\frac{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)}{N}$

Q.E.D.
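As a quick numerical sanity check of the result $\theta=n/N$, the sketch below evaluates the log-likelihood on a grid and picks the maximiser. The counts $n=9$, $N=15$ are the number of samples with $Y=1$ in the Example 4.1 training data; the function name `log_likelihood` and the grid resolution are illustrative choices and do not come from the book.

```python
import math

def log_likelihood(theta, n, N):
    """Bernoulli log-likelihood: n*log(theta) + (N - n)*log(1 - theta)."""
    return n * math.log(theta) + (N - n) * math.log(1 - theta)

n, N = 9, 15  # 9 of the 15 training samples in Example 4.1 have Y = 1

# Evaluate on the grid 0.001, 0.002, ..., 0.999
# (the endpoints are excluded because log(0) is undefined).
grid = [k / 1000 for k in range(1, 1000)]
best_theta = max(grid, key=lambda t: log_likelihood(t, n, N))

print(best_theta, n / N)
```

The grid maximiser coincides with $n/N$ because $n/N=0.6$ happens to lie on the grid; for other counts the two values would agree only up to the grid spacing.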

Formula 4.9

$$P\left(X^{(j)}=a_{jl} \mid Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{jl}, y_{i}=c_{k}\right)}{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)}$$

The proof is similar. Let $P\left(X^{(j)}=a_{jl} \mid Y=c_{k}\right)=\theta$, and suppose that in $N$ trials $Y=c_{k}$ occurs $n$ times, while $Y=c_{k}$ and $X^{(j)}=a_{jl}$ occur together $m$ times, that is,

$$n=\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right), \qquad m=\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{jl}, y_{i}=c_{k}\right)$$

The likelihood function, restricted to the $n$ trials with $Y=c_{k}$, is $L(\theta)=\theta^{m}\cdot(1-\theta)^{n-m}$

Taking the logarithm: $\log L(\theta)=m\cdot\log\theta+(n-m)\cdot\log(1-\theta)$

Differentiating with respect to $\theta$: $\frac{d \log L(\theta)}{d\theta}=\frac{m}{\theta}-\frac{n-m}{1-\theta}$

Setting the derivative to zero gives $m(1-\theta)=(n-m)\theta$, hence $\theta=\frac{m}{n}=\frac{\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{jl}, y_{i}=c_{k}\right)}{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)}$

Q.E.D.
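Setting the derivative to zero only locates a stationary point. One extra step, which the proofs above leave implicit, confirms that it is a maximum: the second derivative of the log-likelihood is negative on the whole interval $(0,1)$.

```latex
\frac{d^{2}}{d\theta^{2}}\log L(\theta)
  = -\frac{m}{\theta^{2}} - \frac{n-m}{(1-\theta)^{2}} < 0
  \qquad \text{for } 0<\theta<1,\ n>0 .
```

The log-likelihood is therefore strictly concave, and when $0<m<n$ the stationary point $\theta=m/n$ is its unique maximiser. The same argument with $(n, N)$ in place of $(m, n)$ covers formula (4.8).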

Exercise 4.2

Problem: use Bayesian estimation to derive the probability estimation formulas (4.10) and (4.11) of the naive Bayes method.

As in Exercise 4.1, suppose $N$ trials are observed, in which $Y=c_{k}$ occurs $n_{k}$ times and, for a given class $c_{k}$, the events $Y=c_{k}$ and $X^{(j)}=a_{jl}$ occur together $m_{l}$ times, that is,

$$n_{k}=\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right), \qquad m_{l}=\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{jl}, y_{i}=c_{k}\right)$$

Formula 4.11

$$P_{\lambda}\left(Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)+\lambda}{N+K \lambda}$$

Let $P_{\lambda}\left(Y=c_{k}\right)=\theta_{k}$ for $k=1,\ldots,K$, and assume that the vector $(\theta_{1},\ldots,\theta_{K})$ follows a Dirichlet prior with parameters $(\alpha_{1},\ldots,\alpha_{K})$, that is,

$$f\left(\theta_{1}, \ldots, \theta_{K} \mid \alpha_{1}, \ldots, \alpha_{K}\right)=\frac{1}{B\left(\alpha_{1}, \ldots, \alpha_{K}\right)} \prod_{k=1}^{K} \theta_{k}^{\alpha_{k}-1}$$

As in maximum likelihood estimation, the likelihood of the observed sample (written $N$ for short) is

$$P\left(N \mid \theta_{1}, \ldots, \theta_{K}\right)=\theta_{1}^{n_{1}} \theta_{2}^{n_{2}} \cdots \theta_{K}^{n_{K}}=\prod_{k=1}^{K} \theta_{k}^{n_{k}}$$

By Bayes' theorem,

$$P\left(\theta_{1}, \ldots, \theta_{K} \mid N\right) \propto P\left(N \mid \theta_{1}, \ldots, \theta_{K}\right) P\left(\theta_{1}, \ldots, \theta_{K}\right) \propto \prod_{k=1}^{K} \theta_{k}^{n_{k}} \prod_{k=1}^{K} \theta_{k}^{\alpha_{k}-1}=\prod_{k=1}^{K} \theta_{k}^{\alpha_{k}+n_{k}-1}$$

so the posterior $P\left(\theta_{1}, \ldots, \theta_{K} \mid N\right)$ is again a Dirichlet distribution, now with parameters $(\alpha_{1}+n_{1},\ldots,\alpha_{K}+n_{K})$.

Take $P_{\lambda}\left(Y=c_{k}\right)$ to be the posterior mean of $\theta_{k}$. Since $\sum_{k=1}^{K} n_{k}=N$, we get $E(\theta_{k})=\frac{n_{k}+\alpha_{k}}{N+\sum_{s=1}^{K} \alpha_{s}}$. If the prior is the symmetric Dirichlet distribution with parameter $\lambda$, that is, $\alpha_{1}=\alpha_{2}=\cdots=\alpha_{K}=\lambda$, then $E(\theta_{k})=\frac{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)+\lambda}{N+K \lambda}$

Q.E.D.
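The posterior-mean formula can be checked by simulation. The sketch below draws samples from the posterior Dirichlet distribution, using the standard construction of normalising independent Gamma variates, and compares the empirical mean with the closed form $(n_{k}+\lambda)/(N+K\lambda)$. The counts are the class counts of Example 4.1 (9 samples with $Y=1$, 6 with $Y=-1$) and $\lambda=1$; the helper `sample_dirichlet`, the seed and the sample size are assumptions made purely for illustration.

```python
import random

def sample_dirichlet(alphas, rng):
    """Draw one Dirichlet(alphas) sample by normalising Gamma(alpha, 1) variates."""
    gammas = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(gammas)
    return [g / total for g in gammas]

counts = [9, 6]   # n_k: samples with Y = 1 and Y = -1 in Example 4.1
lam = 1           # symmetric prior parameter, alpha_k = lambda
N, K = sum(counts), len(counts)

# Posterior parameters are alpha_k + n_k.
posterior = [lam + n_k for n_k in counts]

rng = random.Random(0)  # fixed seed so the run is repeatable
num_samples = 20000
draws = [sample_dirichlet(posterior, rng) for _ in range(num_samples)]
empirical_mean = [sum(d[k] for d in draws) / num_samples for k in range(K)]
closed_form = [(n_k + lam) / (N + K * lam) for n_k in counts]

print(empirical_mean)  # Monte Carlo estimate of E(theta_k)
print(closed_form)     # (n_k + lambda) / (N + K * lambda), here 10/17 and 7/17
```

The two lists should agree to roughly two decimal places; the remaining gap is Monte Carlo noise and shrinks as `num_samples` grows.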

Formula 4.10

$$P_{\lambda}\left(X^{(j)}=a_{jl} \mid Y=c_{k}\right)=\frac{\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{jl}, y_{i}=c_{k}\right)+\lambda}{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)+S_{j} \lambda}$$

where $S_{j}$ is the number of distinct values of the $j$-th feature.

The proof is similar; only the parameters change slightly. Fix a class $c_{k}$ and a feature $j$, and write $n=\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)$ and $m_{l}=\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{jl}, y_{i}=c_{k}\right)$, so that $\sum_{l=1}^{S_{j}} m_{l}=n$. Let $P\left(X^{(j)}=a_{jl} \mid Y=c_{k}\right)=\theta_{l}$ for $l=1,\ldots,S_{j}$, and assume that $(\theta_{1},\ldots,\theta_{S_{j}})$ follows a Dirichlet prior with parameters $(\alpha_{1},\ldots,\alpha_{S_{j}})$, that is,

$$f\left(\theta_{1}, \ldots, \theta_{S_{j}} \mid \alpha_{1}, \ldots, \alpha_{S_{j}}\right)=\frac{1}{B\left(\alpha_{1}, \ldots, \alpha_{S_{j}}\right)} \prod_{l=1}^{S_{j}} \theta_{l}^{\alpha_{l}-1}$$

Likewise, the likelihood of the $n$ samples with $Y=c_{k}$ (written $n$ for short) is

$$P\left(n \mid \theta_{1}, \ldots, \theta_{S_{j}}\right)=\theta_{1}^{m_{1}} \theta_{2}^{m_{2}} \cdots \theta_{S_{j}}^{m_{S_{j}}}=\prod_{l=1}^{S_{j}} \theta_{l}^{m_{l}}$$

$$P\left(\theta_{1}, \ldots, \theta_{S_{j}} \mid n\right) \propto P\left(n \mid \theta_{1}, \ldots, \theta_{S_{j}}\right) P\left(\theta_{1}, \ldots, \theta_{S_{j}}\right) \propto \prod_{l=1}^{S_{j}} \theta_{l}^{m_{l}} \prod_{l=1}^{S_{j}} \theta_{l}^{\alpha_{l}-1}=\prod_{l=1}^{S_{j}} \theta_{l}^{\alpha_{l}+m_{l}-1}$$

so the posterior $P\left(\theta_{1}, \ldots, \theta_{S_{j}} \mid n\right)$ is again a Dirichlet distribution, with parameters $(\alpha_{1}+m_{1},\ldots,\alpha_{S_{j}}+m_{S_{j}})$.

Take $P_{\lambda}\left(X^{(j)}=a_{jl} \mid Y=c_{k}\right)$ to be the posterior mean of $\theta_{l}$: $E(\theta_{l})=\frac{m_{l}+\alpha_{l}}{n+\sum_{s=1}^{S_{j}} \alpha_{s}}$. If the prior is the symmetric Dirichlet distribution with parameter $\lambda$, that is, $\alpha_{1}=\alpha_{2}=\cdots=\alpha_{S_{j}}=\lambda$, then $E(\theta_{l})=\frac{\sum_{i=1}^{N} I\left(x_{i}^{(j)}=a_{jl}, y_{i}=c_{k}\right)+\lambda}{\sum_{i=1}^{N} I\left(y_{i}=c_{k}\right)+S_{j} \lambda}$, which is formula (4.10).

Q.E.D.
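As a worked example with the Example 4.1 training data, take the second feature (three possible values $S$, $M$, $L$, so $S_{2}=3$), the class $Y=-1$ (6 samples) and $\lambda=1$. The joint counts 3, 2 and 1 used below were tallied by hand from that training set.

```latex
\begin{aligned}
P_{\lambda}\left(X^{(2)}=S \mid Y=-1\right) &= \frac{3+1}{6+3\cdot 1} = \frac{4}{9},\\
P_{\lambda}\left(X^{(2)}=M \mid Y=-1\right) &= \frac{2+1}{6+3\cdot 1} = \frac{3}{9},\\
P_{\lambda}\left(X^{(2)}=L \mid Y=-1\right) &= \frac{1+1}{6+3\cdot 1} = \frac{2}{9}.
\end{aligned}
```

The three estimates still sum to 1. Compared with the maximum likelihood values $3/6$, $2/6$ and $1/6$, each one is pulled toward the uniform value $1/3$, and none could be zero even if a count were zero.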

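References

极大似然估计与贝叶斯估计 (Maximum Likelihood Estimation and Bayesian Estimation; highly recommended, the author explains it in great detail)

狄利克雷分布与贝叶斯分布 (The Dirichlet Distribution and the Bayesian Distribution)

第4章习题 (Chapter 4 Exercises)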