NLP (4): Naive Bayes Principles and a sklearn Implementation of Text Classification

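Contents

1. Principles of Naive Bayes

2. Naive Bayes text classification with sklearn

2.1 First, the Naive Bayes handwritten digit recognition example on sklearn's built-in datasets

2.2 Naive Bayes text classification with sklearn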

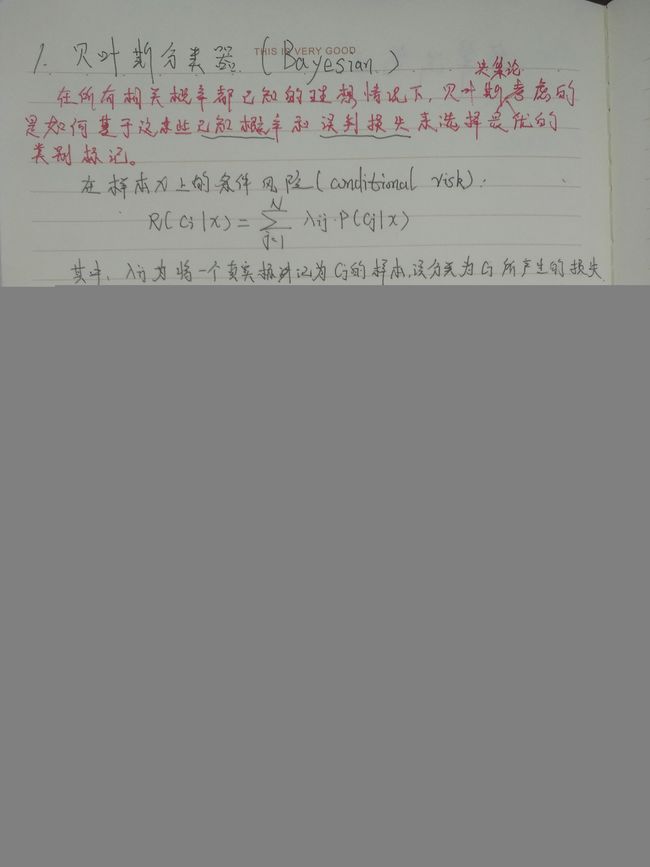

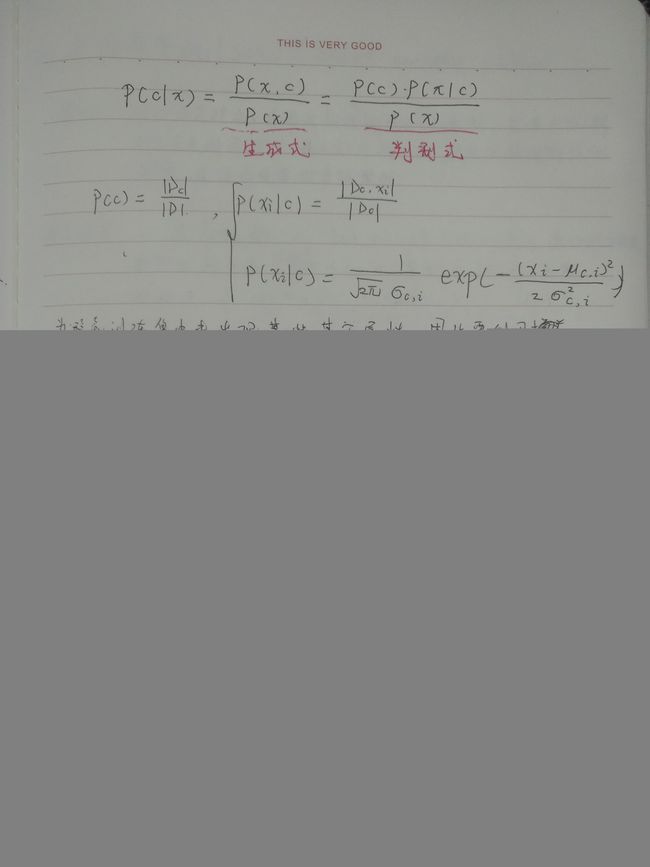

1. Principles of Naive Bayes

Here are my own study notes on Naive Bayes (reference book: the "Watermelon Book", i.e. Zhou Zhihua's *Machine Learning*):
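The decision rule these notes build up to is $\hat{c} = \arg\max_c \left[\log P(c) + \sum_i \log P(w_i \mid c)\right]$: the conditional independence assumption turns the class-conditional likelihood into a product over words, which is computed as a sum of logs to avoid underflow. Below is a minimal from-scratch multinomial sketch with Laplace smoothing (the "Laplacian correction"). It is not the author's code; the function names `train_nb`/`predict_nb` and the toy spam/ham data are hypothetical, for illustration only.

```python
import math
from collections import Counter


def train_nb(docs, labels, alpha=1.0):
    """Fit a multinomial Naive Bayes model on tokenized documents (Laplace smoothing with alpha)."""
    classes = sorted(set(labels))
    vocab = {w for doc in docs for w in doc}
    log_prior = {}
    log_likelihood = {}
    for c in classes:
        class_docs = [doc for doc, y in zip(docs, labels) if y == c]
        # Prior P(c): fraction of training documents that belong to class c
        log_prior[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for doc in class_docs for w in doc)
        total = sum(counts.values())
        # Smoothed P(w|c) = (count(w, c) + alpha) / (total words in c + alpha * |V|)
        log_likelihood[c] = {
            w: math.log((counts[w] + alpha) / (total + alpha * len(vocab)))
            for w in vocab
        }
    return classes, log_prior, log_likelihood


def predict_nb(model, doc):
    classes, log_prior, log_likelihood = model
    # Sum log-probabilities instead of multiplying probabilities;
    # words never seen in training are simply ignored
    scores = {
        c: log_prior[c] + sum(log_likelihood[c][w] for w in doc if w in log_likelihood[c])
        for c in classes
    }
    return max(scores, key=scores.get)


docs = [["cheap", "pills", "buy"], ["meeting", "tomorrow"],
        ["buy", "cheap", "now"], ["project", "meeting", "notes"]]
labels = ["spam", "ham", "spam", "ham"]
model = train_nb(docs, labels)
print(predict_nb(model, ["cheap", "buy"]))      # spam
print(predict_nb(model, ["meeting", "notes"]))  # ham
```

Without smoothing, a single word that never occurred in a class during training would drive that class's probability to zero; adding `alpha` to every count prevents this.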

2. Naive Bayes text classification with sklearn

2.1 First, the Naive Bayes handwritten digit recognition example on sklearn's built-in datasets

```python
# @Author  : wpf
# @File    : 12.py
# @Software: PyCharm Community Edition

from sklearn import datasets, model_selection, naive_bayes
import matplotlib.pyplot as plt


# Visualize the first 20 images of the handwritten Digit Dataset
def show_digits():
    digits = datasets.load_digits()
    fig = plt.figure()
    for i in range(20):
        ax = fig.add_subplot(4, 5, i + 1)
        # gray_r is the reversed grayscale map: ink is dark on a light background
        ax.imshow(digits.images[i], cmap=plt.cm.gray_r, interpolation='nearest')
    plt.show()


show_digits()
```

```python
# Load the Digit dataset and split it 75% / 25% into training and test sets
def load_data():
    digits = datasets.load_digits()
    return model_selection.train_test_split(digits.data, digits.target,
                                            test_size=0.25, random_state=0)


def test_GaussianNB(*data):
    X_train, X_test, y_train, y_test = data
    cls = naive_bayes.GaussianNB()
    cls.fit(X_train, y_train)
    print('GaussianNB Classifier')
    print('Training Score: %.2f' % cls.score(X_train, y_train))
    print('Test Score: %.2f' % cls.score(X_test, y_test))
    print(X_test)             # raw test features: 8x8 = 64 pixel values per sample
    pre = cls.predict(X_test)
    print(pre)                # predicted digit labels


X_train, X_test, y_train, y_test = load_data()
# The function prints its own results and returns None,
# so call it directly instead of wrapping it in print()
test_GaussianNB(X_train, X_test, y_train, y_test)
```

```python
def test_MultinomialNB(*data):
    X_train, X_test, y_train, y_test = data
    # MultinomialNB treats each feature as a count; the pixel intensities (0-16)
    # are non-negative integers, so they are valid input
    cls = naive_bayes.MultinomialNB()
    cls.fit(X_train, y_train)
    print('MultinomialNB Classifier')
    print('Training Score: %.2f' % cls.score(X_train, y_train))
    print('Test Score: %.2f' % cls.score(X_test, y_test))


X_train, X_test, y_train, y_test = load_data()
test_MultinomialNB(X_train, X_test, y_train, y_test)
```
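MultinomialNB's smoothed estimate can be checked the same way. A sketch, assuming sklearn's documented formula $P(x_j \mid c) = \frac{N_{cj} + \alpha}{N_c + \alpha n}$, where $N_{cj}$ is the summed intensity of pixel $j$ over class $c$ and $n$ is the number of features; the variable names are illustrative only.

```python
import numpy as np
from sklearn import datasets, model_selection, naive_bayes

X, y = datasets.load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = model_selection.train_test_split(
    X, y, test_size=0.25, random_state=0)
alpha = 1.0  # Laplace smoothing (MultinomialNB's default)
clf = naive_bayes.MultinomialNB(alpha=alpha).fit(X_train, y_train)

# N_cj: each pixel's intensity summed over the training samples of class c
counts = np.array([X_train[y_train == c].sum(axis=0) for c in clf.classes_])
# Row sums of (counts + alpha) equal N_c + alpha * n_features
manual_log_prob = np.log((counts + alpha) / (counts + alpha).sum(axis=1, keepdims=True))
print(np.allclose(manual_log_prob, clf.feature_log_prob_))
```

This is the same Laplace-smoothed estimate used for word counts in text classification, with pixel intensities playing the role of word frequencies.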

```python
def test_BernoulliNB(*data):
    X_train, X_test, y_train, y_test = data
    # BernoulliNB binarizes the features (binarize=0.0 by default),
    # so every nonzero pixel becomes 1 and only "ink / no ink" is modeled
    cls = naive_bayes.BernoulliNB()
    cls.fit(X_train, y_train)
    print('BernoulliNB Classifier')
    print('Training Score: %.2f' % cls.score(X_train, y_train))
    print('Test Score: %.2f' % cls.score(X_test, y_test))


X_train, X_test, y_train, y_test = load_data()
test_BernoulliNB(X_train, X_test, y_train, y_test)
```

2.2 Naive Bayes text classification with sklearn

```python
import os
import random
import jieba  # Chinese word segmentation
# import nltk  # for English text
from sklearn.naive_bayes import MultinomialNB
import matplotlib.pyplot as plt


# Text processing, i.e. the sample-generation step
def text_processing(folder_path, test_size=0.2):
    folder_list = os.listdir(folder_path)
    data_list = []
    class_list = []

    # Walk through the sub-folders (one sub-folder per class)
    for folder in folder_list:
        new_folder_path = os.path.join(folder_path, folder)
        files = os.listdir(new_folder_path)

        # Read the files
        j = 1
        for file in files:
            if j > 100:  # to avoid running out of memory, take at most 100 files per class; comment this out to use all of them
                break
            with open(os.path.join(new_folder_path, file), 'rb') as fp:
                text = fp.read()  # raw bytes; jieba decodes bytes input itself

            # Yes, the ubiquitous jieba Chinese word segmenter
            # jieba.enable_parallel(4)  # parallel segmentation; the argument is the number of processes (not supported on Windows)
            word_cut = jieba.cut(text, cut_all=False)  # accurate mode; returns an iterable generator
            word_list = list(word_cut)  # convert the generator to a list; each word is a unicode str
            # jieba.disable_parallel()  # turn parallel segmentation off again

            data_list.append(word_list)  # a list of lists: each inner list holds the segmented words of one document
            class_list.append(folder)    # class label, i.e. the name of the folder
            j += 1

    # Crude split into training and test sets
    data_class_list = list(zip(data_list, class_list))  # pair each document with its label: a list of (words, label) tuples
    random.shuffle(data_class_list)
    index = int(len(data_class_list) * test_size) + 1  # the first ~20% becomes the test set; e.g. with 90 samples, index is 19
    train_list = data_class_list[index:]  # items 19-89, 71 in total, form the training set
    test_list = data_class_list[:index]   # items 0-18, 19 in total, form the test set
    train_data_list, train_class_list = zip(*train_list)  # unzip the (words, label) tuples back into two sequences
    test_data_list, test_class_list = zip(*test_list)

    # sklearn's own helper can do the split as well
    # (sklearn.cross_validation was removed in 0.20; use sklearn.model_selection):
    # train_data_list, test_data_list, train_class_list, test_class_list = sklearn.model_selection.train_test_split(data_list, class_list, test_size=test_size)

    # Count word frequencies into all_words_dict
    all_words_dict = {}
    for word_list in train_data_list:
        for word in word_list:  # iterate over every word in the training set
            if word in all_words_dict:
                all_words_dict[word] += 1
            else:
                all_words_dict[word] = 1

    # Sort in descending order of frequency; the key function picks the count
    all_words_tuple_list = sorted(all_words_dict.items(), key=lambda f: f[1], reverse=True)  # sorted() accepts any iterable and returns a list
    all_words_list = list(zip(*all_words_tuple_list))[0]  # unzip into (words, counts) and keep only the words: all training-set words sorted by frequency

    return all_words_list, train_data_list, test_data_list, train_class_list, test_class_list
```
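As the comment inside `text_processing` notes, sklearn can do the split, and `collections.Counter` can replace the hand-written frequency dictionary. A minimal sketch of both steps; the toy `data_list`/`class_list` below are hypothetical stand-ins for the segmented documents and folder labels, not the author's corpus.

```python
from collections import Counter
from sklearn.model_selection import train_test_split

# Hypothetical toy data standing in for segmented documents and their folder labels
data_list = [["股票", "上涨"], ["球队", "比赛"], ["股票", "基金"], ["比赛", "进球"], ["基金", "上涨"]]
class_list = ["finance", "sports", "finance", "sports", "finance"]

# Shuffles and splits in one call; random_state makes the split reproducible
train_data_list, test_data_list, train_class_list, test_class_list = train_test_split(
    data_list, class_list, test_size=0.2, random_state=0)

# Counter replaces the manual dict counting; most_common() yields (word, count)
# pairs sorted by count in descending order
word_counts = Counter(word for word_list in train_data_list for word in word_list)
all_words_list = [word for word, _ in word_counts.most_common()]
print(all_words_list)
```

`train_test_split` rounds the test-set size up (`ceil(test_size * n)`), so it can differ by one from the `int(...) + 1` rule above. On a real corpus, passing `stratify=class_list` keeps the class proportions equal in both sets.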

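```python
# Crude word-set loader (used for the stopword file)
def make_word_set(words_file):
    words_set = set()  # a set holds no duplicate elements
    with open(words_file, 'rb') as fp:
        for line in fp.readlines():  # read line by line; each line is one stopword
            word = line.strip().decode("utf-8")  # strip leading/trailing whitespace, then decode to str
            if len(word) > 0 and word not in words_set:  # skip empty lines and duplicates
                words_set.add(word)
    return words_set
```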
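The same loader can be written more compactly by opening the file in text mode, so Python does the decoding. A sketch; `load_word_set` is a hypothetical name, and it assumes a UTF-8 file with one word per line, like the one `make_word_set` expects.

```python
import os
import tempfile


def load_word_set(words_file, encoding="utf-8"):
    """Hypothetical text-mode variant of make_word_set: one word per line, blank lines skipped."""
    with open(words_file, encoding=encoding) as fp:
        # A set comprehension already drops duplicates, so no membership check is needed
        return {line.strip() for line in fp if line.strip()}


# Usage with a throwaway stopword file
with tempfile.NamedTemporaryFile("w", encoding="utf-8", suffix=".txt", delete=False) as tmp:
    tmp.write("的\n了\n\n的\n  是  \n")
    path = tmp.name
stopwords = load_word_set(path)
os.remove(path)
print(sorted(stopwords))
```

If the stopword file was saved with a byte-order mark, `encoding="utf-8-sig"` strips it; otherwise the first word would silently carry an invisible `\ufeff` prefix.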

# Select feature words
# Remove stopwords and the most frequent (overly common) words; the remaining feature words form the bag of words
def words_dict(all_words_list, deleteN, stopwords_set=set()):
    feature_words = []
    n = 1
    for t in range(deleteN, len(all_words_list), 1):  # skip the deleteN most frequent words, which are likely stopwords or overly common words
        if n > 1000:  # feature_words has 1000 dimensions
            break
        if not all_words_list[t].isdigit() and all_words_list[t] not in stopwords_set and 1