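Author: RayChiu_Labloy

Copyright notice: copyright belongs to the author. For commercial reprints, contact the author for authorization; for non-commercial reprints, credit the source.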

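Contents

Installing OpenVINO on Windows 10

Converting a pretrained YOLO model to IR format with OpenVINO

Setting permanent environment variables for OpenVINO

Add to the Windows system environment variables

Add to Path

Configuring OpenVINO and OpenCV in VS2017

Include directories

Library directories

Additional directories

Inference

The main.cpp script

Result:

Problems encountered when running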

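Installing OpenVINO on Windows 10

Reference: win10 安装OpenVINO并在tensorflow环境下测试官方demo (installing OpenVINO on Windows 10 and testing the official demo in a TensorFlow environment), RayChiu757374816's blog on CSDN

Converting a pretrained YOLO model to IR format with OpenVINO

Reference: win10环境yolov5s预训练模型转onnx然后用openvino生成推理加速模型并测试推理 (on Windows 10, converting the pretrained yolov5s model to ONNX, generating an accelerated inference model with OpenVINO, and testing inference), RayChiu757374816's blog on CSDN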

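Setting permanent environment variables for OpenVINO

The official batch script for setting the environment variables did not seem to take effect for me, probably because it only sets them for the console session it runs in. Without permanent environment variables, the various dynamic libraries (DLLs) cannot be found at run time.

Add to the Windows system environment variables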

| Variable name | Variable value |
| --- | --- |
| INTEL_OPENVINO_DIR | C:\Program Files (x86)\IntelSWTools\openvino_2020.4.287 |
| INTEL_CVSDK_DIR | %INTEL_OPENVINO_DIR% |
| HDDL_INSTALL_DIR | %INTEL_OPENVINO_DIR%\deployment_tools\inference_engine\external\hddl |
| InferenceEngine_DIR | %INTEL_OPENVINO_DIR%\deployment_tools\inference_engine\share |
| OpenCV_DIR | %INTEL_OPENVINO_DIR%\opencv\cmake |
| NGRAPH_DIR | %INTEL_OPENVINO_DIR%\deployment_tools\ngraph\cmake |
| PYTHONPATH | %INTEL_OPENVINO_DIR%\python\python3.8 |

Note: adjust these values to match your own OpenVINO installation path and Python version.
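To confirm that the variables in the table are really visible to a newly started process, a small check can help. The following is a minimal sketch using only the C++ standard library; the helper names missing_env_vars and openvino_env_var_names are hypothetical and are not part of OpenVINO.

```cpp
// MSVC flags std::getenv with C4996 unless this macro is defined first.
#ifndef _CRT_SECURE_NO_WARNINGS
#define _CRT_SECURE_NO_WARNINGS
#endif

#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical helper: returns the names that are unset or empty in the
// environment of the current process.
std::vector<std::string> missing_env_vars(const std::vector<std::string>& names) {
    std::vector<std::string> missing;
    for (const auto& name : names) {
        const char* value = std::getenv(name.c_str());
        if (value == nullptr || *value == '\0') {
            missing.push_back(name);
        }
    }
    return missing;
}

// Hypothetical helper: the variable names listed in the table above.
std::vector<std::string> openvino_env_var_names() {
    return {"INTEL_OPENVINO_DIR", "INTEL_CVSDK_DIR",  "HDDL_INSTALL_DIR",
            "InferenceEngine_DIR", "OpenCV_DIR",      "NGRAPH_DIR",
            "PYTHONPATH"};
}
```

Calling missing_env_vars(openvino_env_var_names()) from a program and printing the result lists the variables that still need to be set. A process that was already running when the variables were changed keeps its old environment, so reopen the console or Visual Studio before checking.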

Add to Path

%HDDL_INSTALL_DIR%\bin

%INTEL_OPENVINO_DIR%\opencv\bin

%INTEL_OPENVINO_DIR%\deployment_tools\ngraph\lib

%INTEL_OPENVINO_DIR%\deployment_tools\inference_engine\external\tbb\bin

%INTEL_OPENVINO_DIR%\deployment_tools\inference_engine\bin\intel64\Release

%INTEL_OPENVINO_DIR%\deployment_tools\inference_engine\bin\intel64\Debug

%INTEL_OPENVINO_DIR%\python\python3.8\openvino\inference_engine
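If DLLs are still not found after editing Path, it can be useful to inspect the expanded Path value that a process actually sees. The sketch below is illustrative only: split_path and path_has_suffix are hypothetical helper names, and it assumes the Windows conventions of ';' as the separator and case-insensitive directory names.

```cpp
#include <algorithm>
#include <cctype>
#include <string>
#include <vector>

// Splits a Windows-style Path value on ';' and drops empty entries.
std::vector<std::string> split_path(const std::string& path_value) {
    std::vector<std::string> entries;
    std::string::size_type start = 0;
    while (start <= path_value.size()) {
        std::string::size_type end = path_value.find(';', start);
        if (end == std::string::npos) end = path_value.size();
        if (end > start) entries.push_back(path_value.substr(start, end - start));
        start = end + 1;
    }
    return entries;
}

// Lower-cases ASCII letters so comparisons ignore case.
static std::string to_lower(std::string text) {
    std::transform(text.begin(), text.end(), text.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return text;
}

// Returns true if any Path entry ends with `suffix`, ignoring case and a
// trailing backslash on the entry, e.g. suffix "\\opencv\\bin".
bool path_has_suffix(const std::string& path_value, const std::string& suffix) {
    const std::string wanted = to_lower(suffix);
    for (const auto& raw : split_path(path_value)) {
        std::string entry = to_lower(raw);
        while (!entry.empty() && entry.back() == '\\') entry.pop_back();
        if (entry.size() >= wanted.size() &&
            entry.compare(entry.size() - wanted.size(), wanted.size(), wanted) == 0) {
            return true;
        }
    }
    return false;
}
```

Passing the process's Path value (for example, the result of std::getenv("PATH")) together with a suffix such as "\\deployment_tools\\ngraph\\lib" indicates whether that directory made it into the search path. Matching on a suffix is a deliberate simplification, since the entries appear with %INTEL_OPENVINO_DIR% already expanded.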

Configuring OpenVINO and OpenCV in VS2017

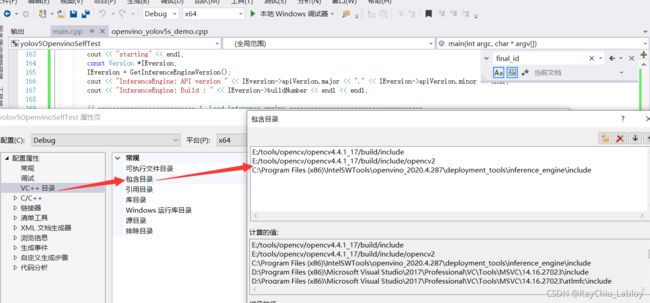

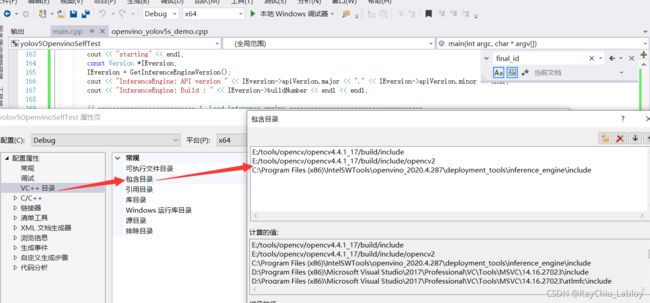

Include directories

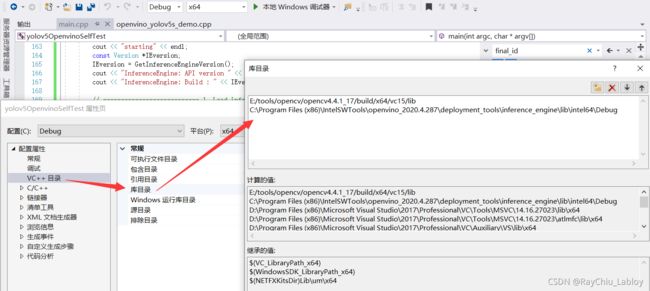

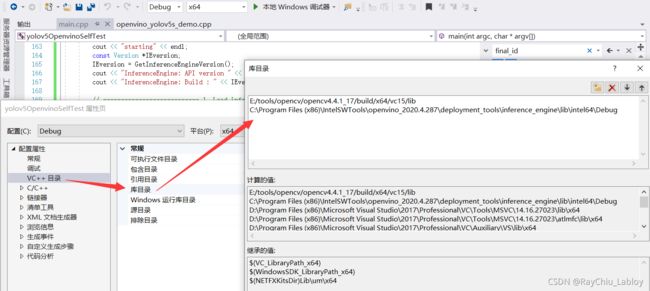

Library directories

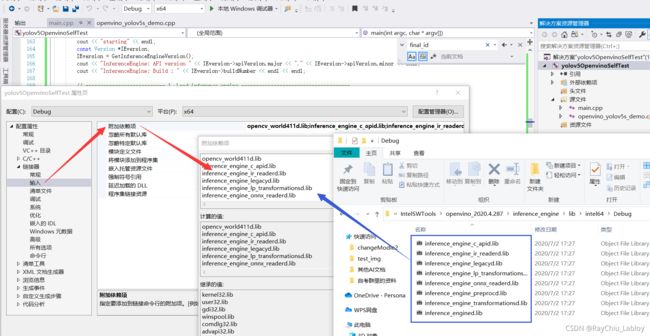

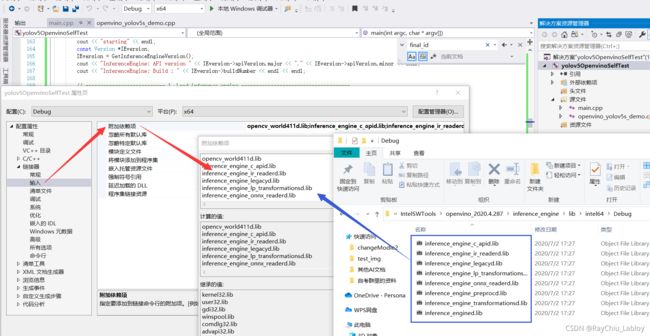

Additional directories

Inference

The main.cpp script

You only need to adapt three lines, 155-157, to your own setup.

#include

#include

#include

#include

Result:

Problems encountered when running

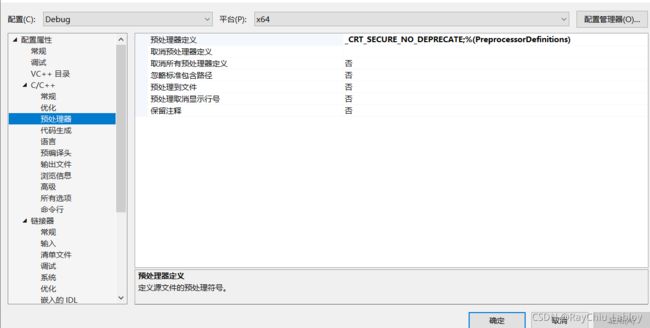

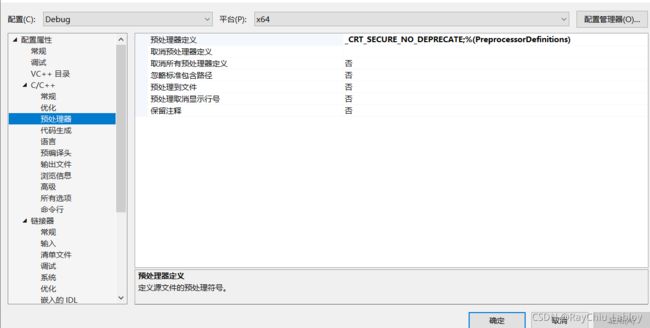

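The build fails with: Error C4996 'wcstombs': This function or variable may be unsafe. Consider using wcstombs_s instead. To disable deprecation, use _CRT_SECURE_NO_WARNINGS. See online help for details. (project: yolov5OpenvinoSelfTest, file: c:/program files (x86)/intelswtools/openvino_2020.4.287/deployment_tools/inference_engine/include/ie_unicode.hpp, line 38)

This error means a preprocessor macro is missing: MSVC treats wcstombs as deprecated unless a suppression macro is defined, so adding the macro resolves it.

Add it here: Project -> Properties -> Configuration Properties -> C/C++ -> Preprocessor -> Preprocessor Definitions, and add _CRT_SECURE_NO_DEPRECATE.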
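As an alternative to the project setting, the suppression macro can be defined at the top of a source file, before any header is included. The following is a minimal sketch under that assumption; the function narrow is a hypothetical example that calls std::wcstombs, the same CRT function named in the error, and it is not taken from the original main.cpp.

```cpp
// These must appear before the first CRT or OpenVINO header is included.
#ifndef _CRT_SECURE_NO_WARNINGS
#define _CRT_SECURE_NO_WARNINGS
#endif
#ifndef _CRT_SECURE_NO_DEPRECATE
#define _CRT_SECURE_NO_DEPRECATE
#endif

#include <cstdlib>
#include <string>

// Hypothetical example: converts a wide string to a narrow string using
// std::wcstombs and the current C locale.
std::string narrow(const std::wstring& wide) {
    // With a null destination, MSVC and POSIX return the required byte count.
    const std::size_t needed = std::wcstombs(nullptr, wide.c_str(), 0);
    if (needed == static_cast<std::size_t>(-1)) {
        return std::string();  // a character could not be converted
    }
    std::string out(needed, '\0');
    std::wcstombs(&out[0], wide.c_str(), needed);
    return out;
}
```

A definition inside a source file only affects that translation unit, whereas the Preprocessor Definitions setting applies to every file in the project, which is why the project-level setting is usually the simpler choice.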

Reference: GitHub - fb029ed/yolov5_cpp_openvino: a C++ implementation of YOLOv5 deployment with OpenVINO
