Federated Learning: An Overview and Recent Work

Note: I will gradually move my posts to Zhihu. If you're interested, you can follow me there: 霁月

I. Current mainstream federated learning scenarios

| | Cross-silo FL | Cross-device FL |
|---|---|---|
| Setting | Training a model on siloed data. Clients are from different organizations (e.g. medical or financial) or geo-distributed datacenters. | The clients are a very large number of mobile or IoT devices. (In healthcare, this might one day mean a personal "family doctor", haha.) |
| Data distribution | Data is generated locally and remains decentralized. | Same as cross-silo. |
| Distribution scale | Typically 2 to 100 clients. | Massively parallel, up to 10^10 clients. |
| Addressability | Each client has an identity or name that allows the system to access it specifically. | Clients cannot be indexed directly (i.e., no use of client identifiers). The system does not know which devices' data has already been used, so the same phone may take part in training more than once. |
| Data partition axis | Partition is fixed. Could be horizontal or vertical. | Fixed partitioning by example (horizontal). |

II. Mainstream categories of federated learning

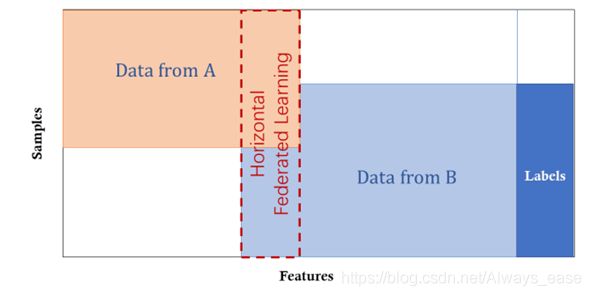

1. Horizontal Federated Learning (HFL)

Where to use? Datasets share the same feature space but differ in samples.

What's the result? The number of training samples increases.

Example: 2 regional hospitals

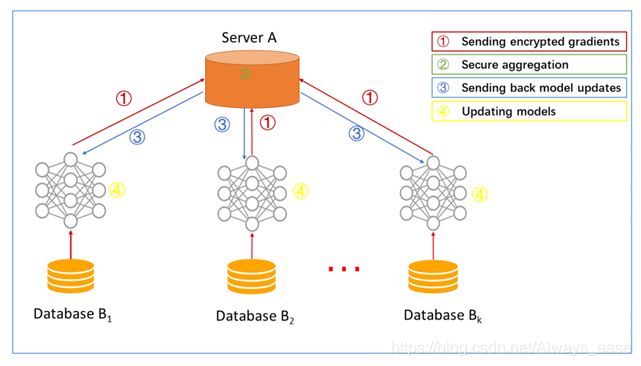

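Training process:

1) The server sends a request.
2) Each client iterates over its local data (local training).
3) Each client sends its parameters to the server.
4) Once it has collected all parameters, the server averages them, updates the model parameters, and sends the current parameters back to every client.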
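The four steps above can be sketched as repeated rounds of federated averaging. This is a minimal NumPy sketch under assumptions of my own: a toy linear-regression model, a few steps of plain gradient descent as the local training in step 2, and an average weighted by each client's sample count (as in FedAvg; with equal-sized clients it reduces to the plain average described above). The helper names `local_train` and `fedavg_round` and the data are hypothetical.

```python
import numpy as np


def local_train(w, X, y, lr=0.1, epochs=5):
    """Step 2: a client runs a few epochs of gradient descent on its own data.

    Toy model (assumption): linear regression with mean-squared-error loss.
    """
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w


def fedavg_round(w_global, clients):
    """One round: broadcast, local training, upload, weighted averaging."""
    # Steps 1-3: every client starts from the global weights and returns its update.
    local_ws = [local_train(w_global, X, y) for X, y in clients]
    # Step 4: the server averages, weighting each client by its number of samples.
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    return np.average(local_ws, axis=0, weights=sizes)


rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
# Horizontal FL: both "hospitals" have the same 2 features but different samples.
clients = []
for n in (50, 80):
    X = rng.normal(size=(n, 2))
    clients.append((X, X @ true_w + 0.01 * rng.normal(size=n)))

w = np.zeros(2)
for _ in range(20):
    w = fedavg_round(w, clients)
print(np.round(w, 2))
```

Weighting by sample count keeps a client with few samples from pulling the global model as hard as a client with many.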

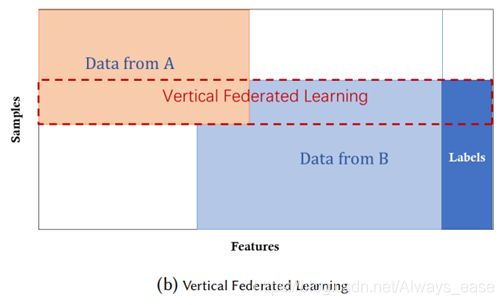

2. Vertical Federated Learning (VFL)

Where to use? Datasets share the same sample ID but differ in feature space.

What’s the result? Helps to establish a "common wealth"

Example: 2 different companies in the same city.

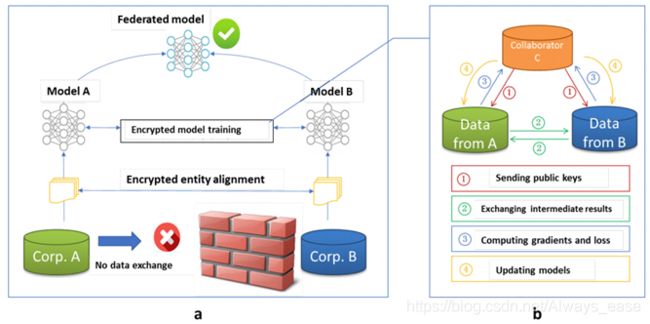

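Training process:

1) The server sends a key to each client.
2) Each client trains locally.
3) Each client encrypts its features.
4) The other clients send their encrypted features to B.
5) B computes the loss and gradient.
6) B encrypts them and sends them to the server.
7) The server updates the parameters and sends them back to all clients.

The encryption process may introduce noise.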
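A minimal sketch of the vertical setting, assuming a logistic-regression model split by features: party A holds some features, and party B holds the other features plus the labels. The keys, the encryption, and the server are deliberately omitted; the comments mark the messages that a real system would encrypt. Note that what A sends here is an intermediate result (its partial logit) rather than its raw features, which is a common design for vertical logistic regression. All names and data are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(1)
n = 200
# Same sample IDs (rows are aligned), different feature spaces.
X_A = rng.normal(size=(n, 3))  # party A: features only
X_B = rng.normal(size=(n, 2))  # party B: features + labels
true_logit = X_A @ np.array([1.5, -2.0, 0.5]) + X_B @ np.array([1.0, -1.0])
y = (true_logit > 0).astype(float)

w_A, w_B = np.zeros(3), np.zeros(2)
lr = 0.5
for _ in range(300):
    # Each party computes its partial logit locally; raw features never leave.
    u_A = X_A @ w_A            # A -> B (encrypted in a real system)
    u_B = X_B @ w_B
    # B, the label holder, computes the residual and the loss.
    p = sigmoid(u_A + u_B)
    residual = p - y           # B -> A (encrypted or masked in a real system)
    loss = -np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
    # Each party computes the gradient for its own weights and updates them.
    w_A -= lr * X_A.T @ residual / n
    w_B -= lr * X_B.T @ residual / n

acc = np.mean(((X_A @ w_A + X_B @ w_B) > 0) == (y == 1))
print(f"loss={loss:.3f} acc={acc:.2f}")
```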

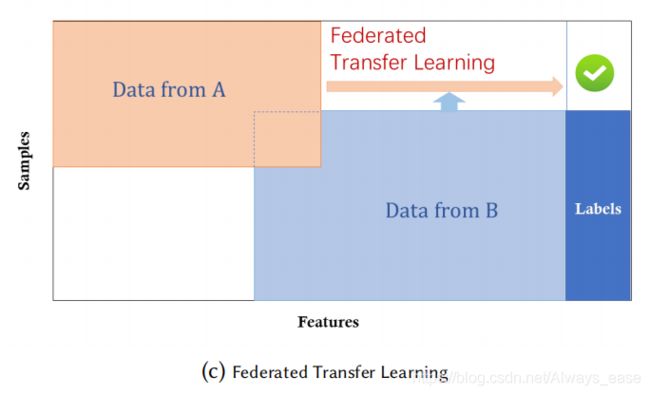

3. Federated Transfer Learning (FTL)

Where to use? Datasets differ both in samples and in feature space, with only a small overlap.

What’s the result? Helps to establish a "common wealth"

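Example: 2 different companies in different countries (e.g. a bank in China and an e-commerce company in the US), whose users and features overlap only slightly.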

Training process: similar to vertical federated learning; only the intermediate results exchanged differ, depending on whether they involve common samples or not.

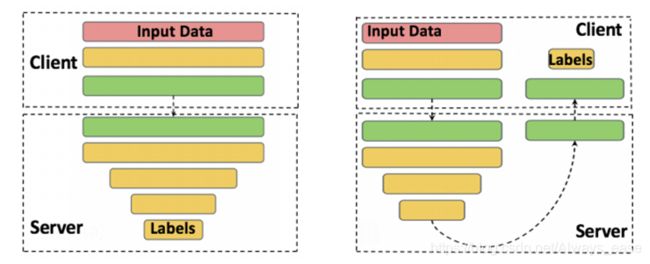

III. From Federated Learning (FL) to Split Learning (SL)

FL: the whole model is shared with every client. Disadvantage: the shared model is exposed to attacks.

The shift: split the execution of the model layer by layer between the clients and the server.

SL (split learning). Advantage: the client has no access to the server-side model, and vice versa.

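IV. Split Learning

Advantages: 1) Reduces client-side computation compared with FL. 2) Provides a certain level of privacy.

Weaknesses: 1) Training is relay-based: the server trains with one client and then moves on to the next client sequentially, which is slow.

2) Clients' resources sit idle, because only one client works with the server at any given time.

Training process:
1) The server sends a request.
2) The current client obtains the client-side weights from the previous client and runs the forward pass through the first few layers.
3) It sends the output of its last layer (the smashed data) to the server.
4) The server runs the forward pass through the remaining layers and backpropagates; it sends the gradient at the cut layer back to the client and can update its own parameters at this point.
5) The client receives the gradient, finishes backpropagation, updates its own parameters, and passes its weights on to the next client.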
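The relay above can be sketched with a two-layer network cut after the first layer. Assumptions: NumPy only, a toy regression task, a ReLU hidden layer on the client side and a linear output layer on the server side, and plain gradient descent. The data, sizes, and names are hypothetical; this is an illustration of the data flow, not the SplitFed authors' code.

```python
import numpy as np

rng = np.random.default_rng(2)
d, h, lr = 4, 8, 0.01
true_w = rng.normal(size=d)

# Hypothetical toy data: 3 clients with different samples of the same regression task.
clients = []
for n in (40, 60, 50):
    X = rng.normal(size=(n, d))
    clients.append((X, X @ true_w))

W_client = rng.normal(scale=0.5, size=(d, h))  # client-side layer, handed from client to client
W_server = rng.normal(scale=0.5, size=(h, 1))  # server-side layer, never leaves the server


def total_loss():
    """Sum of per-client mean squared errors of the full (client + server) model."""
    total = 0.0
    for X, y in clients:
        pred = np.maximum(X @ W_client, 0.0) @ W_server
        total += float(np.mean((pred.ravel() - y) ** 2))
    return total


before = total_loss()
for epoch in range(200):
    for X, y in clients:                      # relay: one client at a time
        # Client: forward pass up to the cut layer.
        z = X @ W_client
        a = np.maximum(z, 0.0)                # smashed data, sent to the server
        # Server: forward pass through its layers, then backpropagation.
        pred = a @ W_server
        d_pred = 2.0 * (pred - y[:, None]) / len(y)
        d_a = d_pred @ W_server.T             # cut-layer gradient, sent back to the client
        W_server -= lr * (a.T @ d_pred)       # server updates its own part
        # Client: finish backpropagation and update its part.
        W_client -= lr * (X.T @ (d_a * (z > 0)))
        # The updated W_client is then handed to the next client.
after = total_loss()
print(f"total loss before={before:.3f} after={after:.3f}")
```

The cut-layer gradient `d_a` is computed before the server updates `W_server`, so both halves are updated from the same forward pass.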

Federated Learning (FL) vs. Split Learning (SL)

| | FL | SL |
|---|---|---|
| Network | Whole model on each client | Split between client and server |
| Client training | Parallel | Step by step (one client at a time) |

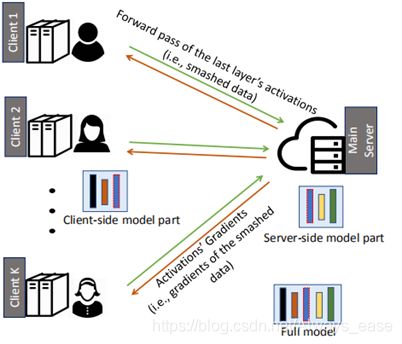

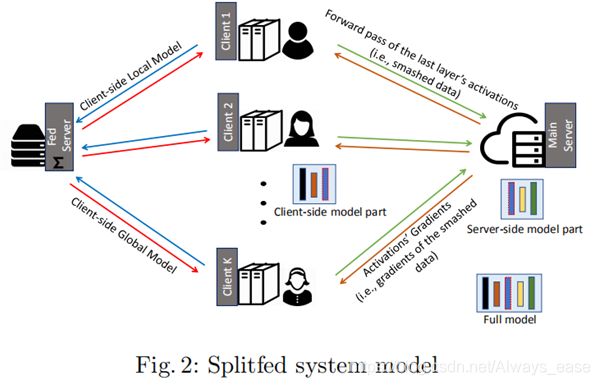

V. Here comes SL + FL: SplitFed

The goal is to combine FL and SL. Let's go: eliminate the weaknesses and merge the strengths!

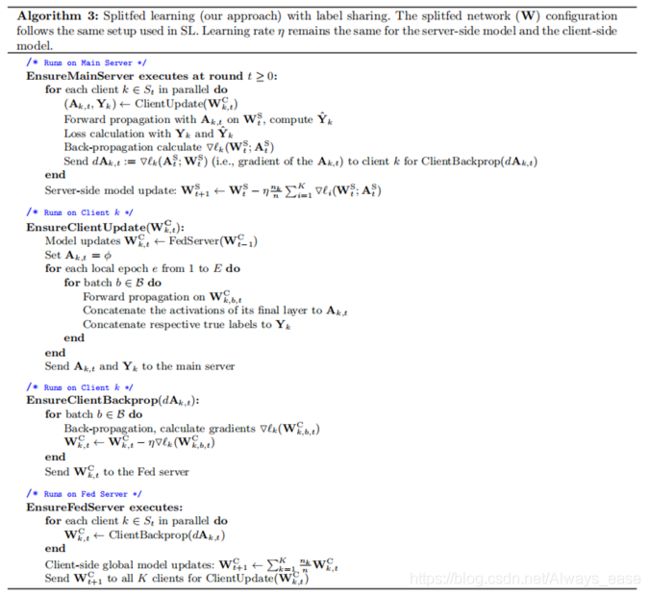

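Training process:

1.1 Each client is initialized with the client-side model from the FedServer.
1.2 Each client trains its part of the model independently, in parallel with the others.
1.3 Each client sends its smashed data (the cut-layer activations) to the MainServer.
1.4 The MainServer runs the forward pass through the server-side layers and backpropagates.
1.5 The MainServer sends the cut-layer gradients back to the clients; each client finishes backpropagation and sends its updated weights to the FedServer.

2.1 After receiving the updates from all clients, the MainServer updates the server-side weights by averaging them.

3.1 After all clients have finished backpropagation, the FedServer updates the client-side weights by averaging them (FedAvg).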
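A sketch of one SplitFedV1-style round under toy assumptions of my own: a two-layer network cut after the ReLU layer, synthetic regression data, and one local step per round. The clients are looped over sequentially only for simplicity; since every client starts from the same global weights, the loop could run in parallel. Weighting both averages by client data size is an assumption, and all names and data are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
d, h, lr = 4, 8, 0.01
true_w = rng.normal(size=d)

# Hypothetical toy data: 3 clients with different samples of the same regression task.
clients = []
for n in (40, 60, 50):
    X = rng.normal(size=(n, d))
    clients.append((X, X @ true_w))
sizes = np.array([len(y) for _, y in clients], dtype=float)

W_client = rng.normal(scale=0.5, size=(d, h))  # global client-side model, kept by the FedServer
W_server = rng.normal(scale=0.5, size=(h, 1))  # global server-side model, kept by the MainServer


def total_loss(Wc, Ws):
    """Sum of per-client mean squared errors of the full model."""
    return sum(
        float(np.mean(((np.maximum(X @ Wc, 0.0) @ Ws).ravel() - y) ** 2))
        for X, y in clients
    )


before = total_loss(W_client, W_server)
for rnd in range(200):
    client_ws, server_ws = [], []
    for X, y in clients:                       # conceptually in parallel (1.1-1.2)
        Wc, Ws = W_client.copy(), W_server.copy()
        z = X @ Wc
        a = np.maximum(z, 0.0)                 # smashed data -> MainServer (1.3)
        d_pred = 2.0 * (a @ Ws - y[:, None]) / len(y)
        d_a = d_pred @ Ws.T                    # cut-layer gradient -> client (1.5)
        server_ws.append(Ws - lr * (a.T @ d_pred))            # MainServer step (1.4)
        client_ws.append(Wc - lr * (X.T @ (d_a * (z > 0))))   # client backprop (1.5)
    # 2.1: the MainServer averages the server-side models, weighted by client data size.
    W_server = np.average(server_ws, axis=0, weights=sizes)
    # 3.1: the FedServer averages the client-side models (FedAvg).
    W_client = np.average(client_ws, axis=0, weights=sizes)
after = total_loss(W_client, W_server)
print(f"total loss before={before:.3f} after={after:.3f}")
```

Compared with the relay in plain SL, no client waits for another: the only synchronization points are the two averaging steps at the end of each round.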

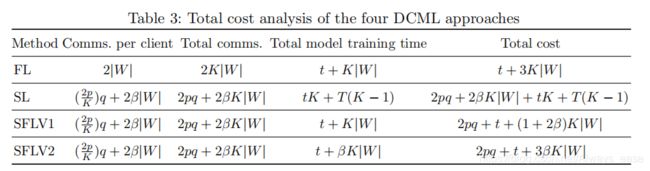

Cost analysis

- K: the number of clients
- p: the total data size
- q: the size of the smashed layer
- |W|: the total number of model parameters
- β: the fraction of model parameters (weights) held by a client in SL
- t: the model training time (for any architecture) for one global epoch
- T: the wait time (delay) for one client to receive updates in SL during one global epoch

In the table: column 2 is the overall cost per client; column 3 is the model training cost; column 2 + column 3 = column 4.

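Row 1 (FL):

- 2|W|: downloading and uploading the model.

- 2K|W|: the same, for K clients. Personally, I am a bit puzzled here: if all clients run in parallel, shouldn't it not be multiplied by K? (One possible reading: this term counts the total volume transmitted by all K clients, which adds up even when they run in parallel; parallelism saves time, not communication.)

- K|W|: the delay of the weighted averaging.

Row 2 (SL):

- 2pq: data uploaded to and downloaded from the server (the smashed data and its gradients); 2βK|W|: client-side weights sent to/received from the next client.

- Why SL has no K|W| term: the server does not need to average anything, because the weights are already updated during each client's turn of training.

Row 3 (SFLV1):

- 2pq: same as above; 2βK|W|: same as above.

- K|W| = βK|W| + (1 - β)K|W|: averaging of the client-side part at the FedServer plus averaging of the server-side part at the MainServer.

Row 4 (SFLV2):

- Multiple clients start at the same time and are treated like a batch.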
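The communication terms listed above (2K|W| in total for FL; 2pq + 2βK|W| for SL and SFLV1) can be compared directly. Requiring 2pq + 2βK|W| < 2K|W| and dividing by 2 gives pq < (1 - β)K|W|: splitting pays off when there are many clients and a large model relative to the amount of smashed data. This sketch models communication volume only (training time and the K|W| averaging delay are ignored), and the sizes are made up for illustration.

```python
def fl_total_comm(K, W):
    """FL: every client downloads and uploads the whole model (2|W|), so 2K|W| in total."""
    return 2 * K * W


def sl_total_comm(K, W, p, q, beta):
    """SL / SFLV1: 2pq for the smashed data and its gradients over all p samples,
    plus 2*beta*K*|W| for moving the client-side share of the model."""
    return 2 * p * q + 2 * beta * K * W


def sl_is_cheaper(K, W, p, q, beta):
    """2pq + 2*beta*K*|W| < 2K|W|  <=>  pq < (1 - beta)*K*|W|."""
    return p * q < (1 - beta) * K * W


# Hypothetical sizes: |W| = 10M parameters, p = 50k samples,
# q = 4096 values per sample at the cut layer, 10% of the model on the client.
W, p, q, beta = 10_000_000, 50_000, 4096, 0.1
for K in (10, 100):
    print(K, fl_total_comm(K, W), sl_total_comm(K, W, p, q, beta),
          sl_is_cheaper(K, W, p, q, beta))
```

With these made-up sizes, SL transmits less than FL at K = 100 but more at K = 10, because the 2pq term does not grow with K while FL's 2K|W| does.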

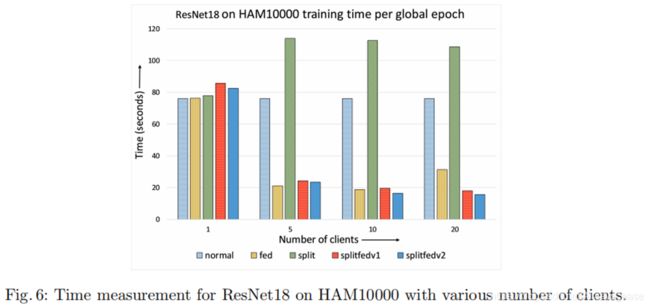

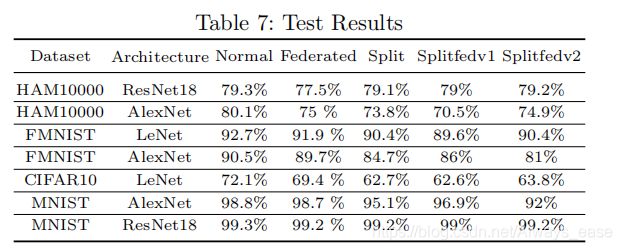

Experiments

- Overall, in learning performance: normal (centralized) learning > FL > SL.

- FL performs better in most cases because the model is not split while it is trained across multiple clients.

- VGG16 on CIFAR10 did not converge under SL, possibly due to other factors such as missing hyperparameter tuning or changes in the data distribution.

VI. Challenges - Incentive

1. Incentives can take the form of monetary payouts or final models with different levels of performance.

2. Clients may worry that contributing their data to train federated learning models will benefit their competitors, who contribute less but still receive the same final model.

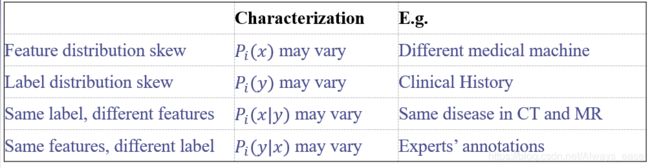

VII. Challenges - Non-IID Data

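Problem statement:

Let $\mathcal{P}_i(x, y)$ denote the $i$-th client's local data distribution; client $i$ draws its examples as $(x, y) \sim \mathcal{P}_i(x, y)$. The data are non-IID when these distributions differ across clients, i.e. $\mathcal{P}_i \neq \mathcal{P}_j$ for some clients $i \neq j$.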
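One common way to simulate non-IID clients in experiments (an assumption here, not something the text above prescribes) is label skew drawn from a Dirichlet distribution: for each class, a Dirichlet(α) sample decides each client's share of that class, so each client ends up with a different label distribution $\mathcal{P}_i(y)$. Smaller α gives more skewed clients. The dataset, `dirichlet_partition`, and the parameter values are hypothetical.

```python
import numpy as np


def dirichlet_partition(labels, num_clients, alpha, rng):
    """Split sample indices across clients with label skew.

    For each class, a Dirichlet(alpha) draw decides what share of that class
    each client receives. Small alpha -> highly skewed (non-IID) clients.
    """
    client_idx = [[] for _ in range(num_clients)]
    for c in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == c))
        shares = rng.dirichlet(alpha * np.ones(num_clients))
        cuts = (np.cumsum(shares)[:-1] * len(idx)).astype(int)
        for k, part in enumerate(np.split(idx, cuts)):
            client_idx[k].extend(part.tolist())
    return client_idx


rng = np.random.default_rng(0)
labels = rng.integers(0, 10, size=5000)  # hypothetical 10-class dataset
parts = dirichlet_partition(labels, num_clients=5, alpha=0.3, rng=rng)
for k, idx in enumerate(parts):
    # Per-client label histogram: with small alpha, a few classes dominate each client.
    print(k, np.bincount(labels[idx], minlength=10))
```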

Possible solutions:

1. Augment the data to make it more similar across clients.

2. Create a small dataset that is shared globally with all clients.
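A minimal sketch of solution 2, assuming the server holds a small class-balanced dataset and each client appends a random fraction of it to its local data before training. Only labels are shown to keep it short; the dataset sizes, the 0.5 fraction, and `mix_in_shared` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
num_classes = 10

# Hypothetical non-IID client: it only holds classes 0 and 1.
client_y = rng.integers(0, 2, size=500)
# Hypothetical small, class-balanced dataset shared with every client.
shared_y = np.repeat(np.arange(num_classes), 20)  # 200 examples, 20 per class


def mix_in_shared(local_y, shared_y, fraction, rng):
    """Append a random subset (the given fraction) of the shared data to a client's data."""
    k = int(fraction * len(shared_y))
    pick = rng.choice(len(shared_y), size=k, replace=False)
    return np.concatenate([local_y, shared_y[pick]])


mixed_y = mix_in_shared(client_y, shared_y, fraction=0.5, rng=rng)
print("before:", np.bincount(client_y, minlength=num_classes))
print("after: ", np.bincount(mixed_y, minlength=num_classes))
```

The trade-off is that the shared data must be acceptable to distribute to every client, and a larger fraction brings local distributions closer together at the cost of more local storage and computation.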

VIII. Well-known platforms

| Platform | Framework | Source | Functions |
|---|---|---|---|
| TFF | TF | https://github.com/tensorflow/federated | Horizontal FL |
| FedML | PyTorch | https://github.com/FedML-AI/FedML | 1) Supports on-device training for IoT and mobile devices, distributed computing, and single-machine simulation. 2) Supports various algorithms: decentralized learning, vertical FL, and split learning. |
| PySyft | PyTorch | | Decouples private data from model training, using federated learning, differential privacy, and multi-party computation (MPC). |
| Leaf | | | Provides methodologies, pipelines, and evaluation techniques. |
| Sherpa.ai | | | |
| PyVertical | PyTorch | | Vertical FL |
| Flower | | https://flower.dev/ | Horizontal FL |

References

- Kairouz, Peter, et al. "Advances and Open Problems in Federated Learning."

- Yang, Qiang, et al. "Federated Machine Learning: Concept and Applications."

- Thapa, Chandra, et al. "SplitFed: When Federated Learning Meets Split Learning."