数据增强系列(4)如何进行实例分割的增强

1.概述

在本笔记中,我们将使用流行的增强库,Albumentations来执行类似于coco数据集的多个注释的图像增强。

你可以简单地安装它:pip install albumentations

2.代码实现

我们考虑一个图分割图像有若干对象,每个对象有一个标签,一个边界框(bbox),和一个二进制掩码。

2.1导入相关库

# Let's import needed libraries

import torch

import albumentations as A

import cv2

import json

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import os

from skimage.color import label2rgb

2.2 设置相关参数

设置通用常量。提示:在实践中,您可以创建 config.py 文件,将它们添加到那里,然后执行

from configs import ...

IMG_SIZE = 512

MAX_SIZE = 1120

IMAGE_ID = '0461935888bad18244f11e67e7d3b417.jpg'

设置数据路径。为方便起见,我在此处上传了图像和注释

input_path = 'examples-for-augs'

image_filepath = os.path.join(input_path, IMAGE_ID)

annot_filepath = os.path.join(input_path, 'annotations.csv')

2.3 数据可视化

让我们加载带有注释的图像

# load image

image = cv2.imread(image_filepath, cv2.IMREAD_UNCHANGED)

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) # I am still wondering why OpenCV changed to BGR by default

height, width, channels = image.shape

print(height, width) # 1685 1123

# load annotations

anns = pd.read_csv(annot_filepath)

print(anns.head())

# Unnamed: 0 ImageId ... Width ClassId

# 0 5842 0461935888bad18244f11e67e7d3b417.jpg ... 1123 23

# 1 5843 0461935888bad18244f11e67e7d3b417.jpg ... 1123 23

# 2 5844 0461935888bad18244f11e67e7d3b417.jpg ... 1123 6

# 3 5845 0461935888bad18244f11e67e7d3b417.jpg ... 1123 32

# 4 5846 0461935888bad18244f11e67e7d3b417.jpg ... 1123 42

def img_masks_targets(df, img_id):

"""Select all targets of one image as an array of numbers

Select all masks of one image as an array of RLE strings

Output:

masks and targets for an image

"""

# select all targets of one image as an array of numbers

targets = df[df['ImageId'] == img_id]["ClassId"].values

# select all masks of one image as an array is strings

rles = df[df['ImageId'] == img_id]["EncodedPixels"].values

return targets, rles

labels, rles = img_masks_targets(anns, img_id=IMAGE_ID)

num_instances = len(rles)

print(f'Number of instances on the image {len(rles)}') # Number of instances on the image 10

图像的掩码通常采用游程编码格式(RLE)。我们需要将它们转换为二进制掩码来绘制和/或增强。这里有一些RLE编码和解码的助手

# :https://www.kaggle.com/paulorzp/run-length-encode-and-decode

def rle_decode(rle_str: str, mask_shape: tuple, mask_dtype=np.uint8):

"""Helper to decode RLE string to a binary mask"""

s = rle_str.split()

starts, lengths = [np.asarray(x, dtype=int) for x in (s[0:][::2], s[1:][::2])]

starts -= 1

ends = starts + lengths

mask = np.zeros(np.prod(mask_shape), dtype=mask_dtype)

for lo, hi in zip(starts, ends):

mask[lo:hi] = 1

return mask.reshape(mask_shape[::-1]).T

def rle_encode(mask):

"""Helper to encode binary mask to RLE string"""

pixels = mask.T.flatten()

pixels = np.concatenate([[0], pixels, [0]])

rle = np.where(pixels[1:] != pixels[:-1])[0] + 1

rle[1::2] -= rle[::2]

return rle.tolist()

masks = np.zeros((len(rles), height, width), dtype=np.uint8)

for num in range(num_instances):

masks[num, :, :] = rle_decode(rles[num], (height, width), np.uint8)

print(masks.shape) # (10, 1685, 1123)

定义一些辅助函数来可视化数据和标签

def visualize_bbox(img, bbox, color=(255, 255, 0), thickness=2):

"""Helper to add bboxes to images

Args:

img : image as open-cv numpy array

bbox : boxes as a list or numpy array in pascal_voc fromat [x_min, y_min, x_max, y_max]

color=(255, 255, 0): boxes color

thickness=2 : boxes line thickness

"""

x_min, y_min, x_max, y_max = bbox

x_min, y_min, x_max, y_max = int(x_min), int(y_min), int(x_max), int(y_max)

cv2.rectangle(img, (x_min, y_min), (x_max, y_max), color=color, thickness=thickness)

return img

def plot_image_anns(image, masks, boxes=None):

"""Helper to plot images with bboxes and masks

Args:

image: image as open-cv numpy array, original and augmented

masks: setof binary masks, original and augmented

bbox : boxes as a list or numpy array, original and augmented

"""

# glue binary masks together

one_mask = np.zeros_like(masks[0])

for i, mask in enumerate(masks):

one_mask += (mask > 0).astype(np.uint8) * (

11 - i) # (11-i) so my inner artist is happy with the masks colors

if boxes is not None:

for box in boxes:

image = visualize_bbox(image, box)

# for binary masks we get one channel and need to convert to RGB for visualization

mask_rgb = label2rgb(one_mask, bg_label=0)

f, ax = plt.subplots(1, 2, figsize=(16, 16))

ax[0].imshow(image)

ax[0].set_title('Original image')

ax[1].imshow(mask_rgb, interpolation='nearest')

ax[1].set_title('Original mask')

f.tight_layout()

plt.show()

def plot_image_aug(image, image_aug, masks, aug_masks, boxes, aug_boxes):

"""Helper to plot images with bboxes and masks and their augmented versions

Args:

image, image_aug: image as open-cv numpy array, original and augmented

masks, aug_masks:setof binary masks, original and augmented

bbox, aug_boxes : boxes as a list or numpy array, original and augmented

"""

# glue masks together

one_mask = np.zeros_like(masks[0])

for i, mask in enumerate(masks):

one_mask += (mask > 0).astype(np.uint8) * (11 - i)

one_aug_mask = np.zeros_like(aug_masks[0])

for i, augmask in enumerate(aug_masks):

one_aug_mask += (augmask > 0).astype(np.uint8) * (11 - i)

for box in boxes:

image = visualize_bbox(image, box)

for augbox in aug_boxes:

image_aug = visualize_bbox(image_aug, augbox)

# for binary masks we get one channel and need to convert to RGB for visualization

mask_rgb = label2rgb(one_mask, bg_label=0)

mask_aug_rgb = label2rgb(one_aug_mask, bg_label=0)

f, ax = plt.subplots(2, 2, figsize=(16, 16))

ax[0, 0].imshow(image)

ax[0, 0].set_title('Original image')

ax[0, 1].imshow(image_aug)

ax[0, 1].set_title('Augmented image')

ax[1, 0].imshow(mask_rgb, interpolation='nearest')

ax[1, 0].set_title('Original mask')

ax[1, 1].imshow(mask_aug_rgb, interpolation='nearest')

ax[1, 1].set_title('Augmented mask')

f.tight_layout()

plt.show()

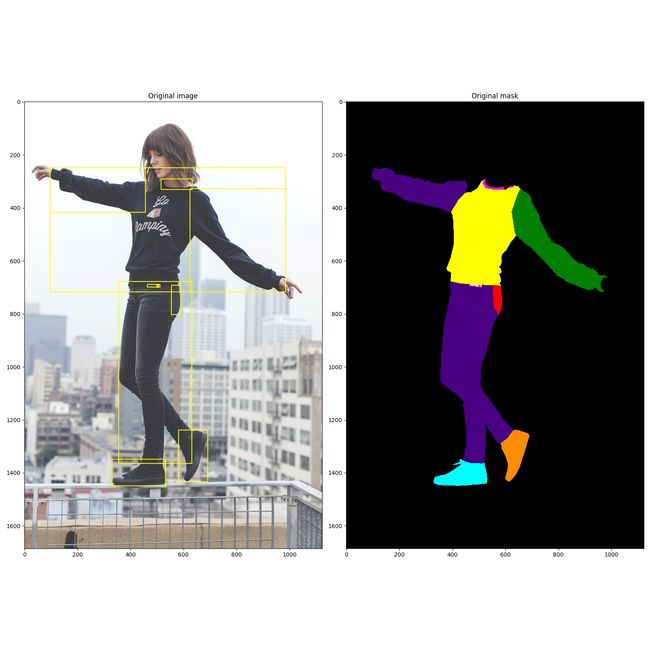

让我们看看我们的图像和相应的蒙版

plot_image_anns(image, masks)

如 Mask-R-CNN 的实例分割模型,您还需要边界框。 COCO 数据集提供了它们,但通常你只会得到掩码。没问题,我们将从掩码生成bbox。

def get_boxes_from_masks(masks):

""" Helper, gets bounding boxes from masks """

coco_boxes = []

for mask in masks:

pos = np.nonzero(mask)

xmin = np.min(pos[1])

xmax = np.max(pos[1])

ymin = np.min(pos[0])

ymax = np.max(pos[0])

coco_boxes.append([xmin, ymin, xmax, ymax])

coco_boxes = np.asarray(coco_boxes, dtype=np.float32)

return coco_boxes

img = image.copy()

boxes = get_boxes_from_masks(masks)

plot_image_anns(img, masks, boxes)

2.4 数据增强

现在让我们创建一个我们想要应用于图像和注释的变换列表。 增强的选择取决于您的任务。常见的包括:

- 卫星成像数据的 D4 对称群增强;

- 用于分类的不同亮度和颜色的裁剪,旋转和缩放;

- 基于相机的自动驾驶任务的天气条件模拟;

- X 射线胸部扫描的噪声、模糊和轻微旋转等。

在这里,我们将列出一些用于实验的列表。在实践中,最好创建 transforms.py 文件并将其全部放在那里。

请注意:A.Normalize函数——像ImageNet数据集训练中那样对图像进行归一化。建议在向模型发送数据之前进行此操作,特别是在ImageNet骨干上进行预先训练的情况下。

# 可视化目的,我在这里注释了它

D4_transforms = [A.Resize(height // 2, width // 2, interpolation=cv2.INTER_LINEAR, p=1),

# D4 Group augmentations

A.HorizontalFlip(p=1),

A.VerticalFlip(p=0.5),

A.RandomRotate90(p=0.5),

A.Transpose(p=0.5),

# A.Normalize()

]

geom_transforms = [A.ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.1, rotate_limit=15,

interpolation=cv2.INTER_LINEAR, border_mode=cv2.BORDER_CONSTANT, value=0,

mask_value=0, p=0.5),

# D4 Group augmentations

A.HorizontalFlip(p=0.5),

A.VerticalFlip(p=0.5),

A.RandomRotate90(p=0.5),

A.Transpose(p=0.5),

# crop and resize

A.RandomSizedCrop((MAX_SIZE - 100, MAX_SIZE), height // 2, width // 2, w2h_ratio=1.0,

interpolation=cv2.INTER_LINEAR, always_apply=False, p=0.5),

A.Resize(height // 2, width // 2, interpolation=cv2.INTER_LINEAR, p=1),

# A.Normalize(),

]

heavy_transforms = [A.RandomRotate90(),

A.Flip(),

A.Transpose(),

A.GaussNoise(),

A.ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.1, rotate_limit=45, p=0.7),

A.OneOf([

A.MotionBlur(p=0.2),

A.MedianBlur(blur_limit=3, p=0.1),

A.Blur(blur_limit=3, p=0.1),

], p=0.5),

A.OneOf([

A.Sharpen(),

A.Emboss(),

A.RandomBrightnessContrast(),

], p=0.5),

A.HueSaturationValue(p=0.3),

# A.Normalize(),

]

# 我们需要选择bbox格式,请参考这里的库文档:https://albumentations.readthedocs.io/en/latest/api/core.html#albumentations.core.composition.BboxParams

bbox_params = {'format': 'pascal_voc', 'min_area': 0, 'min_visibility': 0, 'label_fields': ['category_id']}

# 现在我们准备对图像、bbox 集和mask集应用增强。它需要 bboxes 作为列表,因此我们将其转换为列表格式。

boxes = list(boxes) # you need to send bounding boxes to a list

img = image.copy()

augs = A.Compose(heavy_transforms, bbox_params=bbox_params, p=1)

# 我在这里会报错,将augs.is_check_args = False即可

augmented = augs(image=img, masks=masks, bboxes=boxes, category_id=labels, force_apply=True)

aug_img = augmented['image']

aug_masks = augmented['masks']

aug_boxes = augmented['bboxes']

plot_image_aug(img, aug_img, masks, aug_masks, boxes, aug_boxes)

让我们测试一些其他的增强集

img = image.copy()

augs = A.Compose(geom_transforms, bbox_params=bbox_params, p=1)

augmented = augs(image=img, masks=masks, bboxes=boxes, category_id=labels)

aug_img = augmented['image']

aug_masks = augmented['masks']

aug_boxes = augmented['bboxes']

plot_image_aug(img, aug_img, masks, aug_masks, boxes, aug_boxes)

for i in range(2):

img = image.copy()

augs = A.Compose(heavy_transforms, bbox_params=bbox_params, p=1)

augmented = augs(image=img, masks=masks, bboxes=boxes, category_id=labels)

aug_img = augmented['image']

aug_masks = augmented['masks']

aug_boxes = augmented['bboxes']

plot_image_aug(img, aug_img, masks, aug_masks, boxes, aug_boxes)

最后,您可能希望在训练过程中增强您的图像。在这种情况下,您可能希望将它们包含在数据集中。在PyTorch上它是这样的:

class DatasetAugs(torch.utils.data.Dataset):

"""

My Dummy dataset for instance segmentation with augs

:param fold: integer, number of the fold

:param df: Dataframe with sample tokens

:param debug: if True, runs the debugging on few images

:param img_size: the desired image size to resize to

:param input_dir: directory with imputs and targets (and maps, optionally)

:param transforms: list of transformations

"""

def __init__(self, fold: int, df: pd.DataFrame,

debug: bool, img_size: int,

input_dir: str, transforms=None,

):

super(DatasetAugs, self).__init__() # inherit it from torch Dataset

self.fold = fold

self.df = df

self.debug = debug

self.img_size = img_size

self.input_dir = input_dir

self.transforms = transforms

self.classes = df.classes.unique()

if self.debug:

self.df = self.df.head(16)

print('Debug mode, samples: ', self.df.samples)

self.samples = list(self.df.samples)

def __len__(self):

return len(self.samples)

def __getitem__(self, idx):

sample = self.samples[idx]

input_filepath = '{}/{}'.format(self.input_dir, sample)

# load image

im = cv2.imread(input_filepath, cv2.IMREAD_UNCHANGED)

im = cv2.cvtColor(im, cv2.COLOR_BGR2RGB)

# get annotations

labels, rles = img_masks_targets(self.df, img_id=sample)

masks = np.zeros((len(rles), height, width), dtype=np.uint8)

for num in range(num_instances):

masks[num, :, :] = rle_decode(rles[num], (height, width), np.uint8)

# get boxes from masks

boxes = get_boxes_from_masks(masks)

boxes = list(boxes)

# augment image and targets

if self.transforms is not None:

bbox_params = {'format': 'pascal_voc', 'min_area': 5, 'min_visibility': 0.5,

'label_fields': ['category_id']}

augs = A.Compose(self.transforms, bbox_params=bbox_params, p=1)

augmented = augs(image=im, masks=masks, bboxes=boxes, category_id=labels)

im = augmented['image']

masks = augmented['masks']

boxes = augmented['bboxes']

# targets to tensor

boxes = torch.as_tensor(boxes, dtype=torch.float32)

labels = torch.as_tensor(labels, dtype=torch.int64)

masks = torch.as_tensor(masks, dtype=torch.uint8)

image_id = torch.tensor([idx])

area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])

iscrowd = torch.zeros((len(boxes),), dtype=torch.int64)

target = {}

target["boxes"] = boxes

target["labels"] = labels

target["masks"] = masks

target["image_id"] = image_id

target["area"] = area

target["iscrowd"] = iscrowd

im = torch.from_numpy(im.transpose(2, 0, 1)) # channels first

return im, target

链接:https://pan.baidu.com/s/1KtXBe-pneHvzJ3KiHbcosA

提取码:123a