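Reproducing the DeepMind paper Playing Atari with Deep Reinforcement Learning

I tried to reproduce the DQN algorithm from this paper using Python and TensorFlow, training it on the Atari game Breakout. At its best, the trained DQN knocks out 10 bricks. Because of hardware limits and a busy day job, I have not optimized it any further. This post gives the source code and some possible directions for improvement; discussion is welcome.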

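Atari environment setup

The Atari environments have been removed from gym, so they have to be downloaded and configured separately. You will run into some errors along the way, all caused by missing packages or DLLs. Searching for the error message on Baidu usually turns up a fix; if Chinese-language sites have nothing, search with Bing and look on GitHub or Stack Overflow.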

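The DQN algorithm

DQN grew out of Q-learning, whose core is a Q-table. In each state the agent picks the action with the largest Q-value, and the Q-table is then updated according to the feedback from the environment.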
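As a rough illustration of the table-based scheme just described, here is a minimal sketch of a single tabular Q-learning update. The function names, the learning rate `alpha`, and the toy table size are assumptions made for this example and do not come from the code in this post.

```python
import numpy as np

def q_learning_update(q_table, s, a, r, s_next, done, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the TD error."""
    # Terminal transitions have no future value to bootstrap from.
    target = r if done else r + gamma * np.max(q_table[s_next])
    td_error = target - q_table[s, a]
    q_table[s, a] += alpha * td_error
    return td_error

def greedy_action(q_table, s):
    """Pick the action with the largest Q-value in state s."""
    return int(np.argmax(q_table[s]))

# Toy table: 3 states, 2 actions (hypothetical sizes).
q_table = np.zeros((3, 2))
q_learning_update(q_table, s=0, a=1, r=1.0, s_next=1, done=False)
```

After this single update only the entry for state 0, action 1 has moved, so `greedy_action(q_table, 0)` now prefers action 1.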

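If the environment is too complex, a real two-dimensional table containing every state becomes enormous, and looking up Q-values is no longer practical. Neural networks have strong fitting capacity, so a neural network is used to approximate the Q-table, mapping states to Q-values. Further, when the state is an image it is processed by a CNN, and that CNN is the "Deep" in DQN.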

For Atari Breakout, a state is a three-dimensional tensor made of 4 game frames stacked together. Each frame has a resolution of 84*84, so a state is an 84*84*4 tensor.
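One way to picture how four frames form a state is the sketch below. It follows the convention used in story_memory in the full listing (newest frame in channel 0, older frames shifted toward channel 3); the helper name `push_frame` is hypothetical.

```python
import numpy as np

def push_frame(state, frame):
    """Return a new (84, 84, 4) state with `frame` in channel 0
    and the three most recent older frames shifted back by one channel."""
    new_state = np.empty_like(state)
    new_state[:, :, 1:] = state[:, :, :-1]  # drop the oldest frame
    new_state[:, :, 0] = frame              # newest frame goes first
    return new_state

state = np.zeros((84, 84, 4))
state = push_frame(state, np.ones((84, 84)))
state = push_frame(state, np.full((84, 84), 2.0))
```

Stacking consecutive frames is what lets the network infer the ball's direction and speed, which a single frame cannot show.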

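stateInput = tf.placeholder("float", [None, 84, 84, 4])  # None: the minibatch holds a variable number of states

The CNN has three convolutional layers. The first layer has 32 kernels of size 8*8*4; for how to read the kernel dimensions, see my other blog post on that topic.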

W_conv1 = self.weight_variable([8, 8, 4, 32])

The kernels of the second and third layers are as follows:

W_conv1 = self.weight_variable([8, 8, 4, 32])
b_conv1 = self.bias_variable([32])
W_conv2 = self.weight_variable([4, 4, 32, 64])
b_conv2 = self.bias_variable([64])
W_conv3 = self.weight_variable([3, 3, 64, 64])
b_conv3 = self.bias_variable([64])
W_fc1 = self.weight_variable([3136, 512])
b_fc1 = self.bias_variable([512])

The convolution output is reshaped and fed into a fully connected layer with ReLU activation. The output layer uses no activation function and outputs the Q-values directly.

h_conv3_flat = tf.reshape(h_conv3, [-1, 3136])
h_fc1 = tf.nn.relu(tf.matmul(h_conv3_flat, W_fc1) + b_fc1)
# Q Value layer
QValue = tf.matmul(h_fc1, W_fc2) + b_fc2

The network's loss function is built as follows:

q_eval = self.sess.run(self.QValue, feed_dict={self.stateInput: state_batch})

q_target = q_eval.copy()

q_next = self.sess.run(self.QValueT, feed_dict={self.stateInputT: nextState_batch})

for i in range(0, self.batchsize):

if terminal[i]:

q_target[i, action_batch[i]] = reward_batch[i]

else:

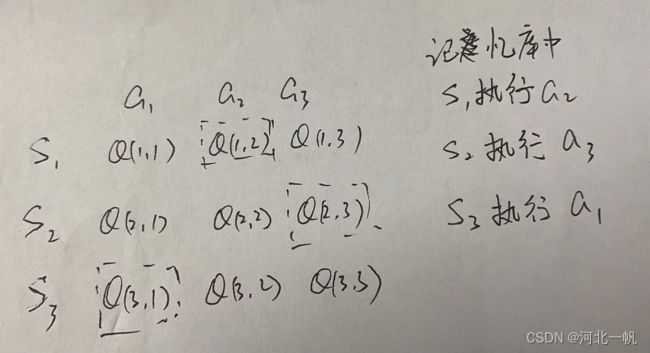

q_target[i, action_batch[i]] = reward_batch[i] + self.gamma * np.max(q_next[i])q_eval是minibatch中记忆(s, a, r, s_)s所在Q表的那一行的所有动作的Q值,q_next是state next对应的所有动作的Q值,根据执行了哪一个动作,对应更新q_next的中的Q值,跟新后的q_next即为q_target,训练神经网络,使q_eval趋近q_target。这里有一个trick,构建损失函数的,不能只选执行的动作,没执行的动作对应的Q值也要考虑进来。考虑下图这样的情况

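q_target and q_eval are both 3*3 tensors. q_target - q_eval is 0 at the positions of the actions that were not executed, such as Q(1, 1) and Q(1, 3), so the Q-values of those actions are not affected during training.

If q_target and q_eval were 3*1 tensors instead, the Q-values of the actions that were not executed would also change during training, which hurts convergence.

Also note that in a terminal state the target is just r, with no max(q_next) term.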
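To make the zero-error property concrete, here is a small NumPy sketch that builds the targets for a 3*3 example and inspects q_target - q_eval. It is a vectorized rewrite of the loop shown earlier, not the code used for training; the function name `build_targets` and all numbers are made up for illustration.

```python
import numpy as np

def build_targets(q_eval, q_next, actions, rewards, terminal, gamma=0.9):
    """Copy q_eval and overwrite only the executed action's entry in each row."""
    q_target = q_eval.copy()
    rows = np.arange(len(actions))
    # Terminal transitions keep only the reward, with no bootstrap term.
    bootstrap = np.where(terminal, 0.0, gamma * q_next.max(axis=1))
    q_target[rows, actions] = rewards + bootstrap
    return q_target

q_eval = np.array([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]])
q_next = np.array([[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]])
actions = np.array([1, 0, 2])            # executed action per sample
rewards = np.array([1.0, 0.0, -10.0])
terminal = np.array([False, False, True])

q_target = build_targets(q_eval, q_next, actions, rewards, terminal)
error = q_target - q_eval                # nonzero only at the executed actions
```

Each row of `error` has exactly one nonzero entry, at the column of the executed action, so the squared-error loss contributes no gradient through the other outputs.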

The complete code consists of two files, dqn.py and main.py.

# dqn.py
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()
# import tensorflow as tf
import cv2
import numpy as np
import random


class DeepQNetwork:
    def __init__(self, action_dim=3):
        self.action_dim = action_dim
        self.start_learn = False
        self.ret_index = 0
        self.initial_index = 0
        self.memory = []
        self.memory_size = 10000
        self.batchsize = 32
        # init Q network
        (self.stateInput, self.QValue,
         self.W_conv1, self.b_conv1, self.W_conv2, self.b_conv2,
         self.W_conv3, self.b_conv3, self.W_fc1, self.b_fc1,
         self.W_fc2, self.b_fc2) = self.createQNetwork()
        # init Target Q Network
        (self.stateInputT, self.QValueT,
         self.W_conv1T, self.b_conv1T, self.W_conv2T, self.b_conv2T,
         self.W_conv3T, self.b_conv3T, self.W_fc1T, self.b_fc1T,
         self.W_fc2T, self.b_fc2T) = self.createQNetwork()
        # ops that copy the Q network's weights into the target network
        self.copyTargetQNetworkOperation = [
            self.W_conv1T.assign(self.W_conv1),
            self.b_conv1T.assign(self.b_conv1),
            self.W_conv2T.assign(self.W_conv2),
            self.b_conv2T.assign(self.b_conv2),
            self.W_conv3T.assign(self.W_conv3),
            self.b_conv3T.assign(self.b_conv3),
            self.W_fc1T.assign(self.W_fc1),
            self.b_fc1T.assign(self.b_fc1),
            self.W_fc2T.assign(self.W_fc2),
            self.b_fc2T.assign(self.b_fc2),
        ]
        self.createTrainingMethod()
        self.sess = tf.Session()
        self.sess.run(tf.global_variables_initializer())
        self.gamma = 0.9
        self.timeStep = 0

    def set_initial_state(self, observation):
        # resize to 84 wide by 110 high, convert to grayscale,
        # keep the bottom 84 rows, then binarize
        observation = cv2.cvtColor(cv2.resize(observation, (84, 110)), cv2.COLOR_BGR2GRAY)
        observation = observation[26:110, :]
        ret, observation = cv2.threshold(observation, 1, 255, cv2.THRESH_BINARY)
        ret = np.reshape(observation, (84, 84))
        self.s = np.zeros((84, 84, 4))
        self.s_ = np.zeros((84, 84, 4))
        self.s[:, :, 0] = ret

    def story_memory(self, observation, a=0, r=0, terminal=False):
        # convert to binary
        observation = cv2.cvtColor(cv2.resize(observation, (84, 110)), cv2.COLOR_BGR2GRAY)
        observation = observation[26:110, :]
        ret, observation = cv2.threshold(observation, 1, 255, cv2.THRESH_BINARY)
        ret = np.reshape(observation, (84, 84))
        # shift the three most recent frames back and put the new frame in channel 0
        self.s_[:, :, 3] = self.s[:, :, 2].copy()
        self.s_[:, :, 2] = self.s[:, :, 1].copy()
        self.s_[:, :, 1] = self.s[:, :, 0].copy()
        self.s_[:, :, 0] = ret
        self.memory.append([self.s.copy(), a, r, self.s_.copy(), terminal])
        self.s = self.s_.copy()
        # once the memory is full, drop the oldest transition and start learning
        if len(self.memory) > self.memory_size:
            self.memory.pop(0)
            self.start_learn = True

    def createQNetwork(self):
        # network weights
        W_conv1 = self.weight_variable([8, 8, 4, 32])
        b_conv1 = self.bias_variable([32])
        W_conv2 = self.weight_variable([4, 4, 32, 64])
        b_conv2 = self.bias_variable([64])
        W_conv3 = self.weight_variable([3, 3, 64, 64])
        b_conv3 = self.bias_variable([64])
        W_fc1 = self.weight_variable([3136, 512])
        b_fc1 = self.bias_variable([512])
        W_fc2 = self.weight_variable([512, self.action_dim])
        b_fc2 = self.bias_variable([self.action_dim])
        # input layer
        stateInput = tf.placeholder("float", [None, 84, 84, 4])
        # hidden layers
        h_conv1 = tf.nn.relu(self.conv2d(stateInput, W_conv1, 4) + b_conv1)
        # h_pool1 = self.max_pool_2x2(h_conv1)
        h_conv2 = tf.nn.relu(self.conv2d(h_conv1, W_conv2, 2) + b_conv2)
        h_conv3 = tf.nn.relu(self.conv2d(h_conv2, W_conv3, 1) + b_conv3)
        h_conv3_shape = h_conv3.get_shape().as_list()
        print("dimension:", h_conv3_shape[1] * h_conv3_shape[2] * h_conv3_shape[3])
        h_conv3_flat = tf.reshape(h_conv3, [-1, 3136])
        h_fc1 = tf.nn.relu(tf.matmul(h_conv3_flat, W_fc1) + b_fc1)
        # Q Value layer
        QValue = tf.matmul(h_fc1, W_fc2) + b_fc2
        return (stateInput, QValue, W_conv1, b_conv1, W_conv2, b_conv2,
                W_conv3, b_conv3, W_fc1, b_fc1, W_fc2, b_fc2)

    def copyTargetQNetwork(self):
        self.sess.run(self.copyTargetQNetworkOperation)

    def createTrainingMethod(self):
        self.yInput = tf.placeholder("float", [None, self.action_dim])
        # mean squared error over all action outputs
        self.cost = tf.reduce_mean(tf.squared_difference(self.yInput, self.QValue))
        # RMSProp: learning rate 0.00025, decay 0.99, momentum 0.0, epsilon 1e-6
        self.trainStep = tf.train.RMSPropOptimizer(0.00025, 0.99, 0.0, 1e-6).minimize(self.cost)

    def learn(self):
        minibatch = random.sample(self.memory, self.batchsize)
        state_batch = np.array([data[0] for data in minibatch])
        action_batch = [data[1] for data in minibatch]
        reward_batch = np.array([data[2] for data in minibatch])
        nextState_batch = np.array([data[3] for data in minibatch])
        terminal = np.array([data[4] for data in minibatch])
        q_eval = self.sess.run(self.QValue, feed_dict={self.stateInput: state_batch})
        q_target = q_eval.copy()
        q_next = self.sess.run(self.QValueT, feed_dict={self.stateInputT: nextState_batch})
        for i in range(0, self.batchsize):
            if terminal[i]:
                q_target[i, action_batch[i]] = reward_batch[i]
            else:
                q_target[i, action_batch[i]] = reward_batch[i] + self.gamma * np.max(q_next[i])
        self.sess.run(self.trainStep, feed_dict={
            self.yInput: q_target,
            self.stateInput: state_batch
        })
        # # save network every 10000 iterations
        # if self.timeStep % 10000 == 0:
        #     self.saver.save(self.session, 'saved_networks/' + 'network' + '-dqn', global_step=self.timeStep)
        # refresh the target network every 1000 training steps
        if self.timeStep % 1000 == 0:
            self.copyTargetQNetwork()
        self.timeStep += 1

    def choose_action(self):
        # act randomly until the replay memory has been filled
        if len(self.memory) < self.memory_size:
            return random.randint(0, 2)
        else:
            ss = self.s[np.newaxis, :, :, :]
            actions_values = self.sess.run(self.QValue, feed_dict={self.stateInput: ss})
            # random.randint is inclusive on both ends, so the greedy action
            # is taken with probability 9/11
            action = np.argmax(actions_values) if random.randint(0, 10) < 9 else random.randint(0, 2)
            return action

    def weight_variable(self, shape):
        initial = tf.truncated_normal(shape, stddev=0.01)
        return tf.Variable(initial)

    def bias_variable(self, shape):
        initial = tf.constant(0.01, shape=shape)
        return tf.Variable(initial)

    def conv2d(self, x, W, stride):
        return tf.nn.conv2d(x, W, strides=[1, stride, stride, 1], padding="VALID")

    def max_pool_2x2(self, x):
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding="SAME")
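The constant 3136 in createQNetwork is the flattened size of the third convolutional layer's output. A quick way to check it is sketched below, assuming the usual output-size formula for VALID padding, floor((n - k) / s) + 1, which matches the padding used in conv2d. The helper name `conv_out` is hypothetical.

```python
def conv_out(size, kernel, stride):
    """Output side length of a VALID (unpadded) convolution."""
    return (size - kernel) // stride + 1

side = 84
# (kernel, stride) for the three conv layers in createQNetwork
for kernel, stride in [(8, 4), (4, 2), (3, 1)]:
    side = conv_out(side, kernel, stride)

flat = side * side * 64  # 64 output channels in the last conv layer
print(side, flat)        # 7 3136
```

The side length goes 84, 20, 9, 7, so the reshape to [-1, 3136] only holds for 84*84 inputs with these kernels and strides.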

# main.py
import gym
import time
import random
from dqn import *

rl = DeepQNetwork()
env = gym.make("Breakout-v4")
episodes = 1000000
# map the network's outputs 0/1/2 to the environment actions LEFT, NOOP, RIGHT
env_action_list = [3, 0, 2]
# for i_episode in range(episodes):
while True:
    # start the game
    env.reset()
    # action 1 is FIRE, which launches the ball
    observation, reward, done, info = env.step(1)
    env.render()
    rl.set_initial_state(observation)
    game_over = False
    lives0 = info["ale.lives"]
    while True:
        action = rl.choose_action()
        action_env = env_action_list[action]
        # action = int(input("enter an action"))
        observation_, reward, done, info = env.step(action_env)
        env.render()
        # print(reward, done, info)
        lives = info["ale.lives"]
        # losing a life ends the episode with a penalty
        if lives < lives0:
            done = True
            reward = -10
        rl.story_memory(observation_, action, reward, done)
        if rl.start_learn:
            rl.learn()
        if done:
            break

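Some directions for improvement

My replay memory stores only 10,000 transitions, which is rather few. Training would work better if the memory could hold 1 million, but when I set the memory size to 100,000 the program failed with an error, and I have not yet had time to investigate the cause.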
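One plausible cause, offered here only as an unverified guess because the error message was not recorded, is running out of RAM. Each transition stores two states created with np.zeros((84, 84, 4)), which defaults to float64. The sketch below estimates the footprint; the helper name `replay_bytes` is hypothetical.

```python
import numpy as np

def replay_bytes(n_transitions, dtype=np.float64, shape=(84, 84, 4)):
    """Approximate bytes needed when every transition stores both s and s_."""
    per_state = int(np.prod(shape)) * np.dtype(dtype).itemsize
    return n_transitions * 2 * per_state

print(replay_bytes(10_000) / 1e9)              # about 4.5 GB as float64
print(replay_bytes(100_000) / 1e9)             # about 45 GB as float64
print(replay_bytes(100_000, np.uint8) / 1e9)   # about 5.6 GB as uint8
```

Since the frames are binarized to 0 or 255, storing them as uint8 and converting to float only when a minibatch is sampled would cut the memory use by a factor of 8 under this estimate.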

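When constructing the state, could we ignore the brick layout altogether, that is, crop away the upper part of the frame so that the DQN attends only to the ball's position? The priority would be catching the ball; whether the ball actually hits a brick is left to chance at first. As long as the agent stays alive, there will always be a chance to hit bricks.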
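A minimal sketch of this idea follows. Instead of physically cropping, it zeroes out the top rows so the frame stays 84*84 and the network definition does not have to change. The helper name `mask_bricks` and the row index 30 are assumptions for illustration; the real boundary of the brick area in the preprocessed frame would need to be measured.

```python
import numpy as np

def mask_bricks(frame, brick_bottom_row):
    """Return a copy of an (84, 84) frame with rows above brick_bottom_row set to 0."""
    masked = frame.copy()
    masked[:brick_bottom_row, :] = 0
    return masked

frame = np.full((84, 84), 255, dtype=np.uint8)   # stand-in for a binarized frame
masked = mask_bricks(frame, brick_bottom_row=30)  # 30 is a hypothetical boundary
```

One caveat with this approach: the ball also disappears from the state while it travels through the masked region, so the agent only sees it again once it returns to the lower part of the screen.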