Low-Light Image and Video Enhancement Using Deep Learning: A Survey (paper reading notes)

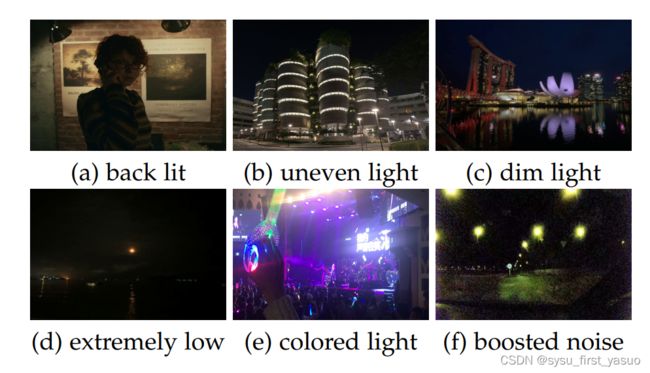

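- Because of poor environmental conditions or a lack of photographic skill, a photographer may end up with low-quality images, such as the following six types:

- Low-light image enhancement is useful in many areas, such as surveillance, autonomous driving, and photo processing. Traditional methods fall into two main families: methods based on histogram equalization and methods based on the Retinex model. The latter use priors or regularization to decompose a low-light image into a reflectance component and an illumination component, and take the resulting reflectance component as the enhanced result. Retinex-based methods, however, have several problems:

- Treating the reflectance component as the enhanced result does not always work; under complex and varied lighting in particular, it often loses detail or distorts color.

- Noise is ignored, so it either remains in the result or is even amplified.

- Runtime is long, because the optimization process is complicated.

- It is hard to find priors or regularizers accurate enough to separate the reflectance and illumination components, and when the prior is wrong the result is usually severely distorted.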
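
The noise problem listed above follows directly from the Retinex model, which treats an image as the element-wise product of reflectance and illumination. A minimal NumPy sketch, assuming the illumination is approximated by the per-pixel maximum over the color channels (a simple hand-crafted prior chosen for illustration, not the prior of any specific method in the survey), shows how taking the reflectance as the result amplifies noise in dark regions. The names `retinex_decompose` and `eps` are hypothetical.

```python
import numpy as np

def retinex_decompose(image, eps=1e-3):
    """Split an RGB image with values in [0, 1] into reflectance and illumination.

    Assumption: illumination is the per-pixel maximum over the channels,
    floored at eps to avoid division by zero.
    """
    illumination = np.maximum(image.max(axis=2, keepdims=True), eps)
    reflectance = image / illumination
    return reflectance, illumination

rng = np.random.default_rng(0)
clean = np.full((32, 32, 3), 0.02)  # a flat, very dark gray patch
noisy = np.clip(clean + rng.normal(0.0, 0.005, clean.shape), 0.0, 1.0)

reflectance, illumination = retinex_decompose(noisy)

# The product of the two components reconstructs the input.
assert np.allclose(reflectance * illumination, noisy)

# Noise that was tiny in the input is much larger in the reflectance.
print("input std:      ", noisy.std())
print("reflectance std:", reflectance.std())
```

Dividing by a small illumination value stretches every fluctuation, which is why the learned methods below add explicit denoising or restoration stages.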

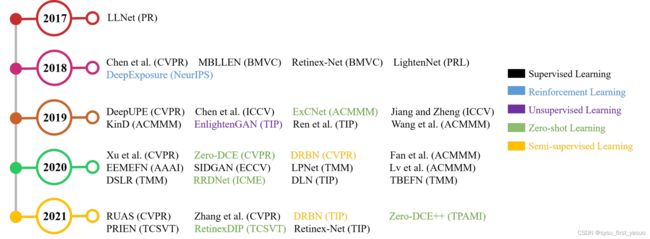

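- In recent years, deep-learning-based low-light image enhancement has achieved good results. The methods cover supervised learning, unsupervised learning, semi-supervised learning, reinforcement learning, and zero-shot learning.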

Direct Enhancement with Neural Networks

- The first was LLNet, which uses a variant of the stacked-sparse denoising autoencoder to brighten and then denoise the image.

K. G. Lore, A. Akintayo, and S. Sarkar, “LLNet: A deep autoencoder approach to natural low-light image enhancement,” PR, vol. 61, pp. 650–662, 2017.

- Next came MBLLEN, a multi-branch network with three modules: feature extraction, enhancement, and fusion.

F. Lv, F. Lu, J. Wu, and C. Lim, “MBLLEN: Low-light image/video enhancement using cnns,” in BMVC, 2018.

- Then an improved version of MBLLEN with three sub-networks: an Illumination-Net, a Fusion-Net, and a Restoration-Net.

F. Lv, B. Liu, and F. Lu, “Fast enhancement for non-uniform illumination images using light-weight cnns,” in ACMMM, 2020, pp. 1450–1458.

- Then a hybrid network that uses an encoder-decoder for content enhancement and an RNN for edge enhancement.

W. Ren, S. Liu, L. Ma, Q. Xu, X. Xu, X. Cao, J. Du, and M.-H. Yang, “Low-light image enhancement via a deep hybrid network,” TIP, vol. 28, no. 9, pp. 4364–4375, 2019.

- Then EEMEFN, which has two stages: multi-exposure fusion and edge enhancement.

M. Zhu, P. Pan, W. Chen, and Y. Yang, “EEMEFN: Low-light image enhancement via edge-enhanced multi-exposure fusion network,” in AAAI, 2020, pp. 13106–13113.

- Then TBEFN, which averages the outputs of two branches and feeds the result into a refinement unit.

K. Lu and L. Zhang, “TBEFN: A two-branch exposure-fusion network for low-light image enhancement,” TMM, 2020.

- Then three papers focused on network architecture: LPNet, a ResNet-based network whose name I did not note, and DSLR.

J. Li, J. Li, F. Fang, F. Li, and G. Zhang, “Luminance-aware pyramid network for low-light image enhancement,” TMM, 2020.

L. Wang, Z. Liu, W. Siu, and D. P. K. Lun, “Lightening network for low-light image enhancement,” TIP, vol. 29, pp. 7984–7996, 2020.

S. Lim and W. Kim, “DSLR: Deep stacked laplacian restorer for low-light image enhancement,” TMM, 2020.

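- Then a network that performs a frequency decomposition and then applies enhancement and denoising to the high-frequency and low-frequency components separately: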

K. Xu, X. Yang, B. Yin, and R. W. H. Lau, “Learning to restore low-light images via decomposition-and-enhancement,” in CVPR, 2020, pp. 2281–2290.
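
The idea of splitting an image into frequency layers and treating them differently can be sketched with a hand-crafted filter. This is only an illustration: the box blur, the fixed gain of 4, and the 0.5 attenuation are assumptions standing in for the learned components of the cited network, and the helper names `box_blur` and `split_frequencies` are hypothetical.

```python
import numpy as np

def box_blur(image, radius=2):
    """Average each pixel with its (2*radius+1)^2 neighborhood (edge-padded)."""
    padded = np.pad(image, radius, mode="edge")
    size = 2 * radius + 1
    h, w = image.shape
    total = np.zeros_like(image, dtype=np.float64)
    for dy in range(size):
        for dx in range(size):
            total += padded[dy:dy + h, dx:dx + w]
    return total / (size * size)

def split_frequencies(image, radius=2):
    low = box_blur(image, radius)  # smooth content: overall brightness
    high = image - low             # residual: edges, texture, and noise
    return low, high

rng = np.random.default_rng(0)
image = np.clip(0.05 + rng.normal(0.0, 0.01, (16, 16)), 0.0, 1.0)
low, high = split_frequencies(image)

# Brighten the low-frequency layer and attenuate the high-frequency layer,
# which carries most of the noise in this synthetic example.
enhanced = np.clip(4.0 * low + 0.5 * high, 0.0, 1.0)
print(image.mean(), enhanced.mean())
```

Because the two layers sum back to the input, whatever is done to each layer can be reasoned about independently.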

- Then a network that enhances the image recursively:

J. Li, X. Feng, and Z. Hua, “Low-light image enhancement via progressive-recursive network,” TCSVT, 2021.

- This one targets video:

F. Zhang, Y. Li, S. You, and Y. Fu, “Learning temporal consistency for low light video enhancement from single images,” in CVPR, 2021.

Deep Learning Combined with the Retinex Model

- The theory behind the Retinex model is covered in the following two papers:

E. H. Land, “An alternative technique for the computation of the designator in the retinex theory of color vision,” National Academy of Sciences, vol. 83, no. 10, pp. 3078–3080, 1986.

D. J. Jobson, Z. ur Rahman, and G. A. Woodell, “Properties and performance of a center/surround retinex,” TIP, vol. 6, no. 3, pp. 451–462, 1997.
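
As a reference for the methods in this section, the Retinex model is commonly written as the element-wise product of a reflectance component and an illumination component, and noise-aware variants add an explicit noise term. The symbols $I$ (observed image), $R$ (reflectance), $L$ (illumination), and $N$ (noise) are notation chosen for these notes rather than taken from a specific paper.

$$
I = R \circ L, \qquad I = R \circ L + N
$$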

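- First is Retinex-Net, which uses a decomposition network to separate the illumination and reflectance components and then an enhancement network to adjust the illumination component.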

C. Wei, W. Wang, W. Yang, and J. Liu, “Deep retinex decomposition for low-light enhancement,” in BMVC, 2018.

- Then an improvement on Retinex-Net:

W. Yang, W. Wang, H. Huang, S. Wang, and J. Liu, “Sparse gradient regularized deep retinex network for robust low-light image enhancement,” TIP, vol. 30, pp. 2072–2086, 2021.

- Then a lightweight network (4 layers):

C. Li, J. Guo, F. Porikli, and Y. Pang, “LightenNet: A convolutional neural network for weakly illuminated image enhancement,” PRL, vol. 104, pp. 15–22, 2018.

- Then DeepUPE, which extracts global and local information to learn an image-to-illumination mapping.

R. Wang, Q. Zhang, C.-W. Fu, X. Shen, W.-S. Zheng, and J. Jia, “Underexposed photo enhancement using deep illumination estimation,” in CVPR, 2019, pp. 6849–6857.

- Then KinD, which uses three networks for layer decomposition, reflectance restoration, and illumination adjustment.

Y. Zhang, J. Zhang, and X. Guo, “Kindling the darkness: A practical low-light image enhancer,” in ACMMM, 2019, pp. 1632–1640.

- Then an improved version, KinD++:

X. Guo, Y. Zhang, J. Ma, W. Liu, and J. Zhang, “Beyond brightening low-light images,” IJCV, 2020.

- Then a model that accounts for noise in the Retinex model and progressively denoises and enhances until the result is stable.

Y. Wang, Y. Cao, Z. Zha, J. Zhang, Z. Xiong, W. Zhang, and F. Wu, “Progressive retinex: Mutually reinforced illumination-noise perception network for low-light image enhancement,” in ACMMM, 2019, pp. 2015–2023.

- Then a model that uses semantic segmentation as prior information.

M. Fan, W. Wang, W. Yang, and J. Liu, “Integrating semantic segmentation and retinex model for low light image enhancement,” in ACMMM, 2020, pp. 2317–2325.

- Then a method that uses architecture search to obtain an efficient network structure.

R. Liu, L. Ma, J. Zhang, X. Fan, and Z. Luo, “Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement,” in CVPR, 2021.

Datasets

- The ground truth (GT) of this dataset is the best result selected from the outputs of 13 enhancement methods:

J. Cai, S. Gu, and L. Zhang, “Learning a deep single image contrast enhancer from multi-exposure images,” TIP, vol. 27, no. 4, pp. 2049–2062, 2018.

- SID

C. Chen, Q. Chen, J. Xu, and V. Koltun, “Learning to see in the dark,” in CVPR, 2018, pp. 3291–3300.

- DRV (video)

C. Chen, Q. Chen, M. N. Do, and V. Koltun, “Seeing motion in the dark,” in ICCV, 2019, pp. 3185–3194.

- SMOID (video)

H. Jiang and Y. Zheng, “Learning to see moving object in the dark,” in ICCV, 2019, pp. 7324–7333.

Reinforcement Learning

- DeepExposure, which uses unpaired dark images.

R. Yu, W. Liu, Y. Zhang, Z. Qu, D. Zhao, and B. Zhang, “DeepExposure: Learning to expose photos with asynchronously reinforced adversarial learning,” in NeurIPS, 2018, pp. 2149–2159.

Unsupervised Learning

- EnlightenGAN, which uses an attention-guided U-Net as the generator together with global-local discriminators, and introduces self feature preserving losses.

Y. Jiang, X. Gong, D. Liu, Y. Cheng, C. Fang, X. Shen, J. Yang, P. Zhou, and Z. Wang, “EnlightenGAN: Deep light enhancement without paired supervision,” TIP, vol. 30, pp. 2340–2349, 2021.

Zero-Shot Learning

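- ExCNet: a network estimates the S-curve for the input; the image is then split into two layers, a base layer and a detail layer; the base layer is adjusted with the estimated S-curve; finally the two layers are fused using Weber contrast.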

L. Zhang, L. Zhang, X. Liu, Y. Shen, S. Zhang, and S. Zhao, “Zero-shot restoration of back-lit images using deep internal learning,” in ACMMM, 2019, pp. 1623–1631.

- RRDNet: uses a variety of losses to decompose the image into illumination, reflectance, and noise components.

A. Zhu, L. Zhang, Y. Shen, Y. Ma, S. Zhao, and Y. Zhou, “Zero-shot restoration of underexposed images via robust retinex decomposition,” in ICME, 2020, pp. 1–6.

- RetinexDIP: inspired by Deep Image Prior, it uses randomly sampled white noise and several losses.

Z. Zhao, B. Xiong, L. Wang, Q. Ou, L. Yu, and F. Kuang, “Retinexdip: A unified deep framework for low-light image enhancement,” TCSVT, 2021.

- Zero-DCE: image-specific curve estimation

C. Guo, C. Li, J. Guo, C. C. Loy, J. Hou, S. Kwong, and R. Cong, “Zero-reference deep curve estimation for low-light image enhancement,” in CVPR, 2020, pp. 1780–1789.
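
Curve estimation in Zero-DCE brightens an image by repeatedly applying a quadratic curve of the form x + a·x·(1 − x) to pixel values in [0, 1]. The sketch below applies that curve with NumPy. Zero-DCE predicts per-pixel parameter maps with a network; the fixed scalar parameters used here, and the function name `apply_curve`, are assumptions made to keep the example short.

```python
import numpy as np

def apply_curve(image, alphas):
    """Iteratively apply the quadratic curve x + a * x * (1 - x).

    image:  float array with values in [0, 1].
    alphas: one curve parameter in [-1, 1] per iteration (scalars here,
            which is a simplification of the learned per-pixel maps).
    """
    enhanced = np.asarray(image, dtype=np.float64)
    for alpha in alphas:
        enhanced = enhanced + alpha * enhanced * (1.0 - enhanced)
    return enhanced

dark = np.linspace(0.0, 1.0, 5)  # 0, 0.25, 0.5, 0.75, 1
bright = apply_curve(dark, alphas=[0.8] * 8)
print(bright)
```

For parameters in [-1, 1] the curve keeps 0 and 1 fixed and stays inside [0, 1], so repeated application brightens mid-tones without clipping.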

- Zero-DCE++

C. Li, C. Guo, and C. C. Loy, “Learning to enhance low-light image via zero-reference deep curve estimation,” TPAMI, 2021.

Semi-Supervised Learning

- DRBN: first learns a representation with a supervised method, then recomposes that representation with an unsupervised method.

W. Yang, S. Wang, Y. Fang, Y. Wang, and J. Liu, “From fidelity to perceptual quality: A semi-supervised approach for low-light image enhancement,” in CVPR, 2020, pp. 3063–3072.

- An extension of DRBN that adds an LSTM:

W. Yang, S. Wang, Y. Fang, Y. Wang, and J. Liu, “Band representation-based semi-supervised low-light image enhancement: Bridging the gap between signal fidelity and perceptual quality,” TIP, vol. 30, pp. 3461–3473, 2021.

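- The difficulty with supervised learning is that large real-world paired datasets are hard to collect, while models trained on synthetic data do not generalize to real images; supervised methods also tend to generalize poorly in general.

- For unsupervised and semi-supervised learning, the difficulties are training stability, color distortion, and how to exploit information from multiple domains.

- For reinforcement learning, the difficulties are designing an effective reward mechanism and training stably and efficiently.

- For zero-shot learning, the difficulty lies in designing the loss functions.

- Existing network structures fall into four main types: U-Net, pyramid, multi-stage, and frequency decomposition. These have drawbacks such as vanishing gradients, introduced noise, and not accounting for the characteristics of low-light images.

- Methods that combine Retinex theory with deep learning also have problems: the built-in Retinex assumption that "the reflectance component is the enhanced result" still has negative effects, and deep networks are prone to overfitting. The key is how to combine the two so that each compensates for the other's weaknesses.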

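Loss Functions

- Reconstruction loss: L1 loss, L2 loss, SSIM loss. L1 loss keeps color distortion from becoming too severe, and SSIM loss keeps texture detail from being lost.

- Perceptual loss

- Smoothness loss: TV loss, used to suppress noise

- Adversarial loss

- Exposure loss: a typical loss for unsupervised and zero-shot methods; it can be computed without a high-quality reference image.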
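
Some of the losses above are simple enough to sketch in NumPy for a single grayscale image. The exposure loss below loosely follows the idea used in Zero-DCE (distance between local mean intensity and a target level); the `patch` size and `target_level` values are illustrative assumptions. SSIM, perceptual, and adversarial losses need more machinery and are left out.

```python
import numpy as np

def l1_loss(pred, target):
    """Mean absolute error between prediction and reference."""
    return np.mean(np.abs(pred - target))

def l2_loss(pred, target):
    """Mean squared error between prediction and reference."""
    return np.mean((pred - target) ** 2)

def tv_loss(image):
    """Total variation: mean absolute difference between neighboring pixels."""
    dh = np.abs(image[1:, :] - image[:-1, :])
    dw = np.abs(image[:, 1:] - image[:, :-1])
    return dh.mean() + dw.mean()

def exposure_loss(image, patch=4, target_level=0.6):
    """Distance between local mean intensity and a target exposure level.

    No reference image is needed, only the image being evaluated.
    """
    h, w = image.shape
    h, w = h - h % patch, w - w % patch  # crop so patches tile exactly
    blocks = image[:h, :w].reshape(h // patch, patch, w // patch, patch)
    local_mean = blocks.mean(axis=(1, 3))
    return np.mean(np.abs(local_mean - target_level))

rng = np.random.default_rng(0)
target = np.full((8, 8), 0.6)
noisy = target + rng.normal(0.0, 0.05, target.shape)

print(l1_loss(noisy, target), l2_loss(noisy, target))
print(tv_loss(target), tv_loss(noisy))  # the flat image has zero TV
print(exposure_loss(target), exposure_loss(target * 0.1))
```

The reconstruction losses need a paired reference, while the TV and exposure losses are computed from the output alone, which is what makes them usable in unsupervised and zero-shot settings.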

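Evaluation Metrics

- PSNR, MSE, and MAE are commonly used image quality assessment (IQA) metrics.

- SSIM is a perception-based metric that measures the loss of structural information.

- LOE (lightness order error) measures lightness error.

- Evaluation metrics from high-level tasks can also be informative.

- Apart from LOE, which was proposed specifically for low-light image enhancement, the existing metrics are not really suited to it, so proposing new metrics tailored to low-light image enhancement is also a research direction.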
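
The full-reference metrics listed above (MSE, MAE, PSNR) can be sketched in a few lines of NumPy, assuming images are float arrays with values in [0, 1]. The `max_value` parameter is an assumption for that range; 8-bit images would use 255.

```python
import numpy as np

def mse(pred, target):
    """Mean squared error; lower is better."""
    return float(np.mean((pred - target) ** 2))

def mae(pred, target):
    """Mean absolute error; lower is better."""
    return float(np.mean(np.abs(pred - target)))

def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB; higher is better."""
    error = mse(pred, target)
    if error == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / error))

target = np.zeros((4, 4))
pred = np.full((4, 4), 0.1)
print(mse(pred, target), mae(pred, target), psnr(pred, target))
```

A uniform error of 0.1 on a [0, 1] scale gives an MSE of about 0.01 and a PSNR of about 20 dB. All three metrics compare pixel values only, which is part of why they track perceived low-light quality poorly.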