[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced

Author: Horizon Max


- Xception
- Xception Explained
  - Xception Network Architecture
    - Architecture Exploration
    - Xception Architecture Diagram
  - Xception Experiments
- Xception Reproduction

Xception

Xception is a deep convolutional neural network architecture inspired by Inception.

It replaces the Inception modules with depthwise separable convolutions.

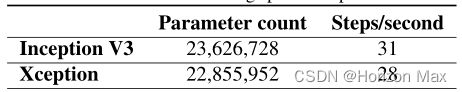
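To make the saving from a depthwise separable convolution concrete, here is a minimal sketch that counts weights only (no bias). The helper names `conv_params` and `separable_conv_params` are made up for this illustration, and 728 is used because it is the channel width of Xception's middle flow.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Number of weights in a bias-free 2D convolution with a k x k kernel."""
    if c_in % groups != 0 or c_out % groups != 0:
        raise ValueError("channel counts must be divisible by groups")
    return (c_in // groups) * c_out * k * k


def separable_conv_params(c_in, c_out, k):
    """Depthwise k x k convolution (one filter per channel) plus a 1x1 pointwise convolution."""
    depthwise = conv_params(c_in, c_in, k, groups=c_in)
    pointwise = conv_params(c_in, c_out, 1)
    return depthwise + pointwise


standard = conv_params(728, 728, 3)             # regular 3x3 convolution
separable = separable_conv_params(728, 728, 3)  # depthwise 3x3 + pointwise 1x1
print(standard, separable)
```

For the same input and output width, the separable version needs roughly one ninth of the weights of a regular 3×3 convolution, which is why the saved budget can be spent on more layers.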

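Xception has about the same number of parameters as Inception V3, so its better performance comes from using those parameters more efficiently rather than from extra capacity.

Paper: Xception: Deep Learning with Depthwise Separable Convolutions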

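Xception Explained

Xception Network Architecture

Architecture Exploration

(1) Start from the canonical Inception module.

(2) Simplify the Inception module: keep only 3×3 convolutions and drop the average-pooling tower.

(3) Merge all the 1×1 convolutions into a single large 1×1 convolution; each 3×3 convolution then operates on its own non-overlapping segment of the output channels.

(4) Make the number of 3×3 convolutions equal to the number of output channels of the 1×1 convolution, so that each 3×3 convolution handles exactly one channel.

This "extreme" Inception module is the new architecture proposed by the authors: Xception.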
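The move from step (3) to step (4) is just a change in how the output channels of the 1×1 convolution are partitioned among the 3×3 convolutions. The sketch below illustrates that partitioning with plain channel indices; `channel_segments` and the width of 12 are hypothetical and chosen only to keep the printout short.

```python
def channel_segments(num_channels, num_groups):
    """Split channel indices into equal, non-overlapping segments, one per spatial convolution."""
    if num_channels % num_groups != 0:
        raise ValueError("num_channels must be divisible by num_groups")
    size = num_channels // num_groups
    return [list(range(g * size, (g + 1) * size)) for g in range(num_groups)]


# Hypothetical width of the merged 1x1 convolution output (small for readability).
width = 12

# Step (3): a few 3x3 convolutions, each seeing one segment of the 1x1 output.
inception_like = channel_segments(width, 3)

# Step (4): one 3x3 convolution per output channel of the 1x1 convolution.
extreme = channel_segments(width, width)

print(inception_like)
print(extreme)
```

In PyTorch the same partitioning is expressed through the `groups` argument of `nn.Conv2d`: `groups=1` is a regular convolution, and `groups=in_channels` gives the one-filter-per-channel (depthwise) case.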

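Xception Architecture Diagram

The Xception architecture has 36 convolutional layers, which form the feature-extraction base of the network.

This convolutional base is followed by a logistic regression layer; optionally, fully connected layers can be inserted before the logistic regression layer.

The 36 convolutional layers are organized into 14 modules, and every module except the first and the last has a residual connection around it.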
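The counts of 36 layers and 14 modules can be checked with a short tally. This is one way of counting, under the assumption that the 1×1 convolutions on the shortcut branches are not included; the module names are labels invented for this sketch.

```python
# Each entry: (module label, number of convolutional layers, has a residual shortcut).
modules = (
    [("entry stem", 2, False)]                                  # two regular 3x3 convolutions
    + [(f"entry block {i}", 2, True) for i in range(1, 4)]      # 3 modules x 2 separable convs
    + [(f"middle block {i}", 3, True) for i in range(1, 9)]     # 8 modules x 3 separable convs
    + [("exit block", 2, True), ("exit tail", 2, False)]        # 2 + 2 separable convs
)

total_convs = sum(num_convs for _, num_convs, _ in modules)
without_residual = [name for name, _, residual in modules if not residual]

print(len(modules), total_convs, without_residual)
```

Only the first and the last module come out without a shortcut, which agrees with the description above.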

Xception Experiments

Xception Reproduction

    # Here is the code:
    import torch
    import torch.nn as nn
    from torchinfo import summary


    class SeparableConv(nn.Module):
        """Depthwise separable convolution: a per-channel spatial conv, then a 1x1 pointwise conv."""

        def __init__(self, in_channels, out_channels, kernel_size=1, stride=1, padding=0, dilation=1):
            super().__init__()
            # groups=in_channels makes this a depthwise convolution (one filter per input channel).
            self.conv = nn.Conv2d(in_channels, in_channels, kernel_size, stride, padding,
                                  dilation, groups=in_channels, bias=False)
            # The 1x1 pointwise convolution mixes information across channels.
            self.pointwise = nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=1, padding=0,
                                       dilation=1, groups=1, bias=False)

        def forward(self, x):
            x = self.conv(x)
            x = self.pointwise(x)
            return x


    class Block(nn.Module):
        """Residual module: a stack of (ReLU, SeparableConv, BatchNorm) plus a shortcut branch."""

        def __init__(self, in_channels, out_channels, num_conv, strides=1, start_with_relu=True, channel_change=True):
            super().__init__()

            # Project the shortcut with a strided 1x1 conv whenever the output shape differs from the input.
            if out_channels != in_channels or strides != 1:
                self.shortcut = nn.Sequential(
                    nn.Conv2d(in_channels, out_channels, 1, stride=strides, bias=False),
                    nn.BatchNorm2d(out_channels)
                )
            else:
                self.shortcut = nn.Sequential()  # identity

            layers = []
            channels = in_channels

            # channel_change=True: the first separable conv changes the channel count.
            if channel_change:
                layers.append(nn.ReLU(inplace=True))
                layers.append(SeparableConv(in_channels, out_channels, 3, stride=1, padding=1))
                layers.append(nn.BatchNorm2d(out_channels))
                channels = out_channels

            for _ in range(num_conv - 1):
                layers.append(nn.ReLU(inplace=True))
                layers.append(SeparableConv(channels, channels, 3, stride=1, padding=1))
                layers.append(nn.BatchNorm2d(channels))

            # channel_change=False: the last separable conv changes the channel count.
            if not channel_change:
                layers.append(nn.ReLU(inplace=True))
                layers.append(SeparableConv(in_channels, out_channels, 3, stride=1, padding=1))
                layers.append(nn.BatchNorm2d(out_channels))

            if start_with_relu:
                # The leading ReLU must not be in-place: it would overwrite x
                # before the shortcut branch reads it in forward().
                layers[0] = nn.ReLU(inplace=False)
            else:
                layers = layers[1:]

            if strides != 1:
                layers.append(nn.MaxPool2d(3, strides, 1))

            self.layers = nn.Sequential(*layers)

        def forward(self, x):
            layer = self.layers(x)
            shortcut = self.shortcut(x)
            out = layer + shortcut
            return out


    class Xception(nn.Module):
        def __init__(self, num_classes=1000):
            super().__init__()
            self.num_classes = num_classes

            # Entry flow
            self.entry_flow = nn.Sequential(
                nn.Conv2d(3, 32, 3, 2, bias=False),
                nn.BatchNorm2d(32),
                nn.ReLU(inplace=True),
                nn.Conv2d(32, 64, 3, bias=False),
                nn.BatchNorm2d(64),
                nn.ReLU(inplace=True),
                Block(64, 128, 2, 2, start_with_relu=False, channel_change=True),
                Block(128, 256, 2, 2, start_with_relu=True, channel_change=True),
                Block(256, 728, 2, 2, start_with_relu=True, channel_change=True),
            )

            # Middle flow: eight identical residual blocks
            self.middle_flow = nn.Sequential(
                *[Block(728, 728, 3, 1, start_with_relu=True, channel_change=True) for _ in range(8)]
            )

            # Exit flow
            self.exit_flow = nn.Sequential(
                Block(728, 1024, 2, 2, start_with_relu=True, channel_change=False),
                SeparableConv(1024, 1536, 3, 1, 1),
                nn.BatchNorm2d(1536),
                nn.ReLU(inplace=True),
                SeparableConv(1536, 2048, 3, 1, 1),
                nn.BatchNorm2d(2048),
                nn.ReLU(inplace=True),
                nn.AdaptiveAvgPool2d((1, 1)),
            )

            self.fc = nn.Linear(2048, num_classes)

        def forward(self, x):
            x = self.entry_flow(x)
            x = self.middle_flow(x)
            x = self.exit_flow(x)
            x = torch.flatten(x, 1)  # (N, 2048, 1, 1) -> (N, 2048)
            out = self.fc(x)
            return out


    def test():
        net = Xception()
        y = net(torch.randn(1, 3, 224, 224))
        print(y.size())
        summary(net, (1, 3, 224, 224), depth=5)


    if __name__ == '__main__':
        test()

Output:

torch.Size([1, 1000])

===============================================================================================

Layer (type:depth-idx) Output Shape Param #

===============================================================================================

Xception -- --

├─Sequential: 1-1 [1, 728, 14, 14] --

│ └─Conv2d: 2-1 [1, 32, 111, 111] 864

│ └─BatchNorm2d: 2-2 [1, 32, 111, 111] 64

│ └─ReLU: 2-3 [1, 32, 111, 111] --

│ └─Conv2d: 2-4 [1, 64, 109, 109] 18,432

│ └─BatchNorm2d: 2-5 [1, 64, 109, 109] 128

│ └─ReLU: 2-6 [1, 64, 109, 109] --

│ └─Block: 2-7 [1, 128, 55, 55] --

│ │ └─Sequential: 3-1 [1, 128, 55, 55] --

│ │ │ └─SeparableConv: 4-1 [1, 128, 109, 109] --

│ │ │ │ └─Conv2d: 5-1 [1, 64, 109, 109] 576

│ │ │ │ └─Conv2d: 5-2 [1, 128, 109, 109] 8,192

│ │ │ └─BatchNorm2d: 4-2 [1, 128, 109, 109] 256

│ │ │ └─ReLU: 4-3 [1, 128, 109, 109] --

│ │ │ └─SeparableConv: 4-4 [1, 128, 109, 109] --

│ │ │ │ └─Conv2d: 5-3 [1, 128, 109, 109] 1,152

│ │ │ │ └─Conv2d: 5-4 [1, 128, 109, 109] 16,384

│ │ │ └─BatchNorm2d: 4-5 [1, 128, 109, 109] 256

│ │ │ └─MaxPool2d: 4-6 [1, 128, 55, 55] --

│ │ └─Sequential: 3-2 [1, 128, 55, 55] --

│ │ │ └─Conv2d: 4-7 [1, 128, 55, 55] 8,192

│ │ │ └─BatchNorm2d: 4-8 [1, 128, 55, 55] 256

│ └─Block: 2-8 [1, 256, 28, 28] --

│ │ └─Sequential: 3-3 [1, 256, 28, 28] --

│ │ │ └─ReLU: 4-9 [1, 128, 55, 55] --

│ │ │ └─SeparableConv: 4-10 [1, 256, 55, 55] --

│ │ │ │ └─Conv2d: 5-5 [1, 128, 55, 55] 1,152

│ │ │ │ └─Conv2d: 5-6 [1, 256, 55, 55] 32,768

│ │ │ └─BatchNorm2d: 4-11 [1, 256, 55, 55] 512

│ │ │ └─ReLU: 4-12 [1, 256, 55, 55] --

│ │ │ └─SeparableConv: 4-13 [1, 256, 55, 55] --

│ │ │ │ └─Conv2d: 5-7 [1, 256, 55, 55] 2,304

│ │ │ │ └─Conv2d: 5-8 [1, 256, 55, 55] 65,536

│ │ │ └─BatchNorm2d: 4-14 [1, 256, 55, 55] 512

│ │ │ └─MaxPool2d: 4-15 [1, 256, 28, 28] --

│ │ └─Sequential: 3-4 [1, 256, 28, 28] --

│ │ │ └─Conv2d: 4-16 [1, 256, 28, 28] 32,768

│ │ │ └─BatchNorm2d: 4-17 [1, 256, 28, 28] 512

│ └─Block: 2-9 [1, 728, 14, 14] --

│ │ └─Sequential: 3-5 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-18 [1, 256, 28, 28] --

│ │ │ └─SeparableConv: 4-19 [1, 728, 28, 28] --

│ │ │ │ └─Conv2d: 5-9 [1, 256, 28, 28] 2,304

│ │ │ │ └─Conv2d: 5-10 [1, 728, 28, 28] 186,368

│ │ │ └─BatchNorm2d: 4-20 [1, 728, 28, 28] 1,456

│ │ │ └─ReLU: 4-21 [1, 728, 28, 28] --

│ │ │ └─SeparableConv: 4-22 [1, 728, 28, 28] --

│ │ │ │ └─Conv2d: 5-11 [1, 728, 28, 28] 6,552

│ │ │ │ └─Conv2d: 5-12 [1, 728, 28, 28] 529,984

│ │ │ └─BatchNorm2d: 4-23 [1, 728, 28, 28] 1,456

│ │ │ └─MaxPool2d: 4-24 [1, 728, 14, 14] --

│ │ └─Sequential: 3-6 [1, 728, 14, 14] --

│ │ │ └─Conv2d: 4-25 [1, 728, 14, 14] 186,368

│ │ │ └─BatchNorm2d: 4-26 [1, 728, 14, 14] 1,456

├─Sequential: 1-2 [1, 728, 14, 14] --

│ └─Block: 2-10 [1, 728, 14, 14] --

│ │ └─Sequential: 3-7 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-27 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-28 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-13 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-14 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-29 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-30 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-31 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-15 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-16 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-32 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-33 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-34 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-17 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-18 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-35 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-8 [1, 728, 14, 14] --

│ └─Block: 2-11 [1, 728, 14, 14] --

│ │ └─Sequential: 3-9 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-36 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-37 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-19 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-20 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-38 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-39 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-40 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-21 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-22 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-41 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-42 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-43 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-23 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-24 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-44 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-10 [1, 728, 14, 14] --

│ └─Block: 2-12 [1, 728, 14, 14] --

│ │ └─Sequential: 3-11 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-45 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-46 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-25 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-26 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-47 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-48 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-49 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-27 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-28 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-50 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-51 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-52 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-29 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-30 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-53 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-12 [1, 728, 14, 14] --

│ └─Block: 2-13 [1, 728, 14, 14] --

│ │ └─Sequential: 3-13 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-54 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-55 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-31 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-32 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-56 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-57 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-58 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-33 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-34 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-59 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-60 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-61 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-35 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-36 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-62 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-14 [1, 728, 14, 14] --

│ └─Block: 2-14 [1, 728, 14, 14] --

│ │ └─Sequential: 3-15 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-63 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-64 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-37 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-38 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-65 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-66 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-67 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-39 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-40 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-68 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-69 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-70 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-41 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-42 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-71 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-16 [1, 728, 14, 14] --

│ └─Block: 2-15 [1, 728, 14, 14] --

│ │ └─Sequential: 3-17 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-72 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-73 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-43 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-44 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-74 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-75 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-76 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-45 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-46 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-77 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-78 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-79 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-47 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-48 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-80 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-18 [1, 728, 14, 14] --

│ └─Block: 2-16 [1, 728, 14, 14] --

│ │ └─Sequential: 3-19 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-81 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-82 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-49 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-50 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-83 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-84 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-85 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-51 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-52 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-86 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-87 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-88 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-53 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-54 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-89 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-20 [1, 728, 14, 14] --

│ └─Block: 2-17 [1, 728, 14, 14] --

│ │ └─Sequential: 3-21 [1, 728, 14, 14] --

│ │ │ └─ReLU: 4-90 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-91 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-55 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-56 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-92 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-93 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-94 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-57 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-58 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-95 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-96 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-97 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-59 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-60 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-98 [1, 728, 14, 14] 1,456

│ │ └─Sequential: 3-22 [1, 728, 14, 14] --

├─Sequential: 1-3 [1, 2048, 1, 1] --

│ └─Block: 2-18 [1, 1024, 7, 7] --

│ │ └─Sequential: 3-23 [1, 1024, 7, 7] --

│ │ │ └─ReLU: 4-99 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-100 [1, 728, 14, 14] --

│ │ │ │ └─Conv2d: 5-61 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-62 [1, 728, 14, 14] 529,984

│ │ │ └─BatchNorm2d: 4-101 [1, 728, 14, 14] 1,456

│ │ │ └─ReLU: 4-102 [1, 728, 14, 14] --

│ │ │ └─SeparableConv: 4-103 [1, 1024, 14, 14] --

│ │ │ │ └─Conv2d: 5-63 [1, 728, 14, 14] 6,552

│ │ │ │ └─Conv2d: 5-64 [1, 1024, 14, 14] 745,472

│ │ │ └─BatchNorm2d: 4-104 [1, 1024, 14, 14] 2,048

│ │ │ └─MaxPool2d: 4-105 [1, 1024, 7, 7] --

│ │ └─Sequential: 3-24 [1, 1024, 7, 7] --

│ │ │ └─Conv2d: 4-106 [1, 1024, 7, 7] 745,472

│ │ │ └─BatchNorm2d: 4-107 [1, 1024, 7, 7] 2,048

│ └─SeparableConv: 2-19 [1, 1536, 7, 7] --

│ │ └─Conv2d: 3-25 [1, 1024, 7, 7] 9,216

│ │ └─Conv2d: 3-26 [1, 1536, 7, 7] 1,572,864

│ └─BatchNorm2d: 2-20 [1, 1536, 7, 7] 3,072

│ └─ReLU: 2-21 [1, 1536, 7, 7] --

│ └─SeparableConv: 2-22 [1, 2048, 7, 7] --

│ │ └─Conv2d: 3-27 [1, 1536, 7, 7] 13,824

│ │ └─Conv2d: 3-28 [1, 2048, 7, 7] 3,145,728

│ └─BatchNorm2d: 2-23 [1, 2048, 7, 7] 4,096

│ └─ReLU: 2-24 [1, 2048, 7, 7] --

│ └─AdaptiveAvgPool2d: 2-25 [1, 2048, 1, 1] --

├─Linear: 1-4 [1, 1000] 2,049,000

===============================================================================================

Total params: 22,855,952

Trainable params: 22,855,952

Non-trainable params: 0

Total mult-adds (G): 4.55

===============================================================================================

Input size (MB): 0.60

Forward/backward pass size (MB): 250.18

Params size (MB): 91.42

Estimated Total Size (MB): 342.20

===============================================================================================
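The spatial sizes in the summary (224 → 111 → 109 → 55 → 28 → 14 → 7) follow from the usual convolution and pooling output-size formula. The sketch below traces them by hand; `out_size` and `trace_spatial_sizes` are helper names introduced here, and the kernel, stride, and padding values are read off the code above.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output length of a conv or pooling layer along one spatial dimension (floor mode)."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_spatial_sizes(n):
    """Follow the feature-map side length through the layers that change it."""
    sizes = [n]
    sizes.append(out_size(sizes[-1], kernel=3, stride=2))  # stem conv 1: 3x3, stride 2, no padding
    sizes.append(out_size(sizes[-1], kernel=3, stride=1))  # stem conv 2: 3x3, stride 1, no padding
    for _ in range(3):  # three entry-flow blocks, each ending in MaxPool2d(3, 2, 1)
        sizes.append(out_size(sizes[-1], kernel=3, stride=2, padding=1))
    # The middle flow uses stride 1 with padding 1, so the size does not change there.
    sizes.append(out_size(sizes[-1], kernel=3, stride=2, padding=1))  # exit-flow block max pool
    return sizes


print(trace_spatial_sizes(224))
print(trace_spatial_sizes(299))  # 299x299 is the input size used in the paper
```

The 1×1 stride-2 convolution on each shortcut gives `out_size(n, 1, 2)`, which equals the max-pool result `out_size(n, 3, 2, 1)` for every `n`, so the two branches can always be added.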

![[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced, image 1](http://img.e-com-net.com/image/info8/e5501cfb554a40a48ba75bd5584a39b3.jpg)

![[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced, image 2](http://img.e-com-net.com/image/info8/7b78a5594a12413e803ed86dc1210404.jpg)

![[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced, image 3](http://img.e-com-net.com/image/info8/c3d832cfad1f403c8ef72e50b6cbfa9a.jpg)

![[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced, image 4](http://img.e-com-net.com/image/info8/0ae70e71bbad44b084216f2aa76f3230.jpg)

![[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced, image 5](http://img.e-com-net.com/image/info8/3f8c22e4a93b44e4bca6d2c704377bba.jpg)

![[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced, image 6](http://img.e-com-net.com/image/info8/1fffa29bff02422cbfda97aa86e3df98.jpg)

![[Lightweight Networks] Classic Network Models 4: Xception Explained and Reproduced, image 7](http://img.e-com-net.com/image/info8/986133a4b80049549e7971342da78b5b.jpg)