BERT Models and Their Application Scenarios

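Table of Contents

- 1. Pros and Cons of the Transformer
- 2. Why an LSTM Is Still Used in Sequence Labeling Tasks
- 3. Model Fusion
  - 3.1 Combining Character and Word Vectors
  - 3.2 Mask-Aware Max Pooling
  - 3.3 Mask-Aware Average Pooling
  - 3.4 BERT Fine-Tuning
  - 3.5 BERT+TextCNN
  - 3.6 BERT + RNN + CNN
  - 3.7 10-Fold Cross Training
  - Fusion Code Reference
- 4. Model Downloads
  - 4.1 Chinese Model Downloads
  - 4.2 ALBERT v2 Downloads
  - 4.3 Pretrained Models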

1. Pros and Cons of the Transformer

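Pros: (1) Although the Transformer does not fully escape the conventional deep learning recipe (it is essentially fully connected layers, equivalently 1D convolutions with kernel size 1, combined with attention), its design is genuinely innovative: it discards the RNNs and CNNs that NLP had been built on and still achieves very good results. The design is elegant and worth careful study by anyone working in deep learning. (2) The key to its performance gain is that the distance between any two words is 1, because every token attends directly to every other token. This is very effective against the hard long-range dependency problem in NLP. (3) The Transformer is not limited to machine translation, or even to NLP, which makes it a very promising research direction. (4) The algorithm parallelizes very well, which suits current hardware (mainly GPUs).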
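To make point (2) concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention, softmax(QKᵀ/√d_k)V, as defined in the Transformer paper. The random projection matrices `W_q`, `W_k`, `W_v` and the sizes are illustrative assumptions. The score matrix connects every position to every other position in one step (path length 1), and all positions are computed in a single matrix product, which is also why the computation parallelizes well (point 4).

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over a (seq_len, d_model) input."""
    q, k, v = X @ W_q, X @ W_k, X @ W_v
    d_k = k.shape[-1]
    # scores[i, j]: how strongly position i attends to position j; every pair
    # is scored directly, so any two tokens are one step apart
    scores = q @ k.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 8, 4
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape)      # (5, 4)
print(weights.shape)  # (5, 5): a full position-to-position matrix
```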

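Cons: (1) Discarding RNNs and CNNs outright is impressive, but it also costs the model the ability to capture local features; combining RNN + CNN + Transformer may give better results. (2) The position information the Transformer loses is actually very important in NLP, and adding a position embedding to the feature vectors, as the paper does, is only a workaround that does not remove this inherent structural weakness.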
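Point (2) can be checked numerically. Without any position signal, self-attention is permutation-equivariant: reordering the input tokens only reorders the outputs in the same way, so word order is invisible to the model. The sketch below shows this, then adds the sinusoidal position encoding defined in the original Transformer paper (PE[pos, 2i] = sin(pos / 10000^(2i/d)), PE[pos, 2i+1] = cos of the same angle); BERT itself uses learned position embeddings instead. The random weights, the sizes and the reversed order used as the permutation are illustrative assumptions.

```python
import numpy as np


def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def attend(X, W_q, W_k, W_v):
    # Single-head scaled dot-product self-attention with no position information
    q, k, v = X @ W_q, X @ W_k, X @ W_v
    return softmax(q @ k.T / np.sqrt(k.shape[-1])) @ v


def sinusoidal_encoding(seq_len, d_model):
    # PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle)
    pos = np.arange(seq_len)[:, None]
    two_i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


rng = np.random.default_rng(0)
seq_len, d_model = 6, 8
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
perm = np.arange(seq_len)[::-1]  # reverse the word order

# Without positions: permuting the input only permutes the output rows
plain_equivariant = np.allclose(attend(X[perm], W_q, W_k, W_v),
                                attend(X, W_q, W_k, W_v)[perm])

# With positions added, a token gets a different vector in each slot,
# so the reordered sentence is no longer just a reordering of the output
pe = sinusoidal_encoding(seq_len, d_model)
pos_equivariant = np.allclose(attend(X[perm] + pe, W_q, W_k, W_v),
                              attend(X + pe, W_q, W_k, W_v)[perm])

print(plain_equivariant)  # True
print(pos_equivariant)    # False
```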

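BERT is a method of pre-training language representations: a general-purpose "language understanding" model is trained on a large text corpus (such as Wikipedia) and then used for downstream NLP tasks (such as question answering, sentiment analysis, and text clustering).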

BERT outperforms previous methods because it is the first unsupervised, deeply bidirectional system for pre-training NLP.
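The "unsupervised, deeply bidirectional" pre-training comes from BERT's masked language model (MLM) objective: about 15% of input tokens are chosen for prediction; of those, 80% are replaced with `[MASK]`, 10% with a random token and 10% are left unchanged, and the model must recover the originals from context on both sides. The plain-Python sketch below implements that corruption rule under simplifying assumptions: each token is selected independently with probability 0.15, and the toy character vocabulary stands in for BERT's real WordPiece vocabulary.

```python
import random


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Apply BERT-style MLM corruption; returns (corrupted tokens, labels).

    labels[i] holds the original token at positions chosen for prediction
    and None elsewhere (those positions are not scored by the MLM loss).
    """
    rng = random.Random(seed)
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            corrupted[i] = "[MASK]"           # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return corrupted, labels


vocab = ["我", "爱", "北", "京", "天", "安", "门"]
tokens = ["我", "爱", "北", "京", "天", "安", "门"]
corrupted, labels = mask_tokens(tokens, vocab, seed=1)
print(corrupted)
print(labels)
```

No labels are needed beyond the raw text itself, which is what makes the objective unsupervised.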

2. Why an LSTM Is Still Used in Sequence Labeling Tasks

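First, BERT is built on the Transformer, which relies on self-attention. Self-attention weakens position information during computation (the model learns each token's position only through the position embeddings), yet in sequence labeling position information is essential, and even direction information matters.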

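We therefore use an LSTM to learn the dependencies along the observation sequence (the input tokens), and then a CRF to learn the dependencies between states (the output tags) and produce the final labels. If the CRF is applied directly on top of BERT, the model's ability to learn from the observation sequence drops, which leads to worse results.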
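The CRF's role can be illustrated through its decoding step. Given per-position emission scores (in BERT + BiLSTM + CRF these would come from the BiLSTM) and a tag-to-tag transition matrix, Viterbi decoding picks the best tag sequence as a whole rather than the best tag at each position, which is how a CRF can rule out invalid sequences such as `O` followed by `I-PER`. This NumPy sketch covers decoding only (training a CRF also needs the forward algorithm for the partition function, not shown); the tag set and all scores are made-up illustrative values.

```python
import numpy as np


def viterbi_decode(emissions, transitions):
    """Best tag path for a linear-chain CRF.

    emissions:   (seq_len, num_tags) per-position tag scores
    transitions: (num_tags, num_tags), transitions[i, j] = score of tag i -> tag j
    """
    seq_len, num_tags = emissions.shape
    score = emissions[0].copy()  # best score of any path ending in each tag
    backpointers = np.zeros((seq_len, num_tags), dtype=int)
    for t in range(1, seq_len):
        # candidate[i, j]: best path ending in tag i at t-1, then moving to tag j
        candidate = score[:, None] + transitions + emissions[t][None, :]
        backpointers[t] = candidate.argmax(axis=0)
        score = candidate.max(axis=0)
    # Follow the backpointers from the best final tag
    best = [int(score.argmax())]
    for t in range(seq_len - 1, 0, -1):
        best.append(int(backpointers[t, best[-1]]))
    return best[::-1], float(score.max())


tags = ["O", "B-PER", "I-PER"]
# Hypothetical emission scores: on its own, position 1 slightly prefers I-PER
emissions = np.array([[2.0, 0.5, 0.0],
                      [0.0, 0.9, 1.0],
                      [1.0, 0.0, 0.8]])
transitions = np.zeros((3, 3))
transitions[0, 2] = -10.0  # strongly penalize O -> I-PER

greedy = [tags[i] for i in emissions.argmax(axis=1)]
path, best_score = viterbi_decode(emissions, transitions)
print(greedy)                     # ['O', 'I-PER', 'O']  (invalid sequence)
print([tags[i] for i in path])    # ['O', 'B-PER', 'O']
```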

3. Model Fusion

3.1 Combining Character and Word Vectors

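```python
import jieba

# `text` (one input sentence), `word2id` (word -> id), `vocabulary` (known
# words) and `maxlen` (BERT sequence length) are defined elsewhere.


def remake(x, num):
    """Repeat each word id x[i] num[i] times so the ids line up with characters."""
    L = []
    for i, each in enumerate(num):
        L += [x[i]] * each
    return L


words = [t for t in jieba.cut(text)]
temp = [len(t) for t in words]  # number of characters in each word
# Look up word ids; unknown words map to 1
x3 = [word2id[t] if t in vocabulary else 1 for t in words]
x3 = remake(x3, temp)  # one word id per character

# Reserve the [CLS]/[SEP] slots (id 1), then pad with 0 up to maxlen
if len(x3) < maxlen - 2:
    x3 = [1] + x3 + [1] + [0] * (maxlen - len(x3) - 2)
else:
    x3 = [1] + x3[:maxlen - 2] + [1]
```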

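The main idea is to map each word vector onto the characters it covers. For example, if the word 中国 has word vector a, then at the character level it becomes [a, a]; if the character vectors of 中 and 国 are b and c, adding them gives [a+b, a+c]. Here x3 is the character-aligned sequence of word ids, which can be fed directly into an Embedding layer.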
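The alignment-and-add idea can be checked with toy numbers. In this NumPy sketch the word 中国 has a hypothetical word vector a = [10, 20], and its characters 中 and 国 have character vectors b = [1, 0] and c = [0, 1]. Repeating each word id once per character (the same job `remake` does above) and adding the two lookups yields [a+b, a+c]. The tiny embedding tables and ids are made up; note that the BERT+TextCNN model in 3.5 concatenates the two feature sets instead of adding them.

```python
import numpy as np

# Hypothetical toy embedding tables (row index = id)
char_emb = np.array([[0.0, 0.0],     # 0: padding
                     [1.0, 0.0],     # 1: 中 -> b
                     [0.0, 1.0]])    # 2: 国 -> c
word_emb = np.array([[0.0, 0.0],     # 0: padding
                     [10.0, 20.0]])  # 1: 中国 -> a

words = ["中国"]   # output of a word segmenter such as jieba
word_ids = [1]     # id of each word
char_ids = [1, 2]  # id of each character: 中, 国

# Repeat each word id len(word) times so it lines up with its characters
aligned_word_ids = np.repeat(word_ids, [len(w) for w in words])

combined = char_emb[char_ids] + word_emb[aligned_word_ids]
print(aligned_word_ids)  # [1 1]
print(combined)          # [[11. 20.]
                         #  [10. 21.]]
```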

3.2 Mask-Aware Max Pooling

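```python
import keras
from keras import backend as K


class MaskedGlobalMaxPool1D(keras.layers.Layer):
    """Global max pooling over time that ignores padded (masked) steps."""

    def __init__(self, **kwargs):
        super(MaskedGlobalMaxPool1D, self).__init__(**kwargs)
        self.supports_masking = True

    def compute_mask(self, inputs, mask=None):
        # The time axis is pooled away, so no mask is passed downstream
        return None

    def compute_output_shape(self, input_shape):
        # (batch, timesteps, features) -> (batch, features)
        return input_shape[:-2] + (input_shape[-1],)

    def call(self, inputs, mask=None):
        if mask is not None:
            mask = K.cast(mask, K.floatx())
            # Subtract a large value at padded steps so they never win the max
            inputs -= K.expand_dims((1.0 - mask) * 1e6, axis=-1)
        return K.max(inputs, axis=-2)
```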
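A NumPy reference of the same computation makes the trick easy to verify: subtracting a large constant (1e6, as in the layer above) at padded time steps guarantees they never win the max, whereas a plain max over the time axis can pick up values from padding. The batch below is made-up data; `mask` uses 1 for real tokens and 0 for padding, matching the Keras mask convention.

```python
import numpy as np


def masked_global_max_pool(inputs, mask):
    """inputs: (batch, timesteps, features); mask: (batch, timesteps) of 0/1."""
    mask = mask.astype(inputs.dtype)
    # Push padded steps far below any real activation, as the Keras layer does
    shifted = inputs - ((1.0 - mask) * 1e6)[..., None]
    return shifted.max(axis=-2)


# One sequence of length 3 whose last step is padding holding a large value
inputs = np.array([[[1.0, -2.0],
                    [3.0, -1.0],
                    [9.0, 9.0]]])
mask = np.array([[1, 1, 0]])

print(inputs.max(axis=-2))                   # [[9. 9.]]  padding leaks in
print(masked_global_max_pool(inputs, mask))  # [[ 3. -1.]]
```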

3.3 Mask-Aware Average Pooling

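```python
import keras
from keras import backend as K


class MaskedGlobalAveragePooling1D(keras.layers.Layer):
    """Global average pooling over time that ignores padded (masked) steps."""

    def __init__(self, **kwargs):
        super(MaskedGlobalAveragePooling1D, self).__init__(**kwargs)
        self.supports_masking = True

    def compute_mask(self, inputs, mask=None):
        # The time axis is pooled away, so no mask is passed downstream
        return None

    def compute_output_shape(self, input_shape):
        # (batch, timesteps, features) -> (batch, features)
        return input_shape[:-2] + (input_shape[-1],)

    def call(self, x, mask=None):
        if mask is not None:
            # (batch, timesteps) -> (batch, timesteps, 1), broadcast over features
            mask = K.cast(K.expand_dims(mask, axis=-1), K.floatx())
            x = x * mask
            # Average over real steps only; epsilon guards all-padding rows
            return K.sum(x, axis=1) / (K.sum(mask, axis=1) + K.epsilon())
        else:
            return K.mean(x, axis=1)
```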
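As with max pooling, a NumPy reference shows why the mask matters for averaging: padded steps must be excluded from both the sum and the count, otherwise zero padding drags the mean down. The data below is made up, and the small epsilon in the denominator is the same safeguard against all-padding rows used in the layer above.

```python
import numpy as np


def masked_global_average_pool(x, mask, eps=1e-7):
    """x: (batch, timesteps, features); mask: (batch, timesteps) of 0/1."""
    mask = mask.astype(x.dtype)[..., None]  # (batch, timesteps, 1)
    return (x * mask).sum(axis=1) / (mask.sum(axis=1) + eps)


x = np.array([[[2.0, 4.0],
               [4.0, 8.0],
               [0.0, 0.0]]])  # last step is zero padding
mask = np.array([[1, 1, 0]])

print(x.mean(axis=1))                       # [[2. 4.]]  padding counted
print(masked_global_average_pool(x, mask))  # close to [[3. 6.]]
```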

3.4 BERT Fine-Tuning

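```python
from keras.layers import Input, Lambda, Dropout, Dense
from keras.models import Model
from keras.optimizers import Adam
from keras_bert import load_trained_model_from_checkpoint

# config_path / checkpoint_path point to the downloaded BERT files (defined elsewhere)
x1_in = Input(shape=(None,))  # token ids
x2_in = Input(shape=(None,))  # segment ids

bert_model = load_trained_model_from_checkpoint(config_path, checkpoint_path)
for l in bert_model.layers:
    l.trainable = True  # fine-tune every BERT layer

x = bert_model([x1_in, x2_in])
x = Lambda(lambda x: x[:, 0])(x)  # the [CLS] vector as the sentence representation
x = Dropout(0.1)(x)
p = Dense(1, activation='sigmoid')(x)

model = Model([x1_in, x2_in], p)
model.compile(
    loss='binary_crossentropy',
    optimizer=Adam(1e-5),  # small learning rate for full fine-tuning
    metrics=['accuracy']
)
```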

3.5 BERT+TextCNN

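```python
from keras.layers import Input, Embedding, Concatenate, Add, Dropout, Dense
from keras.models import Model
from keras.optimizers import Adam
from keras_bert import load_trained_model_from_checkpoint

# config_path, checkpoint_path, vocabulary and embedding_index (pretrained
# 200-d word vectors) are defined elsewhere. MaskedConv1D is not defined in
# this article and must be imported or defined before this code runs.
# MaskedGlobalMaxPool1D / MaskedGlobalAveragePooling1D come from 3.2 and 3.3.
x1_in = Input(shape=(None,))  # BERT token ids
x2_in = Input(shape=(None,))  # BERT segment ids
x3_in = Input(shape=(None,))  # character-aligned word ids (see 3.1)

bert_model = load_trained_model_from_checkpoint(config_path, checkpoint_path)

# Word-embedding branch; mask_zero=True lets padding be masked downstream
embedding1 = Embedding(len(vocabulary) + 2, 200, weights=[embedding_index], mask_zero=True)
x3 = embedding1(x3_in)

embed_layer = bert_model([x1_in, x2_in])
embed_layer = Concatenate()([embed_layer, x3])  # character + word features

x = MaskedConv1D(filters=256, kernel_size=3, padding='same', activation='relu')(embed_layer)
pool = MaskedGlobalMaxPool1D()(x)
ave = MaskedGlobalAveragePooling1D()(x)
x = Add()([pool, ave])
x = Dropout(0.1)(x)
x = Dense(32, activation='relu')(x)
p = Dense(1, activation='sigmoid')(x)

model = Model([x1_in, x2_in, x3_in], p)
model.compile(
    loss='binary_crossentropy',
    optimizer=Adam(1e-3),  # larger than 3.4's 1e-5; BERT is not explicitly unfrozen here
    metrics=['accuracy']
)
```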

3.6 BERT + RNN + CNN

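```python
from keras.layers import (Input, Embedding, Concatenate, Bidirectional, LSTM,
                          Add, Dropout, Dense)
from keras.models import Model
from keras.optimizers import Adam
from keras_bert import load_trained_model_from_checkpoint

# config_path, checkpoint_path, vocabulary and embedding_index (pretrained
# 200-d word vectors) are defined elsewhere. MaskedConv1D is not defined in
# this article and must be imported or defined before this code runs.
# MaskedGlobalMaxPool1D / MaskedGlobalAveragePooling1D come from 3.2 and 3.3.
x1_in = Input(shape=(None,))  # BERT token ids
x2_in = Input(shape=(None,))  # BERT segment ids
x3_in = Input(shape=(None,))  # character-aligned word ids (see 3.1)

bert_model = load_trained_model_from_checkpoint(config_path, checkpoint_path)
embedding1 = Embedding(len(vocabulary) + 2, 200, weights=[embedding_index], mask_zero=True)
x3 = embedding1(x3_in)

embed_layer = bert_model([x1_in, x2_in])
embed_layer = Concatenate()([embed_layer, x3])
# Two stacked BiLSTMs add order-aware sequential features on top of BERT
embed_layer = Bidirectional(LSTM(units=128, return_sequences=True))(embed_layer)
embed_layer = Bidirectional(LSTM(units=128, return_sequences=True))(embed_layer)

x = MaskedConv1D(filters=256, kernel_size=3, padding='same', activation='relu')(embed_layer)
pool = MaskedGlobalMaxPool1D()(x)
ave = MaskedGlobalAveragePooling1D()(x)
x = Add()([pool, ave])
x = Dropout(0.1)(x)
x = Dense(32, activation='relu')(x)
p = Dense(1, activation='sigmoid')(x)

model = Model([x1_in, x2_in, x3_in], p)
model.compile(
    loss='binary_crossentropy',
    optimizer=Adam(1e-3),
    metrics=['accuracy']
)
```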

3.7 10-Fold Cross Training

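```python
import numpy as np
from keras import backend as K
from sklearn.model_selection import StratifiedKFold

# getModel, data_generator, Evaluate, lrate, train_data_X and train_data_Y are
# defined elsewhere. How `kfold` is built is not shown; the splitter and its
# settings below are an assumption.
kfold = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)

X_all, Y_all = np.array(train_data_X), np.array(train_data_Y)
for train, test in kfold.split(X_all, Y_all):
    model = getModel()  # a fresh model for every fold
    t1, t2, t3, t4 = X_all[train], X_all[test], Y_all[train], Y_all[test]
    train_D = data_generator(t1.tolist(), t3.tolist())
    dev_D = data_generator(t2.tolist(), t4.tolist())  # held-out fold
    evaluator = Evaluate()
    model.fit_generator(train_D.__iter__(),
                        steps_per_epoch=len(train_D),
                        epochs=3,
                        callbacks=[evaluator, lrate])
    # Free the model and the graph before training the next fold
    del model
    K.clear_session()
```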
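The loop above trains one fresh model per fold. A common way to fuse the ten fold models, which the original does not show, is to average their predictions on the test set. This self-contained NumPy sketch illustrates that pattern with a hypothetical nearest-centroid classifier standing in for `getModel()`; the shuffled fold split mimics `KFold` with `shuffle=True`, and all data is synthetic.

```python
import numpy as np


def kfold_indices(n_samples, n_splits, seed=0):
    # Shuffle once, then cut into n_splits nearly equal validation folds
    idx = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(idx, n_splits)
    for k in range(n_splits):
        train = np.concatenate([folds[j] for j in range(n_splits) if j != k])
        yield train, folds[k]


def fit_centroids(X, y):
    # Hypothetical stand-in model: one mean vector per class (labels 0/1)
    return np.stack([X[y == c].mean(axis=0) for c in (0, 1)])


def predict_proba(centroids, X):
    # Softmax over negative distances -> probability of class 1
    d = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=-1)
    e = np.exp(-d)
    return e[:, 1] / e.sum(axis=1)


rng = np.random.default_rng(42)
X = np.vstack([rng.normal(-2, 1, size=(50, 2)), rng.normal(2, 1, size=(50, 2))])
y = np.array([0] * 50 + [1] * 50)
X_test = np.array([[-2.0, -2.0], [2.0, 2.0]])

val_scores, test_preds = [], []
for train, val in kfold_indices(len(X), n_splits=10):
    model = fit_centroids(X[train], y[train])  # a fresh model per fold
    val_scores.append(((predict_proba(model, X[val]) > 0.5) == y[val]).mean())
    test_preds.append(predict_proba(model, X_test))

# Fuse the 10 fold models by averaging their test-set probabilities
ensemble = np.mean(test_preds, axis=0)
print(np.mean(val_scores), ensemble.round(3))
```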

Keyword Features

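```python
from collections import Counter

from textrank4zh import TextRank4Keyword


def extract(L):
    # Keep only the keyword strings from TextRank4Keyword's results
    return [r.word for r in L]


tr4w = TextRank4Keyword()
result = []
for sentence in train:  # `train`: the training texts (defined elsewhere)
    tr4w.analyze(text=sentence, lower=True, window=2)
    s = extract(tr4w.get_keywords(10, word_min_len=1))
    result.extend(s)

# The most frequent keywords across the corpus are candidates for manual selection
c = Counter(result)
print(c.most_common(100))
```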

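After obtaining these keywords, manually select the words that represent each class. Also run the same code on the test set, and use jieba to help segment the class keywords.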
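One possible way to turn the hand-picked class keywords into model features (the original does not show this step) is a per-class count of keyword hits in each segmented text. The sketch assumes the text is already segmented, for example by jieba after registering the chosen keywords with `jieba.add_word` so they stay whole, and it uses a hypothetical keyword dictionary; only the standard library is needed.

```python
from collections import Counter


def keyword_features(tokens, class_keywords):
    """Count how many tokens fall into each class's keyword list.

    tokens:         list of words produced by a segmenter
    class_keywords: {class_name: set_of_keywords}, hand-picked per class
    Returns the counts in sorted class-name order.
    """
    counts = Counter(tokens)
    return [sum(counts[w] for w in class_keywords[c]) for c in sorted(class_keywords)]


# Hypothetical hand-picked keywords for two classes
class_keywords = {
    "finance": {"股票", "基金", "利率"},
    "sports": {"比赛", "进球", "球队"},
}
tokens = ["球队", "昨晚", "比赛", "进球", "三个", "比赛"]
print(keyword_features(tokens, class_keywords))  # [0, 4]
```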

Fusion Code Reference

Multi-Model Fake News Classification Based on BERT and CNN

4. Model Downloads

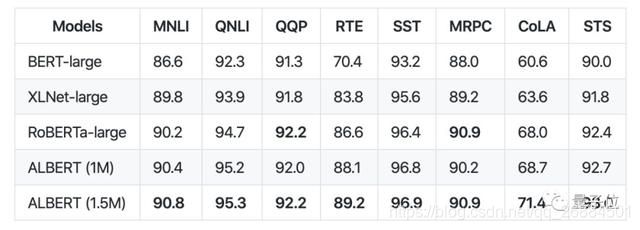

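ALBERT, a lightweight BERT variant released by Google not long ago, has about 18 times fewer parameters than BERT in its BERT-large-sized configuration, and its larger configurations outperform BERT. It also topped several major leaderboards, setting new state-of-the-art (SOTA) results on SQuAD and RACE.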
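Much of ALBERT's parameter saving comes from two techniques described in the ALBERT paper: factorizing the vocabulary embedding (a V×E table followed by an E×H projection instead of one V×H table) and sharing parameters across all Transformer layers. The arithmetic below illustrates only the embedding part, using V = 30,000, E = 128 and H = 1,024, sizes in line with ALBERT-large; it does not reproduce the full 18× figure, which also depends on cross-layer sharing.

```python
# Embedding parameter count: BERT-style V x H table vs ALBERT-style V x E + E x H
V, E, H = 30_000, 128, 1_024

bert_style = V * H
albert_style = V * E + E * H

print(bert_style)                           # 30720000
print(albert_style)                         # 3971072
print(round(bert_style / albert_style, 2))  # 7.74
```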

ALBERT's performance on the GLUE benchmark (single-model setting):

ALBERT-xxlarge's performance on the SQuAD and RACE benchmarks (single-model setting):

4.1 Chinese Model Downloads

- Base: https://storage.googleapis.com/albert_models/albert_base_zh.tar.gz
- Large: https://storage.googleapis.com/albert_models/albert_large_zh.tar.gz
- XLarge: https://storage.googleapis.com/albert_models/albert_xlarge_zh.tar.gz
- Xxlarge: https://storage.googleapis.com/albert_models/albert_xxlarge_zh.tar.gz

4.2 ALBERT v2 Downloads

- Base
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_base_v2.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_base/2
- Large
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_large_v2.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_large/2
- XLarge
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_xlarge_v2.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_xlarge/2
- Xxlarge
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_xxlarge_v2.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_xxlarge/2

4.3 Pretrained Models

- Base
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_base_v1.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_base/1
- Large
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_large_v1.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_large/1
- XLarge
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_xlarge_v1.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_xlarge/1
- Xxlarge
  - [Tar File]: https://storage.googleapis.com/albert_models/albert_xxlarge_v1.tar.gz
  - [TF-Hub]: https://tfhub.dev/google/albert_xxlarge/1