CVPR 2018 Paper Sharing Session

Deep Learning

Towards Faster Training of Global Covariance Pooling Networks by Iterative Matrix Square Root Normalization

Abstract

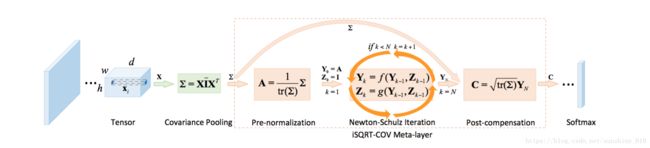

Global covariance pooling in convolutional neural networks has achieved impressive improvement over the classical first-order pooling. Recent works have shown that matrix square root normalization plays a central role in achieving state-of-the-art performance. However, existing methods depend heavily on eigendecomposition (EIG) or singular value decomposition (SVD), and suffer from inefficient training due to the limited support for EIG and SVD on GPU. To address this problem, we propose an iterative matrix square root normalization method for fast end-to-end training of global covariance pooling networks. At the core of our method is a meta-layer designed with a loop-embedded directed graph structure. The meta-layer consists of three consecutive nonlinear structured layers, which perform pre-normalization, coupled matrix iteration and post-compensation, respectively. Our method is much faster than EIG- or SVD-based ones, since it involves only matrix multiplications, which are suitable for parallel implementation on GPU. Moreover, the proposed network with ResNet architecture converges in far fewer epochs, further accelerating network training. On large-scale ImageNet, we achieve competitive performance superior to existing counterparts. By fine-tuning our models pre-trained on ImageNet, we establish state-of-the-art results on three challenging fine-grained benchmarks. The source code and network models will be available at http://www.peihuali.org/iSQRT-COV.

Introduction

Deep convolutional neural networks (ConvNets) have made significant progress in the past years, achieving recognition accuracy surpassing that of humans in large-scale object recognition [7]. ConvNet models pre-trained on ImageNet [5] have been proven to benefit a multitude of other computer vision tasks where labeled data are insufficient for training from scratch, ranging from fine-grained visual categorization (FGVC) [25], object detection [28] and semantic segmentation [26] to scene parsing [37]. Common layers such as convolution, non-linear rectification, pooling and batch normalization [11] have become off-the-shelf commodities, widely supported on devices including workstations, PCs and embedded systems.

Although the architecture of ConvNets has greatly evolved in the past years, their basic layers remain largely unchanged [19, 18]. Recently, researchers have shown increasing interest in exploring structured layers to enhance the representation capability of networks [12, 25, 1, 22]. One particular kind of structured layer is global covariance pooling after the last convolution layer, which has shown impressive improvement over the classical first-order pooling and has been successfully used in FGVC [25], visual question answering [15] and video action recognition [34]. Very recent works have demonstrated that matrix square root normalization of global covariance pooling plays a key role in achieving state-of-the-art performance in both large-scale visual recognition [21] and challenging FGVC [24, 32].
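To make the contrast with first-order pooling concrete, here is a minimal pure-Python sketch of global covariance pooling. It assumes the output of the last convolution layer has already been flattened into N feature vectors of dimension d, one per spatial position. The function name `covariance_pool` and the 1/N normalization are our assumptions and may differ from the exact estimator defined in the paper's Sec. 3.

```python
from typing import List

Matrix = List[List[float]]

def covariance_pool(features: Matrix) -> Matrix:
    """Second-order pooling: sample covariance of N feature vectors of dimension d.

    `features` holds one d-dimensional vector per spatial position (N = W * H).
    Returns the d x d matrix (1/N) * sum_n (x_n - mu)(x_n - mu)^T.
    """
    n = len(features)
    d = len(features[0])
    # First-order statistic: the mean vector, i.e. what global average pooling returns.
    mu = [sum(x[i] for x in features) / n for i in range(d)]
    # Second-order statistic: average outer product of the centered features.
    cov = [[0.0] * d for _ in range(d)]
    for x in features:
        c = [x[i] - mu[i] for i in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += c[i] * c[j] / n
    return cov

# Toy feature map: N = 4 spatial positions, d = 2 channels.
feats = [[1.0, 2.0], [3.0, 2.0], [1.0, 4.0], [3.0, 4.0]]
print(covariance_pool(feats))  # [[1.0, 0.0], [0.0, 1.0]]
```

Average pooling would keep only `mu`; covariance pooling keeps the d × d second-order statistics, which is the matrix that matrix square root normalization is then applied to.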

For computing the matrix square root, existing methods depend heavily on eigendecomposition (EIG) or singular value decomposition (SVD) [21, 32, 24]. However, fast implementation of EIG or SVD on GPU is an open problem: these decompositions have limited support on the NVIDIA CUDA platform and are significantly slower than their CPU counterparts [12, 24]. As such, existing methods opt for EIG or SVD on CPU for computing the matrix square root. Nevertheless, meta-layers that depend on the CPU are far from ideal, particularly in multi-GPU configurations: since GPUs with powerful parallel computing ability have to be interrupted and wait for CPUs with limited parallel ability, their concurrency and throughput are greatly restricted.

In [24], for the purpose of fast forward propagation (FP), Lin and Maji use the Newton-Schulz iteration proposed in [9] (called the modified Denman-Beavers iteration therein) to compute the matrix square root. Unfortunately, for backward propagation (BP), they compute the gradient by solving a Lyapunov equation, which depends on the GPU-unfriendly Schur decomposition (SCHUR) or EIG. Hence, although FP in [24] runs very fast because it involves only matrix multiplications, training remains expensive. Inspired by that work, we propose a fast end-to-end training method, called iterative matrix square root normalization of covariance pooling (iSQRT-COV), which relies on the Newton-Schulz iteration in both forward and backward propagation.

At the core of iSQRT-COV is a meta-layer with a loop-embedded directed graph structure, specifically designed to ensure both the convergence of the Newton-Schulz iteration and the performance of global covariance pooling networks. The meta-layer consists of three consecutive structured layers, performing pre-normalization, coupled matrix iteration and post-compensation, respectively. We derive the gradients associated with the involved non-linear layers based on matrix backpropagation theory [12]. Sandwiching the Newton-Schulz iteration between pre-normalization (by the Frobenius norm or the trace) and post-compensation is essential, and, as far as we know, this design has not appeared in previous literature (e.g. in [9] or [24]). The pre-normalization guarantees the convergence of the Newton-Schulz (NS) iteration, while the post-compensation plays a key role in achieving state-of-the-art performance with prevalent deep ConvNet architectures, e.g. ResNet [8]. The main differences between our method and other related works are summarized in Tab. 1.
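The three stages of the meta-layer can be sketched as a forward pass in pure Python. This is a minimal sketch, not the authors' implementation: it assumes trace pre-normalization (one of the two options named above), the standard coupled Newton-Schulz update Y_k = ½ Y_{k-1}(3I - Z_{k-1}Y_{k-1}), Z_k = ½(3I - Z_{k-1}Y_{k-1})Z_{k-1} with Y_0 = A and Z_0 = I, a default of 5 iterations, and helper names (`matmul`, `identity`, `isqrt_cov`) of our own choosing. Backpropagation is omitted.

```python
import math
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product; the only heavy operation the iteration needs."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def identity(n: int) -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def isqrt_cov(sigma: Matrix, num_iter: int = 5) -> Matrix:
    """Approximate Sigma^{1/2} for a symmetric positive definite covariance matrix."""
    n = len(sigma)
    eye = identity(n)
    # 1) Pre-normalization by the trace: eigenvalues of A lie in (0, 1],
    #    so ||I - A|| < 1 and the Newton-Schulz iteration converges.
    tr = sum(sigma[i][i] for i in range(n))
    a = [[sigma[i][j] / tr for j in range(n)] for i in range(n)]
    # 2) Coupled Newton-Schulz iteration: Y_k -> A^{1/2}, Z_k -> A^{-1/2}.
    y, z = a, eye
    for _ in range(num_iter):
        zy = matmul(z, y)
        t = [[0.5 * (3.0 * eye[i][j] - zy[i][j]) for j in range(n)] for i in range(n)]
        y, z = matmul(y, t), matmul(t, z)  # both updates use the previous Y and Z
    # 3) Post-compensation: undo the trace scaling, since Sigma^{1/2} = sqrt(tr) * A^{1/2}.
    s = math.sqrt(tr)
    return [[s * y[i][j] for j in range(n)] for i in range(n)]

print(isqrt_cov([[4.0, 0.0], [0.0, 1.0]]))  # approximately [[2.0, 0.0], [0.0, 1.0]]
```

With trace pre-normalization the eigenvalues of A are positive and sum to 1, so each lies in (0, 1] and the spectral norm of I - A stays below 1, the usual sufficient condition for Newton-Schulz convergence. A few iterations give only an approximate square root; more iterations tighten it at the cost of extra matrix multiplications.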

Figure 1. Proposed iterative matrix square root normalization of covariance pooling (iSQRT-COV) network. After the last convolution layer, we perform second-order pooling by estimating a covariance matrix. We design a meta-layer with a loop-embedded directed graph structure for computing the approximate square root of the covariance matrix. The meta-layer consists of three nonlinear structured layers, performing pre-normalization, coupled Newton-Schulz iteration and post-compensation, respectively. See Sec. 3 for notations and details.

Conclusion

We presented an iterative matrix square root normalization of covariance pooling (iSQRT-COV) network which can be trained end-to-end. Compared to existing works that depend heavily on GPU-unfriendly EIG or SVD, our method, based on the coupled Newton-Schulz iteration [9], runs much faster since it involves only matrix multiplications, which are suitable for parallel implementation on GPU. We validated our method on both the large-scale ImageNet dataset and challenging fine-grained benchmarks. Given the efficiency and promising performance of iSQRT-COV, we hope global covariance pooling will become a promising alternative to global average pooling in other deep network architectures, e.g., ResNeXt [36], Inception [11] and DenseNet [10].

Interleaved Group Convolutions for Deep Neural Networks

Abstract

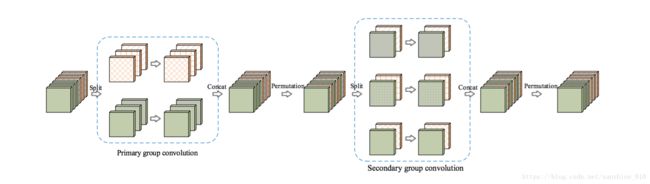

In this paper, we present a simple and modularized neural network architecture, named interleaved group convolutional neural networks (IGCNets). The main point lies in a novel building block, a pair of two successive interleaved group convolutions: primary group convolution and secondary group convolution. The two group convolutions are complementary: (i) the convolution on each partition in the primary group convolution is a spatial convolution, while the convolution on each partition in the secondary group convolution is a point-wise convolution; (ii) the channels in the same secondary partition come from different primary partitions. We discuss one representative advantage: an IGC block is wider than a regular convolution while preserving the number of parameters and the computational complexity. We also show that regular convolutions, group convolution with summation fusion, and the Xception block are special cases of interleaved group convolutions. Empirical results on standard benchmarks (CIFAR-10, CIFAR-100, SVHN and ImageNet) demonstrate that our networks use parameters and computation more efficiently, with similar or higher accuracy.

Introduction

Architecture design in deep convolutional neural networks has been attracting increasing interest. The basic design goal is efficiency in computation and parameters together with high accuracy. Various design dimensions have been considered, ranging from small kernels [15, 35, 33, 4, 14], identity mappings [10] or general multi-branch structures [38, 42, 22, 34, 35, 33] for easing the training of very deep networks, to multi-branch structures for increasing the width [34, 4, 14].

Our interest is to reduce the redundancy of convolutional kernels. The redundancy comes from two extents: the spatial extent and the channel extent. In the spatial extent, small kernels such as 3 × 3, 3 × 1 and 1 × 3 have been developed [35, 29, 17, 26, 18]. In the channel extent, group convolutions [42, 40] and channel-wise convolutions or separable filters [28, 4, 14] have been studied. Our work belongs to kernel design in the channel extent.

In this paper, we present a novel network architecture, which is a stack of interleaved group convolution (IGC) blocks. Each block contains two group convolutions: primary group convolution and secondary group convolution, which are conducted on primary and secondary partitions, respectively. The primary partitions are obtained by simply splitting the input channels, e.g., into L partitions each containing M channels; there are then M secondary partitions, each containing L channels that lie in different primary partitions. The primary group convolution performs a spatial convolution over each primary partition separately, and the secondary group convolution performs a 1 × 1 (point-wise) convolution over each secondary partition, blending the channels output by the primary group convolution across partitions. Figure 1 illustrates the interleaved group convolution block.
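The interleaving can be made explicit by listing channel indices. This sketch assumes channels are numbered so that primary partition l holds channels l·M, …, l·M + M - 1; secondary partition m then collects channel m from every primary partition. The function names are hypothetical. Reading the secondary partitions in order yields a channel permutation, which corresponds to the permutation step that precedes the secondary group convolution in the paper's Figure 2.

```python
from typing import List, Tuple

def igc_partitions(L: int, M: int) -> Tuple[List[List[int]], List[List[int]]]:
    """Channel indices of the L primary and M secondary partitions (G = L * M channels)."""
    # Primary partition l: a contiguous block of M channels.
    primary = [[l * M + m for m in range(M)] for l in range(L)]
    # Secondary partition m: the m-th channel of every primary partition,
    # so its L channels all come from different primary partitions.
    secondary = [[l * M + m for l in range(L)] for m in range(M)]
    return primary, secondary

def interleave_permutation(L: int, M: int) -> List[int]:
    """Channel order that makes each secondary partition contiguous."""
    _, secondary = igc_partitions(L, M)
    return [ch for part in secondary for ch in part]

primary, secondary = igc_partitions(2, 3)  # L = 2, M = 3 as in Figure 1
print(primary)                       # [[0, 1, 2], [3, 4, 5]]
print(secondary)                     # [[0, 3], [1, 4], [2, 5]]
print(interleave_permutation(2, 3))  # [0, 3, 1, 4, 2, 5]
```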

It is known that a group convolution is equivalent to a regular convolution with sparse kernels: there are no connections across channels in different partitions. Accordingly, an IGC block is equivalent to a regular convolution whose kernel is the product of two sparse kernels, resulting in a dense kernel. We show that, under the same number of parameters and the same computational complexity, an IGC block (except in the extreme case where the number of primary partitions, L, is 1) is wider than a regular convolution with the same spatial kernel size as the primary group convolution. Empirically, we also observe that a network built by stacking IGC blocks performs better than a network with regular convolutions under the same computational complexity and the same number of parameters.
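The width claim can be checked with a parameter count. Under our assumptions (each group convolution maps its partition to the same number of output channels, biases are ignored, and S is the number of spatial taps of the primary kernel, e.g. S = 9 for 3 × 3), an IGC block of width G = L·M has L·M²·S + M·L² parameters, while a regular convolution of width C has C²·S. The helper names are hypothetical.

```python
import math

def igc_params(L: int, M: int, S: int) -> int:
    """Weights in one IGC block of width G = L * M (biases ignored)."""
    primary = L * (M * M * S)  # L groups, each an M -> M spatial conv with S taps
    secondary = M * (L * L)    # M groups, each an L -> L point-wise (1 x 1) conv
    return primary + secondary

def regular_width(params: int, S: int) -> int:
    """Largest width C such that a regular C -> C conv with S taps fits in `params`."""
    return math.isqrt(params // S)

S = 9  # 3 x 3 primary kernel
for L, M in [(1, 64), (2, 32), (4, 16)]:
    p = igc_params(L, M, S)
    print(f"L={L}, M={M}: IGC width {L * M}, regular width {regular_width(p, S)}")
# L=1, M=64: IGC width 64, regular width 64
# L=2, M=32: IGC width 64, regular width 45
# L=4, M=16: IGC width 64, regular width 32
```

Both layer types apply each weight once per output position, so under these assumptions an equal parameter count also means equal multiply-adds per position. With L = 1 the IGC block is no wider than the regular convolution, consistent with the exception stated in the text.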

We study the relations with existing related modules. (i) The regular convolution and group convolution with summation fusion [40, 42, 38] are both interleaved group convolutions in which the kernels of the secondary group convolution take special, fixed forms. (ii) An IGC block in the extreme case where there is only one partition in the secondary group convolution is very close to Xception [4].

Our main contributions are summarized as follows.

• We present a novel building block, interleaved group convolutions, which is efficient in parameter and computation.

• We show that the proposed building block is wider than a regular convolution while keeping the network size and computational complexity, and that it shows superior empirical performance.

• We discuss the connections to regular convolutions, the Xception block [4], and group convolution with summation fusion, and show that they are specific instances of interleaved group convolutions.

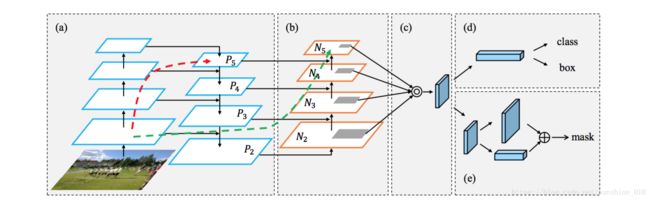

Figure 1. Illustrating the interleaved group convolution, with L = 2 primary partitions and M = 3 secondary partitions. The convolution for each primary partition in primary group convolution is spatial. The convolution for each secondary partition in secondary group convolution is point-wise (1 × 1). Details are given in Section 3.1.

Figure 2. (a) Regular convolution. (b) Four-branch representation of the regular convolution. The shaded part in (b), which we call cross-summation, is equivalent to a three-step transformation: permutation, secondary group convolution, and permutation back.

Conclusion

In this paper, we present a novel convolutional neural network architecture, which addresses the redundancy problem of convolutional filters in the channel domain. The main novelty lies in an interleaved group convolution block: channels in the same partition in the secondary group convolution come from different partitions used in the primary group convolution. Experimental results demonstrate that our network is efficient in parameter and computation.

Partial Transfer Learning with Selective Adversarial Networks

Abstract

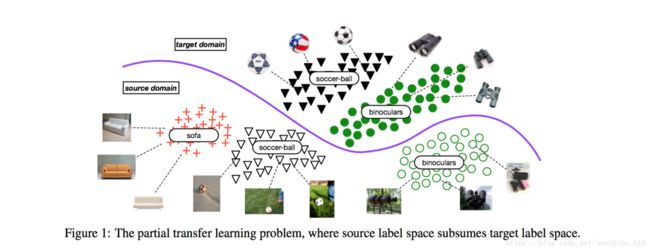

Adversarial learning has been successfully embedded into deep networks to learn transferable features, which reduce the distribution discrepancy between the source and target domains. Existing domain adversarial networks assume a fully shared label space across domains. In the presence of big data, there is strong motivation for transferring both classification and representation models from existing big domains to unknown small domains. This paper introduces partial transfer learning, which relaxes the shared label space assumption to the assumption that the target label space is only a subspace of the source label space. Previous methods typically match the whole source domain to the target domain, which makes them prone to negative transfer in the partial transfer problem. We present the Selective Adversarial Network (SAN), which simultaneously circumvents negative transfer by selecting out the outlier source classes and promotes positive transfer by maximally matching the data distributions in the shared label space. Experiments demonstrate that our models exceed state-of-the-art results for partial transfer learning tasks on several benchmark datasets.

Introduction

Deep networks have significantly improved the state of the art for a wide variety of machine learning problems and applications. At the moment, these impressive gains in performance come only when massive amounts of labeled data are available. Since manually labeling sufficient training data for diverse application domains on the fly is often prohibitive, for problems short of labeled data there is strong motivation to establish effective algorithms that reduce the labeling cost, typically by leveraging off-the-shelf labeled data from a different but related source domain. This promising transfer learning paradigm, however, suffers from the shift in data distributions across domains, which poses a major obstacle to adapting classification models to target tasks [22].

Existing transfer learning methods assume a shared label space and different feature distributions across the source and target domains. These methods bridge different domains by learning domain-invariant feature representations without using target labels, so that the classifier learned from the source domain can be directly applied to the target domain. Recent studies have revealed that deep networks can learn more transferable features for transfer learning [4, 29] by disentangling the explanatory factors of variation behind domains. The latest advances have been achieved by embedding transfer learning in the pipeline of deep feature learning to extract domain-invariant deep representations [26, 15, 6, 27, 17].

In the presence of big data, we can readily access large-scale labeled datasets such as ImageNet-1K. Thus, a natural ambition is to directly transfer both the representation and classification models from a large-scale dataset to our target dataset, such as Caltech-256, which is usually small-scale and whose categories are unknown at training and testing time. From the big data viewpoint, we can assume that the large-scale dataset is big enough to subsume all categories of the small-scale dataset. Thus, we introduce a novel partial transfer learning problem, which assumes that the target label space is a subspace of the source label space. As shown in Figure 1, this new problem is more general and challenging than standard transfer learning, since outlier source classes (“sofa”) will result in negative transfer when discriminating the target classes (“soccer-ball” and “binoculars”). Thus, matching the whole source and target domains as previous methods do is not an effective solution to this new problem.

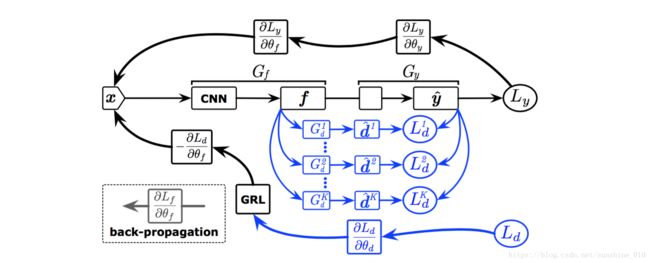

This paper presents Selective Adversarial Networks (SAN), which largely extends the ability of deep adversarial adaptation [6] to address partial transfer learning from big domains to small domains. SAN aligns the distributions of source and target data in the shared label space and more importantly, selects out the source data in the outlier source classes. A key improvement over previous methods is the capability to simultaneously promote positive transfer of relevant data and alleviate negative transfer of irrelevant data, which can be trained in an end-to-end framework. Experiments show that our models exceed state-of-the-art results for partial transfer learning on public benchmark datasets.
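As a rough sketch of how class-wise discriminators can select out outlier source classes, the code below weights each class-wise discriminator loss by the label predictor's probability for that class, both per sample and per class, where the class weight is the average predicted probability over target samples. This reflects our reading of a probability-weighted scheme rather than the paper's exact objective; the function names, the binary cross-entropy form, and the convention that the domain label is 1 for source and 0 for target are assumptions. The Gradient Reversal Layer (GRL) only affects backpropagation and is not modeled in this forward-only sketch.

```python
import math
from typing import List

def class_weights(target_probs: List[List[float]]) -> List[float]:
    """Average the label predictor's softmax outputs over all target samples.

    Outlier source classes (absent from the target domain) should receive little
    probability mass on target data, so their discriminators get small weights.
    """
    n, k = len(target_probs), len(target_probs[0])
    return [sum(p[c] for p in target_probs) / n for c in range(k)]

def selective_domain_loss(probs: List[List[float]],
                          disc_outputs: List[List[float]],
                          domain_labels: List[int],
                          weights: List[float]) -> float:
    """Probability-weighted loss of |C_s| class-wise domain discriminators.

    probs[i][k]        : predicted probability that sample i belongs to class k
    disc_outputs[i][k] : discriminator k's probability that sample i is from the source
    domain_labels[i]   : 1 for source samples, 0 for target samples (assumed convention)
    """
    total = 0.0
    for p, d_out, d in zip(probs, disc_outputs, domain_labels):
        for k, (p_k, q_k) in enumerate(zip(p, d_out)):
            bce = -(d * math.log(q_k) + (1 - d) * math.log(1.0 - q_k))
            total += weights[k] * p_k * bce
    return total / len(probs)

# Three source classes; target predictions rarely pick class 2 (an outlier class).
w = class_weights([[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.6, 0.3, 0.1]])
print([round(x, 3) for x in w])  # [0.467, 0.433, 0.1]
```

The double weighting means a discriminator whose class is rarely predicted on target data contributes little to the adversarial objective, so the feature extractor is not pushed to align those source samples with the target domain.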

Figure 2: The architecture of the proposed Selective Adversarial Networks (SAN) for partial transfer learning, where f is the extracted deep features, ŷ is the predicted data label, and d̂ is the predicted domain label; G_f is the feature extractor, G_y and L_y are the label predictor and its loss, G_d^k and L_d^k are the domain discriminator and its loss; GRL stands for Gradient Reversal Layer. The blue part shows the class-wise adversarial networks (|C_s| in total) designed in this paper. Best viewed in color.

Conclusion

This paper presented a novel selective adversarial network approach to partial transfer learning. Unlike previous adversarial adaptation methods that match the whole source and target domains based on the shared label space assumption, the proposed approach simultaneously circumvents negative transfer by selecting out the outlier source classes and promotes positive transfer by maximally matching the data distributions in the shared label space. Our approach successfully tackles partial transfer learning where the source label space subsumes the target label space, as validated by extensive experiments.

Weakly Supervised Coupled Networks for Visual Sentiment Analysis

Abstract

Automatic assessment of sentiment from visual content has gained considerable attention with the increasing tendency to express opinions online. In this paper, we solve the problem of visual sentiment analysis using the high-level abstraction in the recognition process. Existing methods based on convolutional neural networks learn sentiment representations from the holistic image appearance. However, different image regions can have a different influence on the intended expression. This paper presents a weakly supervised coupled convolutional network with two branches to leverage the localized information. The first branch detects a sentiment-specific soft map by training a fully convolutional network with the cross-spatial pooling strategy, which only requires image-level labels, thereby significantly reducing the annotation burden. The second branch utilizes both the holistic and localized information by coupling the sentiment map with deep features for robust classification. We integrate the sentiment detection and classification branches into a unified deep framework and optimize the network in an end-to-end manner. Extensive experiments on six benchmark datasets demonstrate that the proposed method performs favorably against the state-of-the-art methods for visual sentiment analysis.

Introduction

Visual sentiment analysis from images has attracted significant attention with the increasing tendency to express opinions by posting images on social media like Flickr and Twitter. The automatic assessment of image sentiment has many applications, e.g. education, entertainment and advertisement. Recently, with the advances of convolutional neural networks (CNNs), numerous deep approaches have been proposed to predict sentiment [20, 31]. The effectiveness of learned deep features over hand-crafted features (e.g. color, texture, and composition [17, 28, 34]) has been demonstrated for visual sentiment prediction. However, several issues remain when using CNNs to address such an abstract task, as follows.

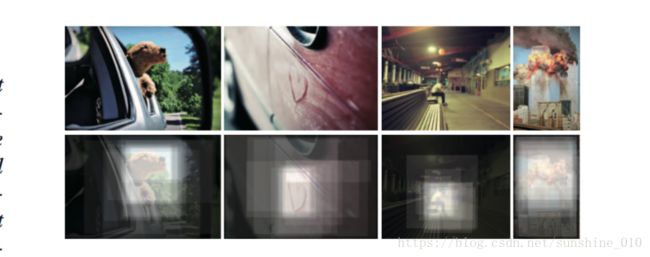

First, visual sentiment analysis is more challenging than conventional recognition tasks due to a higher level of subjectivity in the human recognition process [13]. It is necessary to take more cues into consideration for visual sentiment prediction. Figure 1 shows examples from the EmotionROI dataset [21], which provides bounding-box annotations, collected from 15 users, of the regions that evoke sentiment. As can be seen, humans’ emotional responses to images are determined by local regions [29]. However, most existing methods employ CNNs to learn feature representations only from entire images [4, 30]. Second, providing more precise annotations (e.g. bounding boxes [11]) than image-level labels for training generally leads to better performance in recognition tasks.

However, such annotations have two limitations for visual sentiment classification. On the one hand, the increased annotation cost prevents their widespread use, especially for such a subjective task; on the other hand, different regions contribute differently to the viewer’s evoked sentiment, while crisp proposal boxes tend to find only the foreground objects in an image.

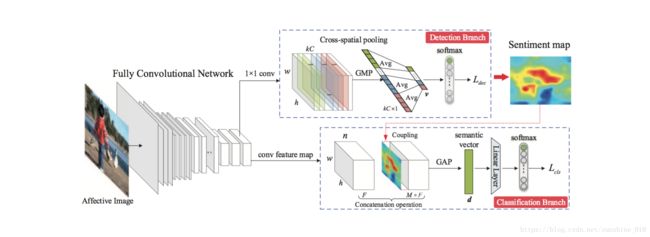

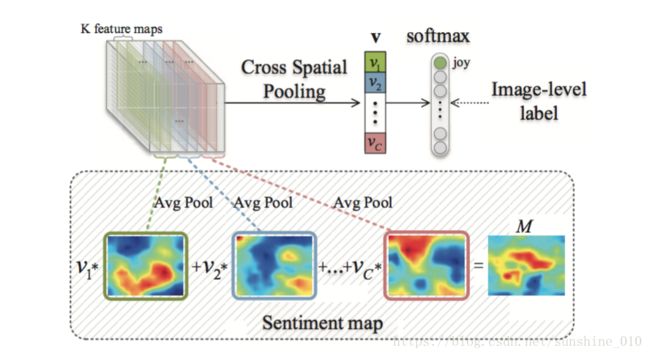

To address these problems, we propose a weakly supervised coupled framework (WSCNet) for joint sentiment detection and classification with two branches. The first branch is designed to generate region proposals evoking sentiment. Instead of extracting multiple crisp proposal boxes, we use a soft sentiment map to represent the probability of evoking the sentiment for each receptive field. In detail, we make use of a Fully Convolutional Network (FCN) followed by the proposed cross-spatial pooling strategy to preserve the spatial information of the convolutional feature maps. Based on this, the sentiment map is generated and utilized to highlight the regions of interest that are informative for classification. The second branch captures the localized representation by coupling the sentiment map with the deep features, which is then combined with the holistic representation to provide a more semantic vector. During the end-to-end training process, our approach only requires image-level sentiment labeling, which significantly reduces the annotation burden.
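The two branches can be sketched with plain nested lists. This is an illustrative reading of the description above, not the paper's exact formulation. We assume that the detection branch outputs k maps per sentiment class, that cross-spatial pooling averages the k maps of each class and then applies global average pooling to obtain a class score, that the sentiment map is the sum of the per-class maps weighted by their predicted scores, and that coupling is an element-wise product with each feature map followed by global average pooling, concatenated with the holistic features. All function names are hypothetical.

```python
from typing import List, Tuple

Map = List[List[float]]  # one H x W feature map

def gap(m: Map) -> float:
    """Global average pooling of a single map."""
    return sum(sum(row) for row in m) / (len(m) * len(m[0]))

def cross_spatial_pool(det_maps: List[Map], num_classes: int,
                       k: int) -> Tuple[List[float], List[Map]]:
    """Pool k detection maps per class into one class map and one class score."""
    scores, class_maps = [], []
    for c in range(num_classes):
        group = det_maps[c * k:(c + 1) * k]
        h, w = len(group[0]), len(group[0][0])
        class_map = [[sum(m[i][j] for m in group) / k for j in range(w)] for i in range(h)]
        class_maps.append(class_map)
        scores.append(gap(class_map))
    return scores, class_maps

def sentiment_map(scores: List[float], class_maps: List[Map]) -> Map:
    """Score-weighted sum of the class maps: highlights regions evoking sentiment."""
    h, w = len(class_maps[0]), len(class_maps[0][0])
    return [[sum(s * cm[i][j] for s, cm in zip(scores, class_maps)) for j in range(w)]
            for i in range(h)]

def coupled_vector(feat_maps: List[Map], smap: Map) -> List[float]:
    """Concatenate holistic GAP features with sentiment-map-weighted (localized) ones."""
    holistic = [gap(f) for f in feat_maps]
    localized = [gap([[v * s for v, s in zip(frow, srow)] for frow, srow in zip(f, smap)])
                 for f in feat_maps]
    return holistic + localized

det = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 3.0]]]  # 2 classes, k = 1, 2 x 2 maps
scores, cmaps = cross_spatial_pool(det, num_classes=2, k=1)
smap = sentiment_map(scores, cmaps)                      # [[0.25, 0.0], [0.0, 2.25]]
print(coupled_vector([[[4.0, 4.0], [4.0, 4.0]]], smap))  # [4.0, 2.5]
```

Only image-level labels are needed to train such a pipeline, since the class scores are supervised directly and the sentiment map falls out of them as a by-product.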

Our contributions are summarized as follows. First, we present a weakly supervised coupled network that integrates visual sentiment classification and detection into a unified CNN framework, which learns the discriminative representation for visual sentiment analysis in an end-to-end manner. Second, we exploit the sentiment map to provide image-specific localized information with only the image-level label, and fuse both holistic and localized representations for robust sentiment classification. Our proposed framework performs favorably against the state-of-the-art methods and off-the-shelf CNN classifiers on six benchmark datasets for visual sentiment analysis.

Figure 1. Examples from the EmotionROI dataset [21]. The normalized bounding boxes indicate the regions that influence the evoked sentiments annotated by 15 users. The first two examples are joy images, and the last two examples are sadness and fear images, respectively. As can be seen, the sentiments can be evoked by specific regions.

Figure 2. Illustration of the proposed WSCNet for visual sentiment analysis. The input image is first fed into the convolutional layers of FCN ResNet-101, and the response feature maps with good spatial resolution are then delivered into two branches. The detection branch employs the cross-spatial pooling strategy to summarize all the information contained in the feature maps for each class. The end-to-end training results in the sentiment map, which is then coupled with the conv feature maps in the classification branch capturing the localized information. Finally, both holistic and localized representations are fused as a semantic vector for sentiment classification.

Figure 3. Overview of the sentiment map generation. The predicted class scores of the input image are mapped back to the classification branch to generate the sentiment map, which can highlight comprehensive sentiment regions.

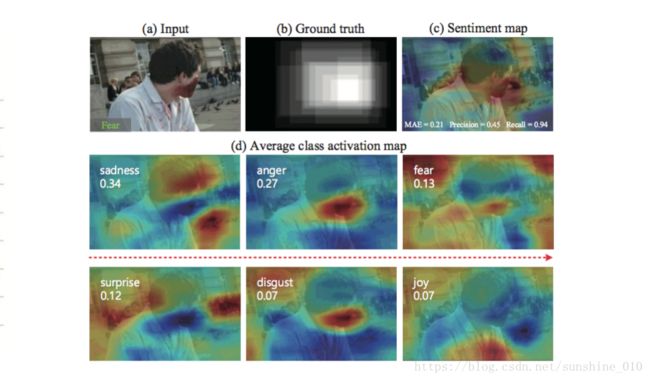

Figure 5. Detected sentiment map of the proposed WSCNet on the EmotionROI. Given the input (a) with ground truth (b), the detection result and the metrics are shown in (c). The class activation maps and the corresponding predicted scores are given in (d).

Figure 6. Weakly supervised detection results using different methods on the EmotionROI testing set. The input images and the ground truth are given in (a) and (b). The detected regions and metrics of weakly supervised methods (i.e. CAM, SPN, ours) are shown in the last three columns. By activating the sentiment-related areas, our method produces detections closer to the ground truth.

Conclusions

This paper addresses the problem of visual sentiment analysis based on convolutional neural networks, where the sentiments are predicted using multiple affective cues. We present WSCNet, an end-to-end weakly supervised deep architecture, which consists of two branches for discriminative representation learning. The detection branch is designed to automatically exploit the sentiment map, which provides the localized information of the affective images. The classification branch then leverages both holistic and localized representations to predict the sentiments. Experimental results on six benchmark datasets show the effectiveness of our method against the state-of-the-art.

GAN and Synthesis

DA-GAN: Instance-level Image Translation by Deep Attention Generative Adversarial Networks

Abstract

Unsupervised image translation, which aims to translate between two independent sets of images, is challenging because the correct correspondences must be discovered without paired data. Existing works build upon Generative Adversarial Networks (GANs) such that the distribution of the translated images is indistinguishable from the distribution of the target set. However, such set-level constraints cannot learn instance-level correspondences (e.g. aligned semantic parts in the object configuration task). This limitation often results in false positives (e.g. geometric or semantic artifacts) and further leads to the mode collapse problem. To address these issues, we propose a novel framework for instance-level image translation by Deep Attention GAN (DA-GAN). Such a design enables DA-GAN to decompose the task of translating samples from two sets into translating instances in a highly-structured latent space. Specifically, we jointly learn a deep attention encoder, and the instance-level correspondences can consequently be discovered by attending on the learned instance pairs. Therefore, constraints can be exploited at both the set level and the instance level. Comparisons against several state-of-the-art methods demonstrate the superiority of our approach, and its broad applicability, e.g. pose morphing and data augmentation, pushes the boundary of the domain translation problem.

Introduction

Can machines possess the human ability to relate different image domains and translate between them? This question can be formulated as an image translation problem: learning a mapping function from one image domain to the other by finding some underlying correspondences (e.g. similar semantics). Years of research have produced powerful translation systems in the supervised setting, where example pairs are available, e.g. [14]. However, obtaining paired training data is difficult and expensive.

Therefore, researchers turned to developing unsupervised learning approaches that rely only on unpaired data. In the unsupervised setting, we only have two independent sets of samples. The lack of pairing relationships makes finding the correct correspondences considerably harder. Existing works typically build upon Generative Adversarial Networks (GANs) such that the distribution of the translated samples is indistinguishable from the distribution of the target set. However, we point out that data itself is structured, and such a set-level constraint prevents these methods from finding meaningful instance-level correspondences. By ‘instance-level correspondences’, we refer to high-level content involving identifiable objects that are shared by a set of samples. These identifiable objects can be adaptively task-driven. For example, in Figure 1 (a), the words in the description correspond to the respective parts and attributes of the bird image. Because existing works miss these instance-level correspondences, false positives often occur. For example, in object configuration, the results show only changes in color and texture and fail to capture geometric changes (Figure 1 (b)). In text-to-image synthesis, fine-grained details are often missing (Figure 1 (a)).

Driven by this important issue, a question arises: can we find an algorithm capable of discovering meaningful correspondences at both the set level and the instance level in the unsupervised setting? To resolve this issue, in this paper we introduce DA-GAN, a dedicated unsupervised domain translation approach built upon Generative Adversarial Networks, which succeeds in a large variety of translation tasks and achieves visually appealing results.

To achieve these results, we have to address two fundamental challenges. First, how to exploit instance-level constraints when the correct pairing relationship is missing in the unsupervised setting. We take on this challenge and provide the first solution by decomposing the task of translating samples from two independent sets into translating instances in a highly-structured latent space. Specifically, we integrate the attention mechanism into the learning of the mapping function F, and use a compound loss that consists of a consistency term, a symmetry term and a multi-adversarial term. By attending on meaningful correspondences of samples at the instance level, the learned Deep Attention Encoder (DAE) projects samples into a latent space, where the instance-level constraints can then be exploited. We introduce a consistency loss that requires the translated samples to correspond to the correct semantics of the source-domain samples in the latent space. To further enhance the constraint, we also consider samples from the target domain by adding a symmetry loss that encourages F to be a one-to-one mapping. As a result, the instance-level constraints enable the mapping function to find meaningful semantic correspondences and therefore to produce true positives and visually appealing results.

Second, how to further strengthen the set-level constraints so that the mode collapse problem can be mitigated. In practice, under mode collapse all input samples map to the same output sample, and optimization fails to make progress. To address this issue, we introduce a multi-adversarial training procedure that encourages different modes to receive a fair share of the probability mass during training, thus encouraging the mapping function to cover all modes of the target domain and to make progress toward the optimum. Our main contributions are threefold:

• We decompose the task into instance-level image translation such that the constraints can be exploited at both the instance level and the set level by adopting the proposed compound loss.

• To the best of our knowledge, we are the first to integrate the attention mechanism into Generative Adversarial Networks.

• We introduce a novel framework, DA-GAN, which produces visually appealing results and is applicable to a large variety of tasks.

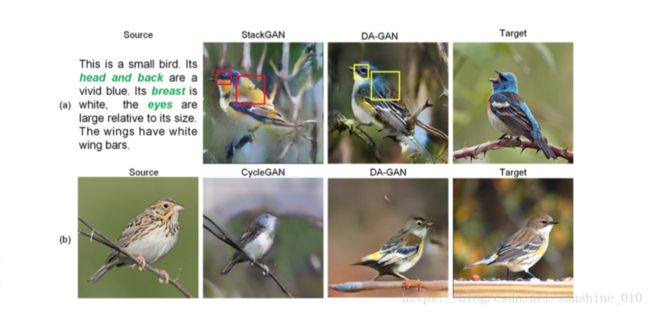

Figure 1: (a) text-to-image generation. (b) object configuration. The absence of instance-level correspondences results in both semantic artifacts (labeled by red boxes) in StackGAN and geometry artifacts in CycleGAN. Our approach produces the correct correspondences (labeled by yellow boxes) thanks to the proposed instance-level translation. Details can be found in Sec. 1

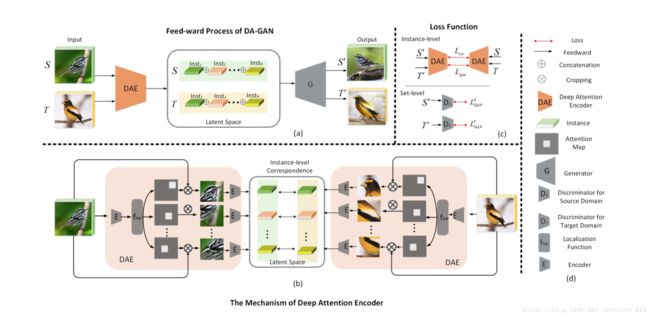

Figure 2: A pose morphing example illustrating the pipeline of DA-GAN. Given two images of birds from the source domain S and the target domain T, the goal of pose morphing is to translate the pose of the source bird s into the pose of the target bird t, while preserving the identity of s. The feed-forward process is shown in (a): the two input images are fed into DAE, which projects them into a latent space (labeled by the dashed box). Then G takes these highly-structured representations (DAE(s) and DAE(t)) from the latent space to generate the translated samples, i.e., s′ = G(DAE(s)) and t′ = G(DAE(t)). The details of the proposed DAE (labeled by the orange block) are shown in (b). Given an image X, a localization function f_loc first predicts the coordinates of N attention regions from the feature map of X (i.e., E(X), where E is an encoder that can take any form). Then N attention masks are generated and applied to X to produce N attention regions {R_i}_{i=1}^{N}. Finally, the features of these regions constitute the instance-level representations {Inst_i}_{i=1}^{N}. By operating in the same way on both S and T, the instance-level correspondences can be found in the latent space. We exploit constraints at both the instance level and the set level for optimization, as illustrated in (c). All notations are listed in (d). [Best viewed in color.]
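The mask-generation step in panel (b) can be illustrated with a small sketch. We assume, hypothetically, that f_loc outputs one square box per region as (centre x, centre y, half side length), and that each mask is a soft boxcar built from differences of sigmoids so that it remains differentiable with respect to the predicted coordinates. The caption does not specify the mask form, so treat this as one plausible choice rather than DA-GAN's implementation; the sharpness `k` is also an assumption.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def box_mask(h: int, w: int, cx: float, cy: float, half: float, k: float = 10.0) -> Image:
    """Soft square mask centred at (cx, cy) with half side `half`.
    Larger k makes the mask closer to a hard box (assumed parameterisation)."""
    mask = []
    for y in range(h):
        row = []
        for x in range(w):
            mx = sigmoid(k * (x - (cx - half))) - sigmoid(k * (x - (cx + half)))
            my = sigmoid(k * (y - (cy - half))) - sigmoid(k * (y - (cy + half)))
            row.append(mx * my)
        mask.append(row)
    return mask


def attention_regions(img: Image, boxes: List[Tuple[float, float, float]]) -> List[Image]:
    """Apply one mask per predicted box to the image, giving N attention regions R_i."""
    h, w = len(img), len(img[0])
    regions = []
    for cx, cy, half in boxes:
        m = box_mask(h, w, cx, cy, half)
        regions.append([[img[y][x] * m[y][x] for x in range(w)] for y in range(h)])
    return regions
```

Pixels inside a box keep (almost) their original value, while pixels outside are suppressed toward zero; the region features would then be extracted from each masked copy of X.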

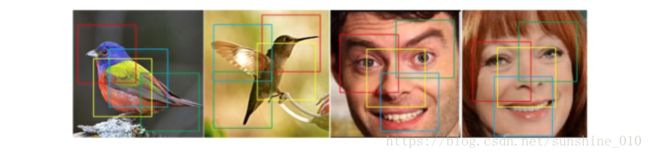

Figure 4: Attention locations predicted by DAE on bird images and face images from.

Conclusion

In this paper, we propose a novel framework for unsupervised image translation. Our intuition is to decompose the task of translating samples between two sets into translating instances in a highly-structured latent space. Instance-level correspondences can then be found by integrating an attention mechanism into the GAN. Extensive quantitative and qualitative results validate that the proposed DA-GAN significantly improves on the state of the art for image-to-image translation. It scales to broader applications and succeeds in generating visually appealing images. We find that some failure cases are caused by incorrect attention results. This is because the instances are learned by a weakly supervised attention mechanism, which sometimes shows a large gap from attention learned under full supervision. To tackle this challenge, we plan to seek more robust and effective algorithms in the future.

Look Closer to See Better: Recurrent Attention Convolutional Neural Network for Fine-grained Image Recognition

Attentive Generative Adversarial Network for Raindrop Removal from a Single Image

Abstract

Raindrops adhering to a glass window or camera lens can severely hamper the visibility of a background scene and degrade an image considerably. In this paper, we address the problem by visually removing raindrops, thus transforming a raindrop-degraded image into a clean one. The problem is intractable: first, the regions occluded by raindrops are not given; second, the information about the background scene in the occluded regions is, for the most part, completely lost. To resolve the problem, we apply an attentive generative network using adversarial training. Our main idea is to inject visual attention into both the generative and discriminative networks. During training, our visual attention learns about raindrop regions and their surroundings. Hence, by injecting this information, the generative network pays more attention to the raindrop regions and the surrounding structures, and the discriminative network is able to assess the local consistency of the restored regions. This injection of visual attention into both the generative and discriminative networks is the main contribution of this paper. Our experiments show the effectiveness of our approach, which outperforms state-of-the-art methods quantitatively and qualitatively.

Introduction

Raindrops attached to a glass window, windscreen or lens can hamper the visibility of a background scene and degrade an image. Principally, the degradation occurs because raindrop regions contain different imagery from regions without raindrops. Unlike non-raindrop regions, raindrop regions are formed by rays of reflected light from a wider environment, due to the shape of raindrops, which is similar to that of a fish-eye lens. Moreover, in most cases the camera is focused on the background scene, making the raindrops appear blurry.

In this paper, we address this visibility degradation problem. Given an image impaired by raindrops, our goal is to remove the raindrops and produce a clean background, as shown in Fig. 1. Our method is fully automatic. We believe that it will benefit image processing and computer vision applications, particularly those suffering from raindrops, dirt, or similar artifacts.

A few methods have been proposed to tackle raindrop detection and removal. Methods such as [17, 18, 12] are dedicated to detecting raindrops but not removing them. Other methods detect and remove raindrops using stereo [20], video [22, 25], or a specifically designed optical shutter [6], and thus are not applicable to a single input image taken by a normal camera. The method by Eigen et al. [1] has a similar setup to ours: it attempts to remove raindrops or dirt from a single image using deep learning. However, it can only handle small raindrops and produces blurry outputs [25]. In our experimental results (Sec. 6), we show that the method fails to handle relatively large and dense raindrops.

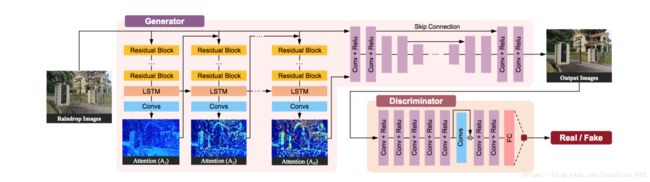

In contrast to [1], we deal with a substantial presence of raindrops, like the ones shown in Fig. 1. In general, the raindrop-removal problem is intractable: first, the regions occluded by raindrops are not given; second, the information about the background scene in the occluded regions is, for the most part, completely lost. The problem gets worse when the raindrops are relatively large and densely distributed across the input image. To resolve the problem, we use a generative adversarial network, in which the generated outputs are assessed by our discriminative network to ensure that they look like real images. To deal with the complexity of the problem, our generative network first attempts to produce an attention map. This attention map is the most critical part of our network, since it guides the subsequent processing in the generative network to focus on raindrop regions. The map is produced by a recurrent network consisting of deep residual networks (ResNets) [8] combined with a convolutional LSTM [21] and a few standard convolutional layers. We call this the attentive-recurrent network.

The second part of our generative network is an autoencoder, which takes both the input image and the attention map as input. To obtain wider contextual information, we apply multi-scale losses on the decoder side of the autoencoder. Each of these losses measures the difference between the output of a convolutional layer, whose input is the features of a decoder layer, and the ground truth downscaled to the same resolution. Besides these losses, we apply a perceptual loss to the final output of the autoencoder to obtain a more global similarity to the ground truth. This final output is also the output of our generative network.
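The multi-scale loss can be sketched as a weighted sum of per-scale errors against a downscaled ground truth. This is a minimal sketch under stated assumptions: images are plain 2-D lists, downscaling is done by integer-factor average pooling, the per-scale error is mean squared error, and the weights passed in are hypothetical rather than the values used in the paper.

```python
from typing import List, Sequence

Image = List[List[float]]


def downscale(img: Image, factor: int) -> Image:
    """Average-pool by an integer factor (assumed downscaling method)."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / (factor * factor)
            for x in range(w)
        ]
        for y in range(h)
    ]


def mse(a: Image, b: Image) -> float:
    """Mean squared error between two images of equal size."""
    n = sum(len(r) for r in a)
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / n


def multiscale_loss(outputs: Sequence[Image], ground_truth: Image, weights: Sequence[float]) -> float:
    """Sum of weighted MSEs between each decoder-side output and the ground truth
    downscaled to that output's resolution (e.g. 1/4, 1/2 and full size)."""
    total = 0.0
    full = len(ground_truth)
    for out, lam in zip(outputs, weights):
        factor = full // len(out)  # 4, 2, 1 for quarter, half and full resolution
        total += lam * mse(out, downscale(ground_truth, factor))
    return total
```

Weighting the scales separately lets coarse outputs capture context while the full-resolution output is pushed toward fine detail; the perceptual loss on the final output would be added on top.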

Having obtained the generated image, our discriminative network checks whether it is real enough. As in a few inpainting methods (e.g., [9, 13]), our discriminative network validates the image both globally and locally. However, unlike inpainting, in our problem, and particularly at test time, the target raindrop regions are not given. Thus, there is no information about which local regions the discriminative network should focus on. To address this problem, we use our attention map to guide the discriminative network toward local target regions.
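One way the attention map could guide the discriminator is an auxiliary term that compares an internal map produced by the discriminator with the generator's attention map. The sketch below assumes, hypothetically, that the discriminator exposes such a map for each input, that restored (fake) images should reproduce the attention map, and that clean (real) images, which contain no raindrops, should produce an all-zero map; the weight `gamma` is a placeholder.

```python
from typing import List

Map = List[List[float]]


def mse(a: Map, b: Map) -> float:
    """Mean squared error between two maps of equal size."""
    n = sum(len(r) for r in a)
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)) / n


def zeros_like(m: Map) -> Map:
    return [[0.0] * len(r) for r in m]


def attention_map_loss(d_map_fake: Map, d_map_real: Map, attention: Map, gamma: float = 0.05) -> float:
    """Hypothetical guidance term for the discriminator: its internal map should
    highlight the attention regions on restored images and nothing on clean images."""
    return gamma * (mse(d_map_fake, attention) + mse(d_map_real, zeros_like(d_map_real)))
```

This term would be added to the usual adversarial loss, so that the discriminator learns where to look even though raindrop regions are not given at test time.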

Overall, besides introducing a novel method of raindrop removal, our other main contribution is the injection of the attention map into both generative and discriminative networks, which is novel and works effectively in removing raindrops, as shown in our experiments in Sec. 6. We will release our code and dataset.

The rest of the paper is organized as follows. Section 2 discusses related work on raindrop detection and removal, and on CNN-based image inpainting. Section 3 explains the raindrop model in an image, which is the basis of our method. Section 4 describes our method, which is based on the generative adversarial network. Section 5 discusses how we obtain the synthetic and real images used for training our network. Section 6 shows our quantitative and qualitative evaluations. Finally, Section 7 concludes our paper.

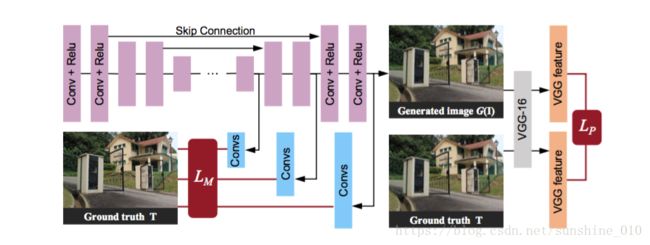

Figure 2. The architecture of our proposed attentive GAN. The generator consists of an attentive-recurrent network and a contextual autoencoder with skip connections. The discriminator is formed by a series of convolution layers and guided by the attention map. Best viewed in color.

Figure 4. The architecture of our contextual autoencoder. Multiscale loss and perceptual loss are used to help train the autoencoder.

Conclusion

We have proposed a single-image based raindrop removal method. The method uses a generative adversarial network, in which the generative network produces the attention map via an attentive-recurrent network and applies this map, along with the input image, to generate a raindrop-free image through a contextual autoencoder. Our discriminative network then assesses the validity of the generated output globally and locally. To enable local validation, we inject the attention map into the network. Our novelty lies in the use of the attention map in both the generative and discriminative networks. We also believe that our method is the first that can handle a relatively severe presence of raindrops, which state-of-the-art raindrop removal methods fail to handle.

Person Re-identification

Multi-shot Pedestrian Re-identification via Sequential Decision Making

Abstract

Multi-shot pedestrian re-identification is at the core of surveillance video analysis. It matches two tracks of pedestrians from different cameras. In contrast to existing works that aggregate single-frame features with time-series models such as recurrent neural networks, in this paper we propose an interpretable reinforcement learning based approach to this problem. In particular, we train an agent to verify a pair of images at each time step. The agent can choose to output a result (same or different) or request another pair of images to verify (unsure). In this way, our model implicitly learns the difficulty of image pairs, and postpones the decision when it has not accumulated enough evidence.

Moreover, by adjusting the reward for the unsure action, we can easily trade off speed against accuracy. On three open benchmarks, our method is competitive with state-of-the-art methods while using only 3% to 6% of the images. These promising results demonstrate that our method is favorable in both efficiency and performance.

Introduction

Pedestrian Re-identification (re-id) aims at matching pedestrians in different tracks from multiple cameras. It helps to recover the trajectory of a certain person in a broad area across different non-overlapping cameras. Thus, it is a fundamental task in a wide range of applications such as video surveillance for security and sports video analysis. The most popular setting for this task is single-shot re-id, which judges whether two persons in different video frames are the same person. This setting has been extensively studied in recent years [7, 1, 16, 28, 17]. On the other hand, multi-shot re-id (or, in a stricter setting, video-based re-id) is more realistic in practice, but it is still at an early stage compared with single-shot re-id.

Currently, the mainstream approach to multi-shot re-id is to first extract features from single frames and then aggregate these image-level features. Consequently, the key lies in how to leverage the rich, yet possibly redundant and noisy, information residing in multiple frames to build track-level features from image-level features. A common choice is pooling [37] or bag of words [38]. Furthermore, if the input tracks are videos (namely, the temporal order of frames is preserved), optical flow [5] or recurrent neural networks (RNNs) [24, 39] are commonly adopted to exploit motion cues. However, most of these methods have two main problems. First, using all the frames in each track is computationally inefficient because of redundancy. Second, there may be noisy frames caused by occlusion, blur or incorrect detections, and these noisy frames may significantly deteriorate performance.

To solve the aforementioned problems, we formulate multi-shot re-id as a sequential decision making task. Intuitively, if the agent is confident enough about the existing evidence, it can output the result immediately. Otherwise, it needs to ask for another pair to verify. To model such a human-like decision process, we feed a pair of images from the two tracks to a verification agent at each time step. The agent then outputs one of three actions: same, different or unsure. By adjusting the rewards of these three actions, we can trade off the number of images used against the final accuracy. We depict several examples in Fig. 1. For easy examples, the agent can decide using only one pair of images, while for hard cases it chooses to see more pairs to accumulate evidence. In contrast to previous works that explicitly deduplicate redundant frames [6] or distinguish high-quality from low-quality frames [21], our method implicitly considers these factors in a data-driven, end-to-end manner. Moreover, our method is general enough to accommodate any single-shot re-id method as the image-level feature extractor, including methods not based on deep learning.
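The decision process above can be sketched as a short loop: at each step the agent scores the three actions for the current pair and stops as soon as the best action is not "unsure". This is a minimal sketch, not the authors' code. The Q-function `q_fn` is a hypothetical stand-in for the trained agent, the fallback when pairs run out is our own assumption, and the reward values (+1, -1 and a small penalty for "unsure") are illustrative placeholders showing how the unsure reward trades speed for accuracy.

```python
from typing import Callable, Dict, Sequence, Tuple

ACTIONS = ("same", "different", "unsure")
Pair = Tuple[float, ...]


def run_episode(pairs: Sequence[Pair], q_fn: Callable[[Pair], Dict[str, float]]) -> Tuple[str, int]:
    """Feed image pairs one at a time and stop as soon as the agent is not 'unsure'.
    Returns the final decision and the number of pairs consumed."""
    if not pairs:
        raise ValueError("need at least one image pair")
    for step, pair in enumerate(pairs, start=1):
        q = q_fn(pair)
        action = max(ACTIONS, key=lambda a: q[a])  # greedy action w.r.t. Q values
        if action != "unsure":
            return action, step
    # Out of pairs: force a terminal decision (assumed fallback, not from the paper).
    q = q_fn(pairs[-1])
    return max(("same", "different"), key=lambda a: q[a]), len(pairs)


def reward(action: str, label: str, r_unsure: float = -0.2) -> float:
    """Hypothetical reward: +1 / -1 for a correct / wrong final answer, and a small
    penalty for 'unsure'; a larger penalty pushes the agent to decide earlier."""
    if action == "unsure":
        return r_unsure
    return 1.0 if action == label else -1.0
```

For example, with a toy `q_fn` that returns `{"same": p[0], "different": 0.0, "unsure": 0.5}`, the pairs `[(0.1,), (0.2,), (0.9,)]` lead to `("same", 3)`: the agent stays unsure until the third, more confident pair.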

The main contributions of our work are as follows:

• We are the first to introduce reinforcement learning into the multi-shot re-id problem. We train an agent to either output results or request more samples. Thus, the agent can stop early or postpone its decision as needed. Thanks to this behavior, we can balance speed and accuracy simply by adjusting the rewards.

• We verify the effectiveness and efficiency of our method on three popular multi-shot re-id datasets. Together with a carefully designed image feature extractor, our method can outperform state-of-the-art methods while using only 3% to 6% of the images, without resorting to post-processing or additional metric learning methods.

• We empirically demonstrate that the Q function could implicitly indicate the difficulties of samples. This desirable property makes the results of our method more interpretable.

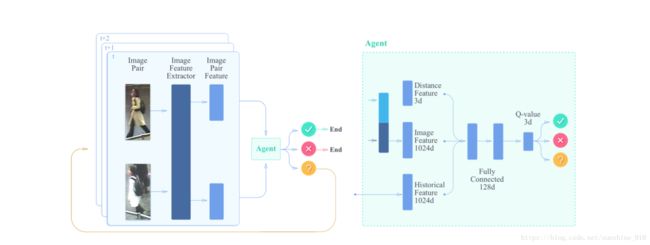

Figure 1: Examples demonstrating the motivation of our work. For most tracks, a few pairs, or even a single pair, of images are enough to make a confident prediction. However, in hard cases, more pairs are needed to alleviate the influence of low-quality samples.

Figure 2: An illustration of our proposed method. First, we train an image-level feature extractor (left part) and then aggregate sequence-level features with an agent (right part). The agent takes several kinds of features of one pair of images and takes one of three possible actions. If the action taken is "unsure", the process is repeated.

Conclusion

In this paper, we have introduced a novel approach to the multi-shot pedestrian re-identification problem by casting it as a pair-by-pair decision making process. Thanks to reinforcement learning, we can train an agent for this task. Specifically, it receives image pairs sequentially and outputs one of three actions: same, different or unsure. By stopping early or postponing its decision, the agent can adjust the budget needed to make a confident decision according to the difficulty of the tracks.

We have tested our method on three different multi-shot pedestrian re-id datasets. Experimental results show that our model yields results competitive with, or even better than, state-of-the-art methods while using only 3% to 6% of the images. Furthermore, the Q values output by the agent are a good indicator of the difficulty of image pairs, which makes our decision process more interpretable.

Currently, the weight of each frame is determined heuristically by the Q value, which means the weight is not fully guided by the final objective function. More advanced mechanisms such as attention can easily be incorporated into our framework. We leave this for future work.

Person Transfer GAN to Bridge Domain Gap for Person Re-Identification

Although the performance of person Re-Identification (ReID) has been significantly boosted, many challenging issues in real scenarios have not been fully investigated, e.g., complex scenes and lighting variations, viewpoint and pose changes, and the large number of identities in a camera network. To facilitate research on these issues, this paper contributes a new dataset called MSMT17 with several important features: 1) the raw videos are taken by a 15-camera network deployed in both indoor and outdoor scenes; 2) the videos cover a long period of time and present complex lighting variations; and 3) it currently contains the largest number of annotated identities, i.e., 4,101 identities and 126,441 bounding boxes. We also observe that a domain gap commonly exists between datasets, which causes a severe performance drop when training and testing on different datasets. As a result, available training data cannot be effectively leveraged for new testing domains. To relieve the expensive cost of annotating new training samples, we propose a Person Transfer Generative Adversarial Network (PTGAN) to bridge the domain gap. Comprehensive experiments show that the domain gap can be substantially narrowed by PTGAN.

Introduction

Person Re-Identification (ReID) aims to match and return images of a probe person from a large-scale gallery set collected by camera networks. Because of its important applications in security and surveillance, person ReID has drawn much attention from both academia and industry. Thanks to the development of deep learning and the availability of many datasets, person ReID performance has been significantly boosted. For example, the Rank-1 accuracy of single query on Market1501 [38] has been improved from 43.8% [21] to 89.9% [30]. The Rank-1 accuracy on the CUHK03 [20] labeled dataset has been improved from 19.9% [20] to 88.5% [27]. A more detailed review of current approaches is given in Sec. 2.
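Rank-1 accuracy, the metric quoted above, is the fraction of queries whose closest gallery image shares the query's identity. The sketch below computes it from a query-by-gallery distance matrix. It is a simplified illustration: it omits protocol details such as discarding gallery images of the same identity taken by the same camera as the query, which the standard Market1501 evaluation applies, so its numbers are not directly comparable with those reported here.

```python
from typing import Sequence


def rank1_accuracy(
    dist: Sequence[Sequence[float]],  # dist[q][g]: distance between query q and gallery image g
    query_ids: Sequence[int],
    gallery_ids: Sequence[int],
) -> float:
    """Fraction of queries whose nearest gallery image has the same identity."""
    hits = 0
    for row, qid in zip(dist, query_ids):
        nearest = min(range(len(row)), key=lambda j: row[j])
        hits += gallery_ids[nearest] == qid
    return hits / len(query_ids)
```

For instance, with distances `[[0.1, 0.9], [0.2, 0.3]]`, query identities `[1, 2]` and gallery identities `[1, 2]`, only the first query retrieves the correct person first, giving a Rank-1 accuracy of 0.5.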

Although the performance on current person ReID datasets is pleasing, several open issues still hinder the application of person ReID. First, existing public datasets differ from data collected in real scenarios. For example, current datasets either contain a limited number of identities or are taken in constrained environments. The currently largest, DukeMTMC-reID [40], contains fewer than 2,000 identities and presents simple lighting conditions. These limitations simplify the person ReID task and help to achieve high accuracy. In real scenarios, person ReID is commonly executed within a camera network deployed in both indoor and outdoor scenes, and processes videos taken over a long period of time. Accordingly, real applications have to cope with challenges such as a large number of identities and complex lighting and scene variations, which current algorithms might fail to address.

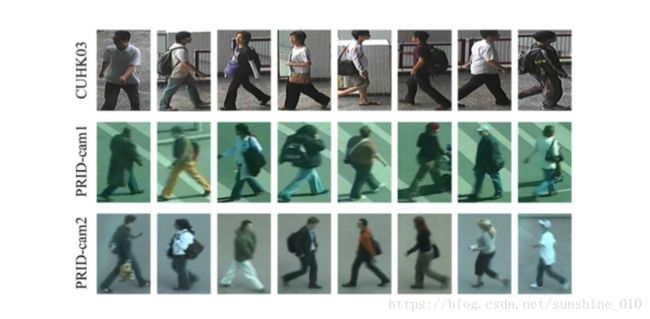

Another challenge we observe is that a domain gap exists between different person ReID datasets, i.e., training and testing on different person ReID datasets results in a severe performance drop. For example, the model trained on CUHK03 [20] achieves a Rank-1 accuracy of only 2.0% when tested on PRID [10]. As shown in Fig. 1, the domain gap can be caused by many factors, such as different lighting conditions, resolutions, human race, seasons, backgrounds, etc. This challenge also hinders the application of person ReID, because available training samples cannot be effectively leveraged for new testing domains. Since annotating person ID labels is expensive, research efforts are needed to narrow or eliminate the domain gap.

Aiming to facilitate research towards applications in realistic scenarios, we collect a new Multi-Scene Multi-Time person ReID dataset (MSMT17). Different from existing datasets, MSMT17 is collected and annotated to present several new features. 1) The raw videos are taken by a 15-camera network deployed in both indoor and outdoor scenes; therefore, it presents complex scene transformations and backgrounds. 2) The videos cover a long period of time, e.g., four days in a month and three hours in the morning, noon and afternoon, respectively, of each day, thus presenting complex lighting variations. 3) It currently contains the largest number of annotated identities and bounding boxes, i.e., 4,101 identities and 126,441 bounding boxes. To the best of our knowledge, MSMT17 is currently the largest and most challenging public dataset for person ReID. More detailed descriptions are given in Sec. 3.

To address the second challenge, we propose to bridge the domain gap by transferring persons in dataset A to another dataset B. The transferred persons from A should keep their identities while presenting styles (e.g., backgrounds, lighting) similar to those of persons in B. We model this transfer procedure with a Person Transfer Generative Adversarial Network (PTGAN), which is inspired by Cycle-GAN [41]. Different from Cycle-GAN [41], PTGAN imposes extra constraints on the person foregrounds to keep their identities stable during transfer. Compared with Cycle-GAN, PTGAN generates high-quality person images, in which person identities are kept and styles are effectively transformed. Extensive experimental results on several datasets show that PTGAN effectively reduces the domain gap among datasets.
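The extra foreground constraint can be sketched as an identity-preserving term that penalizes changes only inside the person's foreground, leaving the background free to adopt the target style. This is a minimal sketch under stated assumptions: the foreground mask is assumed to come from some person segmentation model, the penalty is a mean squared change (the exact norm is an assumption), and the combination with the Cycle-GAN style loss uses a placeholder weight `lambda_id`.

```python
from typing import List

Image = List[List[float]]


def masked_identity_loss(original: Image, transferred: Image, fg_mask: Image) -> float:
    """Mean squared change of foreground pixels only; background pixels (mask 0)
    may change freely. fg_mask is assumed to come from a person segmenter."""
    n = sum(len(r) for r in original)
    return sum(
        ((t - o) * m) ** 2
        for ro, rt, rm in zip(original, transferred, fg_mask)
        for o, t, m in zip(ro, rt, rm)
    ) / n


def ptgan_style_objective(style_loss: float, id_loss_a: float, id_loss_b: float,
                          lambda_id: float = 10.0) -> float:
    """Style (adversarial + cycle) loss plus weighted identity terms for both
    transfer directions A->B and B->A; lambda_id is a placeholder value."""
    return style_loss + lambda_id * (id_loss_a + id_loss_b)
```

Because background pixels are multiplied by zero, a transfer that repaints only the background incurs no identity penalty, which is the behavior the foreground constraint is meant to encourage.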

Our contributions can be summarized in three aspects. 1) A new challenging large-scale dataset, MSMT17, is collected and will be released. Compared with existing datasets, MSMT17 defines more realistic and challenging person ReID tasks. 2) We propose person transfer to take advantage of existing labeled data from different datasets. It has the potential to relieve expensive data annotation on new datasets and to make it easy to train person ReID systems in real scenarios. An effective PTGAN model is presented for person transfer. 3) This paper analyzes several issues hindering the application of person ReID. The proposed MSMT17 and algorithms have the potential to facilitate future research on person ReID.

Figure 1: Illustration of the domain gap between CUHK03 and PRID. CUHK03 and PRID clearly present different styles, e.g., distinct lighting, resolutions, human race, seasons, backgrounds, etc., resulting in low accuracy when training on CUHK03 and testing on PRID.

Conclusions and Discussions

This paper contributes a large-scale dataset, MSMT17. MSMT17 presents substantial variations in lighting, scenes, backgrounds, human poses, etc., and is currently the largest person ReID dataset. Compared with existing datasets, MSMT17 defines a more realistic and challenging person ReID task.

PTGAN is proposed as an original approach to person transfer to bridge the domain gap among datasets. Extensive experiments show that PTGAN effectively reduces the domain gap. Different cameras may present different styles, making it difficult to perform multiple style transfers with one mapping function. Therefore, the person transfer strategy in Sec. 5.4.2 and Sec. 5.5 is not yet optimal. This also explains why PTGAN learned on each individual target camera performs better in Sec. 5.4.1. A better strategy would be to consider the style differences among cameras to obtain more stable mapping functions. In future work, we will continue to study more effective and efficient person transfer strategies for large datasets.

Vision and Language

Learning Semantic Concepts and Order for Image and Sentence Matching

Abstract

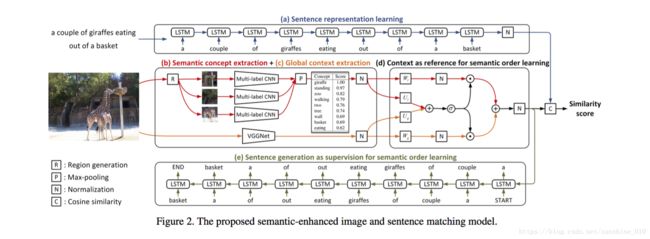

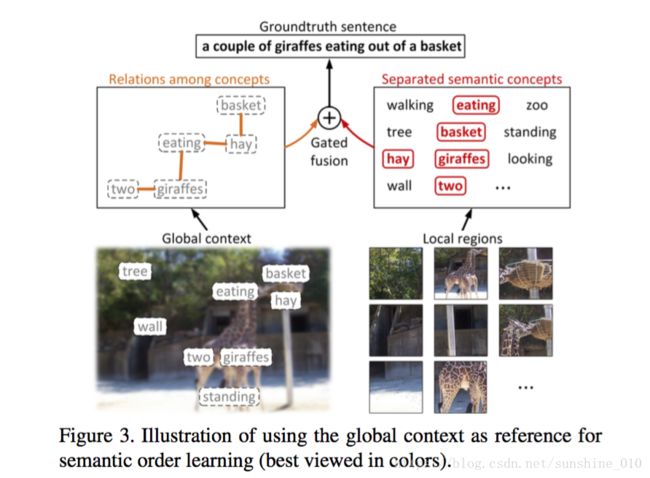

Image and sentence matching has made great progress recently, but it remains challenging due to the large visual-semantic discrepancy. This mainly arises because the pixel-level image representation usually lacks the high-level semantic information present in its matched sentence. In this work, we propose a semantic-enhanced image and sentence matching model, which improves the image representation by learning semantic concepts and then organizing them in a correct semantic order. Given an image, we first use a multi-regional multi-label CNN to predict its semantic concepts, including objects, properties, actions, etc. Then, considering that different orders of semantic concepts lead to diverse semantic meanings, we use a context-gated sentence generation scheme for semantic order learning. It simultaneously uses the global image context, which contains concept relations, as a reference and the ground-truth semantic order in the matched sentence as supervision. After obtaining the improved image representation, we learn the sentence representation with a conventional LSTM, and then jointly perform image and sentence matching and sentence generation for model learning. Extensive experiments demonstrate the effectiveness of our learned semantic concepts and order, achieving state-of-the-art results on two public benchmark datasets.

Introduction

The task of image and sentence matching refers to measuring the visual-semantic similarity between an image and a sentence. It has been widely applied to image-sentence cross-modal retrieval, e.g., given an image query, finding similar sentences (image annotation), and given a sentence query, retrieving matched images (text-based image search).

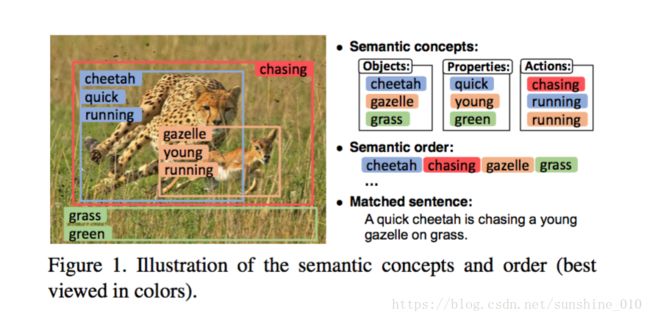

Although much progress has been achieved in this area, it is still nontrivial to accurately measure the similarity between an image and a sentence, due to the huge visual-semantic discrepancy. Taking the image and its matched sentence in Figure 1 as an example, the main objects, properties and actions appearing in the image are {cheetah, gazelle, grass}, {quick, young, green} and {chasing, running}, respectively. These high-level semantic concepts are the essential content to be compared with the matched sentence, but they cannot easily be represented from the pixel-level image. Most existing methods [11, 14, 20] jointly represent all the concepts by extracting a global CNN [28] feature vector, in which the concepts are tangled with each other. As a result, some primary foreground concepts tend to dominate, while other secondary background ones are likely to be ignored, which is not optimal for fine-grained image and sentence matching. To comprehensively predict all the semantic concepts for the image, one possible way is to adaptively explore attribute learning frameworks [6, 35, 33]. However, such methods have not been well investigated in the context of image and sentence matching.

In addition to semantic concepts, how to organize them correctly, namely the semantic order, plays an even more important role in bridging the visual-semantic discrepancy. As illustrated in Figure 1, given the semantic concepts mentioned above, if we incorrectly set their semantic order as "a quick gazelle is chasing a young cheetah on grass", the meaning would be completely different from the image content and the matched sentence. However, directly learning the correct semantic order from semantic concepts is very difficult, since there exist various incorrect orders that also make semantic sense. We could resort to the global image context, since it already indicates the correct semantic order through the spatial relations among semantic concepts, e.g., the cheetah is to the left of the gazelle. But it is unclear how to suitably combine the context with the semantic concepts and make them directly comparable to the semantic order in the sentence.

Alternatively, we could generate a descriptive sentence from the image as its representation. However, image-based sentence generation itself, namely image captioning, is also a very challenging problem. Even state-of-the-art image captioning methods cannot always generate realistic sentences that capture all image details. These details are essential to the matching task, since the global image-sentence similarity is aggregated from local similarities in image details. Accordingly, these methods cannot achieve very high performance for image and sentence matching [30, 3].

In this work, to bridge the visual-semantic discrepancy between image and sentence, we propose a semantic-enhanced image and sentence matching model, which improves the image representation by learning semantic concepts and then organizing them in a correct semantic order. To learn the semantic concepts, we exploit a multi-regional multi-label CNN that can simultaneously predict multiple concepts in terms of objects, properties, actions, etc. The inputs of this CNN are multiple selectively extracted regions from the image, which can comprehensively capture all the concepts regardless of whether they are primary foreground ones. To organize the extracted semantic concepts in a correct semantic order, we first fuse them with the global context of the image in a gated manner. The context includes the spatial relations of all the semantic concepts, which can be used as a reference to facilitate semantic order learning. Then we use the ground-truth semantic order in the matched sentence as supervision, by forcing the fused image representation to generate the matched sentence.
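The two steps described above, combining region-level concept predictions and fusing them with the global context through a gate, can be sketched in a few lines. This is a simplified illustration under assumptions not stated in the text: per-region concept scores are aggregated by element-wise max pooling, and the gate is an element-wise sigmoid of the sum of the two inputs; in a real model both inputs would first pass through learned projections to a common space, which this sketch replaces with the identity.

```python
import math
from typing import List, Sequence


def aggregate_regions(region_scores: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise max over per-region concept scores (assumed aggregation):
    a concept is present if any selected region detects it."""
    return [max(col) for col in zip(*region_scores)]


def _sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def gated_fusion(concepts: Sequence[float], context: Sequence[float]) -> List[float]:
    """Per dimension: t = sigmoid(p + x); fused = t * p + (1 - t) * x.
    Learned projections of p and x are omitted (identity) in this sketch."""
    fused = []
    for p, x in zip(concepts, context):
        t = _sigmoid(p + x)
        fused.append(t * p + (1.0 - t) * x)
    return fused
```

The gate lets each dimension of the fused representation lean toward either the concept scores or the global context, so spatial relations from the context can inform the order learned from the matched sentence.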

After enhancing the image representation with both semantic concepts and order, we learn the sentence representation with a conventional LSTM [10]. Then the representations of image and sentence are matched with a structured objective, which is combined with a sentence generation objective for joint model learning. To demonstrate the effectiveness of the proposed model, we perform several experiments on image annotation and retrieval on two publicly available datasets, and achieve state-of-the-art results.
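The text does not spell out the structured matching objective. A common form for such objectives is a bidirectional hinge-based ranking loss over a batch of matched image-sentence pairs, sketched below as an assumption rather than the authors' exact formulation; the margin value is a placeholder.

```python
from typing import Sequence


def bidirectional_ranking_loss(sim: Sequence[Sequence[float]], margin: float = 0.2) -> float:
    """sim[i][j] = similarity of image i and sentence j; matched pairs lie on the diagonal.
    Each matched pair must beat every mismatched sentence (for its image) and every
    mismatched image (for its sentence) by at least `margin`."""
    n = len(sim)
    loss = 0.0
    for i in range(n):
        for k in range(n):
            if k == i:
                continue
            loss += max(0.0, margin - sim[i][i] + sim[i][k])  # image i vs wrong sentence k
            loss += max(0.0, margin - sim[i][i] + sim[k][i])  # sentence i vs wrong image k
    return loss
```

When matched pairs already outscore all mismatches by the margin, the loss is zero; when all similarities are equal, every term contributes the full margin.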

Conclusions and Future Work

In this work, we have proposed a semantic-enhanced image and sentence matching model. Our main contribution is improving the image representation by learning semantic concepts and then organizing them in a correct semantic order. This is accomplished by a series of model components in terms of multi-regional multi-label CNN, gated fusion unit, and joint matching and generation learning. We have systematically studied the impact of these components on the image and sentence matching, and demonstrated the effectiveness of our model by achieving significant performance improvements.

In the future, we will replace VGGNet with ResNet in the multi-regional multi-label CNN to predict the semantic concepts more accurately, and jointly train it with the rest of our model in an end-to-end manner. Since our model can perform both image and sentence matching and sentence generation, we would also like to extend it to the image captioning task. Although Pan et al. [24] have shown the effectiveness of visual-semantic embedding for video captioning, its effectiveness for image captioning has not been well investigated.

Are You Talking to Me? Reasoned Visual Dialog Generation through Adversarial Learning

Abstract

The Visual Dialogue task requires an agent to engage in a conversation about an image with a human. It extends the Visual Question Answering task in that the agent needs to answer a question about an image, but must do so in light of the previous dialogue. The key challenge in Visual Dialogue is thus maintaining a consistent and natural dialogue while continuing to answer questions correctly. We present a novel approach that combines Reinforcement Learning and Generative Adversarial Networks (GANs) to generate more human-like responses to questions. The GAN helps overcome the relative paucity of training data and the tendency of the typical MLE-based approach to generate overly terse answers. Critically, the GAN is tightly integrated into the attention mechanism that generates human-interpretable reasons for each answer. This means that the discriminative model of the GAN has the task of assessing whether a candidate answer is generated by a human or not, given the provided reason. This is significant because it drives the generative model to produce high-quality answers that are well supported by the associated reasoning. The method also achieves state-of-the-art results on the primary benchmark.

Introduction

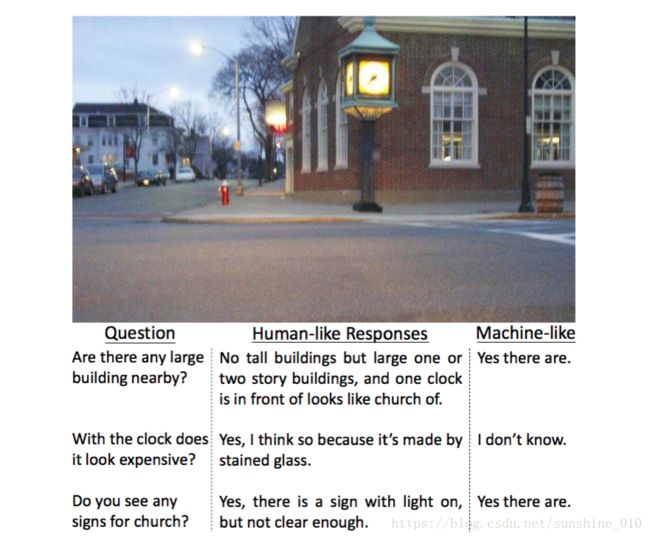

The combined interpretation of vision and language has enabled the development of a range of applications that have made interesting steps towards Artificial Intelligence, including Image Captioning [11, 34, 37], Visual Question Answering (VQA) [1, 22, 38], and Referring Expressions [10, 12, 41]. VQA, for example, requires an agent to answer a previously unseen question about a previously unseen image, and is recognised as being an AI-Complete problem [1]. Visual Dialogue [5] represents an extension to the VQA problem whereby an agent is required to engage in a dialogue about an image. This is significant because it demands that the agent be able to answer a series of questions, each of which may be predicated on the previous questions and answers in the dialogue. Visual Dialogue thus reflects one of the key challenges in AI and Robotics: building an agent that is capable of acting upon the world and that we can collaborate with through dialogue.

Due to the similarity between the VQA and Visual Dialog tasks, VQA methods [19, 40] have been directly applied to the Visual Dialog problem. The fact that Visual Dialog requires an ongoing conversation, however, demands more than just taking into consideration the state of the conversation thus far. Ideally, the agent should be an engaged participant in the conversation, cooperating towards a larger goal, rather than generating single-word answers, even if they are easier to optimise. Figure 1 provides an example of the distinction between the type of responses a VQA agent might generate and the more involved responses that a human is likely to generate when engaged in the conversation. These more human-like responses are not only longer but also provide reasoning information that might be useful even though it is not specifically asked for.

Previous Visual Dialog systems [5] follow a neural translation mechanism that is often used in VQA, predicting the response given the image and the dialog history with the maximum likelihood estimation (MLE) objective function. However, because this over-simplified training objective focuses only on word-level correctness, the produced responses tend to be generic and repetitive. For example, a simple response of ‘yes’, ‘no’, or ‘I don’t know’ can safely answer a large number of questions and lead to a high MLE objective value. Generating more comprehensive answers, and a deeper engagement of the agent in the dialogue, requires a more engaged training process.

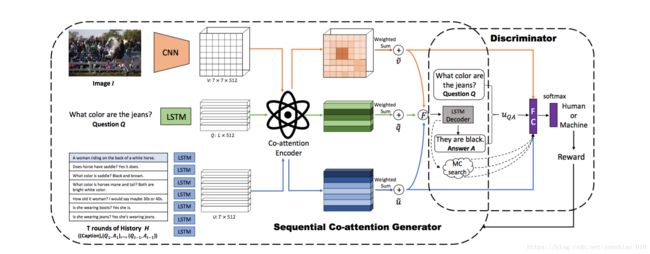

A good dialogue generation model should generate responses indistinguishable from those a human might produce. In this paper, we introduce an adversarial learning strategy, motivated by the previous success of adversarial learning in many computer vision [3, 21] and sequence generation [4, 42] problems. Specifically, we frame the task as a reinforcement learning problem in which we jointly train two sub-modules: a sequence generative model that produces response sentences on the basis of the image content and the dialog history, and a discriminator that leverages the generator's previous memories to distinguish between human-generated and machine-generated dialogues. The generator tries to produce responses that fool the discriminator into believing they are human-generated, while the output of the discriminative model is used as a reward for the generative model, encouraging it to generate more human-like dialogue.
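Using the discriminator's output as a reward can be sketched with a REINFORCE-style surrogate loss. This is one standard way to turn a scalar reward into a training signal for a sequence generator, assumed here for illustration; `reinforce_loss`, the `baseline` term and the plain-float inputs are hypothetical, and in practice the log-probabilities would come from an autograd framework so that the loss can be differentiated.

```python
from typing import Sequence


def reinforce_loss(token_log_probs: Sequence[float], reward: float, baseline: float = 0.0) -> float:
    """REINFORCE surrogate: -(reward - baseline) * sum_t log p(w_t).

    Minimizing this raises the likelihood of responses the discriminator scores
    above the baseline and lowers it for responses scored below the baseline.
    """
    advantage = reward - baseline  # e.g. discriminator's "human-generated" probability minus a baseline
    return -advantage * sum(token_log_probs)
```

Subtracting a baseline does not change the expected gradient but typically reduces its variance; a reward equal to the baseline contributes nothing.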

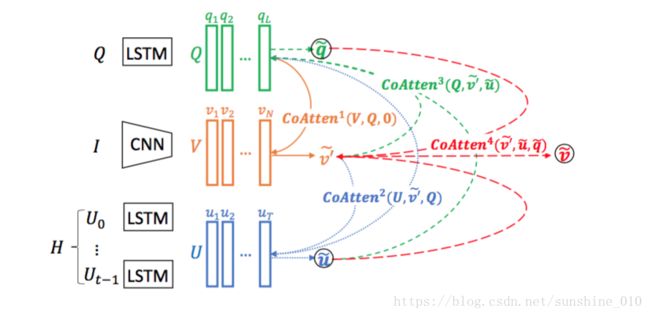

Although our proposed framework is inspired by generative adversarial networks (GANs) [9], several technical contributions lead to its success on the visual dialog generation task. First, we propose a sequential co-attention generative model that aims to ensure that attention can be passed effectively across the image, question and dialog history. The co-attended multi-modal features are combined to generate a response. Second, and significantly, within the proposed structure the discriminator has access to the attention weights the generator used in generating its response. Note that the attention weights can be seen as a form of ‘reason’ for the generated response: they indicate which image regions should be focused on and which dialog pairs are informative when generating the response. This structure is important as it allows the discriminator to assess the quality of the response given the reason. It also allows the discriminator to assess the response in the context of the dialogue thus far. Finally, as with most sequence generation problems, the quality of the response can only be assessed over the whole sequence. We follow [42] and apply Monte Carlo (MC) search to calculate the intermediate rewards.
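The Monte Carlo search for intermediate rewards can be sketched as follows, in the spirit of the rollout scheme attributed to [42]: each prefix of a response is completed several times, and the discriminator scores of the completions are averaged, while the full response is scored directly. `rollout` and `discriminator` are hypothetical callables standing in for the generator's sampling policy and the trained discriminator; the default number of rollouts is an arbitrary choice.

```python
from typing import Callable, List, Sequence

# Hypothetical interfaces: rollout(prefix, target_length) -> completed sequence,
# discriminator(sequence) -> probability that the sequence is human-generated.
Rollout = Callable[[List[str], int], List[str]]
Discriminator = Callable[[List[str]], float]


def mc_intermediate_rewards(tokens: Sequence[str], rollout: Rollout,
                            discriminator: Discriminator, num_rollouts: int = 16) -> List[float]:
    """One reward per prefix length t = 1..T of the generated response."""
    T = len(tokens)
    rewards: List[float] = []
    for t in range(1, T + 1):
        prefix = list(tokens[:t])
        if t == T:
            # Complete response: score it directly.
            rewards.append(discriminator(prefix))
        else:
            # Partial response: average the scores of sampled completions.
            total = sum(discriminator(rollout(prefix, T)) for _ in range(num_rollouts))
            rewards.append(total / num_rollouts)
    return rewards
```

A deterministic toy rollout makes the behaviour easy to check: padding each prefix with a filler token and scoring the fraction of non-filler tokens yields rewards that grow as the prefix lengthens.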

We evaluate our method on the VisDial dataset [5] and show that it outperforms the baseline methods by a large margin. We also outperform several state-of-the-art methods. Specifically, our adversarially learned generative model outperforms our strong baseline MLE model by 1.87% on recall@5, and improves over the previous best reported results by 2.14% on recall@5 and by 2.50% on recall@10. Qualitative evaluation shows that our generative model generates more informative responses, and a human study shows that 49% of our responses pass the Turing Test. We additionally implement a model under the discriminative setting (where a candidate response list is given) and achieve state-of-the-art performance.
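For reference, recall@k in this ranking-based evaluation is the fraction of questions whose ground-truth answer appears among the top k ranked candidates. The sketch below is a minimal version under that assumption; the helper names are hypothetical, and ties are broken in favour of the ground truth, which may differ from the official evaluation script.

```python
from typing import Sequence


def rank_of_ground_truth(scores: Sequence[float], gt_index: int) -> int:
    """1-based rank of candidate gt_index when candidates are sorted by descending score.
    Candidates tied with the ground truth do not push it down (optimistic tie-breaking)."""
    gt_score = scores[gt_index]
    return 1 + sum(1 for s in scores if s > gt_score)


def recall_at_k(gt_ranks: Sequence[int], k: int) -> float:
    """Fraction of questions whose ground-truth answer is ranked within the top k."""
    if not gt_ranks:
        raise ValueError("recall@k needs at least one question")
    return sum(1 for r in gt_ranks if r <= k) / len(gt_ranks)
```

For example, ground-truth ranks of 1, 3, 7 and 12 over four questions give recall@5 = 0.5 and recall@10 = 0.75.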

Figure 1: Human-like vs. Machine-like responses in a visual dialog. The human-like responses clearly answer the questions more comprehensively, and help to maintain a meaningful dialogue.

Figure 2: The adversarial learning framework of our proposed model. Our model is composed of two components, the first being a sequential co-attention generator that takes image, question and dialog history tuples as input and uses the co-attention encoder to jointly reason over them. The second component is a discriminator tasked with labelling whether each answer has been generated by a human or by the generative model, taking the attention weights into account. The output from the discriminator is used as a reward to push the generator to generate responses that are indistinguishable from those a human might generate.

Figure 3: The sequential co-attention encoder. Each input feature is co-attended by the other two features in a sequential fashion, using Eqs. 1-3. The number on each function indicates the sequential order, and the final attended features ũ, ṽ and q̃ form the output of the encoder.

Conclusion

Visual Dialog generation is an interesting topic that requires a machine to understand visual content and natural language dialog, and to perform multi-modal reasoning. More importantly, for a human-computer interaction interface in future robotics and AI, how human-like the generated responses are is a significant measure in addition to their correctness. In this paper, we have proposed an adversarial learning based approach that encourages the generator to produce more human-like dialogs. Technically, by combining a sequential co-attention generative model that can jointly reason over the image, dialog history and question, a discriminator that can dynamically access the attention memories, and intermediate rewards, our final model achieves state-of-the-art results on the VisDial dataset. A Turing-Test-style study also shows that our model can produce more human-like visual dialog responses.

Segmentation, Detection

Deep Unsupervised Saliency Detection: A Multiple Noisy Labeling Perspective

Abstract

The success of current deep saliency detection methods heavily depends on the availability of large-scale supervision in the form of per-pixel labeling. Such supervision, while labor-intensive and not always possible, tends to hinder the generalization ability of the learned models. By contrast, traditional unsupervised saliency detection methods based on handcrafted features, even though they have been surpassed by deep supervised methods, are generally dataset-independent and could be applied in the wild. This raises a natural question: “Is it possible to learn saliency maps without using labeled data while improving the generalization ability?” To this end, we present a novel perspective on unsupervised saliency detection: learning from multiple noisy labelings generated by “weak” and “noisy” unsupervised handcrafted saliency methods. Our end-to-end deep learning framework for unsupervised saliency detection consists of a latent saliency prediction module and a noise modeling module that work collaboratively and are optimized jointly. Explicit noise modeling enables us to deal with noisy saliency maps in a probabilistic way. Extensive experimental results on various benchmark datasets show that our model not only outperforms all the unsupervised saliency methods by a large margin but also achieves performance comparable to recent state-of-the-art supervised deep saliency methods.
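The idea of treating each handcrafted saliency map as a noisy observation of a latent map can be illustrated in its simplest form. This is not the paper's learned noise modeling module: it assumes independent, zero-mean Gaussian noise with one variance per handcrafted method, in which case the maximum-likelihood latent map is the inverse-variance weighted mean of the noisy maps. Maps are flattened to lists of pixel values, and `fuse_noisy_maps` and `estimate_variances` are hypothetical names.

```python
from typing import List, Sequence


def fuse_noisy_maps(maps: Sequence[Sequence[float]], variances: Sequence[float]) -> List[float]:
    """Inverse-variance weighted mean: the ML latent map when each noisy map is
    y_j = y_bar + n_j with independent Gaussian noise n_j ~ N(0, variances[j])."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    n_pixels = len(maps[0])
    return [sum(w * m[p] for w, m in zip(weights, maps)) / total for p in range(n_pixels)]


def estimate_variances(maps: Sequence[Sequence[float]], latent: Sequence[float],
                       eps: float = 1e-6) -> List[float]:
    """Per-method noise variance from mean squared residuals against the latent estimate.
    eps keeps every variance strictly positive so the weights stay finite."""
    n = len(latent)
    return [eps + sum((m[p] - latent[p]) ** 2 for p in range(n)) / n for m in maps]
```

Alternating the two functions gives a crude EM-like estimate; note that with few sources this alternation can collapse onto a single map, since a map that already matches the estimate receives a tiny variance and hence a dominant weight (the `eps` floor only prevents division by zero).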

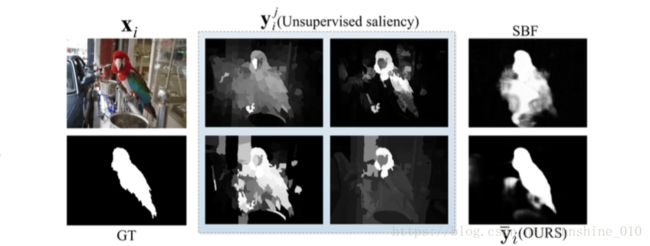

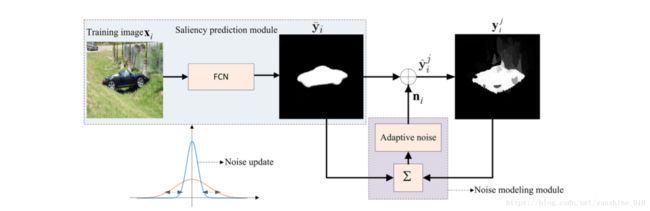

Figure 1. Unsupervised saliency learning from weak “noisy” saliency maps. Given an input image $x_i$ and its corresponding unsupervised saliency maps $y_{ij}$, our framework learns the latent saliency map $\bar{y}_i$ by jointly optimizing the saliency prediction module and the noise modeling module.

Compared with SBF [35], which also learns from unsupervised saliency but with a different strategy, our model achieves better performance.

Introduction

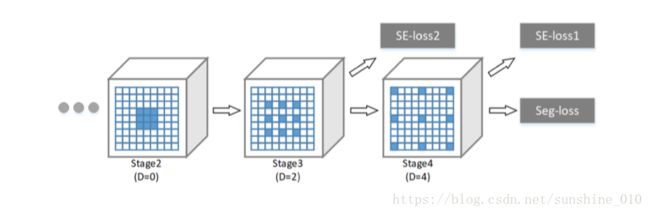

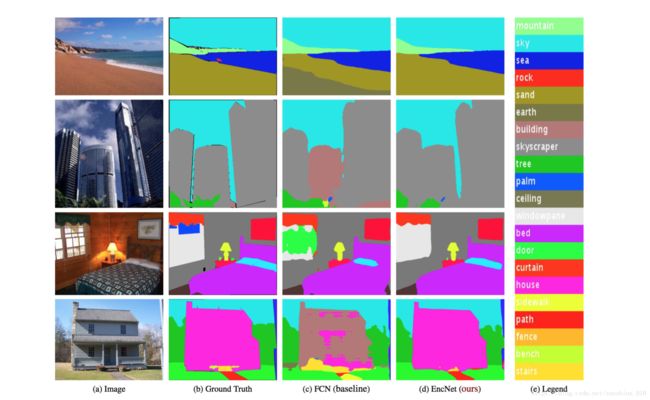

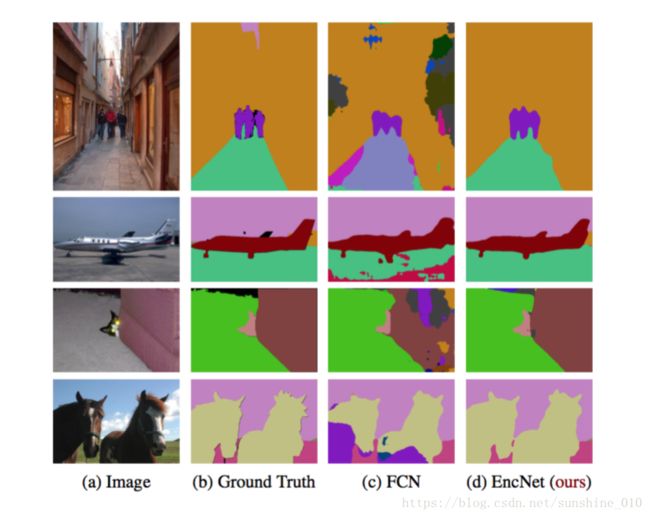

Saliency detection aims at identifying the visually interesting objects in images that are consistent with human perception, which is intrinsic to various vision tasks such as context-aware image editing [36] and image caption generation [31]. Depending on whether human annotations are used, saliency detection methods can be roughly divided into unsupervised and supervised methods. The former compute saliency directly based on various priors (e.g., center prior [9], global contrast prior [6], background connectivity prior [43], etc.), which are summarized and described with human knowledge. The latter learn a direct mapping from color images to saliency maps by exploiting large-scale human-annotated databases.
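To make the notion of a hand-designed prior concrete, the sketch below builds one common form of center prior: a Gaussian that peaks at the image center and decays with distance from it. The exact prior in [9] may differ; `center_prior` and the choice of sigma as a fraction of the image diagonal are assumptions.

```python
import math
from typing import List


def center_prior(height: int, width: int, sigma_frac: float = 0.25) -> List[List[float]]:
    """Gaussian center-bias map with values in (0, 1], peaking at the image center.

    sigma is set to sigma_frac times the image diagonal (an assumed convention).
    """
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    sigma = sigma_frac * math.hypot(height, width)
    return [
        [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2.0 * sigma ** 2)) for x in range(width)]
        for y in range(height)
    ]
```

An unsupervised method would typically combine such a map with other cues (e.g., contrast) rather than use it alone, since it ignores image content entirely.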