[Keras] LSTM and Bi-LSTM Neural Networks


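- Import the packages

- Load and split the dataset

- Preprocess the data

- Build and train the LSTM model

- Evaluate the model

- Build and train the Bi-LSTM model

- Print the Bi-LSTM model summary

- Evaluate the Bi-LSTM model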

Import the packages

```python
from tensorflow import keras
from tensorflow.keras.datasets import mnist
from tensorflow.keras.layers import Dense, LSTM, Bidirectional
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.models import Sequential
```

Load and split the dataset

We use the MNIST handwritten digit dataset.

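```python
# Load MNIST; load_data() already returns separate training and test splits
# (60,000 training and 10,000 test images, each 28x28 pixels)
(x_train, y_train), (x_test, y_test) = mnist.load_data()
```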

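Preprocess the data

Type conversion:

The values in x_train and x_test are integers, so we convert them to float32.

Normalization:

Pixel values range from 0 to 255, so dividing by 255 maps every value into the range [0, 1].

One-hot encoding:

The labels in y are the digits 0-9. We convert each label into a one-hot vector of length 10 (for example, the label 3 becomes [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]). The same approach works for any categorical labels.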

```python
# Convert the pixel data to float32
x_train = x_train.astype('float32')
x_test = x_test.astype('float32')

# Scale pixel values into [0, 1]
x_train = x_train / 255
x_test = x_test / 255

# One-hot encode the labels (10 classes: digits 0-9)
y_train = to_categorical(y_train, 10)
y_test = to_categorical(y_test, 10)

print(y_train[1])
```

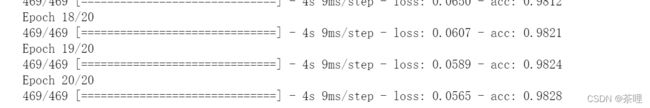

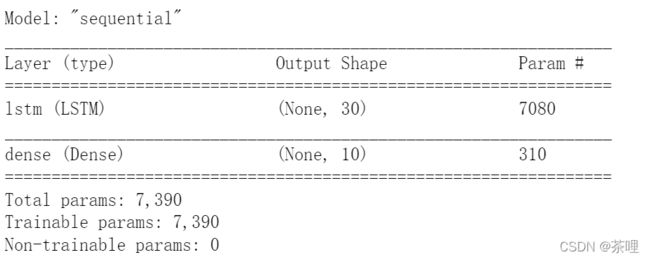
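To make the two preprocessing steps concrete, here is a minimal NumPy sketch on a tiny made-up batch. The helpers `normalize` and `one_hot` are hypothetical names used only for illustration; `one_hot` mimics what `to_categorical` does for integer labels but is not the Keras implementation.

```python
import numpy as np

def normalize(images):
    """Hypothetical helper: cast uint8 pixels (0-255) to float32 and scale into [0, 1]."""
    return images.astype("float32") / 255.0

def one_hot(labels, num_classes=10):
    """Hypothetical helper: integer labels -> one-hot rows (similar in spirit to to_categorical)."""
    labels = np.asarray(labels, dtype=np.int64)
    # Row i of the identity matrix is exactly the one-hot vector for class i
    return np.eye(num_classes, dtype="float32")[labels]

# Two made-up 2x2 "images" and their labels
fake_images = np.array([[[0, 255], [128, 64]],
                        [[255, 255], [0, 0]]], dtype=np.uint8)
fake_labels = np.array([5, 0])

x = normalize(fake_images)
y = one_hot(fake_labels)
print(x.dtype, x.min(), x.max())  # float32 0.0 1.0
print(y[1])                       # [1. 0. 0. 0. 0. 0. 0. 0. 0. 0.]
```

Indexing into an identity matrix is a common way to one-hot encode in NumPy because it builds all rows in a single vectorized step.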

Build and train the LSTM model

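```python
nb_lstm_outputs = 30  # number of LSTM units (size of the hidden state)
nb_time_steps = 28    # sequence length: each image row is one time step
nb_input_vector = 28  # features per time step: the 28 pixels of one row

# Build the model
model = Sequential()
model.add(LSTM(nb_lstm_outputs, input_shape=(nb_time_steps, nb_input_vector)))
model.add(Dense(10, activation='softmax'))
```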
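`LSTM(nb_lstm_outputs, input_shape=(nb_time_steps, nb_input_vector))` reads each 28x28 image as a sequence of 28 rows and, because `return_sequences` defaults to `False`, hands only the final 30-value hidden state to the `Dense` layer. The NumPy sketch below walks through that forward pass with random weights and the standard sigmoid/tanh gate activations. It is an illustration under those assumptions, not Keras's internal code; `lstm_forward`, `W`, `U` and `b` are hypothetical names, and the gate order inside the weight matrices is our own choice.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x_seq, W, U, b):
    """Hypothetical helper: run one LSTM layer and return the last hidden state.

    x_seq: (time_steps, input_dim), e.g. the 28 rows of one 28x28 image
    W: (input_dim, 4*units) input weights
    U: (units, 4*units) recurrent weights
    b: (4*units,) bias
    Gate blocks (our choice): input, forget, candidate, output.
    """
    units = U.shape[0]
    h = np.zeros(units)
    c = np.zeros(units)
    for x_t in x_seq:                         # one row of pixels per time step
        z = x_t @ W + h @ U + b
        i = sigmoid(z[:units])                # input gate
        f = sigmoid(z[units:2 * units])       # forget gate
        g = np.tanh(z[2 * units:3 * units])   # candidate cell state
        o = sigmoid(z[3 * units:])            # output gate
        c = f * c + i * g                     # update the cell state
        h = o * np.tanh(c)                    # new hidden state
    return h

rng = np.random.default_rng(0)
time_steps, input_dim, units = 28, 28, 30     # mirrors nb_time_steps, nb_input_vector, nb_lstm_outputs
image = rng.random((time_steps, input_dim))   # stand-in for one normalized MNIST image
W = rng.normal(scale=0.1, size=(input_dim, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

h_last = lstm_forward(image, W, U, b)
print(h_last.shape)  # (30,)
```

Since the output gate lies in (0, 1) and `tanh` in (-1, 1), every hidden value stays strictly between -1 and 1, which is the input range the `Dense(10, activation='softmax')` layer sees.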

```python
# Compile and train the model
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['acc'])
model.fit(x_train, y_train, epochs=20, batch_size=128)
```

```python
# Print the model summary
model.summary()
```

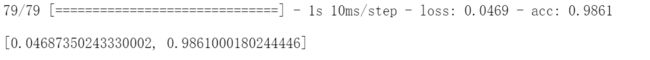
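The "Param #" column of `model.summary()` can be checked by hand. Assuming the Keras defaults (`use_bias=True`), an LSTM layer has four gates, each with an input weight matrix, a recurrent weight matrix and a bias vector, and a `Dense` layer has a weight matrix plus a bias. The helper names below are hypothetical.

```python
def lstm_params(input_dim, units):
    """4 gates x (input weights + recurrent weights + bias)."""
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    """Weight matrix plus bias of a fully connected layer."""
    return input_dim * units + units

lstm = lstm_params(28, 30)    # LSTM(30) on 28 features per time step
dense = dense_params(30, 10)  # Dense(10) on the 30-dim LSTM output
print(lstm, dense, lstm + dense)  # 7080 310 7390
```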

Evaluate the model

```python
model.evaluate(x_test, y_test, batch_size=128, verbose=1)
```

The results are better than those of the simple neural network in the previous article:


accuracy rose from 0.9615 to 0.9751.


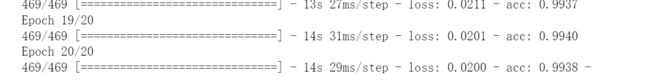

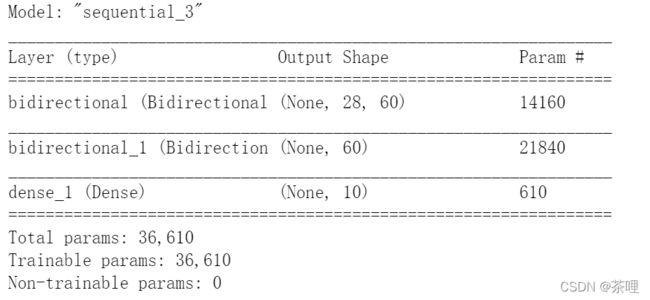

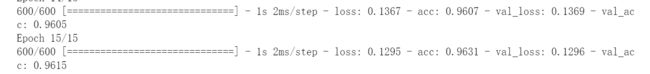
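`model.evaluate` returns the loss and the compiled metric. As far as we know, with `loss='categorical_crossentropy'` Keras resolves `metrics=['acc']` to categorical accuracy: a sample counts as correct when the highest softmax probability falls on the true class. The NumPy sketch below re-computes both quantities on made-up predictions; it is a simplified illustration (hypothetical function names, no per-batch averaging), not the Keras implementation.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_pred))."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

def categorical_accuracy(y_true, y_pred):
    """Fraction of samples whose arg-max prediction matches the one-hot label."""
    return float(np.mean(np.argmax(y_true, axis=1) == np.argmax(y_pred, axis=1)))

# Made-up softmax outputs for 4 samples over 3 classes
y_true = np.eye(3)[[0, 1, 2, 1]]
y_pred = np.array([[0.8, 0.1, 0.1],
                   [0.2, 0.7, 0.1],
                   [0.3, 0.3, 0.4],
                   [0.6, 0.3, 0.1]])  # the last sample is misclassified
print(categorical_accuracy(y_true, y_pred))  # 0.75
print(categorical_crossentropy(y_true, y_pred))
```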

Build and train the Bi-LSTM model

```python
# Build the model
model = Sequential()
# The first Bi-LSTM returns the full sequence so the second Bi-LSTM can consume it
model.add(Bidirectional(
    LSTM(nb_lstm_outputs, return_sequences=True),
    input_shape=(nb_time_steps, nb_input_vector)
))
model.add(Bidirectional(LSTM(nb_lstm_outputs)))
model.add(Dense(10, activation='softmax'))
```
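`Bidirectional` runs one LSTM forward over the 28 rows and a second, independently weighted LSTM over the rows in reverse, then concatenates the two outputs (Keras's default `merge_mode` is `'concat'`), so 30 units per direction become 60 features. With `return_sequences=True` the backward outputs are re-aligned to the original time order before concatenation. The NumPy sketch below illustrates this with random weights; `lstm_sequence`, `bidirectional` and `init` are hypothetical helpers, not Keras functions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_sequence(x_seq, W, U, b):
    """Hidden state at every time step, shape (time_steps, units)."""
    units = U.shape[0]
    h, c = np.zeros(units), np.zeros(units)
    outputs = []
    for x_t in x_seq:
        z = x_t @ W + h @ U + b
        i, f = sigmoid(z[:units]), sigmoid(z[units:2 * units])
        g, o = np.tanh(z[2 * units:3 * units]), sigmoid(z[3 * units:])
        c = f * c + i * g
        h = o * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs)

def bidirectional(x_seq, fwd_weights, bwd_weights, return_sequences=True):
    """Concatenate a forward LSTM and a backward LSTM along the feature axis."""
    fwd = lstm_sequence(x_seq, *fwd_weights)
    bwd = lstm_sequence(x_seq[::-1], *bwd_weights)[::-1]  # re-align backward outputs with time
    if return_sequences:
        return np.concatenate([fwd, bwd], axis=-1)        # (time_steps, 2*units)
    # Final states: forward ends at the last row, backward ends at the first row
    return np.concatenate([fwd[-1], bwd[0]])              # (2*units,)

def init(rng, input_dim, units):
    """Random (W, U, b) for one LSTM direction."""
    return (rng.normal(scale=0.1, size=(input_dim, 4 * units)),
            rng.normal(scale=0.1, size=(units, 4 * units)),
            np.zeros(4 * units))

rng = np.random.default_rng(0)
image = rng.random((28, 28))  # stand-in for one normalized image
layer1 = bidirectional(image, init(rng, 28, 30), init(rng, 28, 30), return_sequences=True)
layer2 = bidirectional(layer1, init(rng, 60, 30), init(rng, 60, 30), return_sequences=False)
print(layer1.shape, layer2.shape)  # (28, 60) (60,)
```

This is why the second `Bidirectional(LSTM(nb_lstm_outputs))` sees 60 input features per time step, and why the final `Dense` layer receives a 60-dimensional vector.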

```python
# Compile the model
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['acc'])

# Train the model
model.fit(x_train, y_train, epochs=20, batch_size=128)
```

Print the Bi-LSTM model summary

```python
model.summary()
```

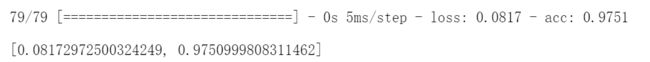
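The Bi-LSTM parameter counts follow from the same formula, doubled because the forward and backward LSTMs have separate weights (assuming Keras defaults, `use_bias=True`). Note that the second layer's input is 60 features, the concatenated output of the first layer. The helper names are hypothetical.

```python
def lstm_params(input_dim, units):
    """4 gates x (input weights + recurrent weights + bias)."""
    return 4 * (units * (input_dim + units) + units)

def bidirectional_lstm_params(input_dim, units):
    # Two independent LSTMs (forward and backward), each with its own weights
    return 2 * lstm_params(input_dim, units)

layer1 = bidirectional_lstm_params(28, 30)  # input: 28 pixels per row
layer2 = bidirectional_lstm_params(60, 30)  # input: 30 forward + 30 backward features
dense = 60 * 10 + 10                        # Dense(10) on the 60-dim output
print(layer1, layer2, dense, layer1 + layer2 + dense)  # 14160 21840 610 36610
```

Roughly five times as many parameters as the single-LSTM model (7,390) go into the accuracy gain reported below.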

Evaluate the Bi-LSTM model

```python
model.evaluate(x_test, y_test, batch_size=128)
```

Accuracy rose from 0.9751 to 0.9861.
