FlinkCDC系列02: Seatunnel-Flink-JDBC-Sink如何实现Update

首先阅读Apache Seatunnel官网的关于Flink-JDBC-Sink的文档格式

JdbcSink {

source_table_name = fake

driver = com.mysql.jdbc.Driver

url = "jdbc:mysql://localhost/test"

username = root

query = "insert into test(name,age) values(?,?)"

batch_size = 2

}首先要吐槽一下就是官网的这个文档几乎什么都没说啊。

Seatunnel-2.1.1-flink-jdbc-sink

这样子有个问题,就是我能不能实现类似spark-jdbc-sink中的update呢?一个只能处理新增不能处理修改的Sink是不合格的!

直接加saveMode这个参数是不行,因为代码里就没有这个参数,要知道到底支持什么参数,必须要直接阅读源码才行。

2.1.1版本Flink-jdbc-sink源代码

查看Config.java可知,根本没有saveMode这个参数。

进一步阅读Sink目录下的JdbcSink.java文件(核心代码)

从prepare这一段代码可知,还有个文档没写的参数叫做password(吐槽)

@Override

public void prepare(FlinkEnvironment env) {

driverName = config.getString(DRIVER);

dbUrl = config.getString(URL);

username = config.getString(USERNAME);

query = config.getString(QUERY);

if (config.hasPath(PASSWORD)) {

password = config.getString(PASSWORD);

}

if (config.hasPath(SINK_BATCH_SIZE)) {

batchSize = config.getInt(SINK_BATCH_SIZE);

}

if (config.hasPath(SINK_BATCH_INTERVAL)) {

batchIntervalMs = config.getLong(SINK_BATCH_INTERVAL);

}

if (config.hasPath(SINK_BATCH_MAX_RETRIES)) {

maxRetries = config.getInt(SINK_BATCH_MAX_RETRIES);

}

}接下来就是重点了,阅读关于stream数据和batch数据分别以JDBC方式写入的核心实现

@Override

public void outputStream(FlinkEnvironment env, DataStream dataStream) {

Table table = env.getStreamTableEnvironment().fromDataStream(dataStream);

TypeInformation[] fieldTypes = table.getSchema().getFieldTypes();

int[] types = Arrays.stream(fieldTypes).mapToInt(JdbcTypeUtil::typeInformationToSqlType).toArray();

SinkFunction sink = org.apache.flink.connector.jdbc.JdbcSink.sink(

query,

(st, row) -> JdbcUtils.setRecordToStatement(st, types, row),

JdbcExecutionOptions.builder()

.withBatchSize(batchSize)

.withBatchIntervalMs(batchIntervalMs)

.withMaxRetries(maxRetries)

.build(),

new JdbcConnectionOptions.JdbcConnectionOptionsBuilder()

.withUrl(dbUrl)

.withDriverName(driverName)

.withUsername(username)

.withPassword(password)

.build());

if (config.hasPath(PARALLELISM)) {

dataStream.addSink(sink).setParallelism(config.getInt(PARALLELISM));

} else {

dataStream.addSink(sink);

}

}

@Override

public void outputBatch(FlinkEnvironment env, DataSet dataSet) {

Table table = env.getBatchTableEnvironment().fromDataSet(dataSet);

TypeInformation[] fieldTypes = table.getSchema().getFieldTypes();

int[] types = Arrays.stream(fieldTypes).mapToInt(JdbcTypeUtil::typeInformationToSqlType).toArray();

JdbcOutputFormat format = JdbcOutputFormat.buildJdbcOutputFormat()

.setDrivername(driverName)

.setDBUrl(dbUrl)

.setUsername(username)

.setPassword(password)

.setQuery(query)

.setBatchSize(batchSize)

.setSqlTypes(types)

.finish();

dataSet.output(format);

}

相关的api文档:

setRecordToStatement

Sink

简单的概括一下,在流式数据源中,需要一个query语句和一个statement装配器,flink程序会验证?的数量,并且按照顺序把row中数据装配进去。

在批处理中则是直接加进setQuery中了。

那么要如何实现Update呢?网上的答复基本上都是建议使用Table API(废话,我要是准备自己实现就不会用Seatunnel了!)

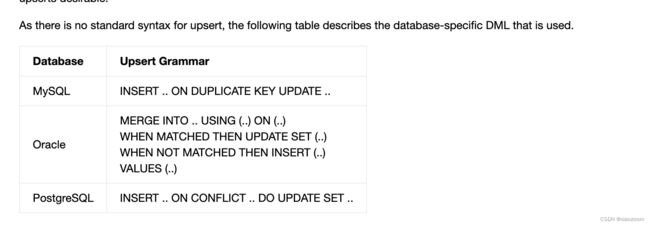

Flink的JDBC Connector是这么写的,如果定义了primary key,那么就可以以upsert的语法进行插入,然后我找了半天也不知道怎么在JdbcSink这个Sink代码里加入相关内容。

那么,既然query是直接进装配器的,那么可以不可以直接通过写一段?数量相同的upsert语句呢?

是可以的。

最终语句如下:

source {

# This is a example input plugin **only for test and demonstrate the feature input plugin**

FakeSourceStream {

result_table_name = "fake"

field_name = "name,age"

}

# If you would like to get more information about how to configure seatunnel and see full list of input plugins,

# please go to https://seatunnel.apache.org/docs/flink/configuration/source-plugins/Fake

}

sink {

JdbcSink {

source_table_name = fake

driver = "com.mysql.cj.jdbc.Driver"

url = "jdbc:mysql://192.168.SomeRandomIp:3306/data_for_test"

username = "root"

password = "Dont Try to Guess My Password"

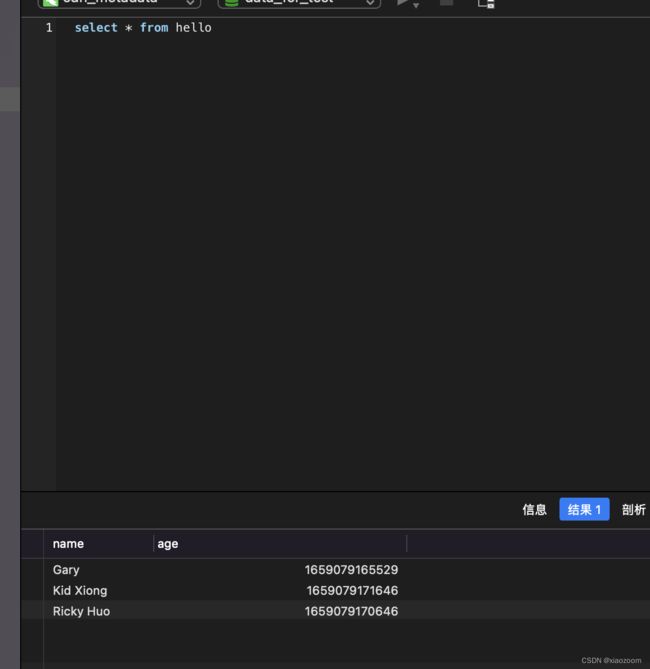

query = "insert into hello(name,age) values(?,?) on duplicate key update age=ifnull(VALUES (age), age)"

batch_size = 2

}}接上默认的FakeDataStream后实现效果如下: