论文翻译《FSCE: Few-Shot Object Detection via Contrastive Proposal Encoding》

论文地址:https://arxiv.org/pdf/2103.05950.pdf

代码地址:https://github.com/megvii-research/FSCE

目录

- Abstract

- 1、Introduction

- 2、Related work

- 3、Method
  - 3.1、Preliminary
  - 3.2、Contrastive object proposal encoding
  - 3.3、Contrastive Proposal Encoding (CPE) Loss

- 4、Experiments
  - 4.1、Few-shot detection benchmarks
  - 4.2、Few-shot detection results
  - 4.3、Ablation 消融实验

- 5、Conclusion

Abstract

Interest has been growing in recognizing previously unseen objects from very few training examples, a task known as few-shot object detection (FSOD). Recent research demonstrates that a good feature embedding is the key to favorable few-shot learning performance. We observe that object proposals with different Intersection-over-Union (IoU) scores are analogous to the intra-image augmentations used in contrastive visual representation learning. We exploit this analogy and incorporate supervised contrastive learning to obtain more robust object representations in FSOD. We present Few-Shot object detection via Contrastive proposals Encoding (FSCE), a simple yet effective approach to learning contrastive-aware object proposal encodings that facilitate the classification of detected objects. We notice that the degradation of average precision (AP) for rare objects mainly comes from misclassifying novel instances as confusable classes, and we ease this misclassification by promoting instance-level intra-class compactness and inter-class variance via our contrastive proposal encoding loss (CPE loss). Our design outperforms current state-of-the-art works in any shot and on all data splits, with up to +8.8% on the standard PASCAL VOC benchmark and +2.7% on the challenging COCO benchmark. Code is available at: https://github.com/megvii-research/FSCE.

近些年来,人们开始对仅用极少训练样本识别以前从未见过的物体产生兴趣,这一任务被称为小样本目标检测(FSOD)。最近的研究表明,良好的特征嵌入是获得良好小样本学习性能的关键。我们观察到,具有不同IoU分数的目标建议框类似于对比表示学习中使用的图像内增强。我们利用这种类比并结合监督对比学习,在FSOD中获得更健壮的目标表示。我们提出了基于对比建议编码的小样本目标检测方法(FSCE),这是一种简单而有效的方法,用于学习对比感知的目标建议框编码,有助于对检测到的目标进行分类。我们注意到,稀有目标的平均精度(AP)下降主要来自将新类实例错误分类为易混淆的类别。我们通过对比建议编码损失(CPE损失)提高实例级的类内紧凑性和类间差异,从而缓解了错误分类问题。我们的设计在任意shot和所有数据划分上都优于当前最先进的方法,在PASCAL VOC上最多提高+8.8%,在COCO上提高+2.7%。代码可从以下网址获取:https://github.com/MegviiDetection/FSCE.

1、Introduction

The development of modern convolutional neural networks (CNNs) [1, 2, 3] has given rise to great advances in general object detection [4, 5, 6]. Deep detectors demand a large amount of annotated training data to saturate their performance [7, 8]. In few-shot learning scenarios, deep detectors suffer from more severe over-fitting. The gap between few-shot detection and general object detection is also larger than the corresponding gap in few-shot image classification [9, 10, 11]. By contrast, a child can rapidly comprehend new visual concepts and recognize objects from a newly learned category given very few examples. Closing this gap is therefore an important step towards more successful machine perception [12].

现代卷积神经网络(CNN)[1,2,3]的发展使一般的目标检测[4,5,6]取得了巨大进展。深度检测器需要大量带注释的训练数据来饱和其性能[7,8]。在小样本学习场景下,深度检测器过拟合严重,小样本检测和一般目标检测之间的差距大于小样本图像分类中相应的差距[9,10,11]。相反,一个孩子可以在只给出很少的例子的情况下迅速理解新的视觉概念,并从一个新学习的类别中识别物体。因此,缩小这种差距是迈向更成功的机器感知[12]的重要一步。

Following the precedent of few-shot image classification, earlier attempts at few-shot object detection adopt a meta-learning strategy [13, 14, 15]. Meta-learners are trained on episodes of individual tasks: each meta-task pairs samples of common objects (base classes) with rare objects (novel classes) to simulate a few-shot detection task. Recently, the two-stage fine-tune based approach (TFA) has shown more potential for improving few-shot detection. Baseline TFA [16] simply freezes all parameters trained on base classes and fine-tunes only the box classifier and box regressor with novel data, yet it outperforms previous meta-learners. MPSR [17] improves upon TFA by alleviating the scale bias inherent to few-shot datasets. However, its positive refinement branch requires manual selection, which makes the method less clean. In this work, we observe and address the essential weakness of the fine-tuning based approach, namely that it constantly mislabels novel instances as confusable categories. We improve few-shot detection performance to a new state of the art (SOTA).

借鉴小样本图像分类的做法,小样本目标检测的早期尝试采用元学习策略[13,14,15]。元学习器使用一系列独立任务进行训练,元任务样本来自常见对象(基类),与稀有对象(新类)配对,以模拟小样本检测任务。近年来,基于两阶段微调的方法(TFA)在改进小样本检测方面显示出更大的潜力。基线TFA[16]只是简单地冻结了所有基类训练的参数,并使用新数据微调box分类器和box回归器,但性能优于以前的元学习器。MPSR[17]通过减轻小样本数据集固有的尺度偏差来改进TFA,但它的正向细化分支需要手动选择,这有点不太简洁。在这项工作中,我们观察并解决了基于微调的方法的本质弱点,即不断将新实例错误标记为易混淆的类别,并将小样本检测性能提高到新的最先进水平(SOTA)。

Object detection involves the localization and classification of the objects that appear in an image. In few-shot detection, one might naturally conjecture that the localization of novel objects will underperform that of base categories, because rare objects could be deemed background [14, 13, 18]. Faster R-CNN [4] is the detector commonly adopted in few-shot detection. Our experiments with it show that the class-agnostic region proposal network (RPN) is able to make foreground proposals for novel instances. The final box regressor can also localize novel instances quite accurately. In comparison, as demonstrated in Figure 2, misclassifying detected novel instances as confusable base classes is the main source of error. We visualize the pairwise cosine similarity between the class prototypes [19, 20, 21] of a Faster R-CNN box classifier trained on PASCAL VOC [22, 23]. The cosine similarity between prototypes from similar categories can be 0.39, whereas the similarity between objects and background is on average -0.21. In the few-shot setting, the similarity between cluster centers can go as high as 0.59 (e.g., between sheep and cow, or bicycle and motorbike), making classification of similar objects error-prone. By our calculation on baseline TFA, manually correcting box predictions that are misclassified yet accurately localized would increase novel-class average precision (nAP) by over 20 points.

目标检测包括对出现的目标进行定位和分类。在小样本检测中,人们可能会自然而然地猜测,新类目标的定位精度将低于基类目标,因为稀有目标可能会被视为背景[14,13,18]。但是,我们在小样本检测常用的Faster R-CNN[4]上的实验表明,类别无关的区域建议网络(RPN)能够为新实例提出前景建议框,最终的box回归器也可以相当准确地对新实例进行定位。相比之下,如图2所示,将检测到的新实例错误分类为易混淆的基类才是错误的主要来源。我们可视化了在PASCAL VOC[22,23]上训练的Faster R-CNN box分类器的类原型[19,20,21]之间的成对余弦相似度。来自相似类别的原型之间的余弦相似度可以达到0.39,而目标和背景之间的相似度平均为−0.21。在小样本设置下,聚类中心之间的相似度可以高达0.59,例如羊和牛、自行车和摩托车之间,这使得相似物体的分类容易出错。我们在基线TFA上计算发现,手动纠正分类错误但定位精确的预测框,可以将新类平均精度(nAP)提高20个点以上。
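The prototype-similarity analysis above can be sketched in plain Python as follows. This is a minimal illustration only. It assumes that a class prototype is the class's weight vector in the box classifier. The helper names `cosine` and `prototype_similarity` and the toy 3-d vectors are hypothetical: they are not taken from the FSCE code or from a trained model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def prototype_similarity(prototypes):
    """Pairwise cosine-similarity matrix between class prototypes.

    `prototypes` maps a class name to its prototype vector, assumed here to be
    the class's weight vector w_j in the box classifier.
    """
    names = list(prototypes)
    return {(a, b): cosine(prototypes[a], prototypes[b]) for a in names for b in names}


# Toy 3-d prototypes, purely illustrative (not weights from a trained detector).
protos = {
    "cow": [0.9, 0.4, 0.1],
    "horse": [0.8, 0.5, 0.0],
    "bus": [-0.2, 0.1, 0.9],
}
sim = prototype_similarity(protos)
for pair in [("cow", "horse"), ("cow", "bus")]:
    print(pair, round(sim[pair], 2))
```

A high value for a pair such as (cow, horse) is the kind of prototype overlap that makes those classes easy to confuse.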

Figure 2. We find that in a fine-tuning based few-shot object detector, classification is more error-prone than localization. In the fine-tuning stage, the RPN is able to make good enough foreground proposals for novel instances. As a result, novel objects are often accurately localized but misclassified as confusable base classes. Shown here are the 20 top-scoring RPN proposals and example detection results from PASCAL VOC Split 1, where bird, sofa and cow are novel categories. The left panel shows the pairwise cosine similarity between the class prototypes learned in the bounding box classifier. For example, the similarity between bus and bird is -0.10, but the similarity between cow and horse is 0.39. Our goal is to decrease the instance-level similarity between similar objects from different categories.

图2。我们发现在基于微调的小样本目标检测器中,分类比定位更容易出错。在微调阶段,RPN能够为新实例提供足够好的前景建议框,因此新目标常被精确定位,但被错误地归类为易混淆的基类。这里显示了20个得分最高的RPN建议框和PASCAL VOC Split 1的示例检测结果,其中鸟、沙发和牛是新类别。左侧面板显示在边界框分类器中学习到的类原型之间的成对余弦相似度。例如,公共汽车和鸟之间的相似度为-0.10,而牛和马之间的相似度为0.39。我们的目标是降低来自不同类别的相似目标之间的实例级相似度。

A common approach to learning a well-separated decision boundary is to use a large-margin classifier [24]. In our trials, however, category-level positive-margin based classifiers do not work in this data-scarce setting [20, 25]. Contrastive learning [26, 27] learns instance-level discriminative feature representations. It has demonstrated its effectiveness in tasks including recognition [28], identification [29] and the recent successful self-supervised models [30, 31, 32, 33]. In supervised contrastive learning for image classification [34], intra-image augmentations of images from the same class are used to enrich the positive example pairs. We think region proposals with different Intersection-over-Union (IoU) for an object are naturally analogous to intra-image augmentation by cropping, as illustrated in Figure 1. Therefore, in this work we extend the supervised batch contrastive approach [34] to few-shot object detection. We believe that contrastively learned object representations, being aware of intra-class compactness and inter-class difference, can ease the misclassification of unseen objects as similar categories.

学习良好分离的决策边界的一种常用方法是使用大间隔分类器[24],但在我们的实验中,基于类别级正间隔的分类器在这种数据极少的环境中不起作用[20,25]。为了学习实例级的判别特征表示,对比学习[26,27]已经在包括认知[28]、识别[29]和最近成功的自监督模型[30,31,32,33]在内的任务中证明了其有效性。在图像分类的监督对比学习[34]中,使用来自同一类别的图像的图像内增强来丰富正样本对。我们认为,对于一个目标,具有不同IoU的区域建议框自然类似于图像内增强中的裁剪,如图1所示。因此,在本工作中,我们将监督批量对比方法[34]扩展到小样本目标检测。我们相信,感知类内紧凑性和类间差异的对比学习目标表示,可以缓解将新类目标错误分类为相似类别的问题。
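To make the IoU analogy concrete, here is a minimal sketch of box IoU for axis-aligned boxes. The sketch assumes boxes are given in (x1, y1, x2, y2) format. Proposals that cover the same object with different offsets get different IoU scores, much like different random crops of one image. The function name `box_iou` and the toy boxes are illustrative.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # 0 if boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


gt = (0, 0, 10, 10)  # ground-truth box of one object
# Proposals that shift or crop the same object, plus one unrelated box.
proposals = [(0, 0, 10, 10), (1, 1, 10, 10), (0, 0, 5, 10), (20, 20, 30, 30)]
ious = [box_iou(p, gt) for p in proposals]
print(ious)
```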

Figure 1. Conceptualization of our contrastive object proposal encoding. We introduce a score function that measures the semantic similarity between region proposals. Positive proposals (x+) refer to region proposals from the same category or the same object. Negative proposals (x−) refer to proposals from different categories. We encourage the object encodings to satisfy score(f(x), f(x+)) >> score(f(x), f(x−)), so that our contrastively learned object proposals have smaller intra-class variance and larger inter-class difference.

图1。对比目标建议框编码的概念示意图。我们引入了一个分数函数来衡量区域建议框之间的语义相似度。正建议框(x+)是指来自同一类别或同一目标的区域建议框。负建议框(x−)是指来自不同类别的区域建议框。我们鼓励目标编码满足 score(f(x),f(x+)) >> score(f(x),f(x−)),这样通过对比学习得到的目标建议框具有更小的类内方差和更大的类间差异。

We present Few-Shot object detection via Contrastive proposals Encoding (FSCE), a simple yet effective fine-tune based approach for few-shot object detection. When transferring the base detector to few-shot novel data, we augment the primary Region-of-Interest (RoI) head with a contrastive branch that measures the similarity between object proposal encodings. We optimize a supervised contrastive objective with specific considerations for detection. It reduces the variance of object proposal embeddings from the same category, while pushing instances of different categories away from each other. The proposed contrastive objective, the contrastive proposal encoding (CPE) loss, is added to the original classification and localization objectives in a multi-task fashion. End-to-end training of our method is identical to that of vanilla Faster R-CNN.

我们提出了一种基于对比建议编码(FSCE)的小样本目标检测方法,这是一种简单而有效的基于微调的小样本目标检测方法。在将基本检测器转移到小样本的新数据时,在ROI-head增加一个对比分支,该对比分支度量目标建议编码之间的相似性。将优化具有特定检测考虑的监督对比目标,以减少来自同一类别的目标建议框提取特征的差异,同时使不同类别实例彼此远离。所提出的对比目标,也就是对比建议编码(CPE)损失,以多任务方式应用于原始分类和定位目标。我们提出的方法的端到端训练与Faster R-CNN相同。

To the best of our knowledge, we are the first to bring contrastive learning into few-shot object detection. Our simple design sets a new state of the art in any shot (1, 2, 3, 5, 10, and 30), with up to +8.8% on the standard PASCAL VOC benchmark and +2.7% on the challenging COCO benchmark.

据我们所知,我们是第一个将对比学习引入小样本目标检测的人。我们简单的设计在任何shot(1、2、3、5、10和30)中都取得了最先进的成果,在标准PASCAL VOC上提高+8.8%,在具有挑战性的COCO上提高+2.7%。

2、Related work

Few-shot learning. Few-shot learning aims to recognize new concepts given limited labeled examples. Meta-learning approaches train a meta-model on episodes of individual tasks so that it can adapt to new tasks with few samples [35, 11, 36, 10, 37, 38, 39], an idea known as “learning-to-learn”. Deep metric-learning based approaches emphasize learning good feature embeddings that facilitate downstream tasks. The most intuitive metrics include cosine similarity [20, 40, 41, 21], Euclidean distance to class centers [19], and graph distances [42]. Interestingly, hallucinator-based methods address the data deficiency by learning to generate fake data [9]. Existing few-shot learners are mostly developed in the context of classification. In comparison, few-shot detection is more challenging because it involves both classification and localization, yet it remains under-researched.

**小样本学习。**小样本学习的目的是在有限的标记示例中识别新类。元学习方法的目的是在单个任务的片段上训练元模型,使其能够适应样本较少的新任务[35,11,36,10,37,38,39],被称为“学会学习”。基于深度度量学习的方法强调学习良好的特征表示嵌入,以促进接下来的任务。最直观的度量指标包括余弦相似度[20,40,41,21],到类中心的欧氏距离[19]和图距离[42]。有趣的是,基于虚拟数据的方法通过学习生成假数据[9]来解决数据缺陷。现有的小样本学习器大多是在分类的背景下发展起来的。相比之下,小样本检测更具有挑战性,因为它涉及分类和定位,但研究尚不充分。

Few-shot object detection. There are two lines of work addressing the challenging few-shot object detection (FSOD) problem. First, meta-learning based approaches devise a stage-wise and periodic meta-training paradigm to train a meta-learner that helps transfer knowledge from base classes. Meta R-CNN [13] meta-learns a channel-wise attention layer to remodel the RoI head. MetaDet [14] applies a weight-prediction meta-model to dynamically transfer category-specific parameters from the base detector. FSIW [15] improves upon Meta R-CNN and FSRW [43] with more complex feature aggregation and meta-training on a balanced dataset. Second, since the balanced dataset was introduced in TFA [16], fine-tune based detectors have been outperforming meta-learning based methods. MPSR [17] sets the current state of the art by mitigating scale scarcity in few-shot datasets. However, its generalizability is limited because its positive refinement branch involves manual decisions. RepMet [44] attaches an embedding sub-net to the RoI head to model a posterior class distribution. It uses advanced tricks including OHEM [45] and SoftNMS [46] but fails to catch up with the current SOTA. We are critical of complex algorithms because they can easily overfit and produce poor test results in FSOD. Instead, our insight is that the degradation of average precision (AP) for novel categories mainly comes from misclassifying novel instances as confusable categories. We therefore resort to contrastive learning to learn discriminative object proposal representations without complicating the model.

**小样本目标检测。**有两类工作致力于解决具有挑战性的小样本目标检测(FSOD)问题。首先,基于元学习的方法设计了一个阶段性和周期性的元训练范式来训练元学习器,以帮助从基类迁移知识。Meta R-CNN[13]元学习一个通道方向的注意力层来重塑RoI head。MetaDet[14]应用权重预测元模型从基础检测器动态迁移类别特定参数。FSIW[15]通过更复杂的特征聚合以及在平衡数据集上的元训练,改进了Meta R-CNN和FSRW[43]。随着TFA[16]中引入的平衡数据集,基于微调的检测器在性能上逐渐超越基于元学习的方法,MPSR[17]通过缓解小样本数据集中的尺度稀缺问题达到了当前最先进的水平,但其泛化性受到限制,因为正向细化分支包含手动决策。RepMet[44]在RoI head中添加了一个嵌入子网来建模后验类别分布。它利用了OHEM[45]和SoftNMS[46]等先进的技巧,但未能赶上当前的SOTA。我们对复杂的算法持批评态度,因为它们很容易过拟合,在FSOD中表现出较差的测试结果。相反,我们在这里的见解是,新类别平均精度(AP)的下降主要来自于将新实例误分类为易混淆的类别,因此我们采用对比学习来学习判别性的目标建议框表示,而不使模型复杂化。

Contrastive learning. The recent success of self-supervised models can be attributed to renewed interest in contrastive learning [47, 30, 48, 49, 32, 50, 33, 51]. Optimizing a contrastive objective [48, 20, 21, 34] does two things at once. It maximizes the agreement between similar instances, defined as positive pairs, and it encourages the difference among dissimilar instances, or negative pairs. With contrastive learning, the algorithm learns representations that do not concentrate on pixel-level details. Instead, they encode high-level features effective enough to distinguish different images [33, 32, 50, 51]. Supervised contrastive learning [34] extends the batch contrastive approach to the supervised setting, but only for image classification.

**对比学习。**最近自监督模型的成功可以归因于人们对对比学习重新产生的兴趣[47, 30, 48, 49, 32, 50, 33, 51]。优化对比目标[48,20,21,34]会同时最大化被定义为正样本对的相似实例之间的一致性,并增大不相似实例(即负样本对)之间的差异。通过对比学习,算法学习到的表示不集中于像素级细节,而是编码足以区分不同图像的高级特征[33,32,50,51]。监督对比学习[34]将批量对比方法扩展到了监督设置,但仅用于图像分类。

To the best of our knowledge, this work is the first to integrate supervised contrastive learning [29, 34] into few-shot object detection. The state-of-the-art few-shot detection performance in any shot and on all benchmarks demonstrates the effectiveness of our proposed method.

据我们所知,这项工作是第一个将监督对比学习[29,34]集成到小样本目标检测中的工作。在任何shot和所有基准测试中,最先进的小样本检测性能证明了我们提出的方法的有效性。

3、Method

Our proposed method FSCE involves a simple two-stage training. First, the standard Faster R-CNN detection model is trained with abundant base-class data ($D_{train} = D_{base}$). Then, the base detector is transferred to novel data by fine-tuning on a balanced dataset [8]. This dataset contains novel instances and randomly sampled base instances ($D_{train} = D_{novel} \cup D_{base}$). The backbone feature extractor is frozen during fine-tuning, while the RoI feature extractor is supervised by a contrastive objective. We jointly optimize our proposed contrastive proposal encoding (CPE) loss with the original classification and regression objectives in a multi-task fashion. An overview of our method is shown in Figure 3.

我们提出的FSCE方法包括一个简单的两阶段训练。首先,使用丰富的基类数据($D_{train} = D_{base}$)训练Faster R-CNN检测模型。然后,将训练好的模型迁移到小数据集上。小数据集是由新类实例和随机抽样的基类实例组成的平衡数据集($D_{train} = D_{novel} \cup D_{base}$)。在微调过程中,主干特征提取网络被冻结,而RoI特征提取器则由一个对比目标监督。我们以多任务方式联合优化我们提出的对比建议编码(CPE)损失与原始分类和回归目标。我们的方法概述如图3所示。
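The balanced fine-tuning set $D_{train} = D_{novel} \cup D_{base}$ can be sketched as follows. This minimal version assumes that base classes are subsampled to the same K annotated instances per class as novel classes. It also ignores the fact that one image can contain several instances. `build_balanced_set` and the toy annotation list are hypothetical.

```python
import random
from collections import Counter, defaultdict


def build_balanced_set(annotations, novel_classes, base_classes, k, seed=0):
    """Sample up to K annotated instances per class for fine-tuning.

    `annotations` is a list of (image_id, class_name) pairs. Novel classes
    contribute their K shots, and base classes are randomly subsampled to K
    shots as well, so D_train = D_novel ∪ D_base stays class-balanced.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible few-shot split
    by_class = defaultdict(list)
    for image_id, cls in annotations:
        by_class[cls].append((image_id, cls))
    selected = []
    for cls in list(novel_classes) + list(base_classes):
        pool = by_class[cls]
        selected.extend(rng.sample(pool, min(k, len(pool))))
    return selected


# Toy annotation list: 50 instances for each base class, 3 for the novel class.
toy = [(i, "car") for i in range(50)] + [(i, "dog") for i in range(50)]
toy += [(100 + i, "cow") for i in range(3)]
train_set = build_balanced_set(toy, novel_classes=["cow"], base_classes=["car", "dog"], k=2)
print(Counter(cls for _, cls in train_set))
```

The abundant base classes shrink to the same budget as the novel class, which is what "balanced" refers to here.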

Figure 3. Overview of our proposed FSCE. In our method, we jointly fine-tune the FPN pathway and RPN while fixing the backbone. We find this is effective in coordinating backbone feature maps to activate on novel objects while still avoiding the risk of overfitting. To learn contrastive object proposal encodings, we introduce a contrastive branch that guides the RoI features to learn contrastive-aware proposal embeddings. We design a contrastive objective to maximize within-category agreement and cross-category disagreement.

图3。FSCE概述。在我们的方法中,在固定backbone的同时联合微调FPN和RPN。我们发现,这能有效地协调骨干特征图在新目标上激活,同时仍可避免过拟合的风险。为了学习对比目标建议框编码,我们引入了一个对比分支,引导RoI特征学习对比感知的建议框嵌入。我们设计了一个对比目标函数来最大化类内的一致性和类间的不一致性。

3.1、Preliminary

Rethink the two-stage fine-tuning approach. The original TFA [16] fine-tunes only the last two fc layers (the box classifier and box regressor) with novel data. The remaining structures are frozen and used as a fixed feature extractor. This can be viewed as a way to counter over-fitting on limited novel data. However, it is counter-intuitive that the Feature Pyramid Network (FPN [52]), the RPN, and especially the RoI feature extractor could be transferred directly to novel classes without any form of training. All of these contain semantic information learned from base classes only. In baseline TFA, unfreezing the RPN and RoI feature extractor leads to degraded results for novel classes. However, we find this behavior is reversible: if trained properly, these components can benefit novel detection results. We propose a stronger baseline that adapts much better to novel data by jointly fine-tuning the feature extractors and box predictors.

重新思考两阶段微调方法。 原始TFA[16]仅使用新数据对最后两个fc层(box分类器和box回归器)进行微调,其余结构被冻结并作为固定的特征提取器。这可以被视为一种缓解在有限新数据上过拟合的方法。然而,特征金字塔网络(FPN[52])、RPN,特别是只包含从基类中学习到的语义信息的RoI特征提取器,无需任何形式的训练就能直接迁移到新类,这是违反直觉的。在基线TFA中,解冻RPN和RoI特征提取器会导致新类的结果变差。然而,我们发现这种现象是可以逆转的,如果训练得当,反而有利于新类的检测结果。我们提出了一个更强的基线,通过联合微调特征提取器和框预测器来更好地适应新数据。

Strong baseline. We establish our strong baseline from the following observations. Initially, the detection performance for novel classes decreases as more network components are fine-tuned with novel shots. However, we notice a significant gap in the key RPN and RoI statistics between the data-abundant base training stage and the novel fine-tuning stage. As shown in Figure 4, the number of proposals from positive anchors in novel fine-tuning is only 1/4 of its base-training counterpart. The number of foreground proposals decreases accordingly. We observe that, especially at the beginning of fine-tuning, the positive anchors for novel objects receive comparatively low objectness scores from the RPN. Because of these low scores, fewer positive anchors survive non-maximum suppression (NMS). Only the survivors become proposals that give the RoI head actual learning opportunities for novel objects. Our insight is to rescue the suppressed positive anchors with low objectness. Besides, re-balancing the fraction of foreground proposals is also critical. It prevents the diffuse yet easy backgrounds from dominating gradient descent for novel instances during fine-tuning.

更强大的基线。 我们根据以下观察结果建立了更强的基线。最初,随着更多网络组件使用新类样本进行微调,新类的检测性能会降低。然而,我们注意到,在数据丰富的基础训练阶段和新类微调阶段之间,关键的RPN和RoI统计数据存在显著差距。如图4所示,在微调阶段,来自正样本锚框的建议框数量只有基础训练时的1/4,前景建议框的数量也随之减少。我们观察到,特别是在微调开始阶段,新目标的正样本锚框从RPN获得的目标性得分(objectness score)相对较低。由于目标性得分较低,只有较少的正样本锚框能通过非极大值抑制(NMS)的筛选成为建议框,为RoI head提供学习新类别的机会。我们的想法是挽救这些被抑制的低目标性得分正样本锚框。此外,重新平衡前景建议框的比例也至关重要,可以防止分散但容易的背景在微调中主导新实例的梯度下降。

Figure 4. Key detection statistics. The left panel shows the average number of positive anchors per image in the RPN during base training and the novel fine-tuning stage. The right panel shows the average number of foreground proposals per image during fine-tuning. On the left, the orange line shows the original TFA setting, which uses the same specs as base training. On the right, the blue line shows the effect of doubling the number of anchors kept after NMS in the RPN, and the gray line shows the effect of halving the RoI head batch size.

图4。关键检测统计信息。左图显示了在基础训练和新的微调阶段,RPN中每个图像的positive anchors的平均数量。右图显示了微调期间每张图像的前景建议的平均数量。在左侧,橙色线显示原始TFA设置,该设置使用与基础训练相同的规格。在右边,蓝线显示RPN中NMS后保留的锚数量增加了一倍,灰线显示RoI head batch大小减少了一半。

We unfreeze the RPN and RoI head and make two modifications:

(1) We double the maximum number of proposals kept after NMS, which brings more foreground proposals for novel instances.

(2) We halve the number of sampled proposals in the RoI head used for loss computation. In the fine-tuning stage the discarded half contains only backgrounds: the standard RoI batch size is 512, and the number of foreground proposals is far less than half of it.

As shown in Table 1, our strong baseline improves on baseline TFA by non-trivial margins. Moreover, the tunable RoI feature extractor opens up room for realizing our proposed contrastive object proposal encoding.

我们解冻RPN和RoI,并进行了两个修改:(1)将NMS后保留的最大建议框数量增加一倍,这将为新实例带来更多前景建议框;(2)将RoI head中用于计算损失的抽样建议框数量减半,因为在微调阶段,被丢弃的一半只包含背景(标准RoI batch大小为512,而前景建议框的数量远远少于其一半)。如表1所示,我们的强基线以明显的幅度超过了基线TFA。此外,可调的RoI特征提取器为实现我们提出的对比目标建议框编码提供了空间。
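The two modifications can be summarized as a small config transform. This is a sketch under stated assumptions:

- The field names in `StageConfig` are hypothetical. Real detection frameworks expose similar knobs under their own names.
- The base value of 1000 post-NMS proposals is an assumed example; the text only gives the RoI batch size of 512.
- 32 foreground RoIs is a made-up count.
- The freezing pattern follows Figure 3: backbone fixed, FPN, RPN and RoI head fine-tuned.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class StageConfig:
    # Hypothetical field names for illustration only.
    rpn_post_nms_topk: int  # proposals kept per image after RPN NMS
    roi_batch_size_per_image: int  # RoIs sampled per image for the RoI-head losses
    freeze_backbone: bool
    freeze_fpn_rpn_roi: bool


def strong_baseline(base: StageConfig) -> StageConfig:
    """Derive the fine-tuning config from the base-training config."""
    return replace(
        base,
        rpn_post_nms_topk=base.rpn_post_nms_topk * 2,  # (1) keep twice as many proposals
        roi_batch_size_per_image=base.roi_batch_size_per_image // 2,  # (2) sample half as many RoIs
        freeze_backbone=True,  # backbone stays fixed
        freeze_fpn_rpn_roi=False,  # FPN, RPN and RoI head are fine-tuned
    )


def foreground_fraction(num_foreground: int, roi_batch_size: int) -> float:
    """Share of sampled RoIs that are foreground (capped by the batch size)."""
    return min(num_foreground, roi_batch_size) / roi_batch_size


base_cfg = StageConfig(rpn_post_nms_topk=1000, roi_batch_size_per_image=512,
                       freeze_backbone=False, freeze_fpn_rpn_roi=False)
ft_cfg = strong_baseline(base_cfg)
print(ft_cfg)
print(foreground_fraction(32, base_cfg.roi_batch_size_per_image),
      foreground_fraction(32, ft_cfg.roi_batch_size_per_image))
```

When the foreground count stays the same, halving the RoI batch doubles the foreground share of the loss. This is the re-balancing effect described above.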

Table 1. Novel detection performance of our strong baseline on PASCAL VOC Novel Split 1.

表1。我们的强基线在PASCAL VOC Novel Split 1上的新类检测性能。

3.2、Contrastive object proposal encoding

In two-stage detection frameworks, the RPN takes backbone feature maps as inputs and generates region proposals. The RoI head then classifies each region proposal and regresses a bounding box if the proposal is predicted to contain an object. In the Faster R-CNN pipeline, the RoI head feature extractor first pools the region proposals to a fixed size. It then encodes them as vector embeddings $x \in \mathbb{R}^{D_R}$, known as the RoI features. Typically $D_R = 1024$ in Faster R-CNN w/ FPN. General detectors fail to establish robust feature representations for region proposals from limited shots. As a result, they mislabel localized objects and reach low average precision. The idea is to learn more discriminative object proposal embeddings. According to our experiments, however, the category-level positive-margin classifier [20, 25] does not work in this data-hungry setting. To learn more robust object feature representations from fewer shots, we propose to apply batch contrastive learning [34]. It explicitly models the instance-level intra-class similarity and inter-class distinction [29, 26] of object proposal embeddings.

在两阶段检测框架中,RPN以骨干特征图为输入,生成区域建议框,然后RoI head对每个区域建议框进行分类,如果预测其包含目标,则对边界框进行回归。在Faster R-CNN中,RoI head特征提取器首先将区域建议框池化到固定大小,然后将其编码为向量嵌入$x \in \mathbb{R}^{D_R}$,称为RoI特征。在Faster R-CNN w/ FPN中,通常$D_R = 1024$。常规检测器无法从少量样本中为区域建议框建立稳健的特征表示,导致已定位的目标被错误标记、平均精度低。我们的想法是学习更具判别性的目标建议框嵌入,但根据我们的实验,类别级的正间隔分类器[20,25]在这种数据极少的环境中不起作用。为了从更少的样本中学习更健壮的目标特征表示,我们建议应用批量对比学习[34]来显式建模目标建议框嵌入的实例级类内相似性和类间差异[29,26]。

To incorporate contrastive representation learning into the Faster R-CNN framework, we introduce a contrastive branch to the primary RoI head, parallel to the classification and regression branches. The RoI feature vector $x$ contains post-ReLU [53] activations and is thus truncated at zero. Because of this, the similarity between two proposal embeddings cannot be measured directly. Therefore, the contrastive branch applies a 1-layer multi-layer perceptron (MLP) head with negligible cost. It encodes the RoI feature into a contrastive feature $z \in \mathbb{R}^{D_C}$; by default $D_C = 128$. We then measure similarity scores between object proposal representations on these MLP-head-encoded RoI features. We optimize a contrastive objective that maximizes the agreement between object proposals from the same category and promotes the distinctiveness of proposals from different categories. The proposed contrastive loss for object detection is described in the next section.

为了将对比表示学习纳入Faster R-CNN框架,我们在主RoI head中引入了一个对比分支,与分类和回归分支平行。RoI特征向量$x$包含post-ReLU[53]激活值,因此在零处被截断,所以无法直接测量两个建议框嵌入之间的相似性。因此,对比分支使用一个成本可以忽略不计的1层多层感知器(MLP)头,将RoI特征编码为对比特征$z \in \mathbb{R}^{D_C}$,默认情况下$D_C = 128$。随后,我们在MLP头编码后的RoI特征上测量目标建议框表示之间的相似性分数,并优化对比目标,以最大化来自同一类别的目标建议框之间的一致性,并提高来自不同类别建议框的区分度。下一节将介绍所提出的用于目标检测的对比损失。
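Here is a minimal sketch of such a contrastive branch. It assumes a single linear layer with Gaussian initialization; the exact MLP layout and initialization are not given in the text. The names `ContrastiveHead`, `linear` and `l2_normalize` are hypothetical, and plain Python lists are used so the sketch runs without a deep-learning framework. The sketch shows why the projection helps. The post-ReLU input has no negative entries, so the cosine similarity between two raw RoI features can never be negative. The projected feature $z$, by contrast, can take either sign.

```python
import math
import random


def linear(weight, bias, x):
    """y = W x + b, with W stored as a list of rows."""
    return [sum(w * a for w, a in zip(row, x)) + b for row, b in zip(weight, bias)]


def l2_normalize(v, eps=1e-12):
    """Project v onto the unit hypersphere (the z-tilde used in Eq. (3))."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / max(norm, eps) for a in v]


class ContrastiveHead:
    """Map a post-ReLU RoI feature x in R^{D_R} to a contrastive feature z in R^{D_C}.

    Assumption: a single linear projection with Gaussian init; the text only
    specifies a "1-layer MLP" head, so the exact layout here is illustrative.
    """

    def __init__(self, d_r=1024, d_c=128, seed=0):
        rng = random.Random(seed)
        std = 1.0 / math.sqrt(d_r)
        self.weight = [[rng.gauss(0.0, std) for _ in range(d_r)] for _ in range(d_c)]
        self.bias = [0.0] * d_c

    def __call__(self, x):
        return linear(self.weight, self.bias, x)


rng = random.Random(1)
x = [max(0.0, rng.gauss(0.0, 1.0)) for _ in range(1024)]  # post-ReLU: all entries >= 0
z = ContrastiveHead()(x)
print(len(z), min(x) >= 0, min(z) < 0)
```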

We adopt a cosine similarity based bounding box classifier, where the logit to predict the $i$-th instance as the $j$-th class is computed by the scaled cosine similarity between the RoI feature $x_i$ and the class weight $w_j$ in the hypersphere,

$$\mathrm{logit}_{i,j} = \alpha \frac{x_i^{T} w_j}{\|x_i\| \cdot \|w_j\|}, \tag{1}$$

我们采用基于余弦相似度的边界框分类器,将第$i$个实例预测为第$j$类的logit由超球面上RoI特征$x_i$与类别权重$w_j$之间的缩放余弦相似度计算得到,

$$\mathrm{logit}_{i,j} = \alpha \frac{x_i^{T} w_j}{\|x_i\| \cdot \|w_j\|}, \tag{1}$$

$\alpha$ is a scaling factor to enlarge the gradient. We empirically fix $\alpha = 20$ in our experiments. The proposed contrastive branch guides the RoI head to learn contrastive-aware object proposal embeddings, which ease the discrimination between different categories. In the cosine-projected hypersphere, our contrastive object proposal embeddings form tighter clusters with enlarged distances between different clusters, therefore increasing the generalizability of the detection model in the few-shot setting.

$\alpha$是放大梯度的缩放因子。我们在实验中根据经验设定$\alpha = 20$。所提出的对比分支引导RoI head学习对比感知的目标建议框嵌入,从而使不同类别更容易区分。在余弦投影超球面中,我们的对比目标建议框嵌入形成更紧密的簇,不同簇之间的距离更大,从而提高了检测模型在小样本设置下的泛化性。
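Eq. (1) can be sketched in a few lines of plain Python. The helper names and toy class weights are hypothetical. The usage below also shows why the scaling factor $\alpha$ matters. A cosine lies in [-1, 1], so without scaling the softmax over classes stays soft even for a perfectly aligned feature.

```python
import math


def cosine_logits(x, class_weights, alpha=20.0):
    """Eq. (1): logit_{i,j} = alpha * x_i^T w_j / (||x_i|| * ||w_j||) for one RoI feature."""
    norm_x = math.sqrt(sum(a * a for a in x))
    logits = []
    for w in class_weights:
        norm_w = math.sqrt(sum(b * b for b in w))
        logits.append(alpha * sum(a * b for a, b in zip(x, w)) / (norm_x * norm_w))
    return logits


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


x_i = [1.0, 0.0]
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # toy class weights w_j
print(cosine_logits(x_i, W))                         # alpha = 20
print(softmax(cosine_logits(x_i, W, alpha=1.0))[0])  # unscaled cosine
print(softmax(cosine_logits(x_i, W))[0])             # scaled cosine
```

With these toy weights, the unscaled logits give the correct class a probability of only about 0.67, while $\alpha = 20$ pushes it very close to 1. This matches the role of $\alpha$ described above. The logits are also unchanged if $x_i$ is rescaled, since only its direction matters.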

3.3、Contrastive Proposal Encoding (CPE) Loss

Inspired by supervised contrastive objectives in classification [34] and identification [29], our CPE loss is defined as follows, with considerations tailored for detection. Concretely, consider a mini-batch of $N$ RoI box features $\{z_i, u_i, y_i\}_{i=1}^{N}$. Here $z_i$ is the contrastive-head-encoded RoI feature for the $i$-th region proposal, $u_i$ denotes its Intersection-over-Union (IoU) score with the matched ground-truth bounding box, and $y_i$ denotes the label of that ground truth,

$$L_{CPE} = \frac{1}{N}\sum_{i=1}^{N} f(u_i) \cdot L_{z_i}, \tag{2}$$

$$L_{z_i} = \frac{-1}{N_{y_i}-1}\sum_{j=1, j \neq i}^{N} \mathbb{1}\{y_i = y_j\} \cdot \log \frac{\exp(\tilde{z}_i \cdot \tilde{z}_j / \tau)}{\sum_{k=1}^{N} \mathbb{1}_{k \neq i} \cdot \exp(\tilde{z}_i \cdot \tilde{z}_k / \tau)}, \tag{3}$$

$N_{y_i}$ is the number of proposals with the same label as $y_i$, and $\tau$ is the hyper-parameter temperature as in InfoNCE [48].

受分类[34]和识别[29]中监督对比目标的启发,我们的CPE损失定义如下,并考虑了专门针对检测的因素。具体地说,对于一个包含$N$个RoI框特征的mini-batch $\{z_i, u_i, y_i\}_{i=1}^{N}$,其中$z_i$表示对比头对第$i$个区域建议框编码得到的128维向量;$u_i$表示该建议框与匹配的真实框之间的IoU;$y_i$表示该真实框的标签,

$$L_{CPE} = \frac{1}{N}\sum_{i=1}^{N} f(u_i) \cdot L_{z_i}, \tag{2}$$

$$L_{z_i} = \frac{-1}{N_{y_i}-1}\sum_{j=1, j \neq i}^{N} \mathbb{1}\{y_i = y_j\} \cdot \log \frac{\exp(\tilde{z}_i \cdot \tilde{z}_j / \tau)}{\sum_{k=1}^{N} \mathbb{1}_{k \neq i} \cdot \exp(\tilde{z}_i \cdot \tilde{z}_k / \tau)}, \tag{3}$$

$N_{y_i}$是与$y_i$具有相同标签的建议框数量,$\tau$是InfoNCE[48]中的超参数温度。
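Here is a minimal plain-Python sketch of Eqs. (2) and (3), written for readability rather than speed. Three details are assumptions, not details stated in the text: the function name `cpe_loss`, the default $\tau = 0.2$, and skipping anchors that have no positive partner (where Eq. (3) would divide by zero). The per-proposal weights are the $f(u_i)$ values of Eq. (4).

```python
import math


def _normalize(v):
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def cpe_loss(z, labels, weights, tau=0.2):
    """Eqs. (2)-(3): supervised contrastive loss over N proposal embeddings.

    z       -- N contrastive features z_i (normalized inside, giving z-tilde)
    labels  -- N ground-truth labels y_i
    weights -- N proposal-consistency weights f(u_i), see Eq. (4)
    tau     -- temperature; 0.2 is an assumed value, not taken from the text
    """
    zt = [_normalize(v) for v in z]
    n = len(zt)
    total = 0.0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if weights[i] == 0.0 or not positives:
            # Assumption: an anchor without positives (N_{y_i} - 1 = 0) contributes 0.
            continue
        sims = [sum(a * b for a, b in zip(zt[i], zt[k])) / tau for k in range(n)]
        # log of the denominator sum_{k != i} exp(z~_i . z~_k / tau), computed stably
        others = [sims[k] for k in range(n) if k != i]
        m = max(others)
        log_denom = m + math.log(sum(math.exp(s - m) for s in others))
        l_zi = -sum(sims[j] - log_denom for j in positives) / len(positives)
        total += weights[i] * l_zi
    return total / n  # Eq. (2) averages over all N proposals


feats = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]
ones = [1.0] * 4
print(cpe_loss(feats, [0, 0, 1, 1], ones))  # same-label features close together
print(cpe_loss(feats, [0, 1, 0, 1], ones))  # same-label features far apart
```

In the toy batch, the loss is small when proposals that share a label already point in similar directions, and large when they do not. This is the pressure toward tighter per-class clusters.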

In the above formula, $\tilde{z}_i = \frac{z_i}{\|z_i\|}$ denotes the normalized feature, hence $\tilde{z}_i \cdot \tilde{z}_j$ measures the cosine similarity between the $i$-th and $j$-th proposals in the projected hypersphere. Optimizing the above loss function increases the instance-level similarity between object proposals with the same label and spaces proposals with different labels apart in the projection space. As a result, instances from each category form a tighter cluster, and the margins around the periphery of the clusters are enlarged. The effectiveness of our CPE loss is confirmed by t-SNE visualization, as shown in Figure 5 (a) and (b).

在上面的公式中,$\tilde{z}_i = \frac{z_i}{\|z_i\|}$表示归一化特征,因此$\tilde{z}_i \cdot \tilde{z}_j$测量投影超球面中第$i$个和第$j$个建议框之间的余弦相似度。优化上述损失函数会提高具有相同标签的目标建议框之间的实例级相似性,并在投影空间中将具有不同标签的建议框彼此推开。因此,每个类别的实例将形成一个更紧密的簇,簇外围的间隔将扩大。我们的CPE损失的有效性已通过t-SNE可视化得到证实,如图5(a)和(b)所示。

Figure 5. Conceptual illustration and t-SNE visualization of the object proposal embeddings learned with and without our CPE loss. Our CPE loss explicitly models the within-class similarity and cross-class distance. The t-SNE plot shows the proposal encodings from 200 randomly selected PASCAL VOC images. The right panel shows bad cases rescued by our contrastive-aware representations.

图5。使用和不使用CPE损失学习到的目标建议框嵌入的概念示意图与t-SNE可视化,我们的CPE损失显式地建模了类内相似性和类间差异。这里的t-SNE展示了随机选择的200张PASCAL VOC图像的建议框编码。右侧展示了我们的对比感知表示所纠正的错误案例。

Proposal consistency control. Unlike image classification, where semantic information comes from the entire image, classification signals in detection come from region proposals. We propose to use an IoU threshold to ensure the consistency of the proposals used for contrast. The reason is that low-IoU proposals deviate too much from the center of the regressed objects and therefore might contain irrelevant semantics. In the formula above, $f(u_i)$ controls the consistency of proposals. It is defined with a proposal consistency threshold $\phi$ and a re-weighting function $g(\cdot)$,

$$f(u_i) = \mathbb{1}\{u_i \geq \phi\} \cdot g(u_i), \tag{4}$$

$g(\cdot)$ assigns different weight coefficients to object proposals with different levels of IoU scores. We find $\phi = 0.7$ is a good cut-off, such that the contrastive head is trained with the most centered object proposals. Ablations regarding $\phi$ and $g$ are shown in Sec. 4.3.

建议框一致性控制。 与语义信息来自整个图像的图像分类问题不同,目标检测问题中的分类信号来自区域建议框。我们使用IoU阈值来确保用于对比的建议框的一致性,这是考虑到低IoU建议框偏离回归目标的中心太多,因此可能包含不相关的语义。在上面的公式中,$f(u_i)$用于控制建议框的一致性,由建议框一致性阈值$\phi$和重加权函数$g(\cdot)$定义,

$$f(u_i) = \mathbb{1}\{u_i \geq \phi\} \cdot g(u_i), \tag{4}$$

$g(\cdot)$为具有不同IoU分数水平的目标建议框分配不同的权重系数。我们发现$\phi = 0.7$是一个很好的分界点,这样对比头就可以用最靠近目标中心的建议框进行训练。关于$\phi$和$g(\cdot)$的消融研究见第4.3节。
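Eq. (4) can be sketched as follows. The default $\phi = 0.7$ follows the text. The candidate re-weighting functions `g_identity` and `g_exp` are hypothetical examples of $g(\cdot)$, not the specific functions evaluated in the ablation. The resulting values play the role of the per-proposal weights $f(u_i)$ in the CPE loss.

```python
import math


def consistency_weight(u, phi=0.7, g=None):
    """Eq. (4): f(u) = 1{u >= phi} * g(u), with g defaulting to a constant 1."""
    if u < phi:
        return 0.0  # low-IoU proposal: excluded from the contrastive loss
    return 1.0 if g is None else g(u)


# Hypothetical re-weighting functions, for illustration only.
def g_identity(u):
    return u


def g_exp(u):
    return math.exp(u)


ious = [0.55, 0.70, 0.85, 0.95]
print([consistency_weight(u) for u in ious])
print([round(consistency_weight(u, g=g_identity), 2) for u in ious])
```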

Training objectives. In the first stage, the base detector is trained with the standard Faster R-CNN loss [4]. This loss has three terms:

- a binary cross-entropy loss $L_{rpn}$ to make foreground proposals from anchors;
- a cross-entropy loss $L_{cls}$ for the bounding box classifier;
- a smoothed-L1 loss $L_{reg}$ for box regression deltas.

When transferring to novel data in the fine-tuning stage, we find that the contrastive loss can be added to the primary Faster R-CNN loss in a multi-task fashion without destabilizing training,

$$\mathbb{L} = L_{rpn} + L_{cls} + L_{reg} + \lambda L_{CPE}, \tag{5}$$

$\lambda$ is set to 0.5 to balance the scale of the losses.

训练目标。 在第一阶段,使用标准Faster R-CNN损失[4]训练基础检测器:$L_{rpn}$是二元交叉熵损失,用于从众多anchor中得到前景proposals;$L_{cls}$是交叉熵损失,用于边界框分类器;$L_{reg}$是smoothed-L1损失,用于box回归。在微调阶段迁移到新数据时,我们发现对比损失可以以多任务方式添加到主要的Faster R-CNN损失中,而不会破坏训练的稳定性,

$$\mathbb{L} = L_{rpn} + L_{cls} + L_{reg} + \lambda L_{CPE}, \tag{5}$$

$\lambda$设置为0.5,以平衡各项损失的尺度。
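The two-stage objective can be summarised in a few lines of Python. This is a hedged sketch: `detection_loss` is a hypothetical helper that takes already-computed scalar loss values, and it assumes, following the text, that the CPE term is only added in the fine-tuning stage with $\lambda = 0.5$.

```python
def detection_loss(l_rpn: float, l_cls: float, l_reg: float,
                   l_cpe: float = 0.0, lam: float = 0.5,
                   fine_tuning: bool = False) -> float:
    """Combine the Faster R-CNN losses, plus lambda * L_CPE when fine-tuning (Eq. 5)."""
    loss = l_rpn + l_cls + l_reg  # stage 1: standard Faster R-CNN objective
    if fine_tuning:
        loss += lam * l_cpe       # stage 2: multi-task contrastive term
    return loss


print(detection_loss(0.25, 0.5, 0.25))                               # 1.0 (base training)
print(detection_loss(0.25, 0.5, 0.25, l_cpe=1.0, fine_tuning=True))  # 1.5 (fine-tuning)
```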

4、Experiments

Extensive experiments are performed on both the PASCAL VOC [22, 23] and COCO [55] benchmarks. Our FSCE forms an upper envelope over all fine-tuning-based methods and memory-inefficient meta-learning methods, by large margins, for any number of shots and in all data splits. We strictly follow the consistent few-shot detection data construction and evaluation protocol [43, 16, 17, 15] to ensure a fair and direct comparison. In this section, we first describe the few-shot detection settings, then provide complete comparisons with contemporary few-shot detection works on the PASCAL VOC and COCO benchmarks, and finally present ablation studies.

在PASCAL VOC[22,23]和COCO[55]基准上进行了大量实验。在所有数据划分和任意shot数下,我们的FSCE都以较大优势超越了所有基于微调的方法和内存效率低的元学习方法。我们严格遵循一致的小样本检测数据构建和评估协议[43,16,17,15],以确保公平、直接的比较。在本节中,我们首先描述小样本检测设置,然后在PASCAL VOC和COCO基准上与同期的小样本检测工作进行完整比较,最后给出消融实验。

Implementation Details. For the detection model, we use Faster R-CNN [4] with ResNet-101 [1] and a Feature Pyramid Network [52]. All experiments are run on 8 GPUs with a standard batch size of 16. The solver is standard SGD with momentum 0.9 and weight decay 1e-4. Naturally, we scale the number of training steps with the number of shots. Every detail will be open-sourced in a self-contained codebase to facilitate future research.

实施细节。 对于检测模型,我们将Faster R-CNN[4]与ResNet-101[1]和特征金字塔网络FPN[52]结合使用。所有实验都在8个GPU上运行,batch size为16,优化器为标准SGD,动量为0.9,权重衰减为1e-4。当然,我们会根据shot数相应地调整训练步数。所有细节都将在一个独立完整的代码库中开源,以便于将来的研究。
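To make the solver settings concrete, the sketch below spells out one SGD step with momentum 0.9 and weight decay 1e-4 on plain Python lists, using the same update rule as PyTorch's `torch.optim.SGD` with its default `dampening=0` and `nesterov=False`. The learning rate is not stated in this section, so the `lr` value in the usage loop is a placeholder assumption; the per-GPU batch of 2 simply follows from 16 images over 8 GPUs.

```python
from typing import List, Tuple

NUM_GPUS = 8
TOTAL_BATCH_SIZE = 16
IMS_PER_GPU = TOTAL_BATCH_SIZE // NUM_GPUS  # 2 images per GPU


def sgd_step(params: List[float], grads: List[float], velocity: List[float],
             lr: float, momentum: float = 0.9,
             weight_decay: float = 1e-4) -> Tuple[List[float], List[float]]:
    """One SGD update with L2 weight decay and heavy-ball momentum."""
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        g = g + weight_decay * p   # L2 weight decay folded into the gradient
        v = momentum * v + g       # momentum buffer
        new_params.append(p - lr * v)
        new_velocity.append(v)
    return new_params, new_velocity


params, velocity = [1.0], [0.0]
for _ in range(2):  # two steps with a constant gradient and an assumed lr of 0.01
    params, velocity = sgd_step(params, [0.5], velocity, lr=0.01)
print(IMS_PER_GPU, params)
```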

4.1、 Few-shot detection benchmarks

PASCAL VOC. The 20 categories in PASCAL VOC are divided into 15 base categories and 5 novel categories. All base-category data from the PASCAL VOC 07+12 trainval sets are considered available, and K novel instances (K-shot) are randomly sampled from the previously unseen novel classes, for K = 1, 2, 3, 5 and 10. Following existing works [16, 43, 15], we consider the same three random partitions of base and novel categories and the samplings introduced in [43], referred to as Novel Split 1, 2, and 3. We report AP50 for novel predictions (nAP50) on the PASCAL VOC 2007 test set. Note that this differs from the N-way K-shot setting commonly used in meta-learning-based methods [44]. The huge variance between different random runs makes the N-way K-shot evaluation protocol unsuitable for few-shot object detection. For methods that provide results over 10 random seeds, we provide the corresponding results for comparison.

PASCAL VOC。 PASCAL VOC中的20个类别分为15个基类和5个新类。PASCAL VOC 07+12 trainval集合中的所有基类数据均视为可用,而新类则从之前未见过的新类中随机抽取K个实例(K-shot),K=1、2、3、5和10。遵循现有工作[16,43,15],我们采用[43]中引入的相同的三种基类/新类随机划分及抽样,分别称为Novel Split 1、2、3。我们在PASCAL VOC 2007测试集上报告新类预测的AP50(nAP50)。注意,这与基于元学习的方法中常用的N-way K-shot设置不同[44]。不同随机运行之间的巨大差异使得N-way K-shot评估协议不适用于小样本目标检测。对于提供了10个随机种子结果的方法,我们也提供相应的结果进行比较。
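A simplified sketch of drawing a K-shot novel set, assuming annotations are `(image_id, class_name)` pairs. The class list is the Novel Split 1 commonly used since [43] (bird, bus, cow, motorbike, sofa); verify it against the released split files. The real benchmark splits are fixed files sampled at the image level, so `sample_k_shot` is only a hypothetical illustration of the idea that base data is kept in full while each novel class contributes exactly K instances.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Annotation = Tuple[int, str]  # (image_id, class_name)

# Assumed Novel Split 1 classes; the other 15 VOC classes are base classes.
NOVEL_SPLIT_1 = ["bird", "bus", "cow", "motorbike", "sofa"]


def sample_k_shot(annotations: Sequence[Annotation], novel_classes: Sequence[str],
                  k: int, seed: int = 0
                  ) -> Tuple[List[Annotation], Dict[str, List[Annotation]]]:
    """Keep all base annotations and draw k random instances per novel class."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    novel = set(novel_classes)
    by_class: Dict[str, List[Annotation]] = defaultdict(list)
    for ann in annotations:
        by_class[ann[1]].append(ann)
    few_shot = {}
    for cls in novel_classes:
        pool = by_class[cls]
        if len(pool) < k:
            raise ValueError(f"class {cls!r} has only {len(pool)} instances, need {k}")
        few_shot[cls] = rng.sample(pool, k)
    base = [ann for ann in annotations if ann[1] not in novel]
    return base, few_shot


# Toy data: 12 instances per novel class plus 7 instances of one base class.
toy = [(i, c) for i, c in enumerate(NOVEL_SPLIT_1 * 12 + ["dog"] * 7)]
for k in (1, 2, 3, 5, 10):
    base, shots = sample_k_shot(toy, NOVEL_SPLIT_1, k, seed=k)
    print(k, len(base), {c: len(v) for c, v in shots.items()})
```

Each seed gives a different random draw, which is why results averaged over several seeds are reported separately from single fixed splits.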

MS COCO. Similarly, of the 80 categories in COCO, the 20 categories shared with PASCAL VOC are reserved as novel classes, and the remaining 60 categories are used as base classes. The K = 10 and 30-shot detection performance is evaluated on 5K images from the COCO 2014 val set, and COCO-style AP and AP75 for novel categories are reported by convention.

MS COCO。 同样,对于COCO中的80个类别,与PASCAL VOC相同的20个类别保留为新类,其余60个类别用作基类。在COCO 2014 val数据集的5K张图像上评估K=10和30 shot的检测性能,并按照惯例报告新类的COCO-style AP和AP75。
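The difference between the AP50 used for PASCAL VOC and the AP75 and COCO-style AP used for COCO lies in how much overlap a prediction needs to count as a match. The sketch below shows only that matching criterion, assuming boxes as `(x1, y1, x2, y2)` in continuous coordinates; a full AP computation also ranks detections by score and integrates precision over recall, which is omitted here.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# COCO-style AP averages over the IoU thresholds 0.50, 0.55, ..., 0.95.
COCO_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred: Box, gt: Box, thresholds: Sequence[float]) -> int:
    """Count how many IoU thresholds a prediction would satisfy for this ground truth."""
    overlap = iou(pred, gt)
    return sum(overlap >= t for t in thresholds)


gt, pred = (0, 0, 10, 10), (0, 0, 10, 6)  # IoU = 60 / 100 = 0.6
print(iou(pred, gt) >= 0.5, iou(pred, gt) >= 0.75)  # True False
print(thresholds_passed(pred, gt, COCO_THRESHOLDS))  # 3
```

A loosely localized box like this one still counts for AP50 but not for AP75, which is why AP75 rewards precise localization.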

4.2、 Few-shot detection results

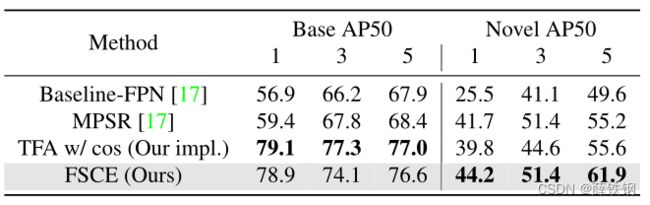

PASCAL VOC Results. Results for all three random novel splits of PASCAL VOC are shown in Table 2. Our FSCE outperforms all existing works for any number of shots and in all splits, which fully demonstrates the effectiveness of our method. We are the first to achieve >50 nAP50 on split 2 and split 3, with up to +8.8 nAP50 above the current SOTA on split 3. At the same time, our FSCE, powered by contrastive proposal encodings, retains the low base-forgetting property of TFA, as shown in Table 4.

PASCAL VOC Results. PASCAL VOC所有三种随机新类划分的结果如表2所示。我们的FSCE在任意shot数和所有划分上都优于所有现有工作,充分证明了该方法的有效性。我们首次在split 2和split 3上实现了>50的nAP50,在split 3上比当前SOTA最多高出+8.8 nAP50。同时,由对比建议框编码驱动的FSCE保持了TFA中基类遗忘较少的特性,如表4所示。

Table 2. Performance evaluation (nAP50) of existing few-shot detection methods on the three PASCAL VOC Novel Split sets. † marks meta-learning-based methods. ∗ represents the average over 10 random seeds. ‡ marks methods that use N-way K-shot meta-testing, a different evaluation protocol (see Sec. 4.1).

表2。现有小样本检测方法在三种PASCAL VOC Novel Split上的性能评估(nAP50)。†标记基于元学习的方法。∗表示10个随机种子的平均结果。‡标记使用N-way K-shot元测试的方法,这是一种不同的评估协议,见第4.1节。

Table 4. Base forgetting comparisons on PASCAL VOC Split 1. Before fine-tuning, the base AP50 in base training is 80.8.

表4。PASCAL VOC Split 1上的基类遗忘比较。微调前,基础训练得到的基类AP50为80.8。

COCO Results. Few-shot detection results on COCO are shown in Table 3. Under the same testing protocol and the same metrics, our FSCE sets a new state of the art for all shots. Our proposed method gains +1.7 nAP and +2.7 nAP75 over the current SOTA, which is larger than the gaps between any previous advancements.

COCO Results. 表3显示了COCO上的小样本检测结果。在相同的测试协议和相同的指标下,我们的FSCE在所有shot设置下都刷新了最先进水平。我们提出的方法比当前SOTA高出+1.7 nAP和+2.7 nAP75,这比以往任何进展之间的差距都要大。

Table 3. Few-shot detection evaluation results on COCO. ∗ represents the average over 10 random seeds. † marks meta-learning-based methods.

表3。COCO上的小样本检测评估结果。∗表示10个随机种子的平均结果。†标记基于元学习的方法。

4.3、Ablation 消融实验

Components of our proposed FSCE. First, with our modified training specification for the fine-tuning stage, the class-agnostic RPN and RoI head can be transferred directly to novel data and yield a large performance gain. This is because we utilize more low-quality RPN proposals that would normally be suppressed by NMS, which provides more foregrounds to learn from given the limited optimization opportunity in the few-shot setting. In addition, jointly fine-tuning the FPN top-down convolutions and the RoI feature extractor opens up room for better representation learning. Second, our CPE loss guides the RoI feature extractor to establish contrastive-aware object embeddings; the resulting intra-class compactness and inter-class variance ease the classification task and rescue misclassifications. The whole system benefits from the proposal consistency control, which contrasts only high-IoU region proposals that deviate less from the object center. All ablation studies are done on PASCAL VOC Novel Split 1 unless otherwise specified.

FSCE的组成模块。 首先,通过我们修改后的微调阶段训练设定,类别无关的RPN和RoI head可以直接迁移到新数据上,并带来巨大的性能增益。这是因为我们利用了更多通常会被NMS抑制的低质量RPN建议框,在小样本设置优化机会有限的情况下提供了更多可供学习的前景。而联合微调FPN自上而下的卷积和RoI特征提取器,则为更好的表示学习开辟了空间。其次,我们的CPE损失引导RoI特征提取器建立具有对比感知的目标嵌入,类内紧凑性和类间差异使分类任务更容易,并纠正误分类。整个系统受益于建议框一致性控制:只使用偏离目标中心较小的高IoU区域建议框进行对比。除非另有说明,所有消融研究均在PASCAL VOC Novel Split 1上进行。
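The text attributes part of the gain to keeping more low-quality RPN proposals that would normally be discarded. A minimal sketch of that mechanism, assuming it is controlled by the number of proposals kept after greedy NMS: raising `top_k` lets lower-scoring, non-duplicate proposals through. This generic NMS is not FSCE's code, and the IoU threshold of 0.7 and the `top_k` values are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_thresh: float = 0.7, top_k: int = 1000) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop its near-duplicates, cap at top_k."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if len(keep) >= top_k:
            break  # the post-NMS budget is exhausted
        if all(iou(boxes[i], boxes[j]) < iou_thresh for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 0, 30, 10), (40, 0, 50, 10), (60, 0, 70, 10)]
scores = [0.9, 0.85, 0.8, 0.3, 0.2]
print(nms(boxes, scores, top_k=2))   # [0, 2]
print(nms(boxes, scores, top_k=10))  # [0, 2, 3, 4]
```

Box 1 is always suppressed as a near-duplicate of box 0; only the larger budget admits the low-scoring boxes 3 and 4.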

Table 5. Ablation for key components proposed in FSCE.

表5。FSCE中关键组成模块的消融。

Ablation for contrastive branch hyper-parameters. The primary RoI feature vector contains post-ReLU activations truncated at zero; we therefore encode the RoI feature with a contrastive head into $z \in \mathbb{R}^{D_c}$ so that similarity can be measured meaningfully. Based on our ablations, few-shot detection performance is not sensitive to the contrastive head dimension. Among the temperatures $\tau$ commonly used in contrastive objectives [34, 32, 50], a medium temperature $\tau = 0.2$ works better than a relatively small value of 0.07 and a large value of 0.5.

对比分支超参数的消融。 主要的RoI特征向量包含在零处截断的ReLU后激活值,因此我们使用对比头将RoI特征编码为$z \in \mathbb{R}^{D_c}$,以便有意义地度量相似性。根据我们的消融实验,小样本检测性能对对比头的维度不敏感。在对比学习目标[34,32,50]中常用的温度$\tau$取值中,中等温度$\tau = 0.2$比相对较小的0.07和较大的0.5效果更好。
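A plain-Python sketch of why a contrastive head and a temperature are involved. The cosine similarity of two non-negative (post-ReLU) vectors can never be negative, so a projection that can output negative values gives the embedding room to push classes apart. `project` stands in for the contrastive head as a single hypothetical linear layer (the head's architecture and $D_c$ are not specified in this section), and `cpe_loss` is a supervised-contrastive loss written in the spirit of the CPE loss: embeddings are L2-normalized, similarities are divided by $\tau$, each proposal is pulled toward same-class proposals, and proposals below the IoU cut-off $\phi$ are skipped. Normalizing by the batch size and giving proposals without a same-class partner zero loss are assumptions.

```python
import math
from typing import List, Sequence

Vector = List[float]


def project(feature: Sequence[float], weight: Sequence[Sequence[float]]) -> Vector:
    """Hypothetical linear contrastive head z = W x; unlike ReLU outputs, z may be negative."""
    return [sum(w * x for w, x in zip(row, feature)) for row in weight]


def l2_normalize(v: Sequence[float]) -> Vector:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against a zero vector
    return [x / norm for x in v]


def cpe_loss(embeddings: Sequence[Sequence[float]], labels: Sequence[int],
             ious: Sequence[float], tau: float = 0.2, phi: float = 0.7) -> float:
    z = [l2_normalize(e) for e in embeddings]
    n = len(z)
    total = 0.0
    for i in range(n):
        if ious[i] < phi:
            continue  # f(u_i) = 0: proposal removed by the consistency cut-off
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # no same-class partner in this batch (assumed to contribute 0)
        sims = [sum(a * b for a, b in zip(z[i], z[k])) / tau for k in range(n)]
        log_denom = math.log(sum(math.exp(sims[k]) for k in range(n) if k != i))
        # average -log p(j | i) over the positives j of anchor i
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
    return total / n


print(project([0.0, 2.0, 1.0], [[1.0, -1.0, 0.0], [0.0, 0.5, 0.5]]))  # [-2.0, 1.5]
feats = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]
ious = [0.9, 0.8, 0.95, 0.75]
print(cpe_loss(feats, [0, 0, 1, 1], ious))  # small: same-class proposals already cluster
print(cpe_loss(feats, [0, 1, 0, 1], ious))  # large: labels disagree with the clusters
for tau in (0.07, 0.2, 0.5):
    print(tau, round(cpe_loss(feats, [0, 0, 1, 1], ious, tau=tau), 4))
```

Lowering $\tau$ sharpens the softmax over similarities; the text reports that the middle value 0.2 worked best.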

Table 6. Ablation for contrastive hyper-parameters; results on 10-shot PASCAL VOC Split 1.

表6。对比超参数的消融,结果来自PASCAL VOC Split 1的10-shot设置。

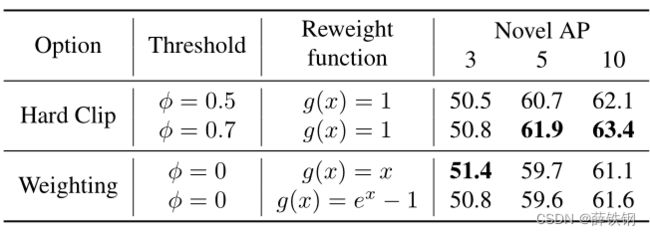

Ablation for Proposal Consistency Control. In equations (3) and (4), we propose a compound proposal consistency control mechanism, comprising an indicator function with an IoU cut-off threshold $\phi$ and a function $g(\cdot)$ for re-weighting proposals with different levels of IoU. It turns out that re-weighting is not necessary and a simple high-IoU cut-off works best for 5 and 10 shots. However, when the number of shots is low, simply filtering out proposals with IoU less than $\phi$ becomes less favorable, because the data sparsity is too severe. In low-shot cases, keeping all proposals but down-weighting low-IoU ones makes more sense, and empirically, exponential decay (easy mining) does worse than simple linear weighting.

建议框一致性控制的消融。 在式(3)和(4)中,我们提出了一种复合的建议框一致性控制机制,由一个带IoU阈值$\phi$的指示函数和一个用于对不同IoU水平的建议框重新加权的函数$g(\cdot)$组成。结果表明,重新加权并无必要,对于5-shot和10-shot,简单的高IoU截断效果最好;但当shot数较少时,由于数据稀疏性过于严重,简单地滤除IoU小于$\phi$的建议框就变得不太有利。在低shot的情况下,保留所有建议框但降低低IoU建议框的权重更为合理,而且根据经验,指数衰减(easy mining)比简单的线性加权效果更差。
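To make the two regimes concrete, the hypothetical helper below computes per-proposal weights either with a hard cut-off or by keeping every proposal ($\phi = 0$) and down-weighting low-IoU ones. The exact forms of the linear and exponential re-weighting functions are not given in this section, so `linear_g` and `exp_g` (with its `beta` rate) are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence


def cutoff_g(u: float) -> float:
    return 1.0                          # no re-weighting


def linear_g(u: float) -> float:
    return u                            # weight grows linearly with IoU


def exp_g(u: float, beta: float = 5.0) -> float:
    return math.exp(beta * (u - 1.0))   # assumed form: weight decays quickly as IoU drops


def proposal_weights(ious: Sequence[float], phi: float,
                     g: Callable[[float], float]) -> List[float]:
    """f(u) = 1{u >= phi} * g(u); phi = 0 keeps every proposal."""
    return [g(u) if u >= phi else 0.0 for u in ious]


ious = [0.95, 0.8, 0.6, 0.4]
print(proposal_weights(ious, phi=0.7, g=cutoff_g))  # 5/10 shots: [1.0, 1.0, 0.0, 0.0]
print(proposal_weights(ious, phi=0.0, g=linear_g))  # low shots: [0.95, 0.8, 0.6, 0.4]
print([round(w, 3) for w in proposal_weights(ious, phi=0.0, g=exp_g)])
```

Under this assumed exponential form, low-IoU proposals receive almost no weight, so the model is dominated by easy, well-centered proposals, which is one reading of why it underperforms linear weighting when data is scarce.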

Table 7. Ablation for proposal consistency control in FSCE.

表7。建议框一致性控制的消融。

Visual inspections and analysis. Figure 5 shows visual inspections of our proposed FSCE. We find that in data-abundant general detection, the saturated performance of the fc classifier and the cosine classifier is essentially equal: an fc layer can learn a sophisticated decision boundary from enough data. Both the existing literature and our experiments confirm that the cosine box classifier excels in few-shot object detection, which can be attributed to the explicitly modeled similarity helping to form tighter instance clusters on the projected unit hypersphere. The intuition of spacing different categories apart is trivial, but in our experiments well-established margin-based classifiers [20, 21] do not work in this data-starved setting (-2 nAP compared to FSCE with 10 shots, and worse with fewer shots). Instead of adding a margin to the classifier, FSCE models instance-level intra-class similarity and inter-class variance via the CPE loss and guides the RoI head to learn contrastive-aware object proposal representations. t-SNE [56] visualization of the object proposal embeddings affirms the effectiveness of our CPE loss in reducing intra-class variance and forming more defined decision boundaries, which aligns well with our proposition. Figure 5 (c) shows example bad cases from TFA that are rescued by our FSCE, including missed detections of novel instances, low confidence scores for novel instances, and pervasive misclassifications.

可视化结果和分析。 图5展示了我们提出的FSCE的可视化结果。我们发现在数据丰富的一般检测中,fc分类器和余弦分类器的饱和性能基本相同:fc层可以从足够的数据中学习复杂的决策边界。现有文献和我们的实验都证实,余弦边界框分类器在小样本目标检测中表现出色,这可以归因于显式建模的相似性有助于在投影的单位超球面上形成更紧密的实例簇。拉开不同类别间距的直觉显而易见,但根据我们的实验,成熟的基于间隔(margin)的分类器[20,21]在这种数据匮乏的场景下并不奏效(10-shot时比FSCE低2 nAP,shot数更少时更差)。FSCE没有向分类器添加间隔,而是通过CPE损失对实例级的类内相似性和类间差异进行建模,并引导RoI head学习具有对比感知的目标建议框表示。目标建议框嵌入的t-SNE[56]可视化证实了我们的CPE损失在减小类内方差和形成更明确的决策边界方面的有效性,这与我们的设想非常一致。图5(c)展示了TFA中被我们的FSCE纠正的失败案例,包括新类实例的漏检、新类实例的低置信度分数以及普遍存在的误分类。
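A small sketch of the contrast between an fc classifier and a cosine classifier. The cosine head scores a feature by its scaled cosine similarity to each class weight, so only the feature's direction on the unit hypersphere matters, while fc logits also grow with the feature norm. Both helpers are hypothetical, and the scale of 20 is an assumed value.

```python
import math
from typing import List, Sequence


def fc_logits(x: Sequence[float], weights: Sequence[Sequence[float]],
              biases: Sequence[float]) -> List[float]:
    """Plain fully connected classifier: w_c . x + b_c."""
    return [sum(w * v for w, v in zip(wc, x)) + b for wc, b in zip(weights, biases)]


def cosine_logits(x: Sequence[float], weights: Sequence[Sequence[float]],
                  scale: float = 20.0) -> List[float]:
    """Cosine classifier: scale * cos(w_c, x); invariant to the norm of x."""
    x_norm = math.sqrt(sum(v * v for v in x))
    out = []
    for wc in weights:
        w_norm = math.sqrt(sum(w * w for w in wc))
        out.append(scale * sum(w * v for w, v in zip(wc, x)) / (w_norm * x_norm))
    return out


W = [[1.0, 0.0], [0.0, 1.0]]
x, x_scaled = [3.0, 4.0], [6.0, 8.0]  # same direction, twice the norm
print(fc_logits(x, W, [0.0, 0.0]), fc_logits(x_scaled, W, [0.0, 0.0]))  # [3.0, 4.0] [6.0, 8.0]
print(cosine_logits(x, W), cosine_logits(x_scaled, W))  # [12.0, 16.0] [12.0, 16.0]
```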

5、Conclusion

In this work, we propose a new perspective on solving FSOD via contrastive proposal encoding. By effectively preventing accurately localized objects from being misclassified, our method achieves state-of-the-art results for any number of shots on both benchmarks, with up to +8.8% on PASCAL VOC and +2.7% on COCO. Our proposed contrastive proposal encoding head has negligible cost and is generally applicable: it can be plugged into any two-stage detector without interfering with the training pipeline. We also provide a strong baseline comparable to the contemporary SOTA to facilitate future research in FSOD. In terms of broader impact, FSOD is of great value given the vast number of objects in the real world. Our work demonstrates the plausibility of incorporating contrastive learning into object detection frameworks. We hope our work can inspire more research on contrastive visual embedding and few-shot object detection.

在这项工作中,我们提出了一种通过对比建议框编码解决FSOD的新视角。我们的方法有效地避免了准确定位的目标被错误分类,在任意shot数和两个基准上都取得了最先进的结果,在PASCAL VOC和COCO上分别最多提升+8.8%和+2.7%。我们提出的对比建议框编码头的成本可以忽略不计,并且普遍适用:它可以嵌入到任何两阶段检测器中,而不会干扰训练流程。此外,我们还提供了一个与当前SOTA相当的强基线,以促进FSOD的未来研究。从更广泛的影响来看,考虑到现实世界中物体的数量之多,FSOD具有很大的价值。我们的工作证明了将对比学习融入目标检测框架的可行性。我们希望我们的工作能够激发更多关于对比视觉嵌入和小样本目标检测的研究。